GitLab

Our first approach was to use the existing GitLab instance of HdM for our project. A shared runner was already defined there on which we could run our jobs, so we were able to focus on the CI process itself. This plan worked out at first: we simply defined build and test jobs, which passed without any problems. But when we tried to deploy to our staging server, the SSH connection to the server could not be established, no matter what we tried. Even after reconfiguring the keys and rewriting the job several times we did not succeed, which was surprising, because we could connect to the server from our own PC via SSH without problems. Finally, we found out that the HdM firewall blocked outgoing SSH connections. Since the “HdM-GitLab solution” seemed to be a dead end, we decided to set up our own GitLab instance to be independent of external configurations.

Setting up your own GitLab instance

It is recommended to have at least 4 GB of RAM available on the machine on which you want to host GitLab (more on that later). We decided to host it on a t2.medium instance on Amazon Web Services (running Ubuntu), which provides the necessary specifications without completely emptying one’s wallet. When launching a new instance, you can choose an appropriate GitLab AMI, which relieves you of any further installation duties. Under the tab Community AMIs you will find different versions of the Enterprise and Community Editions. We went for the newest version of the Community Edition. It is essential to open the ports for SSH (22) and HTTP (80) by configuring a security group in your AWS interface. Under “Security Groups” you can edit existing groups or add new ones. After making sure the appropriate ports are open for inbound traffic, assign the security group to the EC2 instance.

After everything is set up you can access your GitLab instance via your preferred browser.

Setting up a GitLab Runner

Before we can use the Runner, which uses the Docker executor, we first need to install Docker on our server. SSH into your AWS server, update the apt package index and then install docker-ce.

Now that we have got Docker installed, we can get to installing and configuring the runner. First we need to add GitLab’s official repository and then install gitlab-runner.

Now we can use the CLI to register a new runner, on which the jobs of our CI pipeline are executed. Just execute sudo gitlab-runner register and answer the questions of the command dialog. When asked for the coordinator URL, simply enter the URL of your GitLab instance. You will find the token for registering the runner under Runner settings inside the CI/CD settings. There is no need to add any tags or to set up the runner as unlocked; all of this can also be changed later in the GitLab UI. As executor choose docker and as default image choose node. The default image is used if none is defined in the .gitlab-ci.yml file (which we will do anyway).

Definition of the pipeline

The essential file to specify the continuous integration pipeline is the .gitlab-ci.yml file, which needs to be put inside the root folder of the project. The file serves as the definition of the stages and jobs of the pipeline.

First of all, we need to define the Docker image that is used to run the jobs. In our case we want to use the Node.js image, so we simply add image: node to the YAML file. The CI executor pulls images from Docker Hub, so any of the pre-built images can be used here. This line uses the latest node image, but you may also specify a particular version, for example by adding image: node:8.10.0 instead.
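As a small sketch of the pinned variant, the top of the .gitlab-ci.yml file could look like this. The version tag is only an example; any tag published for the node image on Docker Hub can be used.

```yaml
# Docker image used for all jobs unless a job overrides it.
# The tag 8.10.0 is an example; omit it to use the latest image.
image: node:8.10.0
```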

You can also define services (for example databases), which should be used, in the same manner. Any image available on the Docker Hub is possible. Since we use MongoDB as a database, we add the following to the file.

    services:
      - mongo

Our pipeline consists of 4 stages, which are defined as follows:

    stages:
      - build
      - test
      - staging
      - deploy

The jobs are specified in a similar manner. The naming of the jobs is arbitrary and completely up to the developers. For example we defined a job “build” which, as the name suggests, builds our project.
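A minimal sketch of such a job, assuming that installing the npm dependencies is the only build step. The exact script line is an assumption and not necessarily the project's verbatim configuration.

```yaml
# Job name "build" as mentioned in the text; it runs in the build stage.
build:
  stage: build
  script:
    # Assumed build step: install the dependencies listed in package.json
    - npm install
```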

The job indicates the stage in which it is supposed to run as well as the script that we want to run inside this job. In this case it is fairly simple, as we do not need any complicated build steps for our Node.js application apart from the installation of dependencies. A variety of configuration options is available. For an overview of all parameters see https://docs.gitlab.com/ce/ci/yaml/.

Furthermore, we want to define two jobs for our test stage, which look pretty similar to one another and deal with linting and testing.

    run_linting:
      stage: test
      script: npm run lint
      artifacts:
        paths:
          - build/reports/linting-results/

    run_tests:
      stage: test
      script: npm run test
      artifacts:
        paths:
          - build/reports/test-results/

The code specified for the script parameter runs the respective npm script in both cases. Those scripts are specified in the package.json file: “lint” triggers the execution of ESLint, whereas “test” makes use of the Mocha testing framework to run our implemented tests and Mochawesome to create HTML test reports.

The HTML pages containing the reports for the linting and testing results are stored in the folders build/reports/linting-results and build/reports/test-results. That’s why we specify those paths in the artifacts parameter, so that GitLab can offer the option to directly download those artifacts.

Finally, we have one job in each of our two stages staging and deploy. They look pretty much the same except for the IP address of the respective server. The following code defines what happens in our deployment job.

    deploy_production:
      stage: deploy
      when: manual
      before_script:
        # Install ssh-agent if not already installed, it is required by Docker
        - 'which ssh-agent || ( apt-get update -y && apt-get install openssh-client -y )'
        # Run ssh-agent (inside the build environment)
        - eval $(ssh-agent -s)
        # Add the SSH key stored in SSH_PRIVATE_KEY variable to the agent store
        - ssh-add <(echo "$SSH_PRIVATE_KEY")
        - mkdir -p ~/.ssh
        - '[[ -f /.dockerenv ]] && echo -e "Host *\n\tStrictHostKeyChecking no\n\n" > ~/.ssh/config'
      script:
        - echo "Deploy to production server"
        - ssh deploy@159.89.96.208 "cd /home/deploy/shaky-app && git pull && npm install && sudo systemctl restart shaky.service"

A key difference in comparison to the staging job is the parameter when: manual. Although we want the staging job to run automatically so that the staging server always has the newest code, we do not want the same behaviour for our deployment job. It should not run as soon as the preceding jobs are finished, but only when triggered manually. In the GitLab CI interface a “play button” shows up for this specific job, with which the developer can manually trigger the deployment. Thanks to this setup one can check whether the application works properly on the staging server before deploying to production.

The code defined in the before_script parameter is needed because GitLab does not support managing SSH keys inside the runner. To deploy, we of course need to SSH into our server. By adding those commands, we can inject an SSH key into the build environment. For further details see https://gitlab.ida.liu.se/help/ci/ssh_keys/README.md.

The actual command (inside script) to deploy our code simply uses SSH to access the deployment server, where we have already set up a connection to the Git repository. Now we only need to pull the current code base, update the dependencies (npm install) and restart the service. This works the same for the deployment to the staging server.
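For comparison, the staging job could be sketched as follows. The job name deploy_staging and the CI/CD variable STAGING_HOST are hypothetical placeholders for illustration, and the sketch assumes the same user, path and service name as on the production server. Note the absence of when: manual, so the job runs automatically once the test stage has passed.

```yaml
# Hypothetical staging counterpart of deploy_production (runs automatically).
deploy_staging:
  stage: staging
  before_script:
    # Same SSH key injection as in the deploy_production job
    - 'which ssh-agent || ( apt-get update -y && apt-get install openssh-client -y )'
    - eval $(ssh-agent -s)
    - ssh-add <(echo "$SSH_PRIVATE_KEY")
    - mkdir -p ~/.ssh
    - '[[ -f /.dockerenv ]] && echo -e "Host *\n\tStrictHostKeyChecking no\n\n" > ~/.ssh/config'
  script:
    - echo "Deploy to staging server"
    # STAGING_HOST is an assumed variable holding the staging server's address
    - ssh deploy@"$STAGING_HOST" "cd /home/deploy/shaky-app && git pull && npm install && sudo systemctl restart shaky.service"
```

Keeping the address in a variable rather than in the file is a design choice of this sketch; hard-coding the IP address, as in the production job, works just as well.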

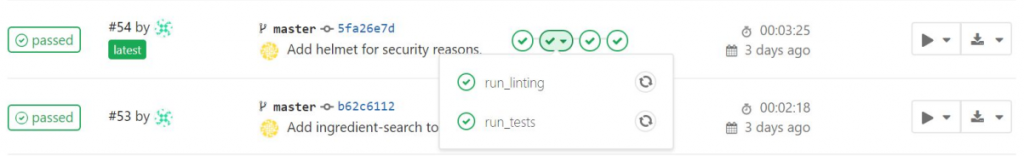

In the GitLab UI our pipeline is displayed like this:

We can see that the pipeline consists of 4 stages, if a stage holds multiple jobs you can inspect them via dropdown. With the play-button you can start the deploy-production job, additionally you can download the artifacts of each job by clicking on the “download”-icon.

GitLab Pages (used for test reporting)

In our project we have implemented various quality assurance measures (unit tests, static code analysis and code coverage). Reports were created for each of them to make it easier to analyze the results. Unlike Jenkins, GitLab CI has no plugin to publish test results directly in GitLab. So we had to look for an alternative solution and came across GitLab Pages, with which you can host static websites for your GitLab project. We planned to publish our test results to GitLab Pages in order to have them attached to our repo.

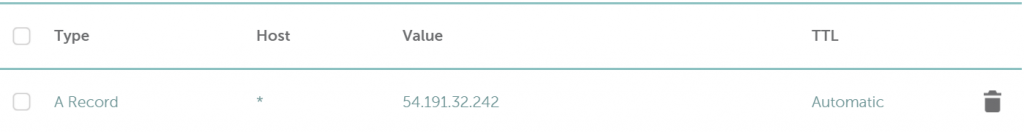

Because we ran our own GitLab instance, we first had to enable GitLab Pages. Here we encountered a problem: even before we came across GitLab Pages, we had purchased a domain for our project on which we registered our servers (staging and production) and our GitLab instance. However, to properly configure GitLab Pages for your own instance, you have to register a wildcard DNS record pointing to the host on which the GitLab instance runs. Unfortunately, our provider did not offer wildcard subdomain support for our domain package. Therefore we had to register a second domain at another provider that allowed the creation of wildcard DNS records. In our case the configuration looked like this:

NOTE: when planning to make use of GitLab Pages, be sure you can register wildcard DNS records for your GitLab instance’s domain.

After we had overcome this challenge, we had to set the external URL for GitLab Pages (the pages_external_url setting) in the GitLab configuration (gitlab.rb).

Finally, we had to make some small modifications to our .gitlab-ci.yml file in order to publish the test results to GitLab Pages. For each test report we had to create an artifact. Additionally, we defined a new job called pages, which moves all artifacts from their original directories to the public directory, in which GitLab Pages expects to find the static website.

    pages:
      stage: staging
      dependencies:
        - run_linting
        - run_tests
      script:
        - mkdir public
        - mv build/reports/linting-results/* public
        - mv build/reports/test-results/* public
        - mv build/reports/lcov-report/* public
      artifacts:
        paths:
          - public

For each test report an HTML file with a different name was created, so after the pages job was completed, the 3 different reports were accessible under:

- http://project-name.gitlab-domain.io/static-test.html

- http://project-name.gitlab-domain.io/unit-test.html

- http://project-name.gitlab-domain.io/coverage.html

For better usability, it would have been nice to create an index.html which would have served as a dashboard and would have linked to all reports. Unfortunately, there was not enough time to implement this additional feature.

Production and staging server

For us, the setup of the Ubuntu servers (staging, production) was a bit tricky at first, because neither of us had done this from start to finish before, but we succeeded in the end. Our goal was to set up a web server to handle the requests and to ensure that our application is always running and automatically restarts whenever the server crashes or gets restarted.

First, we created a deploy user and installed the required software (Node.js, MongoDB and Git). Then we established an SSH connection to our repository, cloned the repo and started the application to test whether everything worked up to this point.

Always-on service

Next, we configured the “always-on mode” for our application. We used systemd, the default init system of most current Linux distributions.

For this purpose, we created a configuration file /etc/systemd/system/shaky.service in which we specified that the application is started or relaunched at system start, on which port it runs and where the files are stored.

After this, we were able to start our application with the command sudo systemctl start shaky. To enable starting the application when the server (re)starts, we ran sudo systemctl enable shaky.

With sudo systemctl restart shaky the service could be restarted. We later used this command in the deploy jobs of our .gitlab-ci.yml file. The first attempt to execute this command during the deployment process failed because our deployment user had to type in a password every time the command was executed (which was obviously not possible from GitLab). Therefore, as root user, we added a custom file to /etc/sudoers.d/ containing the following entry, which enables the deploy user to restart shaky.service without a password:

    %deploy ALL=NOPASSWD: /bin/systemctl restart shaky.service

Nginx

We used the Nginx web server (and load balancer) to handle all requests from the web. To do so, we installed Nginx and created a configuration file /etc/nginx/sites-available/shaky.

    upstream node_server {
        server 127.0.0.1:8080 fail_timeout=0;
    }

    server {
        listen 80 default_server;
        listen [::]:80 default_server;

        server_name shaky-app.de www.shaky-app.de ;

        location / {
            proxy_pass http://localhost:8080;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection 'upgrade';
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_cache_bypass $http_upgrade;
            proxy_redirect off;
            proxy_buffering off;
        }
    }

We defined our web server to listen to requests on port 80 and forward all requests to our shaky app (listening on port 8080). Additionally we set the server name as well as some request headers. Then we replaced the default Nginx configuration with our own config and restarted Nginx.

When we first checked whether the configuration was correct, we found that the page was only accessible if we explicitly specified the port in the request. Later we noticed a small error in our configuration: we had forgotten to include the port in the proxy_pass directive (we had set http://localhost instead of http://localhost:8080), so requests were not forwarded to the correct port.
