Category: Secure Systems

Supply Chain Cybersecurity – Wie schützt die Industrie ihre digitalen Lieferketten?

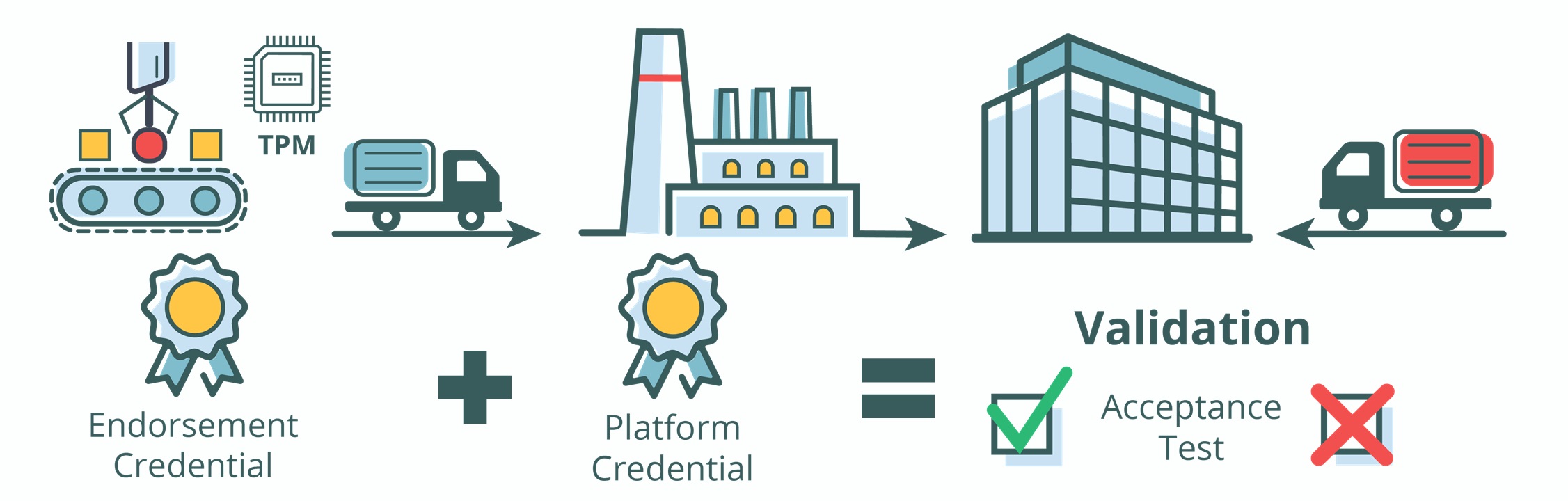

Lieferketten sind über Jahrzehnte hinweg das traditionelle Modell für Angebot und Nachfrage. Sie reichen von der Herstellung von Rohstoffen über die Verarbeitung und Produktion bis hin zu Verkauf und Nutzung der Endprodukte. Dieses Lieferkettensystem kann Dienstleistungen für Menschen, Unternehmen und Institutionen in einem relativ sicheren Rahmen erbringen. Durch die Einbindung von technischen Methoden und Software-Komponenten…

Cyberangriffe zum Anfassen – Schutz physischer Systeme im IoT

Das Internet of Things (IoT) – ein faszinierendes Konzept, das unser Leben und unsere Arbeitsweise revolutioniert. Es verspricht eine Welt in der nahezu alles miteinander vernetzt ist, von unseren Haushaltsgeräten bis hin zu komplexen industriellen Anlagen. Eine Zukunft, in der Effizienz und Produktivität in der Wirtschaft steigen und unser Alltag bequemer und nachhaltiger wird. Klingt…

Ring Kameras – Überwachst Du Dein Umfeld oder Amazon und Fremde hier Dich?

Es ist bereits aus vielen vorangestellten Blogbeiträgen, Videos und Social-Media-Beiträgen bekannt, dass Amazon eines der Unternehmen ist, das alles über uns zu wissen scheint. Die letzte größere Akquisition liegt inzwischen fast fünf Jahre zurück und handelt von dem Kauf der US-amerikanischen Firma Ring. Ring läuft im Vergleich zu Alexa und Echo nicht direkt als Amazon-Produktkategorie,…

“Botnets on Wheels” – How Hackable Are Connected Autonomous Vehicles And What Are We Doing About It?

Can you imagine the vehicle of the future? The vehicle of the future will not have a steering wheel, no pedals for acceleration and brakes – you will not be able to drive it at all! Most – if not all – of you will have heard a lot about autonomous vehicles by now. It…

Sicherheit geht vor: Die soziale Verantwortung der Unternehmen in Bezug auf die IT-Security

In einer zunehmend vernetzten und digitalisierten Welt sehen sich Unternehmen einer Vielzahl von Cyberbedrohungen gegenüber. Laut einer Studie der Bitkom wird praktisch jedes deutsche Unternehmen irgendwann mit einem Cyberangriff konfrontiert sein, wodurch im letzten Jahr ein Schaden von 203 Milliarden Euro durch Diebstahl, Spionage und Sabotage entstanden ist [1]. Die Gewährleistung der IT-Sicherheit ist nicht…

Sicherheit von i-Voting-Systemen: Chancen und Risiken von online Wahlen

Ein paar kaputte Tonkrüge mit eingeritzten Namen darauf – so begann die faszinierende Geschichte der Wahlen. Im antiken Griechenland fanden die allerersten Abstimmungen statt, bei denen die Bürger die Namen von unliebsamen Zeitgenossen auf Tonscherben kratzten. Diejenigen, die am häufigsten genannt wurden, mussten für zehn Jahre ins Exil gehen [1]. Von diesen bescheidenen Anfängen bis…

Machine Learning: Fluch oder Segen für die IT Security?

Im heutigen digitalen Zeitalter ist die Sicherheit von IT-Systemen ein allgegenwärtiges Thema von enormer Wichtigkeit. Rund um die Uhr müssen riesige Mengen an sensible Daten sicher gespeichert und übertragen werden können und die Funktionalität von unzähligen Systemen muss zuverlässig aufrecht gehalten werden. Industrie 4.0, unzählige Onlinediensten und das Internet of Things (IoT) haben zu einem…

Sicherheitscheck – Wie sicher sind Deep Learning Systeme?

In einer immer stärker digitalisierten Welt haben Neuronale Netze und Deep Learning eine immer wichtigere Rolle eingenommen und viele Bereiche unseres Alltags in vielerlei Hinsicht bereichert. Von Sprachmodellen über autonome Fahrzeuge bis hin zur Bilderkennung/-generierung, haben Deep Learning Systeme eine erstaunliche Fähigkeit zur Lösung komplexer Aufgaben gezeigt. Die Anwendungsmöglichkeiten zeigen scheinbar keine Grenzen. Doch während…

Sicherheit und Langlebigkeit der Global-Tech-Player

Bei der Frage danach, wie sicher unsere Anwendungen sind, sind Threat Analysis und Threat Modelling bewährte Methoden. Dabei wird klar, dass alle Angriffe aus einem Ziel, den Mitteln und dem Motiv bestehen. Ziele können von der internen IT und Security versteckt und vermindert werden. Die Mittel zum Angriff sind heutzutage quasi unbegrenzt und wenn überhaupt…