Written by: Pirmin Gersbacher, Can Kattwinkel, Mario Sallat

Connect GitLab with Kubernetes

With Review Apps, GitLab offers a considerable improvement to the developer experience. In essence, GitLab manages environments: for each environment there is one CI job that sets it up and another that tears it down. It is important that the dynamic URL under which the environment will be reachable is passed to GitLab. GitLab then takes over and links each merge request to a preview running in the corresponding environment.

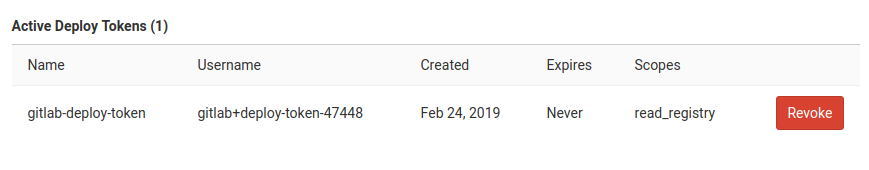

Before starting, some housekeeping is needed. First, create a new deploy token in the repository settings in GitLab. The Kubernetes cluster needs this token later to pull the Docker images from the GitLab registry. Make sure to name it `gitlab-deploy-token`: only then does GitLab expose it to the CI pipeline as environment variables (`CI_DEPLOY_USER` and `CI_DEPLOY_PASSWORD`). The only scope required is `read_registry`.
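Because a misnamed token only shows up much later as an image pull error, a job could check for it up front. The following is a minimal Python sketch of such a pre-flight check, assuming the token is exposed as `CI_DEPLOY_USER` and `CI_DEPLOY_PASSWORD`; the function name `deploy_credentials` is hypothetical and not part of the original pipeline.

```python
import os
from typing import Mapping, Optional, Tuple


def deploy_credentials(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (user, password) of the project's deploy token.

    GitLab only injects CI_DEPLOY_USER and CI_DEPLOY_PASSWORD into CI jobs
    when a deploy token named exactly "gitlab-deploy-token" exists.
    """
    source = os.environ if env is None else env
    user = source.get("CI_DEPLOY_USER", "")
    password = source.get("CI_DEPLOY_PASSWORD", "")
    if not user or not password:
        # Fail early with a hint instead of a confusing image pull error later.
        raise RuntimeError(
            "deploy token not found: create a deploy token named "
            "'gitlab-deploy-token' with the read_registry scope"
        )
    return user, password
```

Passing an explicit mapping (as in the tests) keeps the check independent of the real job environment.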

Next, create a new cluster or connect an existing one; the dialog can be found under Operations > Kubernetes. In principle, the cluster nodes can use the smallest machine configuration. However, with the smallest Google Cloud machine types we ran into problems connecting the cluster. This may have been fixed by now; if not, pick the next larger instance type.

After a short while, the cluster is set up and ready for use. Now install Helm Tiller, Ingress and cert-manager. The Ingress installation takes a few minutes; once it has succeeded, the Ingress IP address is displayed. To avoid setting up a domain, this example uses xip.io, a service that provides wildcard DNS: the IP address is simply used as a subdomain of xip.io, so the resulting domain and every subdomain of it resolve to that IP. Therefore, enter 35.184.252.80.xip.io as the Base domain on the Kubernetes page in GitLab, replacing the IP with the one displayed as `Ingress IP Address`. The cluster is now set up and connected!
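To make the naming scheme concrete, here is a small Python sketch that derives the base domain from the Ingress IP and a host name for one environment. The function names and the `feature-x` host are illustrative assumptions. xip.io has since gone offline; nip.io uses the same `<ip>.<service>` scheme, which is why the service is a parameter here.

```python
import ipaddress


def wildcard_base_domain(ingress_ip: str, service: str = "xip.io") -> str:
    """Base domain for GitLab, e.g. '35.184.252.80.xip.io'."""
    # ip_address() raises ValueError if the input is not a valid IP address.
    ip = ipaddress.ip_address(ingress_ip)
    if ip.version != 4:
        raise ValueError("the dotted-IP wildcard scheme expects an IPv4 address")
    return f"{ip}.{service}"


def environment_host(slug: str, base_domain: str) -> str:
    """Every subdomain of the base domain resolves to the same Ingress IP."""
    return f"{slug}.{base_domain}"
```

The Ingress controller then routes each request to the right environment based on the host name alone, so no DNS records have to be created per environment.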

Deploy Environments to Kubernetes

The required `.gitlab-ci.yml` file is extensive and is therefore not explained in full; instead, the most important steps are briefly summarized. The build stage creates a Docker image for both the server and the client. Pushing these images to the GitLab registry requires a login, which is done with the `docker login` command; the credentials are provided as predefined environment variables in every job.
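The build stage can be sketched in Python as a list of Docker commands. This assumes GitLab's predefined variables `CI_REGISTRY`, `CI_REGISTRY_USER`, `CI_REGISTRY_IMAGE` and `CI_COMMIT_SHA`; the image naming `<registry image>/<component>:<commit>` and the `./server` and `./client` build contexts are hypothetical, not taken from the follow-along repository.

```python
from typing import List, Mapping, Sequence


def build_commands(env: Mapping[str, str],
                   components: Sequence[str] = ("server", "client")) -> List[List[str]]:
    """Return the docker commands of the build stage as argument lists.

    The password is deliberately not part of any argv: it is meant to be
    piped to `docker login --password-stdin` so it never appears in the log.
    """
    commands = [["docker", "login", "-u", env["CI_REGISTRY_USER"],
                 "--password-stdin", env["CI_REGISTRY"]]]
    for component in components:
        # Hypothetical layout: one Dockerfile per component directory.
        tag = f"{env['CI_REGISTRY_IMAGE']}/{component}:{env['CI_COMMIT_SHA']}"
        commands.append(["docker", "build", "-t", tag, f"./{component}"])
        commands.append(["docker", "push", tag])
    return commands
```

In a job, the login command would be run with the password on standard input, for example `subprocess.run(cmd, input=os.environ["CI_REGISTRY_PASSWORD"], text=True, check=True)`.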

Later, Kubernetes needs to pull these images. For this purpose, a secret containing the previously created deploy token is stored in the cluster. Because the token is named `gitlab-deploy-token`, it is also available to the job via environment variables.
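Such an image pull secret has the type `kubernetes.io/dockerconfigjson`. The Python sketch below builds the same structure that `kubectl create secret docker-registry` produces; the secret name and namespace used in the tests are hypothetical, and the credentials would come from the deploy token variables.

```python
import base64
import json
from typing import Any, Dict


def registry_secret(name: str, namespace: str, registry: str,
                    user: str, password: str) -> Dict[str, Any]:
    """Build an image pull secret for a private Docker registry."""
    # Docker stores "user:password" base64-encoded in the "auth" field.
    auth = base64.b64encode(f"{user}:{password}".encode()).decode()
    docker_config = {"auths": {registry: {"username": user,
                                          "password": password,
                                          "auth": auth}}}
    # Secret data values must themselves be base64-encoded.
    encoded = base64.b64encode(json.dumps(docker_config).encode()).decode()
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": encoded},
    }
```

Piping `json.dumps(...)` of this object to `kubectl apply -f -` creates the secret on the first run and updates it afterwards; pods then reference it by name under `imagePullSecrets`.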

Create the Environment

To create the environment on the cluster, the job first ensures that the namespace exists, since this might be the first deployment to this environment, e.g. when a new feature branch has been created. Next, the Tiller installation is verified. Tiller is the server-side counterpart of Helm, which at the time of writing still had to be installed within the cluster (Helm 3 has since removed Tiller). You may have noticed that some commands have been moved into Bash functions so that they can be composed; these functions are available in all jobs.
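Ensuring the namespace can be made idempotent by applying a manifest instead of creating it unconditionally. This Python sketch assumes the namespace is derived from the branch name; that naming rule is our assumption (GitLab's cluster integration also provides its own `KUBE_NAMESPACE` variable). Namespace names must be DNS-1123 labels: lowercase alphanumerics and `-`, at most 63 characters.

```python
import re
from typing import Any, Dict

MAX_NAMESPACE_LENGTH = 63  # namespace names are DNS-1123 labels


def namespace_name(ref: str) -> str:
    """Derive a valid namespace name from a branch name such as 'feature/x'."""
    # Lowercase and replace every run of disallowed characters with '-'.
    name = re.sub(r"[^a-z0-9-]+", "-", ref.lower())
    # Labels must start and end with an alphanumeric character.
    name = name[:MAX_NAMESPACE_LENGTH].strip("-")
    if not name:
        raise ValueError(f"cannot derive a namespace from {ref!r}")
    return name


def namespace_manifest(name: str) -> Dict[str, Any]:
    """Namespace object; applying it repeatedly leaves an existing one unchanged."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}
```

Applying the manifest with `kubectl apply -f -` creates the namespace on the first deployment of a feature branch and is a no-op on every later one.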

Afterwards, the previously created secret is written to or updated in the cluster, and only then does the deployment start. The `environment` block is crucial, because it tells GitLab the URL of the environment. While the URL is static for fixed environments such as Staging, Production or Dev, it differs for each feature environment. To stop the environment, the stop job is linked via `on_stop`, so GitLab can tear the environment down, e.g. when the merge request is closed.
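The link between the two jobs can be sketched as the Python equivalent of the relevant `.gitlab-ci.yml` entries. `environment:name`, `url`, `on_stop`, `action: stop`, `when: manual` and `GIT_STRATEGY` are real GitLab CI keywords; the job names, the `review` stage and the URL pattern are assumptions, and `deploy`/`delete` stand for the Bash functions described in the text.

```python
import json
from typing import Any, Dict


def review_jobs(deploy_job: str = "deploy_review",
                stop_job: str = "stop_review") -> Dict[str, Any]:
    """Two .gitlab-ci.yml jobs for one review environment, linked via on_stop."""
    env_name = "review/$CI_COMMIT_REF_NAME"
    return {
        deploy_job: {
            "stage": "review",
            "script": ["deploy"],  # assumes the Bash helpers are defined in before_script
            "environment": {
                "name": env_name,
                # GitLab expands these variables and links the URL from the merge request.
                "url": "http://$CI_ENVIRONMENT_SLUG.$KUBE_INGRESS_BASE_DOMAIN",
                "on_stop": stop_job,
            },
        },
        stop_job: {
            "stage": "review",
            "script": ["delete"],
            "when": "manual",
            # Skip the checkout so the job still works after the branch is deleted.
            "variables": {"GIT_STRATEGY": "none"},
            "environment": {"name": env_name, "action": "stop"},
        },
    }


# JSON output is valid YAML, so it can be pasted into .gitlab-ci.yml.
print(json.dumps(review_jobs(), indent=2))
```

Both jobs must use the same environment name; otherwise GitLab cannot associate the stop job with the environment it is supposed to tear down.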

The Bash function `deploy` is therefore the key to our continuous delivery pipeline. It calls the Helm chart with some variables that are assembled during the build, e.g. the service URLs. Note the call of `upgrade` rather than `install`: the environment might already exist, in which case it should only be updated.
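The core of such a deploy step can be sketched as the Helm command it builds. `helm upgrade --install` upgrades an existing release and installs it if it does not exist yet, which covers both the first and every later deployment. The flags shown are real Helm options; the value keys (e.g. `image.tag`) depend on the chart and are hypothetical here.

```python
from typing import Dict, List


def helm_deploy_args(release: str, chart: str, namespace: str,
                     values: Dict[str, str]) -> List[str]:
    """Arguments for an idempotent deploy: upgrade the release, install it if missing."""
    args = ["helm", "upgrade", "--install", "--wait",
            "--namespace", namespace]
    for key, value in sorted(values.items()):
        # Each --set overrides one entry of the chart's values.yaml.
        args += ["--set", f"{key}={value}"]
    args += [release, chart]
    return args
```

Because `--set` treats commas as separators between assignments, values containing commas would need escaping; for larger sets of values, a generated values file is the more robust choice.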

Whatever is set up should usually be removable again, so take a quick look at the `on_stop` job. The job is marked as manual; otherwise it would be executed right away. Behind the Bash function `delete` is a simple call to `helm delete --purge "$name"`, where `name` holds the name of the current release.
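The teardown command differs between Helm versions, which matters when reproducing the setup today. This Python sketch returns the matching command; the `helm_major` switch is our own addition. With Helm 2 (used here), `--purge` also removes the release history from Tiller so the name can be reused; Helm 3 removed Tiller, and `helm uninstall` purges by default but needs the release's namespace.

```python
from typing import List


def helm_delete_args(release: str, namespace: str, helm_major: int = 2) -> List[str]:
    """Command that tears down a review release."""
    if helm_major == 2:
        # Helm 2 release names are cluster-wide and tracked by Tiller.
        return ["helm", "delete", "--purge", release]
    # Helm 3 stores releases per namespace and purges history by default.
    return ["helm", "uninstall", release, "--namespace", namespace]
```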

Conclusion

This article showed how to build an advanced continuous integration and delivery scenario with reasonable effort. Thanks to the GitLab integration, it fits neatly into the developer workflow and lets you view each change in a separate environment.

This project uses the following environments, which can be reproduced by checking out the follow-along repository and connecting it to a Kubernetes cluster:

| Environment | Branch | Lifetime |
|---|---|---|
| Feature-x | origin feature/x | Volatile |
| Dev/Canary | origin master | Persistent |
| Production | origin production | Persistent |
| Staging | origin production | Persistent |

This project was inspired by GitLab's Auto DevOps template, so we would like to take this opportunity to thank GitLab for it.

Parts:

Part 4 – Creating Environments via GitLab
