Security has become highly important as technological standards rise. Unfortunately, this leads to a conflict with usability: security makes operations harder, whereas usability is supposed to make them easier. Many people are convinced that there is a tradeoff between the two. This results either in secure systems that are not usable or in usable systems that are not secure. Although developers still struggle with this tradeoff, the view is somewhat outdated. There are solutions that help to design secure systems people can use.

The more secure you make something, the less secure it becomes.

Before we start, let’s first look at the importance of security and usability within systems.

On the one hand, the need for security has grown because cyberthreats have increased. More and more important and private data is stored digitally or accessible online. These days we are all connected and used to having everything available all the time. Who doesn’t make money transfers via online banking or PayPal, buy public transportation tickets online, or schedule the next doctor’s appointment online? In all those cases we handle sensitive data that we don’t want anyone else to access. Personal privacy therefore needs to be kept in mind when talking about security. Users want to be able to trust a system, especially when handling sensitive data.

Usability, on the other hand, is important because the user’s main goal is to have a usable system. By definition, a usable system must be effective, efficient, and satisfying for the user, which means it has to do what the user expects it to do, as easily, understandably, and quickly as possible. Only good usability helps users accomplish their tasks.

But what general problems came up with the rising significance of security, and how do they challenge good usability? Due to the rapid development of technology, a higher standard of security is needed. One example that took effect in May 2018 is the “Data Protection Rights” of the EU’s General Data Protection Regulation (GDPR), which, among other things, forced systems to make transparent where they collect which kind of data. Many systems had to rethink the way they handle data, change several things, and find a solution that keeps the system usable.

Nevertheless, fear of possible attacks can lead to security overkill, as shown in the Dilbert comic above. The result is so much security within a system that it almost certainly hinders the users’ productivity. To regain their productivity, users find ways to sidestep the security. Not only “normal” users but also professionals create workarounds, even though they know they should not. The best-known and most striking example is passwords. A system might require a particular mix of characters, including special characters, upper- and lowercase letters, and numbers, and might require the password to be changed from time to time, and so on. With the number of logins we have, it gets harder to invent that many secure passwords and remember all of them. Common workarounds are using the same password for different logins, sticking a note with the password on the monitor, or saving the password in a file on the computer. These workarounds may make the system more usable, but they endanger its security.

This shows that threats don’t always come from the “bad guys” who harm a system on purpose, but also from users who are unaware that they are creating security leaks themselves.

Problems arise not only on the user’s side but also while the system is being built. When many different experts develop something, they often lack a view of the whole. Even when there is both a security expert and a usability expert on the team, each may think their own view is the most important one without knowing what the other is doing. Being an expert in one area doesn’t mean having an understanding of other areas. A security expert can build a very secure system without thinking about whether it is usable, just as a usability expert might not integrate any security mechanisms.

So the main goal is a system that is secure and enhances privacy while at the same time being usable, effective at performing its task, and providing a good user experience at reasonable cost. Despite the tradeoffs between security and usability, this goal can be achieved by being aware of some simple solutions as well as special design principles.

If a solution is easy to use, the user will choose to work securely over choosing not to.

Security needs to be usable; then the user is willing to accept a barrier, if there even is one. One example is the way you unlock your smartphone. As practically everyone has private information stored on their phone, we certainly don’t want strangers to have access to it. Therefore we are willing to accept some kind of security mechanism. However, nobody wants to type in a 10-character password every time they unlock a phone. Fingerprints, on the other hand, are a far more usable solution. The rapid development of new technology offers possibilities that can make systems easier to use and enhance security at the same time. A fingerprint can’t be copied as easily as a normal password. Unlocking the phone via fingerprint offers a good experience for the user: it is “usable”. That’s why security also needs to support a good user experience. In conclusion, security and usability need to be met at all levels, because the highest level of security can only be achieved with equally high standards of usability. And keep in mind that more security doesn’t necessarily mean less usability, as a more secure lock doesn’t have to be more difficult to operate.

Another useful approach is to classify the data that has to be secured by its importance. Sensitive data needs a higher security level, whereas for less sensitive data the security barrier can be minimized. And that’s actually fine, because users accept security more readily when there is a reasonable need for it. When making a bank transfer, for example, the user has to overcome several security barriers. First they have to unlock the phone, then authenticate when opening the app, and finally confirm the actual transaction with a TAN. Although this is still more effort than taking a picture with the phone, it’s a reasonable amount that the user is OK with, as they are dealing with sensitive data.

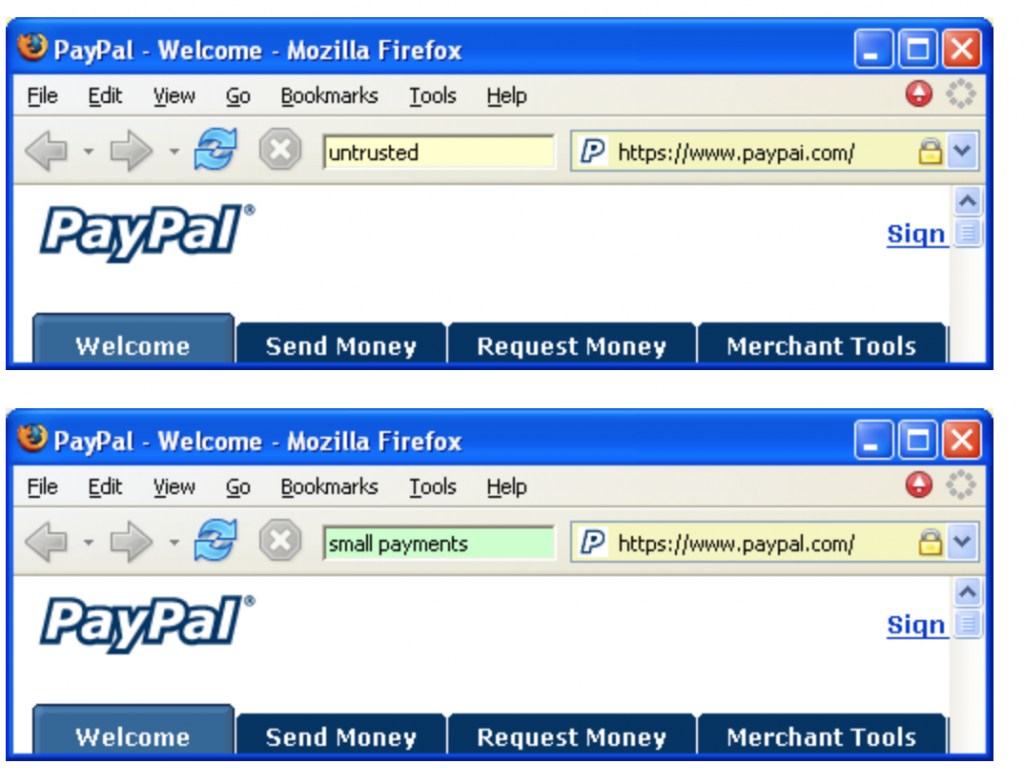
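
The idea of matching the security barrier to the sensitivity of the data can be sketched in a few lines of Python. This is only an illustration: the class names, the check names (`device_unlock`, `app_login`, `tan`) and the mapping between them are assumptions made for this example, loosely following the bank transfer described above.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical sensitivity classes; a real system would define its own."""
    LOW = 1     # e.g. taking a picture
    MEDIUM = 2  # e.g. opening the banking app
    HIGH = 3    # e.g. confirming a money transfer


# Assumed mapping from sensitivity class to the checks a user must pass.
REQUIRED_CHECKS = {
    Sensitivity.LOW: [],
    Sensitivity.MEDIUM: ["device_unlock", "app_login"],
    Sensitivity.HIGH: ["device_unlock", "app_login", "tan"],
}


def missing_checks(sensitivity, passed):
    """Return the checks still required before the action may proceed."""
    return [check for check in REQUIRED_CHECKS[sensitivity] if check not in passed]


# A user who has unlocked the phone and logged in to the app
# only needs the TAN to confirm a transfer.
print(missing_checks(Sensitivity.HIGH, {"device_unlock", "app_login"}))
```

With a table like this, the system only asks for extra effort when the data justifies it, which is the point at which users tend to accept the barrier.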

Regardless of the security level, the user needs to be able to trust a system. A lack of trust will result in a system being used badly or, in the worst case, not used at all, whereas a poor understanding of trust might result in wrong decisions. Too much trust can also be as dangerous as too little. Although there are mechanisms that can convey trust immediately, trust is often built over time. Trust can be divided into three layers: dispositional trust, learned trust, and situational trust. Dispositional trust is a psychological disposition or personality trait. Learned trust is a person’s general tendency to trust as a result of experience. Situational trust is a tendency to trust in response to situational cues. These layers can help us understand how to develop trustworthy systems. Create systems and interfaces that are as familiar as possible, so that the user doesn’t have to make a dispositional trust decision and can make more learned decisions. Security and privacy should allow users to make these decisions with as many positive situational cues as possible, or allow them to provide and maintain their own situational cues. Phishing is one of the best-known examples of the user’s trust being exploited. A user might receive an email that looks like it is from PayPal, asking them to update their records. What they don’t know is that clicking the link in the email forwards them to a site that doesn’t belong to PayPal. But they trust the site and don’t suspect any harm.

Now let’s get back to the main question: “How do we design secure systems people can actually use?” One important solution is called “Security by Design”, so let’s have a closer look at what that means.

In the beginning, systems were made merely functional; usability and design were added afterwards. Only after the system was developed did developers check for vulnerabilities and try to fix them, e.g. with updates or patches, as they hadn’t considered possible vulnerabilities during the development process. Security by Design includes security not only in development but early in the design process. Combined with continuous testing during development, this allows hardware and software to be developed as free from vulnerabilities as possible.

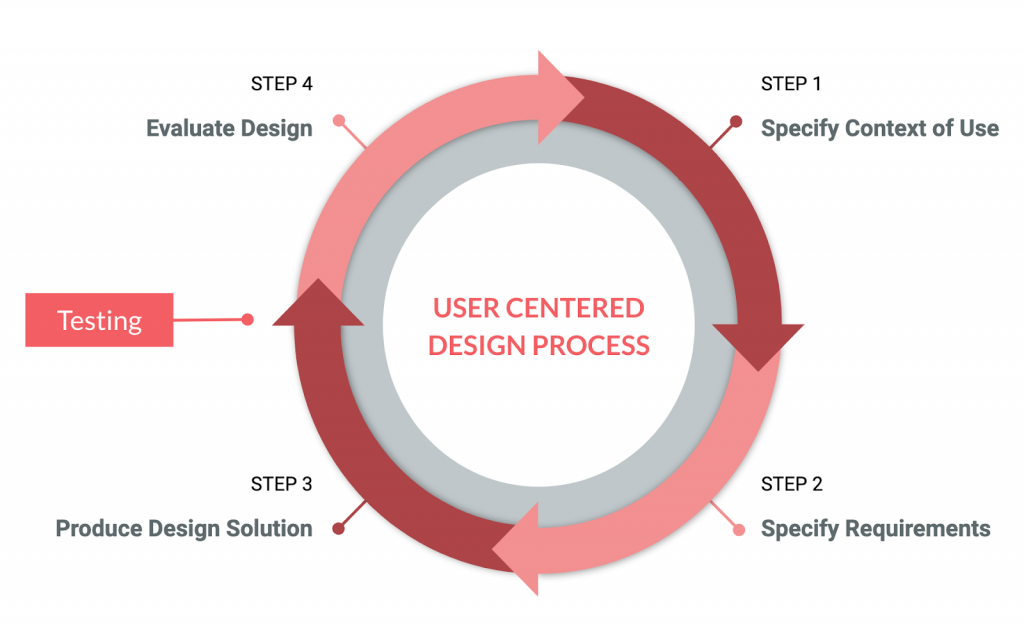

This approach is based on the User-Centered Design (UCD) process, as can be seen in the following graphic.

Security should no longer be added after the system has been developed but be included early in the UCD process. The UCD process analyses the users’ behaviour and needs and thereby makes sure a system is usable. Furthermore, UCD involves not only design experts but experts from all kinds of areas. When security experts are part of the product team throughout the whole development process, they are more likely to understand the users’ real behaviour. A team with experts from different areas can achieve systems that are understandable and usable, functional and safe, secure and private.

The outcome will be a usable interface. Interfaces should be easy to navigate with little thought about what to do. The system should not depend on the user operating it in a certain way to avoid security faults (“If the user doesn’t enter a wrong number, the system will work as expected”). Security decisions also shouldn’t be left to the user (“I don’t want two-factor authentication”). A good interface should improve security while lessening the burden on the user.
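
To illustrate the point about wrong numbers, here is a minimal Python sketch of a system that checks the input itself instead of relying on the user to type it correctly. The function name `parse_amount`, the transfer limit, and the two-decimal rule are assumptions made for this example, not part of any particular banking system.

```python
from decimal import Decimal, InvalidOperation

# Assumed per-transfer limit, for this example only.
TRANSFER_LIMIT = Decimal("1000.00")


def parse_amount(raw: str) -> Decimal:
    """Turn user input into a valid amount, or raise ValueError with a helpful message."""
    try:
        amount = Decimal(raw.strip())
    except InvalidOperation:
        raise ValueError("Please enter the amount as a number, for example 12.50.") from None
    if not amount.is_finite():
        # Decimal accepts "NaN" and "Infinity", so reject them explicitly.
        raise ValueError("Please enter the amount as a number, for example 12.50.")
    if amount <= 0:
        raise ValueError("The amount must be greater than zero.")
    if amount.as_tuple().exponent < -2:
        raise ValueError("Please use at most two decimal places.")
    if amount > TRANSFER_LIMIT:
        raise ValueError(f"The amount must not exceed {TRANSFER_LIMIT}.")
    return amount
```

The error messages tell the user what to do next, so the check protects the system without leaving the user stuck.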

To help ensure secure, usable software, Ka-Ping Yee established guidelines and strategies for secure interaction design. They shouldn’t be seen as a complete solution for designing secure software, but they can be helpful.

The ten guidelines can be separated into authorization and communication. The authorization guidelines cover the path of least resistance, active authorization, revocability, visibility, and self-awareness. Users should always be offered a comfortable way to do tasks while granting the least authority. It should be possible to take back authority. Furthermore, users should be aware of others’ authority as well as their own. The communication guidelines cover the trusted path, expressiveness, relevant boundaries, identifiability, and foresight. The user has to be able to trust the systems he is using. Security policies need to be expressed in a way the user can understand. Distinguish what a user needs to see to do the task from what he doesn’t, make objects distinguishable, and indicate the consequences of actions clearly.

Some of these guidelines can be implemented with the design strategies security by designation and user-assigned identifiers. With security by designation, the user designates an action, and that designation conveys the authority to perform it. User-assigned identifiers mean that the user decides on an identifier that refers to an object or action. If an identifier is already assigned rather than chosen by the user, it can be misleading or confusing and create an opening for attacks.

Getting back to the problem of trust and the example of phishing attacks: security by designation is not possible here, because the user has to identify the site as untrustworthy on his own. A possible solution is a user-assigned name with a petname toolbar, where the user assigns a specific name to a site to declare it trustworthy, as shown in the following image.
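
The petname idea can be sketched in a few lines of Python. This is a simplified illustration, not how any particular toolbar is implemented: the class `PetnameStore` is hypothetical, and keying a site by scheme and host name is an assumption; an actual tool may bind the name to a stronger identity.

```python
from urllib.parse import urlsplit


class PetnameStore:
    """Hypothetical store of user-assigned names for sites the user trusts."""

    UNKNOWN = "UNKNOWN SITE"

    def __init__(self):
        self._names = {}

    @staticmethod
    def _key(url):
        # Assumption: a site is identified by scheme and (lowercased) host name.
        parts = urlsplit(url)
        return (parts.scheme, parts.hostname)

    def assign(self, url, petname):
        """Remember the name the user chose for this site."""
        self._names[self._key(url)] = petname

    def label_for(self, url):
        """Return the user's own name for the site, or a warning label."""
        return self._names.get(self._key(url), self.UNKNOWN)


store = PetnameStore()
store.assign("https://www.paypal.com/", "My PayPal")

print(store.label_for("https://www.paypal.com/signin"))  # the real site
print(store.label_for("https://www.paypa1.com/signin"))  # a look-alike host
```

Because only the user knows the name they assigned, a look-alike site cannot display it, and the missing petname becomes the cue that something is wrong.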

In conclusion, security and usability have come a long way and now get along quite well. Still, we have to be aware that the user is a very important factor, as no system is useful if nobody uses it. This awareness, together with a system that understands the users’ desires, is surely a first step towards usable, secure systems. There doesn’t have to be a tradeoff.

Sources

- Security and Usability – Designing Secure Systems That People Can Use, Lorrie Faith Cranor and Simson Garfinkel, O’Reilly Media, 2005

- Sichere Systeme, Walter Kriha und Roland Schmitz, Springer, 2009

- https://jnd.org/when_security_gets_in_the_way/

- https://www.funkschau.de/telekommunikation/artikel/162959/

- https://www.computerwoche.de/a/warum-ihre-mitarbeiter-die-security-hintergehen,3332163

- https://whatis.techtarget.com/definition/security-by-design

- http://techgenix.com/security-vs-usability/
