This article gives an introduction to some important aspects of system theory and system analysis. We present some frameworks and toolsets used for system analysis and summarize some key points of these tools. The article aims to show some of the complexity behind system analysis and why there often is no simple solution. It then follows up with some (not so positive) real-world examples to show what can happen when one does not fully understand the complexity of systems and the causal relations within them.

by Paul Fauth-Mayer and Alexander Merker

Introduction

Reading the news can be frustrating. Every day we’re bombarded with information, and for much of it you cannot help but ask: “How did we even get here?” All the while, the solution often seems obvious at first glance.

There’s a striking connection to high school, back when we learned about human history. History books are full of seemingly needless conflicts, manufactured catastrophes and late intervention. Arguably, most of the largest catastrophes of humankind were avoidable. Hence the saying:

“Those who cannot remember the past are condemned to repeat it.” [10]

Current and past events suggest that we’re not doing overly well at this. When it comes to escalating conflicts and looming catastrophes, cynical minds might argue that we haven’t learned anything at all. More and more countries are edging closer and closer to authoritarianism, the wealth gap is at an all-time high and the combined efforts against climate change are dwarfed by the projected impact it will have even on our near future.

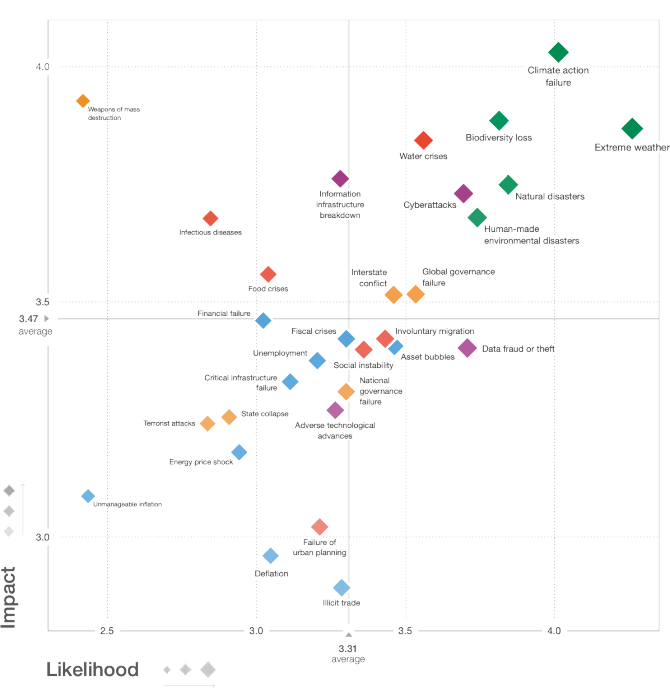

In this year’s iteration of the Global Risks Report, commissioned by the World Economic Forum, the top 5 risks most likely to occur in the near future are all listed under the environmental category. In terms of projected impact, 7 out of the top 10 risks are directly related to climate change. And, most concerning of all: the highest-impact risk isn’t called climate change, it’s called climate action failure, as can be seen in Figure 1. This shows that while we know that we’re en route to a catastrophe, our current mechanisms do not do a good enough job at changing course. Maybe the problem needs to be tackled at a different spot in the system altogether?

To put it briefly: things don’t seem to be looking up. And it’s not that this is recent news. When put to the test, many climate models from as far back as the 1970s correctly predicted the current CO2 and temperature levels around the world [9]. Although the problem has been known in the scientific community since the seventies, meaningful countermeasures have yet to be taken. This could suggest that finding, implementing and/or integrating them into our society isn’t as easy as one might think.

Again, most of these issues seem solvable (or at least avoidable), but the exact when, how and who don’t seem to come as easily as the realisation that something is going wrong. And even then, what would the consequences of these solutions be? Can we be sure to have thought of every possible effect? Is there a way to measure or anticipate these effects beforehand?

In other words: is it really that easy? Things might be a bit more complex than we’d like to think.

System Thinking and Complex Systems

Upon closer inspection, you come to the realisation that these issues do not exist in a vacuum, but are rather elements of often incredibly complex, hard-to-comprehend systems. Especially when looking for articles on climate change, you cannot avoid stumbling upon systems theory. A frequently discussed topic is the handling of complex systems. However, when trying to figure out what those systems actually are, you’re presented with a plethora of options. Most papers and articles portray different images of these systems, adding requirements or stripping some away. In general, there doesn’t seem to be a consensus on when a system can be considered complex. For the purpose of this blog post, we’ll try to define a couple of required core concepts.

Sets. A set is a loose accumulation of elements, with no relation to one another besides being in the same set. An element may be in multiple sets at once, yet the relation between individual elements and individual sets isn’t important at this point. Think of a heap of bricks lying on the roadside, discarded in no particular order.

Systems. A set becomes a system when the elements contained within form connections, or rather, relations of cause and effect with one another. Coming back to our brick example, think of a system as the heap of bricks being restructured into a brick wall. The topmost layer of bricks interacts with the layer beneath to form a stable structure. The same is true for every layer beneath.

Complex Systems. As mentioned before, there’s no clear definition of what a complex system is. However, there are certain characteristics that all definitions share. Most importantly, a system can be considered complex when it is no longer comprehensible in its entirety. This can be due to a number of reasons: the individual elements may already be too complex in and of themselves to understand, their interactions may be too complex, or their sheer number may make observation of the entire population impossible.

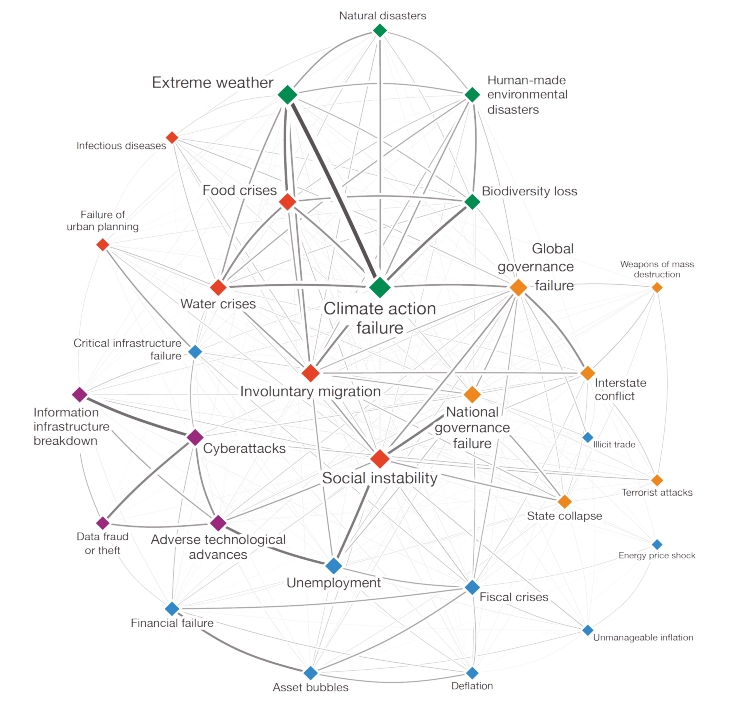

Complexity is also considered in the aforementioned Global Risks Report: almost all global risks are related in one way or another, if not directly, then at least tangentially. The connections can be observed in the network graph in Figure 2. This shows that no single problem can be solved in isolation; each is interconnected with many other problem domains.

So, what do we do when we do not understand a system? We try to analytically break it down into smaller parts. If we still can’t understand the smaller elements, we continue this process until we arrive at a level where we can understand and make meaningful changes to them. This is also very true for us programmers – we typically try to split logical blocks into their own individual functions, partly because this additional level of abstraction can make it easier for us and our colleagues to understand the whole purpose and flow of the program.

However, when we perform this abstraction, we give up easy access to the underlying details in exchange for additional top-level clarity. This might work well for most computer programs, but it will fall flat for any truly complex system (if you’re surprised to hear that we don’t call computer programs complex systems, hold that thought; we’ll get to it further down). So where’s the issue? It lies within one of the core concepts of system thinking. While in programming we often rely solely on analysis, system thinking relies on analysis and synthesis in equal measure. This means that a system is seen not just as the sum of its parts, but also as what emerges from their interactions. Systems theory thus takes a more holistic view of the system than a purely analytical approach could.

Leverage Points

As with any meaningful science, systems theory comes with its own language and toolsets. One such toolset was coined by Donella Meadows, an environmental scientist and proponent of systems theory.

Meadows provides an interesting view of system analysis in her essay “Leverage Points: Places to Intervene in a System”, first published in 1997 [1]. In it she describes leverage points as “places to intervene in a system”. A leverage point is thus a point of intervention in a complex system where a small change has a large impact on the whole, providing the possibility to improve the entire system, or to make everything worse. According to Meadows, leverage points are often not intuitive, and in many cases even counterintuitive. Influencing factors in complex systems can be both material and non-material changes in system states. Inflows and outflows regulate a system state, with stocks (capacities) increasing or decreasing accordingly [1].
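To make the idea of stocks and flows a bit more tangible, here is a minimal sketch in Python. It is not taken from Meadows’ essay: the function name `simulate_stock`, the bathtub picture and all numbers are assumptions chosen purely for illustration.

```python
def simulate_stock(initial, inflow, outflow, steps):
    """Return the stock level after each step for constant inflow and outflow.

    Hypothetical helper for illustration only: the stock grows by `inflow`
    and shrinks by `outflow` once per time step.
    """
    levels = [initial]
    for _ in range(steps):
        levels.append(levels[-1] + inflow - outflow)
    return levels


# A bathtub holding 100 litres, with the tap adding 5 litres per minute
# while the drain removes 8 litres per minute (made-up numbers).
bathtub = simulate_stock(initial=100.0, inflow=5.0, outflow=8.0, steps=5)
print(bathtub)  # [100.0, 97.0, 94.0, 91.0, 88.0, 85.0]
```

Changing `inflow` or `outflow` here is exactly the kind of parameter tweak that sits at the bottom of the list of leverage points: the level moves, but the structure of the system stays the same.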

Donella Meadows identified the following 12 general places to intervene in a system, in increasing order of effectiveness:

Places to intervene in a system

- Constants, parameters, numbers (such as subsidies, taxes, standards).

- The sizes of buffers and other stabilizing stocks, relative to their flows.

- The structure of material stocks and flows (such as transport networks, population age structures).

- The lengths of delays, relative to the rate of system change.

- The strength of negative feedback loops, relative to the impacts they are trying to correct against.

- The gain around driving positive feedback loops.

- The structure of information flows (who does and does not have access to information).

- The rules of the system (such as incentives, punishments, constraints).

- The power to add, change, evolve, or self-organize system structure.

- The goals of the system.

- The mindset or paradigm out of which the system — its goals, structure, rules, delays, parameters — arises.

- The power to transcend paradigms.

In this part of the article we want to explain a few of the concepts behind the points on this list and show how they take influence on the entire system. We want to show how different factors and methods influence the system and where “leverage” can be gained. Then we follow up with some (historic) real-world examples.

Constants, Parameters, Numbers

In system thinking, parameters are the most basic point of intervention in a system’s behavior. Parameters determine the size of the inflows and outflows of the system. Government income and spending are an example. The government gains money through taxation and spends it on whatever it deems useful for the system. Government members continuously argue about which tax inflows and spending outflows should be opened or closed and how high or low the values should be. This requires a large amount of effort. Donella Meadows claims that 99% of the attention (*) goes to changing parameters. Yet despite the regular changes of governments and presidents, not much changes in the system as a whole. A newly formed government adjusts many of these parameters, and with them the inflows and outflows, but it does not fundamentally change the system. A new government plugged into the same political system will make changes that have effects in the short term but little impact on the system as a whole. All in all, parameters offer little leverage, yet almost all our attention goes towards them [1].

(*): 99% of all our attention in all systems goes to changing parameters (not just governments).

Feedback Loops

Feedback loops are recurring structures in Meadows’ work. Categorized into negative and positive feedback loops they represent natural mechanisms that continuously influence certain system states in a loop-like structure. Feedback hereby means that one iteration of the loop is applied to a system state and the outcome is used for the next iteration.

A negative feedback loop does not necessarily have a negative effect on the system. It is rather a controlling mechanism that keeps a system state from “getting out of hand”. An example Meadows mentions is the emergency cooling system of a nuclear power plant. While not needed most of the time, in an emergency it can prevent the system from collapsing entirely. The leverage point in these negative feedback loops is their strength to correct certain system states. That strength has to be judged relative to the impact the loop is designed to correct. In short, a negative feedback loop is a self-correcting feedback loop [1].
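The role of loop strength can be sketched with a few lines of Python. This is a toy model under our own assumptions, not something from the essay: the function `balancing_loop`, the temperature picture and the values for `strength` are hypothetical.

```python
def balancing_loop(state, target, strength, steps):
    """Toy negative feedback loop (illustrative assumption, not from [1]).

    In every step the loop closes a fraction `strength` of the gap
    between the current state and the target state.
    """
    history = [state]
    for _ in range(steps):
        gap = target - state
        state += strength * gap
        history.append(state)
    return history


# Same disturbance (100 degrees instead of the desired 20), two loop strengths.
weak = balancing_loop(state=100.0, target=20.0, strength=0.1, steps=10)
strong = balancing_loop(state=100.0, target=20.0, strength=0.5, steps=10)

print(round(weak[-1], 1))    # still far away from the target
print(round(strong[-1], 1))  # almost back at the target
```

Both loops correct in the right direction, but only the stronger one brings the state back close to the target within the ten steps available. Whether a loop is strong enough therefore depends on how large and how fast the disturbance is.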

Positive feedback loops, equally, do not only have positive consequences. They are self-reinforcing loops that increase a certain system state exponentially and, if left unchecked, until the system destroys itself. Uncontrolled growth of a factor in a system would lead to total collapse unless there was a controlling mechanism (a negative feedback loop). A pandemic, for example, will eventually run out of people to infect, either because everyone has already been infected or because effective measures keep infection numbers under control (as it happens, this is an example from the 1997 article that is highly relevant today). The leverage point in positive feedback loops is limiting the exponential effects so that control mechanisms can effectively keep the system from getting out of balance [1].

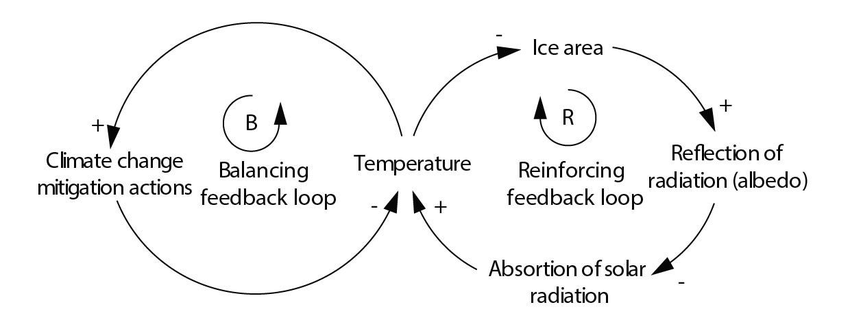

Figure 3 shows how closely the controlling (balancing) feedback loop and the reinforcing feedback loop are coupled: both influence the same system state [1].
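The interplay of a reinforcing and a balancing loop acting on the same state can be sketched with a deliberately simple infection model. The function `epidemic`, the `contact_rate` and the population size are assumptions made for illustration; this is not an epidemiological model and not taken from the cited sources.

```python
def epidemic(population, infected, contact_rate, steps):
    """Toy infection model with one reinforcing and one balancing loop.

    Reinforcing loop: more infected people cause more new infections.
    Balancing loop: fewer susceptible people slow the spread down.
    All parameters are illustrative assumptions.
    """
    history = [infected]
    for _ in range(steps):
        susceptible = population - infected
        new_cases = contact_rate * infected * susceptible / population
        infected = min(population, infected + new_cases)
        history.append(infected)
    return history


cases = epidemic(population=1000.0, infected=1.0, contact_rate=0.5, steps=40)

# Early on the reinforcing loop dominates: close to 50 % growth per step.
print([round(c, 1) for c in cases[:4]])
# Later the balancing loop takes over and the curve flattens out.
print(round(cases[-1]))
```

Lowering `contact_rate` weakens the reinforcing loop and buys time, which corresponds to the leverage point described above: limiting the gain of the loop before it runs into its hard limit.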

Information Flows

Even more leverage can be gained by making information available to the people inside a system. The availability of information changes the “awareness” of people towards a certain system aspect. As an example Donella Meadows gives the following story:

There was this subdivision of identical houses, the story goes, except that for some reason the electric meter in some of the houses was installed in the basement and in others it was installed in the front hall, where the residents could see it constantly, going round faster or slower as they used more or less electricity. With no other change, with identical prices, electricity consumption was 30 percent lower in the houses where the meter was in the front hall [1].

Because additional information was available without additional effort, consumers acted differently. A new feedback loop was created, revealing information that was not available before. As this indicates, missing feedback can even cause system malfunctions. Establishing information flows can therefore be a leverage point in a system. Availability of information is often demanded by the citizens of a country but unwanted by those in power. A fitting historical example of this leverage point is the Glasnost campaign [5], which in 1986 marked an important step towards increased government transparency and availability of information to the general public in the Soviet Union.

A real-world example of system complexity

The misinterpretation of feedback loops is the source of many technically avoidable catastrophes in human history. Feedback loops are the driving force behind climate change, pandemics and some of the largest famines in human history. There’s a reason why climate scientists talk about tipping points: they lie at the end of a reinforcing feedback loop and mark the point beyond which the partnering balancing feedback loops can no longer act as stabilizers. The system ultimately collapses, with a large measure of uncertainty about what’s going to follow.

One of the most tragic examples of the misjudgement of feedback loops happened in the late 1950s and early 1960s. As part of the “Great Leap Forward”, the People’s Republic of China went through a series of campaigns aimed at establishing the republic as a large, united state that could cope with the additional strain on, and the resources required by, this new society. The campaigns ranged from agricultural reforms and the restructuring of communities to large-scale economic transformations.

One such campaign was the “Four Pests Campaign”, illustrated in Figure 4, which sought to eradicate four kinds of pests: flies, mosquitoes, rats and sparrows. Flies, mosquitoes and rats were all seen as significant spreaders of disease and thus an even larger risk for a populous nation. Sparrows, on the other hand, were seen as a threat to agriculture: they fed on the seeds sown by farmers and were thus holding back the full potential of the harvest, which again posed a risk for a rapidly growing population. The first three pests were mostly combated with poison, while the war against the sparrows was a more complicated endeavour, which required support from large parts of the Chinese population and ultimately resulted in the near-complete eradication of sparrows in mainland China.

During the next season, no sparrows were around to eat the seeds and the early plants promised a bountiful harvest. That is, until swarms of locusts rolled over the countryside and annihilated almost the entire crop.

What happened? A detail that was not considered in the campaign was that Eurasian sparrows feed not only on seeds but also on insects, and thus provided a form of population control for grasshoppers and other insects. The tipping point came with a characteristic of some species of grasshoppers: when their population density reaches a certain threshold, they grow larger and become ravenous. They are then referred to as locusts and travel in giant swarms, feasting on everything they come across and in the end often even cannibalising each other. Without the balancing effect provided by the sparrows, the grasshopper population spiraled out of equilibrium, reached a tipping point and destroyed its environment. When the decision was made to eradicate the Eurasian sparrow, the ecosystem wasn’t sufficiently understood and thus not all consequences of the planned actions could be considered, effectively worsening the problem instead of alleviating it.
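The mechanism described above, a balancing loop being removed until a threshold is crossed, can be illustrated with a toy simulation. Every number in it is invented and the function `seasons_until_swarm` is a hypothetical helper: it reflects neither historical data nor real grasshopper biology, only the structure of the feedback loops.

```python
def seasons_until_swarm(grasshoppers, sparrows, seasons,
                        growth_rate=0.4, predation_per_sparrow=0.004,
                        swarm_threshold=10_000.0):
    """Return the season in which the swarm threshold is crossed, or None.

    Toy model with made-up parameters: grasshoppers multiply each season
    (reinforcing loop), while sparrows eat a share of them (balancing loop).
    """
    for season in range(1, seasons + 1):
        eaten_fraction = min(1.0, predation_per_sparrow * sparrows)
        grasshoppers *= (1 + growth_rate) * (1 - eaten_fraction)
        if grasshoppers >= swarm_threshold:
            return season
    return None


# With sparrows, the grasshopper population is held down for 20 seasons.
print(seasons_until_swarm(grasshoppers=1000.0, sparrows=100, seasons=20))  # None
# Without sparrows, the same population crosses the threshold in season 7.
print(seasons_until_swarm(grasshoppers=1000.0, sparrows=0, seasons=20))    # 7
```

Nothing about the grasshoppers changes between the two runs. Only the balancing loop is removed, and the reinforcing loop does the rest.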

The solution was as simple as it should have been unnecessary: the reinstatement of the balancing feedback loop formed by the sparrows. Over 200,000 sparrows were imported from all over the USSR and released in the Chinese countryside, feeding on both seeds and insects. By the end of the campaign, China had gone through the most severe famine in human history, with an estimated 15 to 45 million people starving to death.

Although this incident is a crass example of the mishandling of feedback loops, it’s far from the only one in recent history, be the issues environmental or technical. A tendency of humans to misjudge exponential growth can be found in most of these examples. One might say that we’re bad at anticipating these collapses; however, we somehow manage to balance this by being not quite as bad at reacting to them once they happen.

Beyond Complex Systems

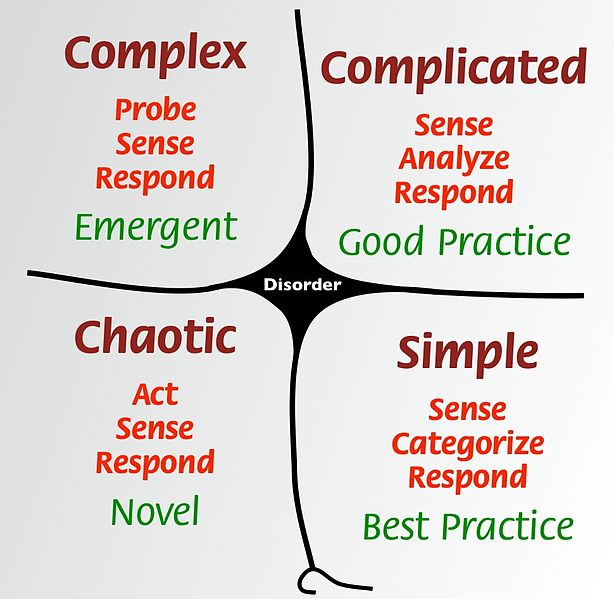

Of course, dividing systems into complex and non-complex ones oversimplifies the subject quite a bit. The Cynefin framework therefore defines multiple categories: clear, complicated, complex and chaotic systems, plus a fifth state called disorder. It is frequently used for decision making by locating issues in a four-quadrant matrix in order to determine the type of a system (or problem) [3]. The categories can be described as follows:

Clear. The relationship between causes and their effects is clear and well known to everyone. Best practices can be applied to such a system and will deliver predictable results. [3]

Complicated. The relationship between cause and effect is known to domain experts, who can gather the facts, analyze them, come to conclusions and act upon them. [3]

Complex. The relationship between cause and effect is only observable in hindsight. It may be clear and understandable afterwards but was not predictable beforehand, even with expert knowledge. [3]

Chaotic. Cause and effect are completely unclear. The goal is therefore not to discover patterns but to limit negative effects first. Innovative techniques can be used here. [3]

Disorder. In the centre of the matrix lies the “not yet categorized” state, where nothing is known. [3]

With this practice the Cynefin framework aims to help identify which type of system is in question and which course of action is appropriate. The framework is widely used in both enterprise and governmental structures.
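As a rough sketch, the categories can be written down as a small lookup in Python. The three-step response patterns follow the article cited as [3]; the function `classify` and its three yes/no questions are our own simplification and not part of the framework. The disorder state is left out because it describes a problem that has not been assessed yet.

```python
from enum import Enum


class Domain(Enum):
    CLEAR = "clear"
    COMPLICATED = "complicated"
    COMPLEX = "complex"
    CHAOTIC = "chaotic"


# Recommended order of actions per domain, as described in [3].
RESPONSE = {
    Domain.CLEAR: ("sense", "categorize", "respond"),
    Domain.COMPLICATED: ("sense", "analyze", "respond"),
    Domain.COMPLEX: ("probe", "sense", "respond"),
    Domain.CHAOTIC: ("act", "sense", "respond"),
}


def classify(obvious_to_everyone, analysable_by_experts, visible_in_hindsight):
    """Hypothetical helper: map three yes/no answers about cause and effect
    to a Cynefin domain. A simplification for illustration only."""
    if obvious_to_everyone:
        return Domain.CLEAR
    if analysable_by_experts:
        return Domain.COMPLICATED
    if visible_in_hindsight:
        return Domain.COMPLEX
    return Domain.CHAOTIC


domain = classify(obvious_to_everyone=False,
                  analysable_by_experts=False,
                  visible_in_hindsight=True)
print(domain.value, RESPONSE[domain])  # complex ('probe', 'sense', 'respond')
```

In practice the hard part is answering the three questions honestly, which is where the framework is meant to support a discussion rather than replace it.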

Conclusion

As with any good science, systems theory opens up more questions than it answers. But it also offers a language and tools to make sense of problems that are too large to comprehend entirely.

The holistic view assumed within this field is often in clear contrast to how we as developers work on a daily basis. Many modern programming languages abstract away so much of the underlying complexity that many of us never need to worry about the structure of the machines our code is running on. More often than not, this pushes the development workflow out of the complex and into the complicated category.

When having a closer look at what systems we are interacting with and at which level of abstraction we’re at, it becomes clear that as developers, we are often at a comfortable distance to complexity. As much as it hurts to admit, as humans we have a limited capacity of what we can remember and understand at any given time. This however isn’t necessarily a bad thing. After all, we don’t need all information available to solve most issues and the selection of the right information for each topic is a valuable skill to learn. Nevertheless, we’re willing to argue that most developers aren’t as good system thinkers as we like to believe.

One direction that seems to contradict this analytical view is the adoption of agile project management in more and more areas of (not just) tech companies. In contrast to traditional project management, agile acknowledges the complexity of the issues it’s trying to solve. Instead of having a perfect master plan at the start of the project, the focus lies on quick adjustment to new circumstances and iterative improvements – in other words it’s trying to cram as much ‘hindsight’ into the planning phase as possible.

Another topic that needs discussion when talking about abstraction is the field of machine learning. Machine learning models have reached surprising milestones in the last decade, in some areas even surpassing human performance, all thanks to finding the right way to abstract the data they’ve been given. In the end, these algorithms are just that: information refinement machines. However, an important point of criticism comes from classical statistics: correlation does not imply causation. The currently employed algorithms are still oblivious to the complexity of cause and effect, which we’ve talked about in previous paragraphs. An interesting direction of research is described by Judea Pearl and Dana Mackenzie in “The Book of Why” [8]: machine learning models applied in the field of causal inference. Such machines could bridge the gap between correlation and causation and ultimately help in understanding more and more complex systems. How long that will take and how well they’ll actually perform is, however, still hard to guess.

Abstractions are a necessity if we want to achieve anything meaningful – it just depends on where and at what costs these abstractions are made. If the wrong type of information is lost, stable systems can be turned into insecure, unstable systems – even without any malicious intent. Systems theory can help with identifying points within a system that should not be lost during abstraction and thus help in designing more secure systems – or just not to destroy those that we live in.

References

[1]: http://donellameadows.org/archives/leverage-points-places-to-intervene-in-a-system/

[2]: https://www.researchgate.net/profile/Rafael_Laurenti/publication/301560026/figure/fig3/AS:388510730211331@1469639584872/Example-of-balancing-and-reinforcing-feedback-loops-diagram-adapted-and-modified-from.png

[3]: https://hbr.org/2007/11/a-leaders-framework-for-decision-making

[4]: https://en.wikipedia.org/wiki/File:Cynefin_framework,_February_2011_(2).jpeg

[5]: https://en.wikipedia.org/wiki/Glasnost

[6]: http://www3.weforum.org/docs/WEF_Global_Risks_Report_2019.pdf

[7]: https://www.wildercities.com/post/refuge-in-the-embassy-the-four-pests-campaign-subnature-series-1-10

[8]: http://bayes.cs.ucla.edu/WHY/

[9]: https://agupubs.onlinelibrary.wiley.com/doi/full/10.1029/2019GL085378

[10]: https://www.jstor.org/stable/j.ctt5hhk7j
