Foreword:

This article reflects my experiences while developing a real-time browser-based game. The game of choice was Tic-Tac-Toe, as it is straightforward to implement and does not have complex game mechanics. The following paragraphs describe what I learned while developing this game with a cloud-based infrastructure in mind. The article is not so much a manual on how to create a game in the cloud as a diary of the pitfalls and impressions I collected. It is aimed at beginner developers and first-timers, as I share common pitfalls from my first bigger project which you should definitely avoid.

To try out the prototype that I have created, check out the GitHub repository. There is a complete manual on how to start the application as well.

Initial project goal:

The initial goal of this cloud project was to create a simple browser game which automatically scales with an increasing number of concurrent players. The key part of any multiplayer game is its game servers, which players need in order to play against each other. If no game server is available, no additional player can join and have fun playing your game. The seamless integration of additional game servers is therefore a key point: no one wants to shut down the whole backend and bring it back up just to increase capacity. So, one goal was to integrate game servers seamlessly and, when they are no longer needed, to remove them without any hassle.

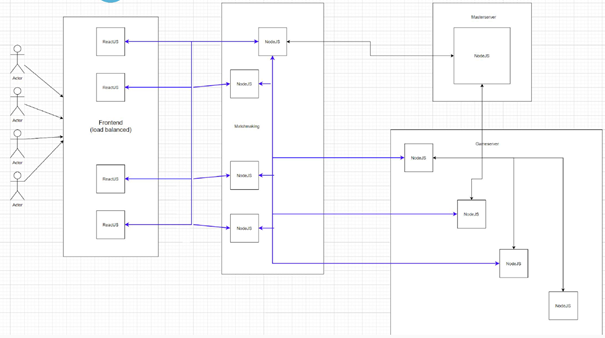

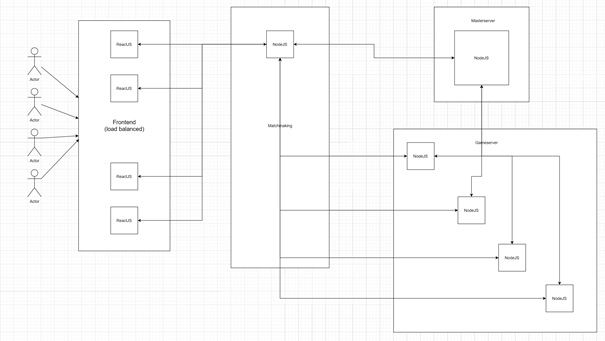

The whole structure of the app is designed to cope with load in every part. For example, the frontend, which is built with ReactJS, should be relatively easy to scale: a load balancer would just forward each request for the frontend to one of the available servers. The next server to be requested would be the matchmaking server. Here, several matchmaking servers should be available to choose from. However, it’s important to keep talking to the same server every time, as these are socket connections, which make it possible to push changes from the servers, which the frontend can’t access by default, to the client in real time.
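One way to keep a client on the same matchmaking server is to derive the server from a stable client identifier. The following is only a minimal sketch under assumptions: the helper names `hashString` and `pickStickyServer`, the client id and the server addresses are all made up for illustration and are not part of the prototype.

```javascript
// Hypothetical helper: turns a string into an unsigned 32-bit hash.
function hashString(value) {
  let hash = 0;
  for (let i = 0; i < value.length; i += 1) {
    // Multiply-and-add hash, forced back into the unsigned 32-bit range.
    hash = (hash * 31 + value.charCodeAt(i)) >>> 0;
  }
  return hash;
}

// Maps a client id to one entry of a fixed server list.
// The same id always yields the same server as long as the list is unchanged.
function pickStickyServer(clientId, servers) {
  if (servers.length === 0) {
    throw new Error('no matchmaking server available');
  }
  return servers[hashString(clientId) % servers.length];
}

// Placeholder addresses, not the project's real hosts.
const matchmakingServers = ['mm-1:4000', 'mm-2:4000', 'mm-3:4000'];
const firstPick = pickStickyServer('player-42', matchmakingServers);
const secondPick = pickStickyServer('player-42', matchmakingServers);
console.log(firstPick === secondPick); // true: same client, same server
```

A plain modulo mapping like this reshuffles clients whenever the server list changes, so a real setup would more likely rely on the sticky-session feature of the load balancer itself.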

Technology stack

The technology used in this project is simple and easy to use. It mostly consists of technology I have used in the past and am quite familiar with. Using technology you already know and which meets the requirements saves a good amount of time compared to learning something new along the way.

Frontend technology stack

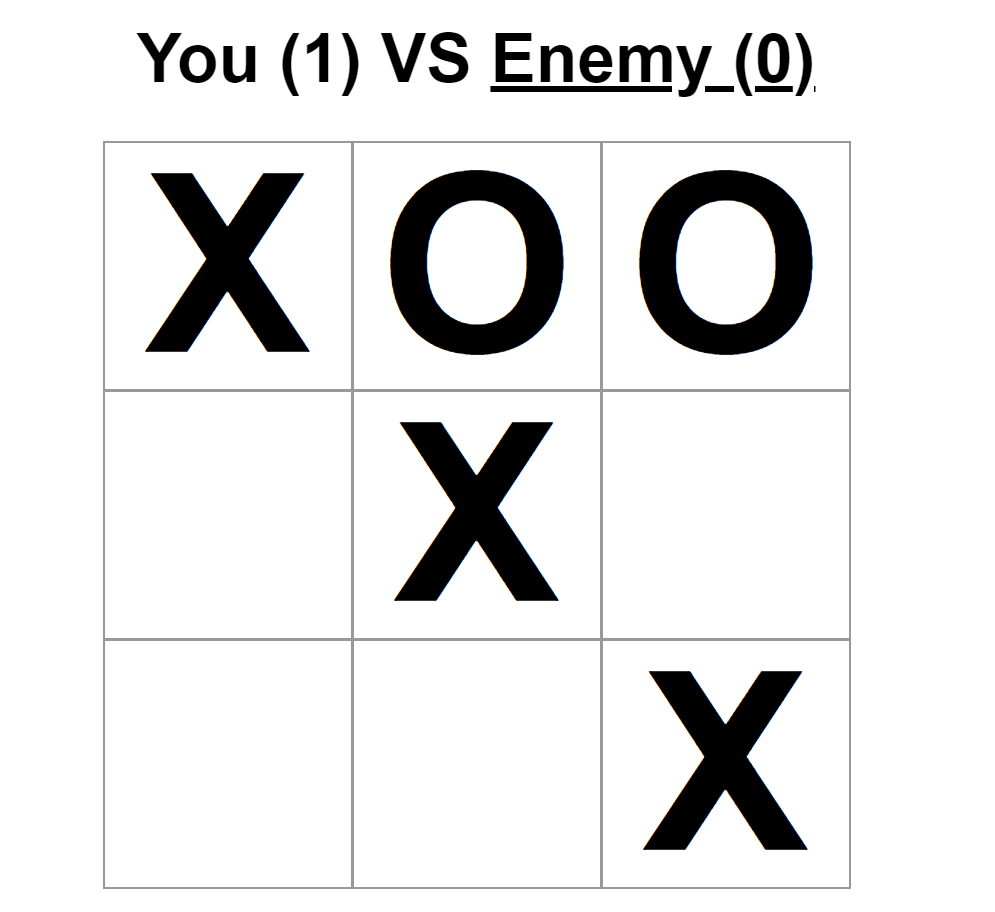

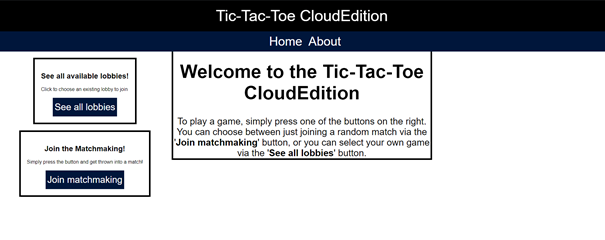

For the frontend I went with ReactJS. It is more of a personal preference to use ReactJS instead of Vue.js or plain HTML with JavaScript. ReactJS makes it easy to turn changes in data into rendered HTML without ever writing a function that changes the DOM yourself. Updates to the DOM are kept lightweight, which pays off when the DOM changes frequently. For my use case, a browser game, it was the perfect solution: get the data from the game server, put it into the fitting variable in the frontend, and ReactJS adjusts the page according to the given data.

ReactJS profits from huge community support as well. There are many packages that you can integrate into your project. In this project I integrated two rather well-known packages, React-Router and React-Redux.

React-Router makes routing between different pages easier without reloading the whole page. In my use case, the page consists of several components. Traditionally there is a header, a navigation bar and then the content of the page you are on. If you are on the home page, it displays the home page; when you are on the about page, it displays the about page. With React-Router, only the components that change are loaded. So, when going from the home page to the about page, only the component holding the about page re-renders. The header and navigation bar stay the same, as nothing changed there. Re-rendering components which have not changed and are still used by the page would be a huge waste of resources.

React-Redux is used to achieve a global state. Each React component has a state in which you store information, for example the value of an input field in a form. The problem that occurs with multiple components is that you cannot pass this state to your siblings. You can pass state down to your child components, but that is it. React-Redux introduces global state that you can freely declare and use wherever you want. In this project it is used to save the information about the game you want to enter. From the lobby component you get the room name and the server name and are then redirected to the play component, which reads the information about the game you want to join from the global state.

Speaking of the play component, sockets are used to achieve real-time communication between the client and the server. Socket.IO is used to establish a connection between the client and the game server. The game server holds a connection to both players. Each player’s interaction gets sent to the game server, validated if needed, and then both players get the resulting game state back from the game server. Socket.IO is a proven framework with good community support and has great features such as rooms, which make it a good fit for a game project. Socket.IO’s rooms are used to create the different game rooms each server has. When a player joins a server, the game server’s Socket.IO socket is put into the matching room. All communication between the players in this room can then be emitted to just the room, and not to all connected sockets.
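To illustrate the "validated if needed" step on the game server, here is a small sketch of how a Tic-Tac-Toe move could be checked before the new state is sent to both players. The function names and the shape of the state object are assumptions for this example, not the prototype's actual code.

```javascript
// All eight winning lines on a 3x3 board, as cell indices 0..8.
const WIN_LINES = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8], // rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8], // columns
  [0, 4, 8], [2, 4, 6],            // diagonals
];

// Hypothetical state shape: board cells, whose turn it is, and the result.
function createGame() {
  return { board: Array(9).fill(null), next: 'X', winner: null };
}

// Returns 'X' or 'O' for a win, 'draw' for a full board, otherwise null.
function findWinner(board) {
  for (const [a, b, c] of WIN_LINES) {
    if (board[a] && board[a] === board[b] && board[a] === board[c]) {
      return board[a];
    }
  }
  return board.every((cell) => cell !== null) ? 'draw' : null;
}

// Returns a new state for a valid move and throws for an invalid one.
function applyMove(state, player, index) {
  if (state.winner) throw new Error('game is already over');
  if (player !== state.next) throw new Error('not this player\'s turn');
  if (!Number.isInteger(index) || index < 0 || index > 8) {
    throw new Error('cell out of range');
  }
  if (state.board[index] !== null) throw new Error('cell already taken');
  const board = state.board.slice(); // copy, the old state stays untouched
  board[index] = player;
  return { board, next: player === 'X' ? 'O' : 'X', winner: findWinner(board) };
}
```

On the server, the state returned by `applyMove` is what would then be emitted to the game room, for example with Socket.IO's `io.to(roomName).emit(...)`.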

Backend technology stack

The backend uses NodeJS servers with Express to provide an easy way to handle requests. Each server has its own API endpoints, which are used by other servers, for debugging purposes or for general information. Additionally, the game servers and matchmaking servers hold Socket.IO connections to communicate with each other and with the frontend. With Socket.IO it is easy to listen to connects, disconnects and user-defined room events, which keeps managing the sockets from becoming a total nightmare. Listening to disconnects is important for the matchmaking server: when a game server disconnects, the matchmaking server removes it from its list of available game servers and sends a request to the master server to check the game server’s health. If the game server does not respond, it is removed from the master server’s server list as well, because it is not reachable anymore and therefore cannot be used to play matches on.
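The disconnect handling described above can be sketched roughly as follows. This is a simplified illustration that merges the two lists into a single registry; the class name `GameServerRegistry` and the injected `checkHealth` function are assumptions, and the real servers talk to each other over HTTP and sockets instead.

```javascript
// Hypothetical in-memory list of game servers.
class GameServerRegistry {
  constructor(checkHealth) {
    // checkHealth(address) resolves to true if the server answers. It is
    // injected so the sketch does not depend on a concrete HTTP client.
    this.checkHealth = checkHealth;
    this.servers = new Set();
  }

  register(address) {
    this.servers.add(address);
  }

  // Meant to be called when the socket to a game server disconnects.
  async handleDisconnect(address) {
    let healthy = false;
    try {
      healthy = await this.checkHealth(address);
    } catch (error) {
      healthy = false; // an unreachable server counts as unhealthy
    }
    if (!healthy) {
      this.servers.delete(address); // no longer usable for matches
    }
    return healthy;
  }

  list() {
    return [...this.servers];
  }
}
```

With Socket.IO, `handleDisconnect` would typically be wired to the socket's `disconnect` event, and `checkHealth` could wrap a `fetch()` call against a health endpoint of the game server.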

Two npm packages have proven to be a great help in setting up these servers and making requests to other APIs. The first package is node-fetch, which provides the same fetch() method you know from browser JavaScript to asynchronously fetch information from an API. Unlike in the browser, fetch() was not natively included in NodeJS when this project was developed. The other package is called minimist. It makes it very convenient to read the parameters a server gets started with. To run multiple servers locally, each server needs an adjustable port. So, most of the servers have a fitting parameter to set the port number.
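To show the idea of an adjustable port without pulling in a dependency, the following sketch uses a small hand-written parser as a stand-in for minimist. The flag name `--port` and the default of 3000 are assumptions for this example.

```javascript
// Stand-in for what minimist does for a single flag: reads `--port 4001`
// or `--port=4001` from an argument list and validates the value.
function parsePort(argv, fallback = 3000) {
  for (let i = 0; i < argv.length; i += 1) {
    let raw = null;
    if (argv[i] === '--port') {
      raw = argv[i + 1];
    } else if (argv[i].startsWith('--port=')) {
      raw = argv[i].slice('--port='.length);
    }
    if (raw !== null) {
      const port = Number(raw);
      if (!Number.isInteger(port) || port < 1 || port > 65535) {
        throw new Error(`invalid port: ${raw}`);
      }
      return port;
    }
  }
  return fallback; // flag not given
}

// In a real server the list would be process.argv.slice(2), which drops
// the node binary and the script path.
console.log(parsePort(['--port', '4001'])); // 4001
console.log(parsePort([]));                 // 3000
```

With minimist itself, the equivalent would be `require('minimist')(process.argv.slice(2))`, which returns an object holding the parsed flags, so the port is available as its `port` property.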

Testing-wise, Mocha and Chai are widely used for testing NodeJS applications. Mocha is a very common JavaScript test framework and Chai is an assertion library that pairs well with it. Chai’s syntax is fairly easy to learn and easy to read as well.

Due to poor structural choices during development, most of the servers I created cannot be tested without the others actually running. For example, the test case for the game server requires the master server to run, as a game server’s first step is to register itself with a master server. The tests are set up so that all required servers are running before the tests start.
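One common way around this coupling is to pass the registration call into the server instead of hard-wiring it, so a test can replace it with a stub. The sketch below is not how the prototype is built; `createGameServer` and `registerWithMaster` are hypothetical names used only to illustrate the idea.

```javascript
// Hypothetical factory: the dependency on the master server is injected.
function createGameServer({ name, registerWithMaster }) {
  let registered = false;
  return {
    async start() {
      // First step of a game server: announce itself to the master server.
      await registerWithMaster(name);
      registered = true;
    },
    isRegistered() {
      return registered;
    },
  };
}

// In a test, no master server has to run: the stub only records the calls.
const recordedCalls = [];
const gameServer = createGameServer({
  name: 'game-1',
  registerWithMaster: async (serverName) => {
    recordedCalls.push(serverName);
  },
});

gameServer.start().then(() => {
  // The server counts as registered although nothing was sent over the network.
  console.log(gameServer.isRegistered(), recordedCalls.length);
});
```

In production code the injected function would perform the real request to the master server, while the tests keep using the stub.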

Current state of the project

As of writing this article, the project is in a prototype state. All the servers work like they are meant to, and game servers can be seamlessly integrated into the running application. The whole application was deployed to Azure Virtual Machines and proved to work.

When I tried out a different Azure service, App Service, the application did not deploy as intended and would not work out of the box. When deciding which Azure service to use, you need to check your different servers for “compatibility”. For example, the game server uses two ports for sockets, one for the socket that connects to the players and another one for the socket that connects to the matchmaking server. In my setup, however, Azure App Service only allowed the application to use port 8080, so you either change your application to use that port or switch to a different Azure service entirely, a virtual machine for example.

The biggest problem I have encountered so far is finding a reliable way to deploy my application to Azure Virtual Machines. Originally, I wanted to use Azure DevOps Pipelines, which would deploy the whole application to different virtual machines after a successful build, but that did not work out of the box as I had thought. More on that in the ‘Cloud Integration’ chapter.

Application structure

The optimal, intended structure looks like this:

The frontend, matchmaking and game servers can be turned on and off depending on the current number of players and the current load. Unfortunately, in the current state, nothing is implemented and tested that chooses a matchmaking server when a player connects for the first time. It might work, but the frontend needs a couple of changes to dynamically change the address of the matchmaking server. At the moment it’s hard-coded. The current structure looks more like this:
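A possible replacement for the hard-coded address is to let the frontend receive a list of matchmaking servers and pick one on first connect. The sketch below assumes a list of objects with an `address` and a `players` count; this shape, the function name and the sample values are invented for illustration and do not exist in the prototype.

```javascript
// Picks the matchmaking server with the fewest connected players.
function pickMatchmakingServer(servers) {
  if (servers.length === 0) {
    throw new Error('no matchmaking server registered');
  }
  return servers.reduce((best, candidate) =>
    (candidate.players < best.players ? candidate : best));
}

// Sample data as it might be returned by a (hypothetical) master server endpoint.
const knownServers = [
  { address: 'mm-1:4000', players: 12 },
  { address: 'mm-2:4000', players: 3 },
  { address: 'mm-3:4000', players: 7 },
];

console.log(pickMatchmakingServer(knownServers).address); // mm-2:4000
```

The chosen address would then have to be remembered for the rest of the session, since the socket connection must keep pointing at the same server.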

Cloud Integration

Out of the several well-known cloud service providers, I went with Microsoft Azure to get to know this service. During the cloud development lectures I had already tinkered with AWS, IBM Cloud and Google Cloud, but to further expand my basic knowledge about cloud services, I chose Azure. Adding to that, creating an Azure account gets you 170€ (200$) of free credit for the first 30 days, but you must verify yourself with a credit card. Payments only start if you switch your account from the free tier to a subscription-based tier.

Cloud Structure

Azure offers a variety of cloud services like virtual machines, load balancing, clustered databases and Azure DevOps. Azure DevOps is basically a cloud-hosted counterpart to a Jenkins instance, allowing you to connect to your GitHub repository and automatically run pipelines depending on the actions you take in your repository. For instance, when you push to the master branch of your repository, your DevOps pipeline automatically builds your project, runs unit tests, and can then deploy your application to the Azure service of your choice. It is highly customizable and offers a variety of template applications to help you understand how these pipelines work and are set up.

Cloud Pipelines

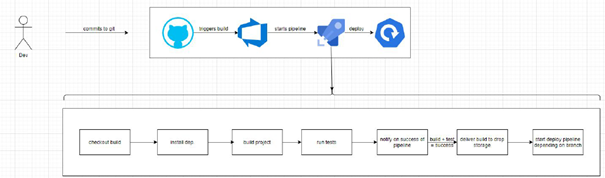

The development process should move seamlessly from local development to deployment. That means every server can be set up locally and used for development and testing, and when the work is finished, the changes can be pushed to the repository and a current build with all features gets set up automatically. The “dream pipeline” would look like this:

A deployed and running application is just a push away, without ever setting up something by hand afterwards. Having such a powerful pipeline brings several improvements while developing:

- Automatic project building and running tests

- Deployment happens automatically

- All deployments are handled the same way and are consistent

- Decreases time spent fiddling with manual deployments

Choosing fitting cloud services is a key requirement before you actually start developing your application. I already mentioned the problem I got myself into because I did not research the cloud technologies beforehand. I’m not saying that the Azure services I chose were the right and only fitting choice. The problem was that I did not spend enough time researching the different approaches I could take with Azure’s cloud services and what requirements those services have. After a good amount of fiddling around, which got me to know Azure App Service better, I understood that the current structure of my application simply could not use this service. The benefits of using Azure App Service would have been huge, as it would automatically scale depending on the load. It does, however, limit your ability to directly debug and manage your application. In my experience it is not really possible to just log in to your service via SSH, look at the logs or start and stop the application. The Azure documentation offers a detailed comparison between the different services: Azure Technology Choices

Project challenges

This chapter is split into two parts: the challenges in developing the application itself, and the challenges of working on this project.

The biggest problem I encountered while developing the application was socket management in the frontend. The problem arose because two different components needed information from the incoming game event data of the active game. The ideal solution would be to share the socket across the application in a global-state manner, so that each component could set its own listeners for the information it needs. But sharing the socket as global state with React-Redux did not work out. The solution then was to receive all the information in the game board component and push it into the global state. The other component, the game status, would then retrieve it from the global state and update its values accordingly. This worked in the end and is sufficient for the prototype, but in a real-world, production-ready application, some sort of “socket-manager” or “socket-controller” would need to be implemented.
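What such a “socket-manager” could look like is sketched below. It is only one possible design under assumptions: `createSocketManager` and the event name `gameState` are invented here, and the only thing required of the socket is an `on(event, handler)` method, which Socket.IO client sockets provide.

```javascript
// Hypothetical "socket manager": wraps one socket and lets several
// components subscribe to the same event without sharing the socket itself.
function createSocketManager(socket) {
  const listeners = new Map(); // event name -> Set of handlers

  function subscribe(event, handler) {
    if (!listeners.has(event)) {
      listeners.set(event, new Set());
      // Register exactly one listener per event on the underlying socket
      // and fan the payload out to every subscribed handler.
      socket.on(event, (payload) => {
        listeners.get(event).forEach((fn) => fn(payload));
      });
    }
    listeners.get(event).add(handler);
    // The returned function unsubscribes, e.g. when a component unmounts.
    return () => listeners.get(event).delete(handler);
  }

  return { subscribe };
}
```

The game board and the game status component could then both call `subscribe('gameState', ...)` on the same manager instead of routing the data through the global state.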

Another problem I encountered with the current prototype was testing. The socket connections in particular sometimes make it hard to create reliable tests, as each test needs its own sockets set up and ready to emit and receive data. The straightforward solution is to create “before” and “after” functions that run before and after each test to set up the sockets and close them afterwards. In the test itself, only the listeners are set, and data can be emitted through the prepared sockets. The really tricky part is determining when to stop the test. A normal test calling a REST API is finished when the response has been received and the data evaluated. With sockets, especially when testing two-player operations such as joining and emitting a player move, you have to watch carefully when to stop the test. Stopping the test is done by calling “done()”. In Mocha it’s simply a parameter of the test function. When “done()” is called, the test ends. Sockets, however, can continue to receive events they are subscribed to. If two sockets have to receive the same event, one socket gets the information first and the other one last. The order in which the sockets receive the information can change, for example when the network delays a packet due to packet loss, meaning that the first socket receives the packet after the second socket. The test would end as soon as one socket received the data and called “done()”, while the other socket still has to receive and evaluate it. When running these tests locally, nothing like this occurred, but it is still a real problem that can cause tests to fail.
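One way to make the end of such a test independent of the arrival order is to call “done()” only after every expected socket has reported in. The helper below is a sketch of that idea, not code from the prototype; `createCountdown`, the socket variables and the event name `move` are assumptions.

```javascript
// Returns a function that calls `done` once it has been invoked `expected`
// times. Any further call is treated as an unexpected extra event.
function createCountdown(expected, done) {
  let remaining = expected;
  return function tick() {
    remaining -= 1;
    if (remaining === 0) {
      done();
    } else if (remaining < 0) {
      throw new Error('received more events than expected');
    }
  };
}

// Sketch of the usage inside a Mocha test (socketA and socketB would be
// created in a "before" hook):
//
// it('delivers the move to both players', (done) => {
//   const tick = createCountdown(2, done);
//   socketA.on('move', (data) => { /* assertions */ tick(); });
//   socketB.on('move', (data) => { /* assertions */ tick(); });
//   socketA.emit('move', { index: 4 });
// });
```

Whichever socket receives the event last triggers “done()”, so the test no longer depends on the order in which the packets arrive.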

Most of the problems I encountered were on the more formal side of this project. A huge problem, which I only realized when there were two to three weeks left until the presentation of the project, was my time management concerning the development and deployment of the application. Development was going slower than I expected because of a slow August and a packed September, in which my practical semester started. After sitting in front of a computer for over 8 hours doing development tasks, I had to spend all of my remaining free time working on my project. I had never expected it to be that hard to get things done after work, but after 8 hours, no matter what I had worked on, I simply wasn’t as concentrated, focused and quick while developing and driving the project forward. I was rather exhausted, and that caused the project’s progress to slow down.

As this is my first bigger project which I decided to do on my own, I got to know the difficulties of planning and managing a project by myself, which led to quite a few problems over the course of the project. Time management has already been mentioned. Additionally, the architecture would need some major refactoring if the application were ever to go into a production environment. This happened due to my limited knowledge about handling all these servers and components and just “coding away”.

The whole idea of this project was to develop something for the cloud. Unfortunately, I set my expectations too high for a single person, especially a beginner, to achieve something that big. I did, however, manage to create an overview of my expectations. I already mentioned the pipeline that would get triggered by an action in the GitHub repository. This pipeline diagram was made to capture everything I would need to research in order to create this kind of pipeline.

Without proper architectural knowledge it is quite hard to keep the code clean and the structure inside each server application reasonable. For prototyping this is somewhat sufficient, but to actively develop and maintain a project, a clean structure and clean code are a must.

Learning for future projects

As this was my first bigger project resulting in actual software with a real use case, many different things came up, both good and bad. In the end the whole project taught me very valuable things about how I should approach the next project during my studies. There are several key points that are worth pointing out.

The first one is a clear project scope that, once defined, should not undergo huge changes. The project scope, especially for a timed project, needs to be adjusted just right to match the available manpower and the available knowledge. Using new and not yet used technology is great, no arguing there, but getting started with new technology takes a lot of time, especially when going beyond the “tutorial” stuff. In my next project, I will make sure to allow enough time for learning new things. This goes hand in hand with proper architectural planning. Having no structure and no plan to follow makes it very hard to maintain and expand code. Other people may have a very hard time understanding the project at all.

Cloud architecture and cloud services come with the benefit of having huge resources on demand. It is definitely a topic that is going to be present for quite some time, so I’ll continue using them. The benefits of cloud computing over traditional computing, like load balancing and creating resources with one call or click, are especially promising and make resources easy to manage. In combination with DevOps, automatic deployment can save a huge amount of time over the course of developing an application.

Realization after finishing the project

During this project, I learned a lot about developing an application that makes, or should make, use of the cloud as a distributed platform enabling my application to scale and run however and wherever I want.

The key realization about project management is that such a rather complex and feature-rich application needs more time and more developers to be finished in time with a releasable build. It is surely doable, but you really need to know your stuff. There would be only little time to get to know additional technologies if you want enough time to focus on releasing a finished build that meets the requirements. It’s more a matter of knowing things and how they work than of being a top-tier developer. More time was spent on researching and trying things out than on actually working with them.

Azure’s cloud services have shown me several possibilities to publish my application, each with totally different requirements and benefits. Understanding what you need and how to implement it is something I have to dig deeper into in my own research time. There is huge potential to be discovered, but you need time to integrate the cloud and get comfortable with it as your infrastructure provider.

The whole project was a lot of fun. Even though I only got around to making a working prototype and barely scratched the surface of cloud computing, I realized the huge potential for further projects and the need to keep working on cloud-backed projects.
