Note: This article was written for the module Enterprise IT (113601a) during the summer semester of 2025.

Introduction

AI has taken over the tech landscape, going from a novel, experimental technology to a critical piece of enterprise infrastructure, and it is perhaps the most discussed technology topic of our century so far. It is often even compared to the dotcom bubble of the 1990s. [1]

Overview of modern AI and associated terms

The current state of AI development is particularly dynamic, with major breakthroughs taking place in what feels like monthly intervals. The resulting stream of new terminology, technologies, and research makes it difficult to pin down the current “state of the art” or to tell which term might emerge as the next big thing. In the following section, I will give a brief overview of a few terms and buzzwords necessary to understand and contextualize this paper appropriately.

AI terms and their relation

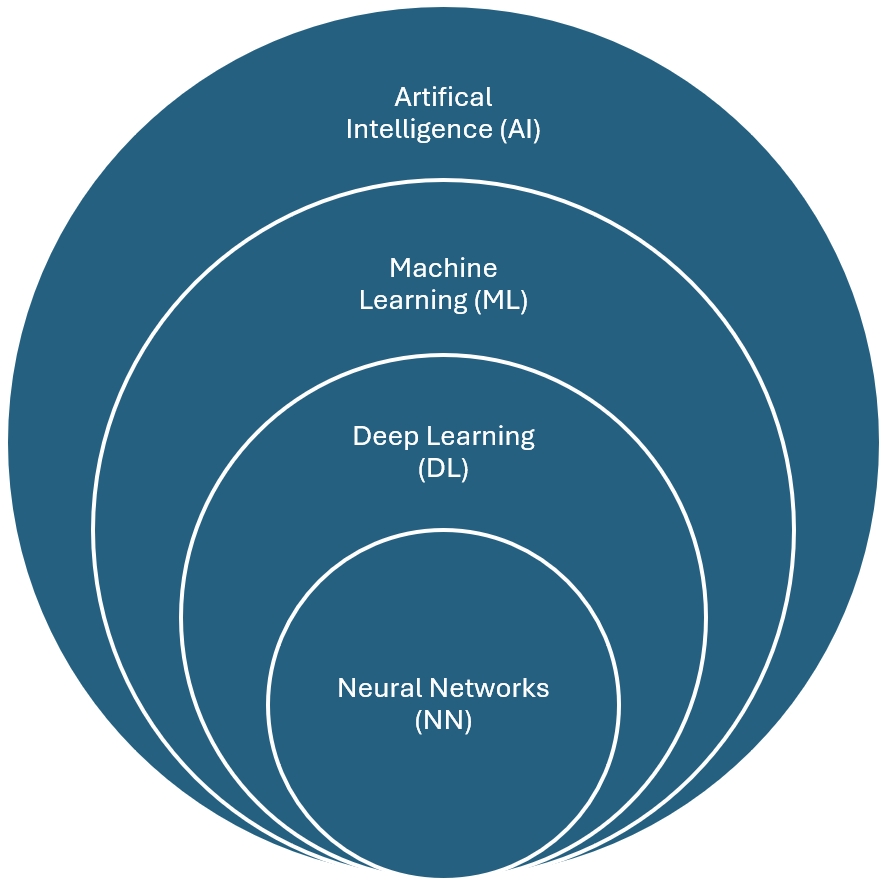

You may be aware of many AI-related terms. To clarify what they are and their relationship, I have created the following graph to illustrate the “is-a” hierarchy of AI terms: [2]

Starting at the top, Artificial Intelligence encompasses all the terms below it and is loosely defined as machines that mimic human intelligence or cognitive functions in any form. Machine Learning is a subset of AI that allows for a first degree of optimization and a more precisely defined application, for example by making predictions based on previously “learned” data. Deep Learning is in turn a subset of Machine Learning. The primary difference lies in how the algorithms learn and the volume of data they consume, as well as the use of multiple learning layers. Neural Networks are at the bottom of the hierarchy and form the core of Deep Learning algorithms. Briefly explained, they mimic how neurons interact in the human brain.

To put these terms into a more practical perspective, here are some typical applications per term. For AI, associated applications are expert systems, chess computers and other algorithmic applications. For ML, there are more nuanced algorithmic applications, such as email spam filtering or recommendation systems for streaming music or video (think Netflix or Spotify recommendations). Deep Learning is where AI becomes very powerful, and it is usually this layer that is meant when people discuss AI. Some of the more common applications here are computer vision (e.g. facial recognition), natural language processing (e.g. translation, sentiment analysis), voice assistants (such as Siri or Alexa), image and video generation (e.g. Midjourney, Sora) and chatbots such as Claude or ChatGPT. Finally, at the lowest level, Neural Networks consist of interconnected nodes (neurons) that each perform simple mathematical calculations. Combined into small networks, they are typically applied to simple tasks such as basic pattern recognition, simple classification, basic image filtering or elementary prediction.
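To make the idea of nodes performing simple calculations more concrete, here is a minimal sketch in Python. It assumes a sigmoid activation and hand-picked weights that are purely illustrative (nothing here is trained, and the function names `neuron` and `tiny_network` are hypothetical); it only shows how a weighted sum per neuron can be chained into a small network.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias,
    squashed into the range (0, 1) by a sigmoid activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def tiny_network(inputs):
    """Two hidden neurons feeding one output neuron.
    All weights are assumptions chosen for illustration, not learned values."""
    h1 = neuron(inputs, [0.5, -0.4], 0.1)
    h2 = neuron(inputs, [-0.3, 0.8], 0.0)
    return neuron([h1, h2], [1.2, -0.7], -0.2)

if __name__ == "__main__":
    # The output is a score between 0 and 1 that could be read as a class probability.
    print(tiny_network([1.0, 0.0]))
```

In a real Deep Learning system the weights would be learned from data, and there would be far more neurons and layers, but each node still performs this kind of simple calculation.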

Two other terms that are often heard are LLM (Large Language Model) and Generative AI. Generative AI is an umbrella term for the Deep Learning applications that synthesize new content such as text, video, images or audio. Large Language Models also fall under Generative AI and describe AI models concerned with understanding and generating human language, such as Claude or ChatGPT.

Open source in context of AI

Open source generally refers to software whose underlying source code is published and freely accessible to the public. In the case of AI, claiming to be open source is a little more complicated, as there are new types of licenses and multiple parts to a complete AI that may or may not be covered by said licenses, resulting in degrees of openness rather than a binary distinction between open and closed source. Mainly, for a truly open-source AI, both the code of the AI itself and the associated training data need to be fully publicly accessible. The architecture and documentation should also be released alongside the code and data, as this technology is particularly new and the code alone may not be enough to comprehend it fully. Also often overlooked is which data is fed to the AI at which stage of training, as there are considerable differences between stages such as general training, alignment and fine-tuning. Blindly feeding all training data to the AI is not optimal in this case. [3]

While tech giants like Meta and Google have received widespread praise for open sourcing many of their AI developments, they often only open source their models at the deployment stage (the final stage), where fine-tuning, alignment or customization is difficult or no longer possible at all. In other cases, they release only the code and not the associated training data. In addition, the released datasets and code often cannot be called truly open source, as access is limited by restrictive licensing terms or is only available through paid APIs. [4]

Some noteworthy open-source AI models include:

- LLaMA by Meta excels at multimodality with text, video and image handling and is known for robust performance. It is often used in applications that require image processing, but also in general Generative AI applications. It is licensed under Meta's LLaMA license, which is often not considered truly open source.

- Mixtral by Mistral AI uses a distinctive “experts” architecture and beats many competing open-source AI models. It is the only open-source frontier-level model developed in Europe and has particularly liberal licensing: it is licensed (at the deployment stage) under Apache 2.0.

- Gemini by Google has extensive Google Suite integrations and excels at reasoning and mathematical applications. It is very tightly licensed, with some training data obtainable only under restrictive access. Alternatively, Google offers the Gemma family of large language models, which is also only loosely licensed as open source but is less restrictive than Gemini.

- Qwen 3 by Alibaba excels in agentic deployments, is able to handle large context windows and competes among the top AI models in code generation. Qwen 3-coder is released under an Apache 2.0 license.

Use Cases and Opportunities

Enterprises can employ AI in many ways and for different purposes. I will go further in depth for Generative AI, AI Agents and MCP later in this section, as these are currently the most relevant ways to utilize AI in an enterprise. Some applications are listed in the table below. [5]

| Area | Application |
| --- | --- |
| Supply chain optimization | AI can monitor inventory, predict demand, and identify possible disruptions in the supply chain |
| Financial fraud detection | AI can analyze patterns in transactions to flag fraudulent behavior |
| Personalized marketing | AI can generate customized marketing material, hypertargeting specific customers |
| Enhanced customer service | AI chatbots and service agents can provide round-the-clock customer service |
| Human resources management | AI-driven HR can accelerate candidate matching, help identify the best prospects for certain positions and aid in tailored training |
| Cybersecurity threat detection | AI can analyze network traffic, spot unusual patterns and detect phishing |
| Healthcare diagnostics and research | AI can assist in the diagnostic evaluation of patient records and predict patient outcomes |

Some use cases in practice are:

- Netflix's recommendation systems process around 500 TB of data

- JPMorgan Chase’s COiN saves around 360,000 hours of document review per year

- Walmart uses supply chain optimization to proactively prevent $2B in lost sales

- Maersk uses predictive maintenance to reduce ship downtime by 10%

Generative AI

The best-known Generative AI tools are chatbots like ChatGPT, Claude and Perplexity, which generate and process text. They are often used in software development to generate code, but also to brainstorm ideas, create meeting notes or even solve math problems. In enterprises they are especially useful for quickly finding information in large collections of text such as documentation or internal wikis. They can also help create automations quickly and with little technical knowledge, and design content or ad campaigns tailored to every customer profile.

At the core of Generative AI are the large datasets, code bases and images used to train the underlying models. In practice, Generative AI is mostly used to generate code or to quickly retrieve information from wikis or large document databases. GitHub Copilot is one example, contributing up to 40% of code in environments where it is integrated, according to Microsoft.

The challenges associated with Generative AI are primarily increased energy costs, but also the potential need to fine-tune the model with selected data and to find ways of connecting the model to existing data and information repositories. AI Agents, another relevant technology that I explain below, are able to keep track of more complex goals and break them down into smaller steps. They use different tools and methods to achieve the goal incrementally while supervising their own progress and the accuracy of responses, and they can adapt their problem-solving approach on the fly to changing environments or different responses. [6]

AI Agents

AI Agents are more about toolchains, decision making and workflows than about individual prompts and questions. While AI Agents also use Generative AI at their core, they can solve multi-layered tasks by breaking them down into manageable steps and choosing tools and connected data sources themselves, for example by prompting Generative AI as needed. This is what sets them apart from mere Generative AI. AI Agents are often used in customer service processes. For example, the RV retailer Camping World saw a 40% increase in customer engagement, with wait times dropping from hours to a mere 33 seconds. [7]
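The agent workflow described above can be sketched roughly as follows. This is a deliberately simplified Python illustration, not how any particular agent framework works: the tools (`lookup_order`, `draft_reply`), the order data and the `plan` function are all hypothetical stand-ins, and in a real agent the plan would be produced by a Generative AI model rather than hard-coded.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; in a real deployment these would wrap APIs or databases.
def lookup_order(order_id: str) -> str:
    orders = {"A-100": "shipped", "A-200": "processing"}  # made-up sample data
    return orders.get(order_id, "unknown")

def draft_reply(status: str) -> str:
    return f"Your order is currently: {status}."

TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "draft_reply": draft_reply,
}

def plan(goal: str) -> List[str]:
    """Stand-in for the planning step: returns tool names in execution order.
    A real agent would ask an LLM to break the goal down into these steps."""
    return ["lookup_order", "draft_reply"]

def run_agent(goal: str, initial_input: str) -> Tuple[str, List[str]]:
    """Run the planned steps one by one, feeding each result into the next tool."""
    log: List[str] = []
    current = initial_input
    for step in plan(goal):
        current = TOOLS[step](current)
        log.append(f"{step} -> {current}")
        # Simple self-check: stop early if a step produced nothing usable.
        if current == "unknown":
            log.append("stopping: no usable result")
            break
    return current, log

if __name__ == "__main__":
    answer, steps = run_agent("Tell the customer their order status", "A-100")
    print(answer)
    print(steps)
```

The point of the sketch is the loop: a goal is decomposed into steps, each step selects a tool, and intermediate results are checked before the agent continues.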

MCP

Model Context Protocol (MCP) is a new advancement in enterprise AI integration, aiming to solve one of the most persistent challenges organizations face when deploying AI systems: seamless communication between different AI models and existing enterprise tools.

Traditionally, enterprises struggled with AI systems that operated independently, requiring custom integrations for each tool, database, or service they needed to access. MCP solves this by establishing a standardized communication protocol that allows AI applications to interact with enterprise systems through a unified interface. MCP can be understood as a translation layer for AI systems, eliminating the need for custom integrations with every tool or service. [8]
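To give a rough feel for what such a unified interface looks like, here is a simplified Python sketch. MCP messages are based on JSON-RPC 2.0, and the method names `tools/list` and `tools/call` come from the protocol; everything else is an assumption for illustration. The tool `search_wiki` and the in-process `handle` function are hypothetical, and the sketch leaves out the initialization handshake, transports and the official SDKs entirely.

```python
import json
from typing import Any, Dict

# Hypothetical enterprise tool exposed to AI applications.
def search_wiki(query: str) -> str:
    return f"No results for '{query}' (stub)."

TOOLS = {
    "search_wiki": {
        "description": "Search the internal wiki (hypothetical example tool).",
        "handler": search_wiki,
    }
}

def handle(request: Dict[str, Any]) -> Dict[str, Any]:
    """Toy server-side dispatcher for two MCP-style JSON-RPC methods."""
    method = request.get("method")
    if method == "tools/list":
        result: Dict[str, Any] = {
            "tools": [
                {"name": name, "description": tool["description"]}
                for name, tool in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = request["params"]
        text = TOOLS[params["name"]]["handler"](**params["arguments"])
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # -32601 is the standard JSON-RPC "method not found" error code.
        return {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}

if __name__ == "__main__":
    call = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "search_wiki", "arguments": {"query": "vpn setup"}},
    }
    print(json.dumps(handle(call), indent=2))
```

Because every tool is listed and called through the same message shape, an AI application only needs to speak this one protocol instead of a custom integration per tool.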

Challenges and opportunities for enterprises

Deciding to deploy and host your own AI brings its own set of challenges, but also opportunities, for enterprises. Below I list some key topics and compare the challenges and opportunities associated with each. [9]

| Topic | Challenge | Opportunity |
| --- | --- | --- |
| Customization and flexibility | Preparing data for training or fine-tuning may require additional data processing effort, as well as expertise in tuning procedures | Open models allow training data to be adjusted in pre-training phases or in fine-tuning to produce custom-fit solutions |
| Cost efficiency and control | Ownership costs increase with growing infrastructure and maintenance demands and worsen at scale | Cheaper when hosting AI within own systems, higher ROI, no licensing fees |
| Compliance and legal risk | Volatile AI regulation; compliance and legal documentation may be lacking; possible exposure to additional liability in customer-facing applications | Open-source AI offers transparency that benefits auditing processes; decision making of models can be fine-tuned to compliance needs |
| Security and privacy concerns | Possibly insecure, poorly maintained or vulnerable code; additional security measures for AI needed | Complete control over data processing and deployments; data never leaves own infrastructure |
| Maintenance and support | Requires domain knowledge and maintenance effort; no dedicated support | Enterprise can tweak and maintain AI at will, allowing custom adaptations and fixes without relying on a vendor |
| Integration and infrastructure complexity | Significant technical expertise required; different data formats and varying performance across systems | Can be adjusted to integrate deeply into existing tooling and infrastructure |
| Quality and reliability of open models | Access to complete or unbiased training data may be lacking due to financial constraints; quality may vary widely between open-source projects | The open-source ecosystem has a large community, rapid development and swarm intelligence |
| Vendor lock-in | Restrictive ecosystems may make migrating to other vendors financially unfeasible, or vendors may restrict migration altogether | Nonexistent, as long as data can be transferred in a usable format |
| Data ownership | Data resides with the owner of the AI, possibly in a physical location that is politically disadvantageous for the customer; the vendor is responsible for data security and availability | Enterprise has full control over data and data residency and may control the physical location of and access to data |

The conclusion to be drawn here is that at smaller scale, and provided the necessary expertise is available, the opportunities of open-source AI outweigh the challenges. At larger scales, however, costs such as compliance and infrastructure may rise steeply and significantly diminish the ROI of open-source AI.

When is AI enterprise ready?

Another major challenge is deciding when a given open-source AI model is ready for enterprise deployment.

Scalability is the obvious challenge here, as an AI needs to sustain increased loads at peak times and serve responses in a timely manner to be usable at enterprise scale. Hand in hand with this goes reliability, not only in terms of availability, but also in terms of data integrity and quality of response under changing circumstances and system loads.

Security may be the most important point for any enterprise choosing to deploy open-source AI. Given that confidential information is very likely to be processed by AI at enterprise scale, robust security measures and guidelines are needed. This leads to the topic of integration: while open source allows for virtually limitless customization, significant effort is needed to maintain integrations, ensure secure communication, and protect sensitive data. Governance, in turn, entails creating enterprise-ready guidelines and policies regarding the use of AI across the enterprise.

A key factor in choosing open-source AI is often also a promising ROI, which requires that the AI actually delivers value by saving time and providing clear benefits for its users. Ease of use is also crucial at enterprise level, as in such large-scale deployments people from all walks of life and with different affinities for technology will need to be able to interact with AI. Finally, AI is often criticized for consuming large amounts of electricity and thereby contributing negatively to climate change. This calls not only for a certain level of efficiency in terms of climate sustainability, but also for the ability to adapt the AI in its current state to future technologies and infrastructure in terms of technological sustainability. [10]

Outlook: Social Implications of open-source AI

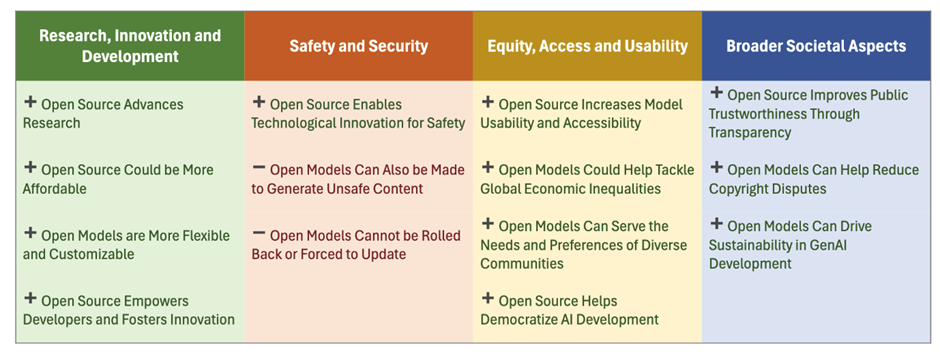

It is also worthy to briefly note, that while AI is certainly exciting for enterprises, there are also social implications worth considering. With many major companies having made contributions to open-source AI to compete in the AI race, enabling unprecedented levels of control and transparency in utilizing cutting edge technology, it is easy to simply label open-source AI as “good”. Regardless, there are still certain social aspects worth mentioning when thinking about open-source AI, as illustrated in the following table adapted from “Risks and Opportunities of Open-Source Generative” [11]

Conclusion

Open-source AI, as it stands today, presents an exciting opportunity for enterprises. After overcoming the initial hurdles of acquiring expertise and infrastructure, it offers high ROI for deploying applications like AI Agents or Generative AI. When deciding between proprietary and open-source AI, the scale of deployment plays the most important role, since maintaining self-hosted AI deployments becomes more demanding at scale, and additional areas of maintenance such as governance and security may arise as well. However, this does not mean open-source AI is not feasible at scale: when robust guidelines and policies around security, governance, integration and maintenance are put in place, they can be scaled along with the AI deployment. As AI is still a very volatile technology that is maturing before our eyes, it is quite possible that many of the hurdles named earlier, such as integration, will shrink for open-source models thanks to innovations such as MCP. Open-source AI also clearly outperforms proprietary solutions in transparency, flexibility and the ability to fine-tune AI applications to special needs such as strict data sovereignty or governance, where proprietary vendors often cannot make exceptions or offer custom solutions.

Finally, regardless of whether an enterprise chooses a proprietary vendor or open-source AI, building robust policies, integrations, and training around AI use is absolutely necessary for sustainable and effective use in any capacity. A pragmatic prediction is that future agentic AI toolchains will employ a mix of open-source AI, proprietary AI and non-AI technology to solve increasingly complex tasks and problems.

References

[1] Reuters (2025). Is today’s AI boom bigger than the dotcom bubble? https://www.reuters.com/markets/europe/is-todays-ai-boom-bigger-than-dotcom-bubble-2025-07-22/

[2] IBM (2023). AI vs. machine learning vs. deep learning vs. neural networks: What’s the difference? https://www.ibm.com/think/topics/ai-vs-machine-learning-vs-deep-learning-vs-neural-networks

[3] RedHat (2019). What is open source? https://www.redhat.com/en/topics/open-source/what-is-open-source

[4] Eiras, et al. (2024). Risks and Opportunities of Open-Source Generative AI https://arxiv.org/abs/2405.08597; IBM (2025). What is open-source AI? https://www.ibm.com/think/topics/open-source-ai

[5] IBM. What is enterprise AI? https://www.ibm.com/think/topics/enterprise-ai

[6] IBM (2025). Generative AI use cases for the enterprise https://www.ibm.com/think/topics/generative-ai-use-cases

[7] IBM (2025). Agentic AI vs. generative AI https://www.ibm.com/think/topics/agentic-ai-vs-generative-ai#7281538

[8] IBM (2025). What is Model Context Protocol (MCP)? https://www.ibm.com/think/topics/model-context-protocol

[9] IBM (2025). Open-source AI in 2025: Smaller, smarter and more collaborative https://www.ibm.com/think/news/2025-open-ai-trends; Cloud Security Alliance (2025). AI and Privacy 2024 to 2025: Embracing the Future of Global Legal Developments https://cloudsecurityalliance.org/blog/2025/04/22/ai-and-privacy-2024-to-2025-embracing-the-future-of-global-legal-developments; Open Source Security Foundation (2025). Predictions for Open Source Security in 2025: AI, State Actors, and Supply Chains https://openssf.org/blog/2025/01/23/predictions-for-open-source-security-in-2025-ai-state-actors-and-supply-chains/; Computer Weekly (2025). The hidden security risks of open source AI https://www.computerweekly.com/opinion/The-hidden-security-risks-of-open-source-AI; IBM (2025). The 5 biggest AI adoption challenges for 2025 https://www.ibm.com/think/insights/ai-adoption-challenges; Google (2025). Level up your AI skills with open-source AI tools https://cloud.google.com/use-cases/open-source-ai

[10] IBM (2025). What is open-source AI? https://www.ibm.com/think/topics/open-source-ai

[11] Eiras, et al. (2024). Risks and Opportunities of Open-Source Generative AI https://arxiv.org/abs/2405.08597
