Introduction

Information technology lets us look up almost anything we are interested in. Whether you want to know what the weather will be like tomorrow or how to bake your favorite cake, you can find the answer today. As a user, however, it is becoming more and more important to get information quickly and comfortably. Google's search engine is a good example: you can either type in what you are looking for or simply say it. Speaking is more convenient than typing, and you get your answer faster.

As a team, we decided that applications controlled by spoken language have a lot of potential. That is why we built an Alexa Skill for HdM students that gives them information about their personal timetable. Every HdM student who owns an Amazon Alexa device can ask Alexa about their personal timetable.

The benefits of this skill are clear: students get a more comfortable way to access this information. Without the skill, they have to pick up a computer, smartphone or tablet, open the web page and enter their personal credentials. That is a lot of steps when you often only want a single piece of information. With the skill, students can simply ask Alexa almost anything about their timetable and skip all of these steps.

General Information

Alexa is Amazon's voice service. To use it, Amazon has released two devices, the Amazon Echo and the Amazon Echo Dot, which let you interact with the Alexa Voice Service (AVS). Using spoken language you can, for example, play music or ask for the latest sports results.

Amazon also enables developers to use AVS to add Alexa to their own devices, which means you are not strictly bound to Amazon's hardware.

Out of the box, your device provides standard applications such as playing music or retrieving weather information. As a developer, you can add further applications by developing an Amazon Alexa Skill (AAS), which extends the default skill set.

To build an Alexa Skill you need to sign up for an Amazon Developer Account. An Alexa Skill consists of three main parts: the logical part, the intents with their slots, and the Alexa App, which gives the user configuration options.

The logical part is the actual piece of software that connects the Alexa API with (in this example) the HdM API.

Authentication in Alexa Skills

Many skills require the user to authenticate. To provide the login credentials, the user needs the Alexa App on their smartphone, where all skill settings can be configured.

As developers, we had to build a website with input fields for the user's credentials. During authentication, an authentication token is generated by the request processing service.

The user has to enable the skill in the skill store of the Alexa App. After activation, the app shows a tab for the skill configuration. When the user then activates the skill on the Echo device, the website is called with the configured credentials to obtain the token. From then on, this token is included in every request sent by the Alexa Skill.

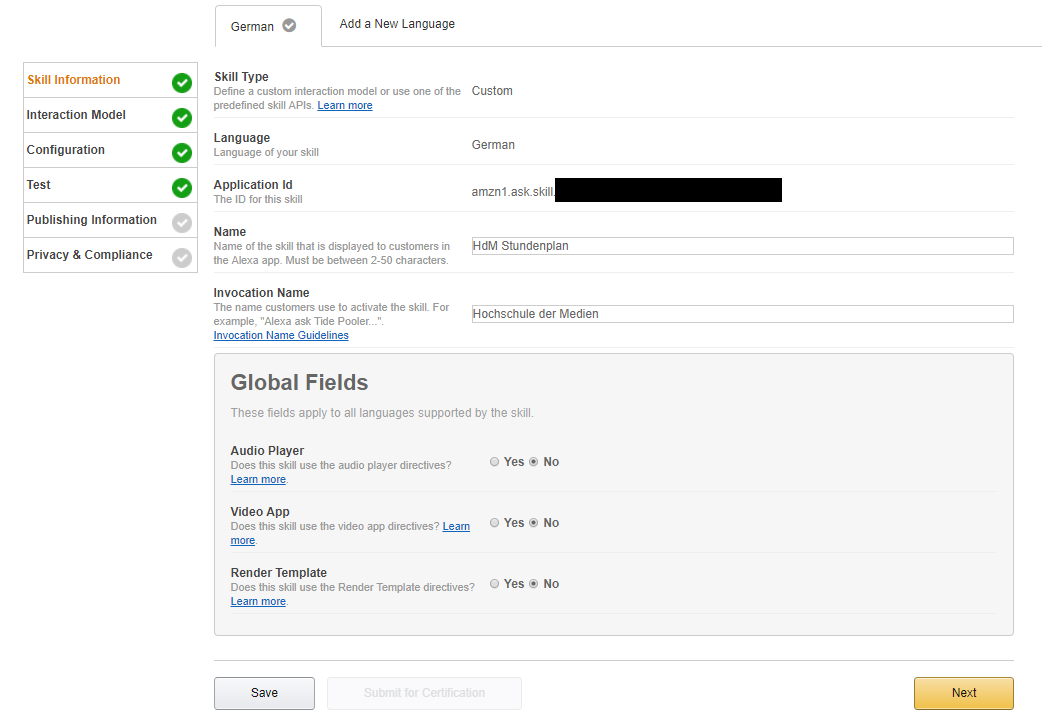

Create an Alexa Skill on Amazon Backend

Once you have signed up, you need to create a new skill in the Amazon backend and configure it to get it working. The configuration starts with the skill's display name and its invocation name, the name users say to activate it.

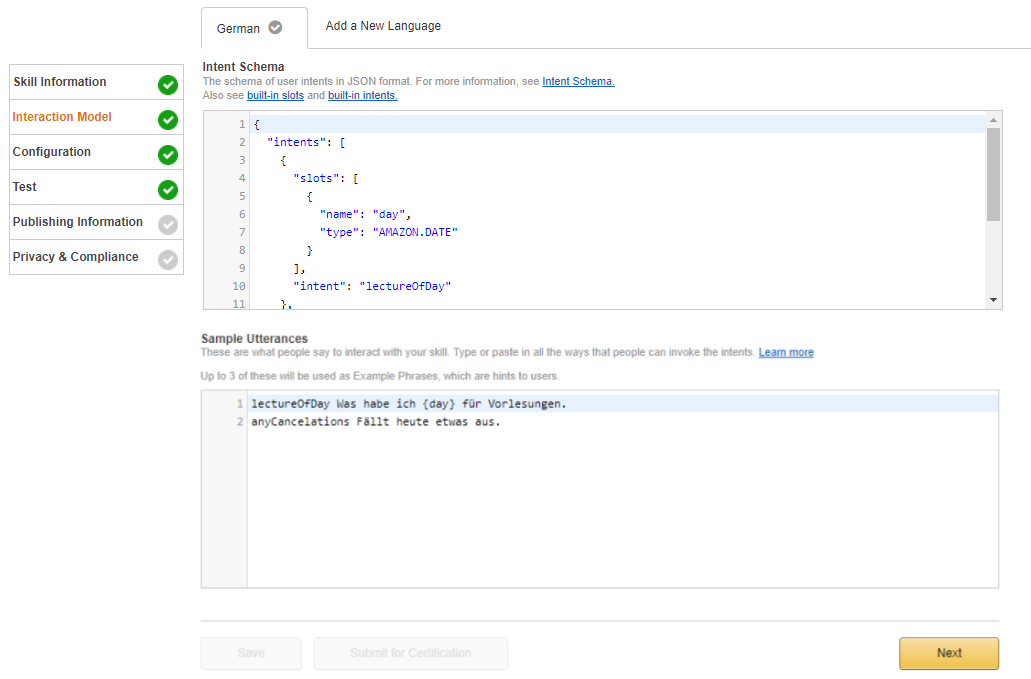

Amazon Echo works with so-called intents and slots. An intent is similar to a function, and slots are its parameters. Echo recognizes which intent the user is asking for and fills the slots automatically. We configured everything needed to perform these actions as intents with their corresponding slots. At the bottom of the same page you enter the speech commands (sample utterances) that are mapped to the intents.

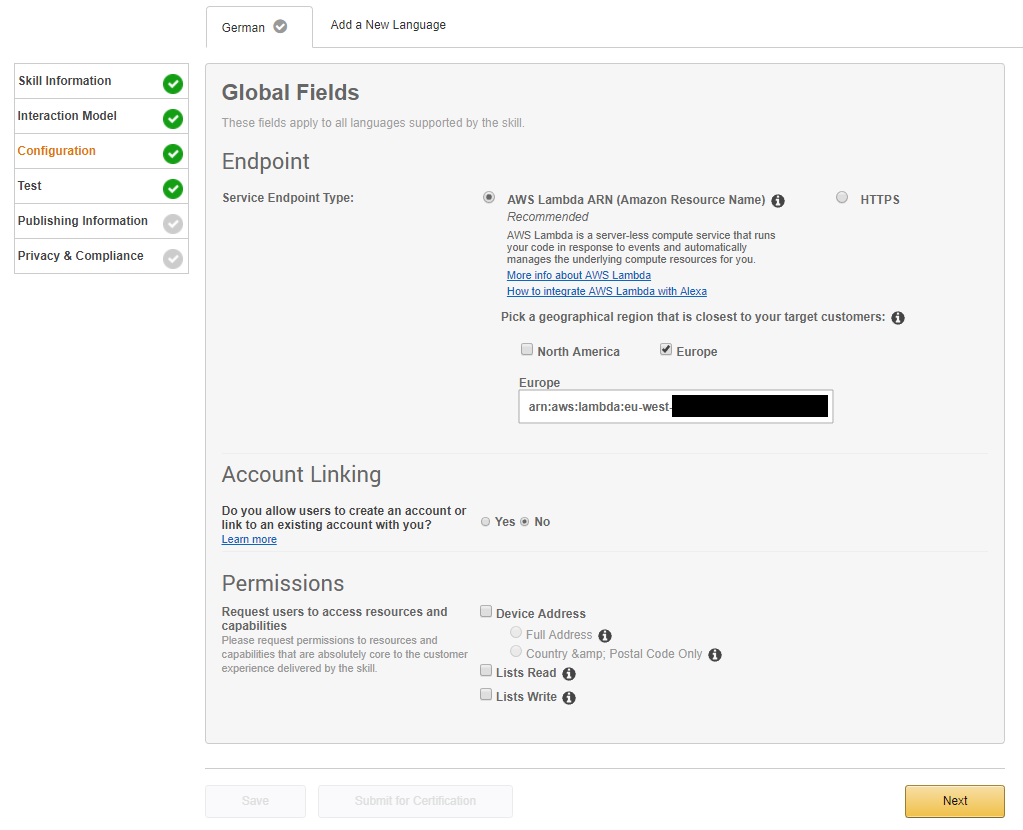

To test the skill, it is sufficient to configure the endpoint where the skill is hosted. In our case, the skill was hosted on AWS Lambda.

Implementation

Development with Alexa Skills Kit for Java

Amazon provides a library for skill development in Java. It handles parsing the incoming and outgoing JSON strings.

This lets us focus on the skill's logic. The library provides an interface named Speechlet with four predefined methods, which the library calls during a session:

```java
@Override
public SpeechletResponse onIntent(IntentRequest request, Session session) throws SpeechletException {
    // Called when the user invokes one of the skill's intents
    return null;
}

@Override
public SpeechletResponse onLaunch(LaunchRequest arg0, Session session) throws SpeechletException {
    // Called when the user starts the skill without asking for a specific intent
    return null;
}

@Override
public void onSessionEnded(SessionEndedRequest arg0, Session session) throws SpeechletException {
    // Called when the session ends, e.g. the user exits or the session times out
}

@Override
public void onSessionStarted(SessionStartedRequest arg0, Session session) throws SpeechletException {
    // Called when a new session begins, before onLaunch or onIntent
}
```

The methods onIntent and onLaunch are the most important ones for our skill.

As the name suggests, the onLaunch method is called when a user starts the skill. Here we simply greet the user and ask how the skill (in the 'person' of Alexa) can help.

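```java
@Override
public SpeechletResponse onLaunch(LaunchRequest arg0, Session arg1) throws SpeechletException {
    PlainTextOutputSpeech speech = new PlainTextOutputSpeech();
    // "Welcome to the timetable of Hochschule der Medien. How can I help?"
    speech.setText("Willkommen im Stundenplan der Hochschule der Medien. Wie kann ich helfen?");
    // An ask response keeps the session open; createRepromptSpeech() is a helper not shown here
    return SpeechletResponse.newAskResponse(speech, createRepromptSpeech());
}
```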

The onIntent method is called when a user invokes an intent of the skill. Information about the intent is passed in the request parameter, so we can simply read the intent's name to decide which part of our code should handle it.

```java
private static final String INTENT_LECTUREOFDAY = "lectureOfDay";
// Assumed value: the original listing uses INTENT_STOP without defining it;
// AMAZON.StopIntent is Alexa's built-in stop intent
private static final String INTENT_STOP = "AMAZON.StopIntent";

@Override
public SpeechletResponse onIntent(IntentRequest request, Session session) throws SpeechletException {
    String intentName = request.getIntent().getName();
    if (intentName != null) {
        // Dispatch to the handler that belongs to the recognized intent
        switch (intentName) {
            case INTENT_LECTUREOFDAY:
                return handleLectureOfDay(request.getIntent(), session);
            case INTENT_STOP:
                return this.handleStopIntent();
            default:
                break;
        }
    }
    throw new SpeechletException("Invalid intent");
}
```

Inside the handler methods we read the slot values from the intent. For this, the library's Intent class provides the method getSlot(String slotName), and the returned slot has a method getValue():

```java
intent.getSlot("MySlot").getValue()
```

HdM Timetable API

In general, the HdM Timetable API only returns the current week, so it is not possible to get information about upcoming or previous weeks.

When the API (http://www.hdm-stuttgart.de/studenten/stundenplan/pers_stundenplan/stundenplanfunktionen/all_in_one_sql/timetable) is called with HTTP basic authentication, it responds with JSON like this:

```json
{
  "lectures": [
    {
      "vorlesung_id": "5214854",
      "sgblock_vorlesung_semester_id": "5170336",
      "name": "Software Development for Cloud Computing",
      "type": "regular",
      "tag_id": 4,
      "zeit_id": 5,
      "edvnr": "113479a",
      "raum": "136",
      "findet_statt": 1
    },
    {
      "vorlesung_id": "5214854",
      "sgblock_vorlesung_semester_id": "5170336",
      "name": "Software Development for Cloud Computing",
      "type": "regular",
      "tag_id": 4,
      "zeit_id": 6,
      "edvnr": "113479a",
      "raum": "135",
      "findet_statt": 1
    }
  ]
}
```

The example shows the lecture Software Development for Cloud Computing, which takes place in the 5th and 6th lecture blocks on Thursday, i.e. from 2:15 PM to 3:45 PM and from 4:00 PM to 5:30 PM. The first part of the lecture is in room 136, the second in room 135. Both blocks take place ('findet_statt' is 1); if you make the same request during the holidays, for example, 'findet_statt' is 0 instead. Finally, 'edvnr' is the university's internal unique ID for a lecture.

The API provides some more information, but since it is undocumented we unfortunately cannot say exactly what it means.
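The basic-authentication call described above can be sketched with the JDK alone. This is a minimal sketch, not the skill's actual code: the class name TimetableClient and both method names are hypothetical, and it assumes the endpoint still answers as described and returns UTF-8. It uses HttpURLConnection rather than the newer java.net.http client because the article's Lambda runtime was Java 8.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.stream.Collectors;

// Hypothetical helper class; not part of the original skill code.
final class TimetableClient {

    // Endpoint as quoted in the article (returns the current week only).
    static final String TIMETABLE_URL = "http://www.hdm-stuttgart.de/studenten/stundenplan/"
            + "pers_stundenplan/stundenplanfunktionen/all_in_one_sql/timetable";

    private TimetableClient() {
    }

    /** Builds the value of an HTTP Basic "Authorization" header from user name and password. */
    static String basicAuthHeader(String user, String password) {
        String credentials = user + ":" + password;
        return "Basic " + Base64.getEncoder()
                .encodeToString(credentials.getBytes(StandardCharsets.UTF_8));
    }

    /** Requests the personal timetable and returns the raw JSON body (assumed UTF-8). */
    static String fetchTimetableJson(String user, String password) throws IOException {
        HttpURLConnection connection = (HttpURLConnection) new URL(TIMETABLE_URL).openConnection();
        try {
            connection.setRequestMethod("GET");
            connection.setRequestProperty("Authorization", basicAuthHeader(user, password));
            int status = connection.getResponseCode();
            if (status != HttpURLConnection.HTTP_OK) {
                throw new IOException("Timetable API answered with HTTP " + status);
            }
            try (BufferedReader reader = new BufferedReader(
                    new InputStreamReader(connection.getInputStream(), StandardCharsets.UTF_8))) {
                return reader.lines().collect(Collectors.joining("\n"));
            }
        } finally {
            connection.disconnect();
        }
    }
}
```

Passing the returned string to a JSON library of your choice yields the "lectures" array shown above; the article does not say which parser the team used.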

For our skill we used these fields:

- name ([string] used directly by the Alexa Voice Service)
- tag_id ([int | 1-7] day of the week, mapped to and from the spoken day name)
- zeit_id ([int | 1-8] separates a day into lecture blocks)
- raum ([string] room, also used directly)
- findet_statt ([int | 0-1] used as a boolean indicating whether the lecture takes place)
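Because tag_id runs from 1 (Monday) to 7 (Sunday), it happens to match the ISO-8601 numbering used by java.time.DayOfWeek, which sidesteps the off-by-one problem described in the conclusion. The sketch below maps tag_id to DayOfWeek and to the German day names the skill speaks, and back again. The class and method names are hypothetical, and it assumes the day slot delivers plain German day names such as "Donnerstag".

```java
import java.time.DayOfWeek;
import java.util.Optional;

// Hypothetical helper; names are illustrative, not taken from the original code.
final class TagIdMapper {

    // German day names, indexed by tag_id - 1 (Monday first, as in the API).
    private static final String[] GERMAN_DAY_NAMES = {
        "Montag", "Dienstag", "Mittwoch", "Donnerstag", "Freitag", "Samstag", "Sonntag"
    };

    private TagIdMapper() {
    }

    /** tag_id (1 = Monday ... 7 = Sunday) to DayOfWeek; ISO-8601 uses the same numbering. */
    static DayOfWeek toDayOfWeek(int tagId) {
        return DayOfWeek.of(tagId); // throws java.time.DateTimeException outside 1..7
    }

    /** DayOfWeek back to the API's tag_id. */
    static int toTagId(DayOfWeek day) {
        return day.getValue();
    }

    /** German day name Alexa can speak for a tag_id. */
    static String spokenDay(int tagId) {
        return GERMAN_DAY_NAMES[toDayOfWeek(tagId).getValue() - 1];
    }

    /** Spoken German day name (e.g. a slot value) to tag_id, if it is a known day. */
    static Optional<Integer> tagIdForSpokenDay(String slotValue) {
        if (slotValue == null) {
            return Optional.empty();
        }
        String normalized = slotValue.trim();
        for (int i = 0; i < GERMAN_DAY_NAMES.length; i++) {
            if (GERMAN_DAY_NAMES[i].equalsIgnoreCase(normalized)) {
                return Optional.of(i + 1); // index 0 is Monday, so tag_id = index + 1
            }
        }
        return Optional.empty();
    }
}
```

Keeping the conversion in one place means a wrong assumption about the numbering only has to be fixed once, and it is easy to cover with unit tests.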

Test-driven development with Java

Our Alexa Skill code runs in the cloud, where we cannot simply debug it. That is why unit tests are all the more important for ensuring that our software works correctly. Thanks to the encapsulation of our components, they were easy to test.

First we planned our architecture with its components, classes and their public methods. Then we wrote unit tests defining the expected results. Finally, we implemented the logic until all tests passed.

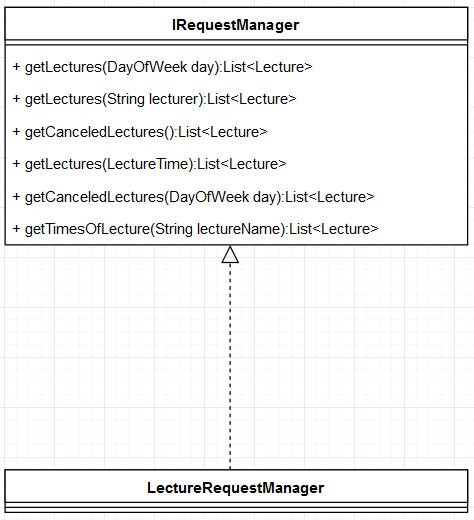

Request-Management

Our Alexa skill can handle a set of requests; for example, you can ask which lectures you have on a specific day or when a particular lecture is held. To retrieve this information programmatically, we defined an interface IRequestManager, which is implemented by the concrete class LectureRequestManager. A rough, incomplete architecture diagram is shown below:

For each request sent to the HdM timetable API, you get a JSON response containing all lectures of the personal timetable.

All required information is extracted from this JSON data and stored in Lecture objects, which are instances of a custom Lecture class.
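The article does not show the Lecture class itself. A minimal sketch, assuming one immutable object per entry of the "lectures" array and field names derived from the JSON keys listed earlier; the factory fromApiFields is hypothetical, and the JSON parsing step is left out because the article does not name a library. It also omits the lecturer property used by getLectures(String), since the JSON excerpt does not show where that value comes from.

```java
import java.util.Objects;

// Hypothetical sketch of the custom Lecture class; field names are assumptions.
final class Lecture {

    private final String name;          // JSON "name"
    private final int tagId;            // JSON "tag_id": 1 = Monday ... 7 = Sunday
    private final int zeitId;           // JSON "zeit_id": lecture block 1..8
    private final String room;          // JSON "raum"
    private final boolean takesPlace;   // JSON "findet_statt": 1 = yes, 0 = no

    Lecture(String name, int tagId, int zeitId, String room, boolean takesPlace) {
        if (tagId < 1 || tagId > 7) {
            throw new IllegalArgumentException("tag_id out of range: " + tagId);
        }
        if (zeitId < 1 || zeitId > 8) {
            throw new IllegalArgumentException("zeit_id out of range: " + zeitId);
        }
        this.name = Objects.requireNonNull(name, "name");
        this.tagId = tagId;
        this.zeitId = zeitId;
        this.room = room;
        this.takesPlace = takesPlace;
    }

    /** Converts the raw values of one "lectures" entry into a Lecture. */
    static Lecture fromApiFields(String name, int tagId, int zeitId, String raum, int findetStatt) {
        return new Lecture(name, tagId, zeitId, raum, findetStatt == 1);
    }

    String getName() { return name; }
    int getTagId() { return tagId; }
    int getZeitId() { return zeitId; }
    String getRoom() { return room; }
    boolean takesPlace() { return takesPlace; }

    @Override
    public String toString() {
        return name + " (day " + tagId + ", block " + zeitId + ", room " + room + ")";
    }
}
```

Validating the value ranges in the constructor makes a misread field show up as an exception in a unit test rather than as a wrong answer from Alexa.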

Each method in LectureRequestManager works on a list of Lecture objects and keeps only the lectures that match certain criteria. An example of such a method is shown below:

```java
@Override
public List<Lecture> getLectures(String lecturer) {
    // Make sure this.lectures has been populated from the timetable API
    this.loadLectures();
    // Keep only the lectures held by the given lecturer
    // (calling equals on the argument avoids a NullPointerException for lectures without a lecturer)
    return this.lectures.stream()
            .filter(lecture -> lecturer.equals(lecture.getLecturer()))
            .collect(Collectors.toList());
}
```

The filter criteria are passed in as the method's parameters. This method takes the list of lectures and keeps only those whose lecturer property matches the given lecturer name. The result is collected into a new list and returned.
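Following the same pattern, a request such as "Which lectures do I have on Thursday?" could be answered by filtering on the day and on findet_statt. The sketch below is hypothetical: getLecturesOfDay is not part of the article's LectureRequestManager, and a reduced Lecture stand-in keeps it self-contained. It additionally sorts by zeit_id so Alexa can read the lectures in chronological order.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical sketch; the article only shows getLectures(String lecturer).
final class DayFilterSketch {

    /** Minimal stand-in for the Lecture class, reduced to the fields needed here. */
    static final class Lecture {
        final String name;
        final int tagId;          // 1 = Monday ... 7 = Sunday
        final int zeitId;         // lecture block 1..8
        final boolean takesPlace; // findet_statt == 1

        Lecture(String name, int tagId, int zeitId, boolean takesPlace) {
            this.name = name;
            this.tagId = tagId;
            this.zeitId = zeitId;
            this.takesPlace = takesPlace;
        }
    }

    private DayFilterSketch() {
    }

    /** Lectures held on the given day, cancelled ones removed, in block order. */
    static List<Lecture> getLecturesOfDay(List<Lecture> lectures, int tagId) {
        return lectures.stream()
                .filter(lecture -> lecture.tagId == tagId)   // same day of week
                .filter(lecture -> lecture.takesPlace)       // drop findet_statt == 0
                .sorted(Comparator.comparingInt((Lecture lecture) -> lecture.zeitId)) // block order
                .collect(Collectors.toList());
    }
}
```

For the sample JSON above, asking for tag_id 4 would return both Cloud Computing entries, block 5 before block 6.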

All functions in LectureRequestManager are implemented in a similar way, so that every kind of request gets a correct, matching answer.

Hosting an Alexa Skill on AWS Lambda

While an Alexa Skill can be hosted on any server that accepts HTTPS requests, we decided to host it on AWS Lambda, a service provided by Amazon itself. AWS Lambda follows the serverless computing model, which means the code is not running all the time. Roughly speaking, when a request arrives, the application is started, handles the request, and is stopped again. This way the servers can host more applications, because resources are only used when they are actually needed.

In the AWS developer console we had to configure a few values: the runtime (Java 8), the fully qualified name of the entry point in our source code, and the trigger that starts the Lambda function (Alexa Skills Kit).

After configuring the AWS Lambda function, we had to implement that entry point in our Java code.

The Alexa Skills Kit provides a class named SpeechletRequestStreamHandler. We only had to create a class that extends it, with a constructor that calls the super constructor.

In the Lambda configuration we set the fully qualified name of this class as the entry point; otherwise AWS Lambda would not be able to start our code.

Last but not least, we compiled our code and uploaded the JAR file to the AWS developer console.

Since all dependencies have to be included in the JAR file, we ran the Maven build with the following parameters:

```shell
mvn clean assembly:assembly -DdescriptorId=jar-with-dependencies package
```


Conclusion

One problem we faced during development was that we did not know exactly what the values of some fields in the JSON data of the HdM Timetable API mean (see "HdM Timetable API"), because the API is undocumented. For some fields, such as "zeit_id", we had to guess. For example, when we asked Alexa about the lectures on a given day, say Wednesday, she always responded with Tuesday's lectures. Eventually we found the cause: the API does not start the week with 0, neither for Sunday (American format) nor for Monday. Instead, Monday is mapped to 1, increasing every day up to Sunday, which is 7.

If you want to develop software that uses data provided by HdM, make sure you get access to a complete and well-documented API (perhaps the API behind the HdM App, or something similar, can be used for this). Otherwise you will waste time fixing needless problems that a clearly defined interface would have avoided.

The Alexa Voice Service has a lot of potential, but do not expect it to solve everything or to offer unlimited flexibility (for example, there are no custom strings).

It is also quite hard to get reliable information from Amazon about skill development. Sometimes several documents or instructions describe conflicting ways to solve the same problem, and there is a high chance of running into outdated information.

All in all, it was a nice project, and we were really proud when Alexa started responding to our questions with suitable answers.

Title Image: Amazon Press Media (http://phx.corporate-ir.net/phoenix.zhtml?c=176060&p=irol-imageproduct41)
