Through networking and the Internet, more and more people can share their opinions and spread news. This has both positive and negative consequences. On the one hand, it is much easier to find the news you want and to publish your own. On the other hand, it is relatively easy to influence other users. A look at Google Trends for the search term ‘fake news’ shows how relevant the topic is: since October 2016, people have been searching for the term increasingly often. There are individual search peaks that can probably be linked to specific events. We can expect to come into contact with fake news every day.

In the lecture Secure Systems, we dealt with different areas of security. Our specialization, that of Benedikt Reuter and myself, was fake news, and we want to summarize what we learned in this blog entry. Our questions are: What are fake news? Where do they come from? Who creates them? What can you do against them? We will go into more detail on some areas that we only skimmed over during the semester, with a particular focus on social bots, fighting fake news with artificial intelligence, and the connection between fake news and information warfare. Finally, we will answer the question of whether fake news are a security issue [1, 2].

Fake news – What are they?

False information, false news or fake news? All of them sound similar, but they describe different things. Interpretations differ, but here is our take: false information and fake news can be used in the same context. However, fake news are associated with political issues most of the time, whereas false information is more likely to be associated with other issues. Both spread misinformation by using a false or altered story. False news, on the other hand, are mostly the result of insufficient research. All fake news and false information are false news, but not the other way around. In any case, all of them mislead the reader.

The terms fake news and false information are not new, but they have clearly gained importance in recent years. By now, nearly everyone knows them.

Normally, news should come from trustworthy journalists and other publishers who work according to certain rules. But it is much easier to publish your own articles on the Internet, especially on social media, and you can even publish under an alias and thus gain reach. Based on their goals and sources, fake news can be classified into categories [7], for example:

● Clickbait

The creator uses an eye-catching image or title to draw attention to certain content, e.g. to generate clicks on pages with advertisements.

● Propaganda

Facts are invented, e.g. to create prejudices in the audience against the opposing political camp, against minorities or against ‘enemy’ countries or organisations.

● Satire

Fake news for entertainment purposes.

● Sloppy journalism

Not all fake news are created intentionally. Errors or inaccuracies in research can also lead to fake news.

● Misleading Headings

A specific detail is taken from a report and used as a headline. Without the surrounding context, however, this headline cannot be understood correctly and is therefore misleading.

● Biased News

Platforms tend to show users more of the content that their systems consider suitable for them. Fake news can exploit this, because in such areas users are more likely to believe false information if it comes from the ‘right’ side.

Sources and distribution of fake news

So there are different types of fake news. But where do they come from? And how do they spread on the Internet? There are several possibilities.

Fake news websites

Websites can be hosted by various services at very low cost. A domain sometimes costs as little as $1 per year, and storing the code on a server about $3 per year. Operators of ‘fake news websites’ take advantage of these low costs. They register a domain that would point to a trustworthy source if it were spelled exactly like the original (which it is not), so that the domain name resembles the real name of a news source. See below for an example. Next, the website itself usually looks like a typical news website: its layout and appearance imitate those of real news sites. The operators must also fill the page with content. Some write it themselves, but most copy posts from elsewhere to keep the process as simple and cheap as possible. Content with a ‘clickbait’ title is especially attractive. To make money with such a website, operators usually place ads, which typically generate revenue per click, so the operators earn a certain amount of money for each visitor. Because the website costs so little, this method can easily turn a large profit.

Finally, the owners have to spread the website, which is usually done via social media. Clickbait titles also help here to direct more people to the fake news website [3].

Here is an example of such a website, preserved as a snapshot in a web archive: the domain http://cbsnews.com.co was used for such a site during the 2016 US presidential election campaign [4]. At first glance, the domain appears to belong to CBS, a large (and trustworthy) radio and television network in the USA. However, the ending of the URL (.co) is the country-code top-level domain of Colombia.
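Lookalike domains like this one follow two simple patterns: the genuine name with an extra suffix appended, or the genuine name with a small misspelling. As a minimal sketch, assuming a hypothetical allow-list `TRUSTED_DOMAINS` and an arbitrary tolerance of two edits, both patterns can be flagged with a prefix check and the Levenshtein edit distance. This is an illustration of the idea, not a tool used by any platform.

```python
from typing import Optional

# Hypothetical allow-list of genuine news domains (illustrative only).
TRUSTED_DOMAINS = {"cbsnews.com", "nytimes.com", "washingtonpost.com"}


def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum number of single-character edits from a to b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def lookalike_of(hostname: str, max_distance: int = 2) -> Optional[str]:
    """Return the trusted domain that `hostname` seems to imitate, else None."""
    host = hostname.lower().rstrip(".")
    if host.startswith("www."):
        host = host[len("www."):]
    if host in TRUSTED_DOMAINS:
        return None  # exact match: the genuine site
    for trusted in sorted(TRUSTED_DOMAINS):
        # Pattern 1: genuine name plus an extra suffix, e.g. "cbsnews.com.co"
        if host.startswith(trusted + "."):
            return trusted
        # Pattern 2: a small misspelling, e.g. "cbsnew.com"
        if levenshtein(host, trusted) <= max_distance:
            return trusted
    return None


print(lookalike_of("cbsnews.com.co"))   # cbsnews.com
print(lookalike_of("www.cbsnews.com"))  # None
```

A real check would also need to handle legitimate subdomains and internationalized lookalike characters; the sketch deliberately ignores both.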

Operators of fake news sites hope for both financial profit and the manipulation of opinion. Such websites belong to the categories propaganda, clickbait and misleading headings.

Influencers

Influencers may spread fake news, intentionally or unintentionally. Whenever people share articles, news or information with many followers, the audience is multiplied. Followers may conclude that everything their ‘favourite influencer’ shares is correct and pass these bits and pieces on even further, which can lead to a chain reaction. Click numbers matter to influencers as well, so they may also use techniques such as clickbait [13].

Social Targeting

Social platforms analyze and track the interests of users in order to show them content they may find interesting because it matches their (political) views and attitudes. The same mechanism helps fake news spread: if a user shows interest in a certain topic, the platform suggests further posts from this area. In this way, the user quickly comes across clickbait content and thus potential fake news. This form of distribution belongs to the category biased news [14].
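The feedback loop behind social targeting can be illustrated with a toy recommender. The sketch below is a deliberate simplification, not any platform's actual ranking: it assumes a user profile that simply counts clicks per topic (the `Counter` named `interests`) and ranks candidate posts by that count. Because every click feeds back into the profile, the top topic keeps winning and other topics never get shown.

```python
from collections import Counter
from typing import List, Tuple

Post = Tuple[str, str]  # hypothetical (post_id, topic) pair


def recommend(interests: Counter, candidates: List[Post], k: int = 2) -> List[Post]:
    """Rank candidate posts by how often the user engaged with their topic."""
    # sorted() is stable, so posts with equal scores keep their original order
    ranked = sorted(candidates, key=lambda post: interests[post[1]], reverse=True)
    return ranked[:k]


def simulate(interests: Counter, candidates: List[Post], rounds: int) -> Counter:
    """Each round the user clicks everything shown, which updates the profile."""
    for _round in range(rounds):
        shown = recommend(interests, candidates)
        interests.update(topic for _, topic in shown)
    return interests


feed = [("p1", "sports"), ("p2", "politics"), ("p3", "cooking"), ("p4", "politics")]
profile = Counter({"politics": 2, "sports": 1})
print([post_id for post_id, _ in recommend(profile, feed)])  # ['p2', 'p4']
print(simulate(profile, feed, rounds=3))  # Counter({'politics': 8, 'sports': 1})
```

After three rounds the user has only been shown political posts; if those posts contain fake news from the ‘right’ side, the profile reinforces exactly that.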

Trolls

‘Trolls’ are real people on the Internet who use provocation to spread topics. These topics are meant to provoke reactions from other participants, who then pass the discussions or contents on to others. Trolls act in a controlled, targeted and harmful manner. They try to manipulate and damage the community on a platform, which can lead to conflicts within that community that can be exploited afterwards.

The trolls’ motivation can be fun, entertainment, but also revenge or the desire to do harm.

An example of this is a case in the USA. A troll accused a homepage editor at The Washington Post of photographing a politician’s notes during a confirmation hearing, although she had not even been there. All she noticed, a few hours later, was the flood of comments on social media. This shows how much damage a single troll can cause [30].

Social Bots

Social bots are not robots but small software programs that execute scripts to perform simple actions on social media while impersonating people. Their advantage is that they can act far faster than any human being, which can be used on social media platforms to carry out certain steps quickly. For example, a bot can search Twitter for all posts containing a keyword and like and comment on each of them. This would be extremely time-consuming for human users; bots do it in seconds. With such a method, bots can amplify opinions and stimulate discussions. If a bot has a “positive” attitude towards a topic, it can, for example, criticize people who have expressed opposing opinions in a post.

There are many instructions for creating social bots online. It is estimated that bots cause half of all web traffic worldwide; on Twitter alone, the estimated share of bots is about 15%.

Using such strategies, bots can try to manipulate opinions on platforms. Although discussions with bots alone are not very profound, bots are said to influence elections and military conflicts. Because of their speed, they can also set trends and spread fake news [5].

A study by the University of British Columbia shows how easily social bots can mingle with people. For the study, 120 social bots were created on Facebook. In the first round, these bots sent random friend requests, and 20% of the recipients accepted them. In a second round, the bots sent friend requests to friends of the people from round 1, and 60% of these requests were accepted. This allowed the bots to collect a lot of data, such as email and postal addresses, within a short time.

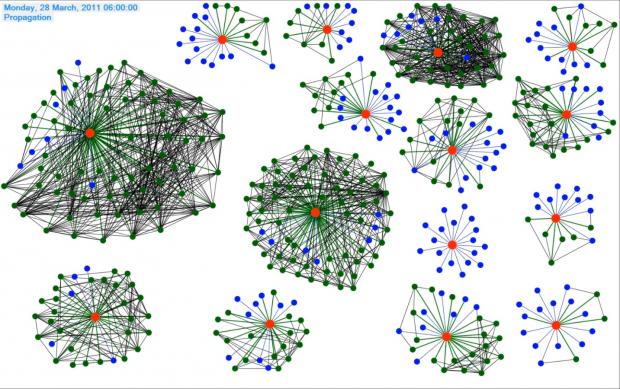

Overview of the contacts of social bots from the University of British Columbia study [6]

Above, you can see an overview of some social bots and their friends. Each red dot represents a social bot, blue dots are friends gained in the first round and green dots friends gained in the second round. Each line represents a friendship. This illustrates once again how easily bots can build a network of friends on social media [6].

As already mentioned, social bots are usually not very complex. However, this may change: as artificial intelligence develops further, it will most likely be used more and more in social bots [21].

The fight against fake news

Having explained where fake news come from, this section deals with the fight against them.

By the end user

Users can check for themselves which news are possibly fake. They can choose between several options.

● Fact check

By now, several websites specialize in detecting and explaining incorrect articles and news. One example is Correctiv, a German non-profit newsroom founded in 2014. If users come across a questionable article or post, they can search there for a suitable explanation. The drawback is that these websites react with a delay, so users may have to wait before a clarification is available. If an article is not yet covered on Correctiv, for example, is it real news? Or has the fake news simply not been discovered or clarified yet [15]?

● Reverse Search / Check the Sources

Users can use a search engine to verify the content. A reverse image search for pictures from a suspected fake news article can be especially revealing. If an article cites sources, these can be checked as well. If no sources are given, the article does not necessarily contain fake news, but the reader should take everything with a grain of salt [31].

● Recognizing Social Bots

To identify social bots, users can, for example, look at the number of tweets an account has posted: bots often have substantially more tweets than normal users. Their profile pictures can often be checked with a reverse image search as well. Finally, users can check the content of the posts. If they are always similar and only about a certain topic, it is quite possible that the account is a social bot [5].

● Browser-Plugin

There are some browser plugins that examine articles. However, few reviews of them exist, so their accuracy is unclear. These plugins also have problems with articles in languages other than English. In addition, users have to consider the privacy policy: many browser plugins store the user's browsing history in order to resell this data, which can eventually lead to social targeting again [11].
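The ‘Reverse Search’ tip above relies on recognizing the same picture even after it has been resized, recompressed or slightly edited. One common building block for this is a perceptual hash. The following is a minimal sketch of a simple ‘average hash’, assuming a grayscale image given as a list of pixel rows whose dimensions are divisible by 8; it is not how any particular search engine works, and all names are hypothetical. Similar images produce hashes with a small Hamming distance.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0 (black) to 255 (white)


def average_hash(pixels: Image, size: int = 8) -> int:
    """Shrink the image to size x size blocks and set one bit per block:
    1 if the block is brighter than the mean of all blocks, else 0."""
    height, width = len(pixels), len(pixels[0])
    if height % size or width % size:
        raise ValueError("this sketch assumes dimensions divisible by size")
    bh, bw = height // size, width // size
    blocks = []
    for by in range(size):
        for bx in range(size):
            total = sum(
                pixels[y][x]
                for y in range(by * bh, (by + 1) * bh)
                for x in range(bx * bw, (bx + 1) * bw)
            )
            blocks.append(total / (bh * bw))
    mean = sum(blocks) / len(blocks)
    bits = 0
    for value in blocks:
        bits = (bits << 1) | int(value > mean)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; a small distance suggests the same picture."""
    return bin(a ^ b).count("1")


# A 16x16 test gradient, a brightened copy and an inverted copy.
original = [[x * 16 for x in range(16)] for _ in range(16)]
brighter = [[p + 10 for p in row] for row in original]
inverted = [[255 - p for p in row] for row in original]

print(hamming(average_hash(original), average_hash(brighter)))  # 0
print(hamming(average_hash(original), average_hash(inverted)))  # 64
```

Brightening leaves the hash unchanged, while inverting flips every bit, which is why such hashes can find reused photos despite minor edits.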

All in all, users often have to take the initiative themselves and question and check every source and every article. Since this is time-consuming and impractical, it is unlikely that many users will do so.
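The warning signs listed under ‘Recognizing Social Bots’ can be combined into a simple checklist. The sketch below assumes a simplified, hypothetical account record and made-up thresholds (more than 50 tweets per day, at least 80% of posts on one topic); real bot detection uses many more features and is far less clear-cut.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class Account:
    """Hypothetical, simplified view of a public profile."""
    tweet_count: int
    age_days: int
    picture_found_elsewhere: bool  # e.g. result of a reverse image search
    post_topics: List[str] = field(default_factory=list)


def bot_indicators(acc: Account,
                   max_tweets_per_day: float = 50.0,
                   max_topic_share: float = 0.8) -> List[str]:
    """Collect the warning signs described in the text; thresholds are assumed."""
    reasons = []
    rate = acc.tweet_count / max(acc.age_days, 1)
    if rate > max_tweets_per_day:
        reasons.append(f"unusually active: {rate:.0f} tweets per day")
    if acc.picture_found_elsewhere:
        reasons.append("profile picture also appears elsewhere")
    if acc.post_topics:
        top_count = Counter(acc.post_topics).most_common(1)[0][1]
        if top_count / len(acc.post_topics) >= max_topic_share:
            reasons.append("posts revolve around a single topic")
    return reasons


suspect = Account(36_500, 365, True, ["election"] * 9 + ["sports"])
print(bot_indicators(suspect))
```

Returning reasons instead of a yes/no verdict fits the text's point that these signs only make a bot ‘quite possible’.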

Through the platforms

Social media platforms have long been aware of the problem of fake news, and each of them offers its own countermeasures.

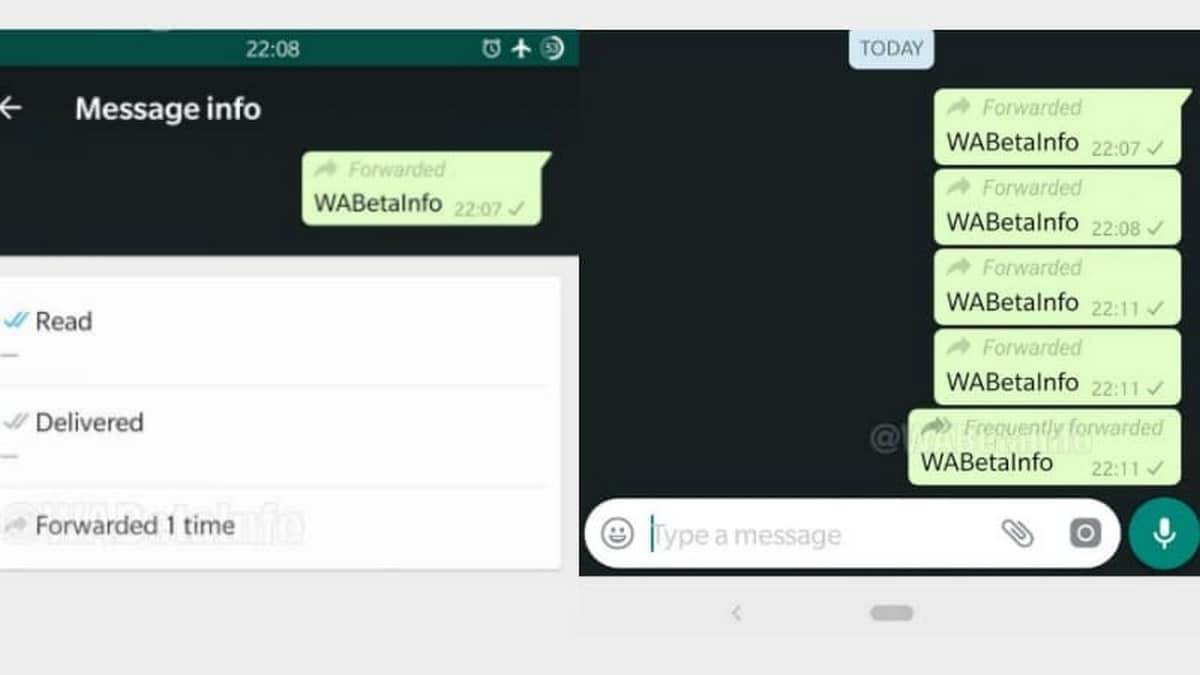

Display of frequently forwarded messages in WhatsApp [18]

In WhatsApp, messages that users forward frequently are highlighted as such, which helps users identify such messages and handle them with care. Because WhatsApp uses end-to-end (E2E) encryption, the platform cannot read the content and therefore cannot block fake news messages. What can be stored, however, is the fact that a message was forwarded, as ‘meta-information’.
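How a label can work without reading encrypted content can be sketched with a counter kept next to the ciphertext. The sketch below is an assumption for illustration, not WhatsApp's actual implementation: the names `Message`, `forward` and `label`, the label texts and the threshold of five forwards are hypothetical. Forwarding copies the encrypted body unchanged and only increments the counter, and the label is chosen from the counter alone.

```python
from dataclasses import dataclass, replace

# Assumed threshold for illustration; the real rule is WhatsApp's own.
MANY_TIMES_THRESHOLD = 5


@dataclass(frozen=True)
class Message:
    ciphertext: bytes       # end-to-end encrypted body, opaque to the platform
    forward_count: int = 0  # meta-information kept alongside the body


def forward(msg: Message) -> Message:
    """Forwarding copies the encrypted body unchanged and bumps the counter."""
    return replace(msg, forward_count=msg.forward_count + 1)


def label(msg: Message) -> str:
    """Choose a label from the counter alone, without reading the content."""
    if msg.forward_count >= MANY_TIMES_THRESHOLD:
        return "Forwarded many times"
    if msg.forward_count > 0:
        return "Forwarded"
    return ""


msg = Message(ciphertext=b"\x8f\x3a\x91")
for _ in range(5):
    msg = forward(msg)
print(label(msg), msg.ciphertext == b"\x8f\x3a\x91")  # Forwarded many times True
```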

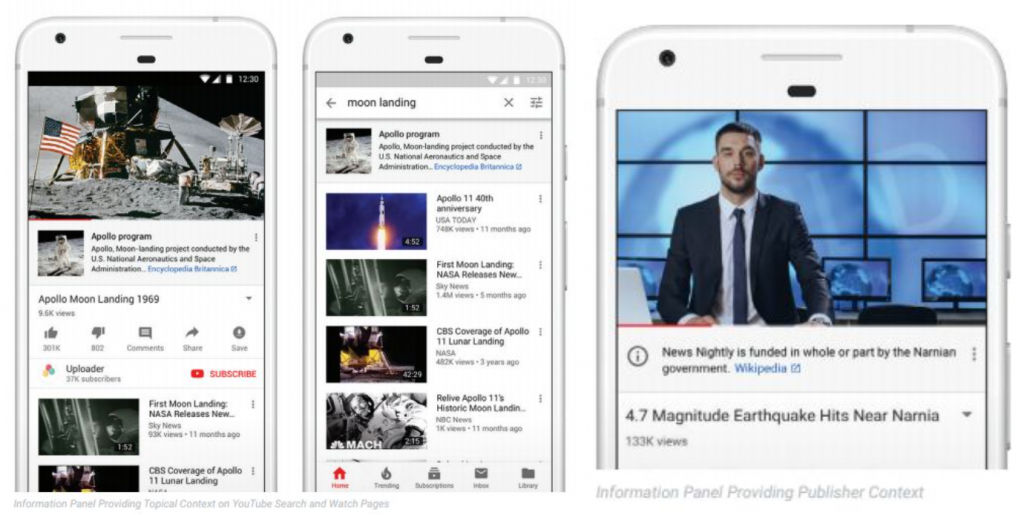

YouTube: Give Users more Context

Display on YouTube to provide more context to the user; left: moon landing; right: note about the channel of the video

For certain topics, YouTube shows a banner under videos so that users can find out more about the subject, together with a link to further information. Sometimes, there is also information about the channel that published the video.

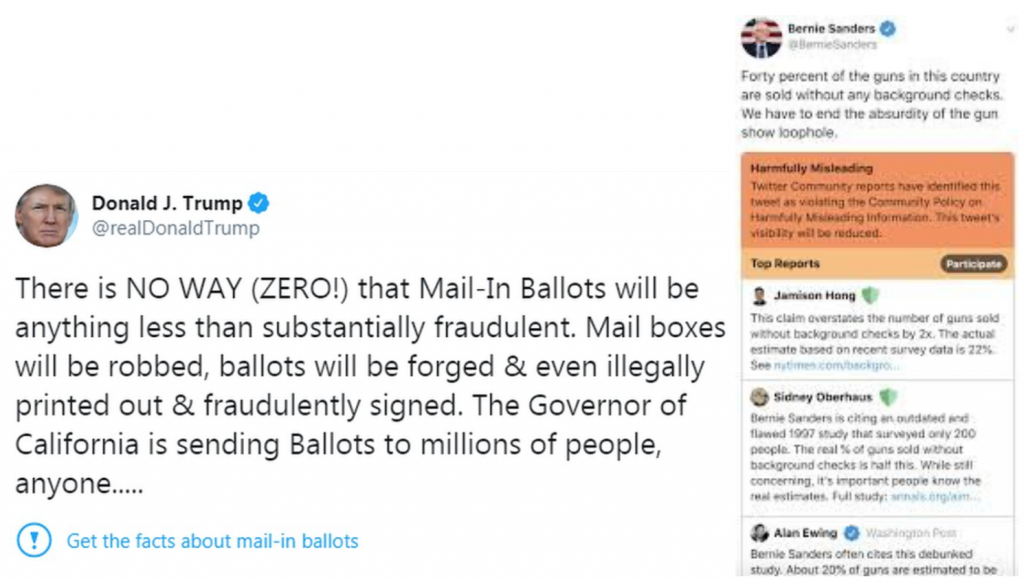

Twitter adds a note below questionable posts. In more recent cases, such posts have also been deleted.

Notes on Twitter about posts with possible fake news; left: Hints on how to collect more information on the topic; right: warning about possible fake news

Instagram works with third-party fact-checkers to verify the authenticity of images and content [19].

Display of Fake News Posts on Instagram [19]

Social media platforms have recently started to take action. They give users hints that help them recognize whether certain content is questionable. For details, users still have to do their own research and form their own opinion, but this is easier than with the measures presented in the section ‘By the end user’.

Fake news vs. Freedom of Speech

Fighting fake news is definitely important. That is why social media platforms not only offer hints but in some cases also delete questionable content. At this point, freedom of speech comes into play. It is difficult to draw a clear line between freedom of speech and fake news, especially when the content does not consist of clear, fact-based claims.

Because the two are so difficult to distinguish, laws against fake news are not welcomed by everyone, especially laws that make platforms responsible. A platform that fears consequences such as fines or lawsuits will tend to block more content than necessary and may thus restrict freedom of speech.

For these reasons, some people are completely against punishing fake news. They argue that one must be able to tolerate fake news, because freedom of speech is much more important [27].

One example: YouTube blocked a video of a press conference in which two doctors spoke very critically about the coronavirus measures. Among other things, they considered the initial measures taken by the American government to be correct, but argued that it was important to return to normal everyday life because the risks of isolation were higher. YouTube stated that the video violated its guidelines because it disputed local health authorities' guidance on social distancing [26].

Facebook, on the other hand, did not delete the video. This shows that different platforms draw the line in different places [28].

Through Artificial Intelligence

There is another approach to fighting fake news: artificial intelligence. However, there are hurdles.

Finding fake news – What makes it special?

It looks like a simple question. But what is the difference between false information and fake news? Is an article simply false or misleading? Has the author misinterpreted the content of a source? Is the content ironic or satirical? All these questions are crucial for identifying fake news and classifying articles into the categories mentioned above.

Training an artificial intelligence is not easy either. Many articles can be interpreted in different ways, and to obtain a proper training dataset, developers have to read and review a large number of articles. The process is tedious and often does not produce good results. Here, the second approach may be better [20].

Finding real news

This approach makes it much easier for developers to assemble datasets for training, and the categories are simpler: real and not real. Non-real data include fake news, satire, irony, etc., i.e. everything that cannot be interpreted as traditional news.

With this approach, the AI learns to recognize content that you would find on real news sites. One example of such an AI is Fakebox [22], an application that can run locally on your own computer. You can feed articles into it, which are then analyzed. According to the author, the AI achieved an accuracy of 95% in tests. So maybe this approach is the way to detect fake news in the future [20].
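The ‘real vs. not real’ idea is an ordinary two-class text classification problem. As a minimal sketch, here is a multinomial naive Bayes classifier with add-one smoothing, a standard textbook technique; it is not Fakebox's method, which we do not know, and the four training sentences are invented examples, far too few for any real use.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z']+", text.lower())


class RealNewsClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: Dict[str, Counter] = {}
        self.doc_counts: Counter = Counter()

    def train(self, text: str, label: str) -> None:
        self.word_counts.setdefault(label, Counter()).update(tokenize(text))
        self.doc_counts[label] += 1

    def predict(self, text: str) -> str:
        vocab = set().union(*self.word_counts.values())
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            # log prior + sum of log likelihoods (logs avoid float underflow)
            score = math.log(self.doc_counts[label] / total_docs)
            denominator = sum(counts.values()) + len(vocab)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / denominator)
            scores[label] = score
        return max(scores, key=scores.get)


clf = RealNewsClassifier()
clf.train("The ministry published the official unemployment figures on Monday", "real")
clf.train("The central bank raised interest rates by a quarter point, officials said", "real")
clf.train("You won't believe this shocking secret doctors hate", "not_real")
clf.train("Shocking miracle cure they don't want you to know", "not_real")

print(clf.predict("Officials said the ministry published new figures"))  # real
print(clf.predict("Shocking secret cure doctors hate"))  # not_real
```

Everything that is not ‘real’ (fake news, satire, irony) lands in one class, which is exactly what makes labeling the training data easier.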

Impact

Fake news can have an impact on any area of society, whether personal, economic or political.

On a personal level, fake news can damage someone's reputation (see the troll example above). Fake news are also used as bait for fraudulent websites, where attackers try to obtain personal data or credit card information.

Politically, there is much discussion about the impact of fake news on the 2016 US presidential election. Researchers have found that more than 40% of Americans visited untrustworthy websites and that more than 5% of news articles were false. Still, how much the election was actually influenced remains controversial [8]. There is also speculation that Russian trolls tried to demoralize certain groups of voters by spreading fake news. For example, African Americans were shown articles claiming that Hillary Clinton had no interest in African American rights. The goal was to make them boycott the election [9].

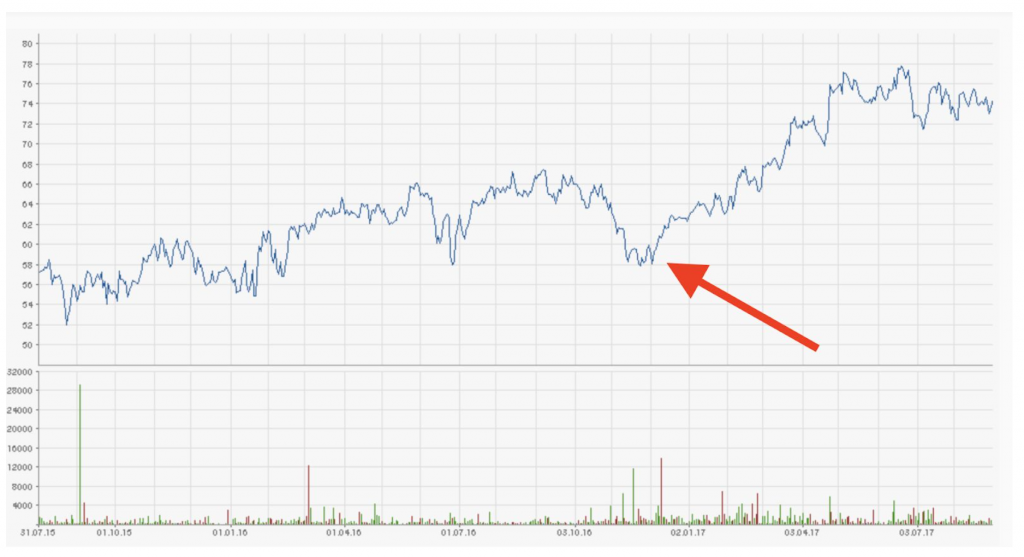

Fake news can also cause economic damage. In 2016, a fake press release was published in the name of the international construction company Vinci. It caused the company’s share price to fall by almost 20% within a short period of time. The share price recovered quickly because the company reacted very quickly. Nevertheless, this shows what a ‘simple’ false message can do [10].

Vinci share price from August 2015 to August 2017. [25]

Algorithms can also be manipulated by fake news. For example, negative rumors about a company could be spread and then picked up by a trading algorithm, which analyzes such news in order to make or recommend decisions. If fake news are spread systematically, the algorithm will probably arrive at wrong results.
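To see why systematic spreading matters, consider a deliberately naive news-driven signal. The sketch below assumes hypothetical keyword lists and a simple averaging rule; real trading systems use far richer models, but the weakness is the same: the algorithm cannot tell a planted headline from a genuine one, so enough repetitions flip its recommendation.

```python
import re
from typing import List

# Hypothetical keyword lists; real systems use far richer sentiment models.
POSITIVE = {"growth", "record", "profit", "contract", "upgrade"}
NEGATIVE = {"fraud", "scandal", "bankrupt", "losses", "investigation"}


def headline_score(headline: str) -> int:
    """+1 per positive keyword, -1 per negative keyword in the headline."""
    words = set(re.findall(r"[a-z]+", headline.lower()))
    return len(words & POSITIVE) - len(words & NEGATIVE)


def trading_signal(headlines: List[str], band: float = 0.5) -> str:
    """Average the headline scores and map them to a naive recommendation."""
    if not headlines:
        return "hold"
    average = sum(headline_score(h) for h in headlines) / len(headlines)
    if average > band:
        return "buy"
    if average < -band:
        return "sell"
    return "hold"


genuine = [
    "Company reports record profit",
    "New contract signed abroad",
    "Analysts discuss quarterly results",
]
planted = ["Company hit by fraud scandal"] * 6  # systematically spread fake news

print(trading_signal(genuine))            # buy
print(trading_signal(genuine + planted))  # sell
```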

The coronavirus pandemic is also a breeding ground for fake news. In Iran, at least 300 people are said to have died from drinking methanol after a fake news article claimed that drinking alcohol helps against the coronavirus. Since alcohol is forbidden in Iran, many people bought illegally produced alcohol, which can contain toxic methanol. In some families, even children were made to drink it, which can lead to serious health problems [12].

Information warfare

Fake news can be used as a weapon in information warfare. Ethically, information warfare can be put on the same level as ‘normal’ war, because here, too, people can be harmed, whether by damage to their reputation or by the failure of important systems hit by hacking attacks. Anyone using such methods is clearly trying to harm the other party.

What is information warfare?

One definition of information warfare is: ‘… a concept involving the battlespace use and management of information and communication technology in pursuit of a competitive advantage over an opponent. …’ [16].

In this kind of warfare, then, information and communication are used to weaken or harm a competitor. However, the concept can also be applied to any other situation in which two parties face each other in a competitive environment where relevant information exists.

To make the components of information warfare somewhat clearer, we explain its different areas [17]. There are five categories:

● Information Collection

The more information a party has, the better it can assess the situation, which is likely to lead to a better outcome. The corresponding countermeasure is to give the counterpart as little information as possible, which limits their options.

● Information Transport

A comprehensive collection of information is valuable, but it is useless if it does not reach its destination. Therefore, an intact infrastructure is needed, and it must be protected.

● Information Protection

This has two parts: on the one hand, you have to keep the amount of information your opponent receives as low as possible; on the other hand, you have to protect your own information from the other side. Both physical protection and protection during transmission are important.

● Information Manipulation

This is mainly where fake news come in. By manipulating information, the opponent can be lulled into a false sense of security. For this strategy to succeed, you first have to obtain information and then circulate it again in an altered but plausible form.

● Information Disturbance, Degradation, and Denial

This last point is, in a way, the counterpart to Information Collection. The goal is to keep the opponent's information collection as small and incomplete as possible, e.g. by disturbing their communication and thus reducing its quality.

Information warfare is described as a combination of electronic warfare, cyber warfare and psychological operations, a view that places information warfare at the center of all future warfare between countries or alliances. Electronic warfare is about disrupting electronic connections. Cyber warfare, or more precisely cyberattacks, targets IT infrastructure. Psychological operations take place on the psychological level, e.g. to lower the morale of the opposing nation through fake news or propaganda [23].

Is there information warfare already?

Because definitions differ, it is not possible to say clearly whether information warfare is already being practiced. Still, military experts suspect that both China and Russia attack their neighbours with methods from electronic warfare, cyber warfare and psychological operations. The terrorist militia Islamic State is also active in this area, e.g. through manipulation on social media [23].

However, this type of warfare is much more inconspicuous and anonymous: cyberattacks cannot always be traced, and fake news will probably not always be uncovered. Yet, since fake news and the manipulation of data in general are part of information warfare, one can say that its methods are already more widely used than one might suspect at first.

Summary

Do fake news pose a security risk to society? In a way, yes. Fake news exploit gullible humans as the weak point in the system, so users must scrutinize any content they read. The major social platforms offer some help. But despite all source-checking, it is unlikely that fake news will become irrelevant. A former fake news writer puts it this way: ‘Nothing will change until readers actively change their reading behavior online — by refusing to read or share news by disreputable sites or by paying for quality editorial that doesn’t rely on advertising. We all need to think critically about what we are reading. We need to think for ourselves.’ [29]

Artificial intelligence also promises changes in the future. AI can be used for defense and detection, but attackers can also use it to create and spread fake news.

It is therefore crucial that readers become more attentive and develop a feel for fake news before it has a negative effect on society. It is safe to assume that in the future, fake news will take many more forms to influence public opinion. And who knows, maybe we have already reached that point…

References

[1]: https://trends.google.de/trends/explore?date=all&q=fake%20news (Accessed 12.08.2020)

[2]: https://journolink.com/resource/319-fake-news-statistics-2019-uk-worldwide-data (Accessed 12.08.2020)

[3]: https://www.cits.ucsb.edu/fake-news/where (Accessed 12.08.2020)

[4]: https://web.archive.org/web/20161204232524/http://cbsnews.com.co/ (Accessed 12.08.2020)

[5]: https://www.youtube.com/watch?v=G0skVFvn5sk (Accessed 12.08.2020)

[6]: https://www.ece.ubc.ca/news/201112/socialbots-infiltrating-your-social-networking-sites (Accessed 12.08.2020)

[7]: https://www.webwise.ie/teachers/what-is-fake-news (Accessed 12.08.2020)

[8]: https://www.faz.net/aktuell/feuilleton/medien/fake-news-bei-us-wahl-2016-nicht-entscheidend-16663536.html (Accessed 12.08.2020)

[9]: https://www.t-online.de/nachrichten/ausland/id_84961716/wahl-manipulation-durch-russland-zwei-studien-zeigen-wie-trump-gewann.html (Accessed 12.08.2020)

[10]: https://www.complianceweek.com/the-vinci-code-fake-news-press-releases/9877.article (Accessed 12.08.2020)

[11]: https://tlkh.github.io/fake-news-chrome-extension/ (Accessed 12.08.2020)

[12]: https://www.ibtimes.sg/coronavirus-poisoning-kills-300-iran-after-people-believe-fake-news-drink-methanol-based-alcohol-41843 (Accessed 12.08.2020)

[13]: https://engineering.stanford.edu/magazine/article/how-fake-news-spreads-real-virus (Accessed 12.08.2020)

[14]: https://www.onlinemarketing-praxis.de/glossar/social-targeting-social-media-targeting (Accessed 12.08.2020)

[15]: https://correctiv.org/faktencheck/ (Accessed 12.08.2020)

[16]: https://en.wikipedia.org/wiki/Information_warfare (Accessed 12.08.2020)

[17]: https://www.cs.cmu.edu/~burnsm/InfoWarfare.html (Accessed 12.08.2020)

[18]: https://gadgets.ndtv.com/apps/news/whatsapp-rolling-out-frequently-forwarded-label-in-india-2079471 (Accessed 12.08.2020)

[19]: https://about.fb.com/news/2019/12/combatting-misinformation-on-instagram/ (Accessed 12.08.2020)

[20]: https://towardsdatascience.com/i-trained-fake-news-detection-ai-with-95-accuracy-and-almost-went-crazy-d10589aa57c (Accessed 12.08.2020)

[21]: https://blog.mi.hdm-stuttgart.de/index.php/2019/09/19/social-bots-an-attack-on-democracy/#more-9013 (Accessed 12.08.2020)

[22]: https://machinebox.io/docs/fakebox?utm_source=medium&utm_medium=post&utm_campaign=fakenewspost (Accessed 12.08.2020)

[23]: https://www.weforum.org/agenda/2015/12/what-is-information-warfare/ (Accessed 12.08.2020)

[24]: https://www.baks.bund.de/sites/baks010/files/arbeitspapier_sicherheitspolitik_2017_24.pdf (Accessed 12.08.2020)

[25]: https://www.finanzen.net/chart/vinci (Accessed 12.08.2020)

[26]: https://www.turnto23.com/news/coronavirus/video-interview-with-dr-dan-erickson-and-dr-artin-massihi-taken-down-from-youtube (Accessed 12.08.2020)

[27]: https://www.faz.net/aktuell/politik/inland/meinungsfreiheit-fake-news-muss-man-aushalten-koennen-16683413.html (Accessed 12.08.2020)

[28]: https://www.nbcnews.com/tech/tech-news/youtube-facebook-split-removal-doctors-viral-coronavirus-videos-n1195276 (Accessed 12.08.2020)

[29]: https://medium.com/s/story/confessions-of-a-fake-news-writer-62d8c3d28c1b (Accessed 13.08.2020)

[30]: https://medium.com/thewashingtonpost/what-its-like-to-be-at-the-center-of-a-fake-news-conspiracy-theory-d344c06f937f (Accessed 12.08.2020)

[31]: https://medium.com/@timoreilly/how-i-detect-fake-news-ebe455d9d4a7 (Accessed 12.08.2020)
