This post is about specific performance issues of the Web Audio API, especially its AudioNodes. It also briefly explains what this API was developed for and what you can do with it. Finally, it mentions a few tips and tricks to improve the performance of the Web Audio API.

This Article Contains

- What is the Web Audio API?

- The different implementations

- Performance relevant AudioNodes

- Tips and tricks

- Conclusion

What Is The Web Audio API?

The Web Audio API is an interface for creating and editing audio signals in web applications. It is a JavaScript API, while browsers implement the actual signal processing natively. The standard is developed by the W3C Audio Working Group. The Web Audio API is particularly suitable for interactive applications with audio.

Before Web Audio API

Of course, sound could also be played in browsers before the Web Audio API. However, this was not so simple. Two possibilities are mentioned below, each of which was revolutionary when it was introduced.

Flash Player

Adobe Flash was a platform for programming as well as displaying multimedia and interactive content. Flash enabled vector graphics, raster graphics and video clips to be displayed, animated and manipulated. It supported bidirectional streaming of audio and video content. Since version 11 it also allowed the display of 3D content.

Macromedia released Flash 1 at the end of 1996, together with the Shockwave Flash Player, which already made it possible to integrate audio. Flash 2 and an extended Shockwave Flash Player followed in 1997. They gave developers new actions with which simple interactions could be realised.

Content for Flash Player was programmed in the object-oriented scripting language ActionScript. User input could be processed via mouse, keyboard, microphone and camera.

Adobe stopped distributing and updating Flash Player on 31 December 2020.

Developing for Flash Player was very time-consuming, and the plug-in was widely considered slow and insecure.

HTML5 Audio

The W3C published the finished HTML5 specification in 2014, and HTML5 became the core language of the web. It offers new features such as video, audio, local storage, and dynamic 2D and 3D graphics, which previously could only be implemented with additional plug-ins such as Flash Player.

For embedding audio and video data, HTML5 defines the audio and video elements. Since no format was defined that had to be supported as a minimum, for a long time there was no format that all browsers supported. A major issue was the licensing fees for various formats, such as the H.264 video codec. Since internet streaming of H.264 content is expected to remain free of licensing fees in the long term, this format is now supported by all modern browsers.

Most browsers render the audio element as a small player that often offers play, pause, fast forward, rewind and volume adjustment. However, basic functions of a modern digital audio workstation (DAW), such as mixing several tracks or applying effects, remain largely impossible with it.

How Does The Web Audio API Work?

The Web Audio API enables various audio operations and allows modular routing. Basic audio operations are performed with AudioNodes, which are connected to each other to form an audio routing graph. It thus follows a widely used scheme that is also found in DAWs and on analogue mixing consoles.

Image by TheArkow on Pixabay

AudioNodes are linked via their inputs and outputs to form chains and simple paths; the resulting network of nodes is called a graph. A graph typically starts with a source, which can be an audio file, an audio stream, a video file or an oscillator (there is even a dedicated OscillatorNode for this, but more on that later). The sources provide samples with audio information; depending on the sample rate, there are tens of thousands of them per second.
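To put these numbers in perspective: by default, the Web Audio API renders audio in blocks ("render quanta") of 128 sample frames, so the sample rate determines how many blocks the audio thread must produce per second and how much time each block may take. The helper functions below are hypothetical illustrations of that arithmetic; only the 128-frame block size comes from the specification.

```javascript
// Default block size of the Web Audio API rendering loop, in sample frames.
const RENDER_QUANTUM_FRAMES = 128;

// Hypothetical helper: how many blocks the audio thread must render per second.
function quantaPerSecond(sampleRate) {
  return sampleRate / RENDER_QUANTUM_FRAMES;
}

// Hypothetical helper: time available for rendering one block, in milliseconds.
function budgetPerQuantumMs(sampleRate) {
  return (RENDER_QUANTUM_FRAMES / sampleRate) * 1000;
}

for (const rate of [44100, 48000]) {
  console.log(`${rate} Hz: ${quantaPerSecond(rate).toFixed(1)} blocks/s, ` +
    `${budgetPerQuantumMs(rate).toFixed(2)} ms per block`);
}
// 44100 Hz: 344.5 blocks/s, 2.90 ms per block
// 48000 Hz: 375.0 blocks/s, 2.67 ms per block
```

If rendering the whole graph for one block takes longer than this budget, the output underruns and you hear dropouts, which is why the expensive nodes described below matter.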

The outputs of the nodes can be linked to the inputs of other nodes. In this way, the samples can also be routed into several channels and processed independently of each other and later reassembled. Each node can change the signal with mathematical operations. To make a signal louder, for example, it is simply necessary to multiply the signal value by another value.
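The volume example can be written out directly. The function below is a hypothetical sketch of the per-sample arithmetic behind a gain stage; in practice, the built-in GainNode does this for you in native code.

```javascript
// Hypothetical gain stage: multiply every input sample by `gain` and write
// the result into a preallocated output buffer of the same length.
// A factor above 1 makes the signal louder, a factor below 1 quieter.
function applyGain(input, output, gain) {
  for (let i = 0; i < input.length; i++) {
    output[i] = input[i] * gain;
  }
  return output;
}

const input = Float32Array.from([0.5, -0.25, 0.125]);
const output = new Float32Array(input.length);
console.log(Array.from(applyGain(input, output, 2)).join(", ")); // 1, -0.5, 0.25
```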

Finally, the last node is connected to AudioContext.destination, which sends the sound to the speakers or headphones of the end device. This connection is optional: you can leave it out if a signal only needs to be displayed visually and does not need to be heard at all.
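A minimal graph following this pattern might look like the sketch below. The function name and default values are hypothetical; createOscillator(), createGain(), connect() and destination are standard Web Audio API members. The function only wires up nodes, so it works with an AudioContext as well as an OfflineAudioContext.

```javascript
// Build a minimal audio routing graph:
// OscillatorNode (source) -> GainNode (volume) -> destination (speakers).
function buildToneGraph(ctx, { frequency = 440, volume = 0.2 } = {}) {
  const osc = ctx.createOscillator();
  osc.frequency.value = frequency; // pitch in Hz
  const gain = ctx.createGain();
  gain.gain.value = volume; // every sample is multiplied by this factor
  // connect() returns the node it connected to, so calls can be chained.
  osc.connect(gain).connect(ctx.destination);
  return { osc, gain };
}

// Usage in a browser (most browsers only start audio after a user gesture):
// const ctx = new AudioContext();
// const { osc } = buildToneGraph(ctx);
// osc.start();
```

Leaving out the final connect(ctx.destination) keeps the graph silent, for example when the signal only feeds a visualisation.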

This structure makes it possible to play back sound from streams or files in browsers and to create sound in real time. The sound can also be edited interactively in real time, from changing the volume and applying various filters to creating realistic spatial effects such as the Doppler effect, reverb, and acoustic positioning and movement relative to the listener. The signal processing is mainly done by the browser's native implementation of the API, but custom processing in JavaScript is also possible.

The AudioWorklet

The Web Audio API thus enables interactivity and complex audio processing. When it was introduced, it already fulfilled many requirements and was well suited for many use cases. One point of criticism, however, was its limited extensibility for developers. As mentioned above, the API let developers run their own JavaScript code via the ScriptProcessorNode, but this was perceived as insufficient: the code ran on the main thread, where it competed with everything else the page was doing, which led to latency and audible glitches.

Therefore, the W3C Audio Working Group developed the AudioWorklet, which supports sample-accurate audio manipulation in JavaScript without compromising performance and stability. Its code runs on the audio rendering thread rather than the main thread.

The first design of the AudioWorklet interface was presented in an API specification in 2014. The first implementation was published in the Chrome browser at the beginning of 2018. Especially for the computer music community, this opened up many new options. Thus, the AudioWorklet is considered a bridge between conventional music software and the web platform.

The Different Implementations

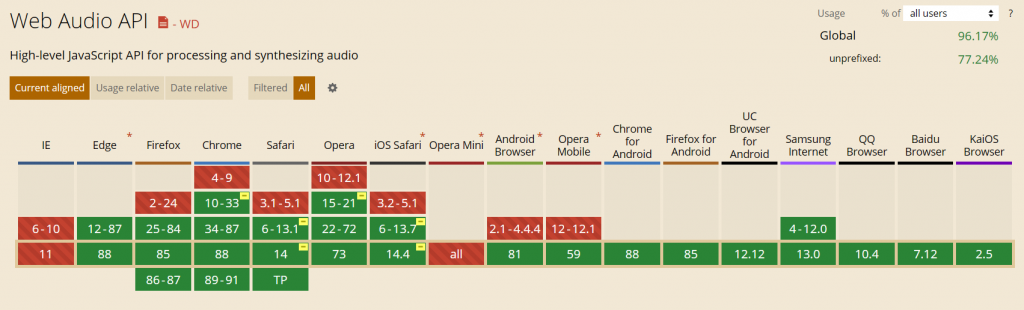

The Web Audio API is supported by all modern browsers. These include Mozilla Firefox, Google Chrome, Microsoft Edge, Opera and Safari. Most mobile browsers also support the API.

Taken from Can I Use… February 2021

The performance of the Web Audio API differs between browsers. Four different Web Audio API implementations have existed in browsers:

- WebKit: Chrome and Safari used to share the same code here.

- Blink: When Blink was forked from WebKit, a separate implementation of the API was also developed.

- Gecko: The implementation in Gecko was developed from scratch and differs in its philosophy from the others to some extent.

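- Edge: The source code of the original, EdgeHTML-based Edge is not public. Since 2020, Edge has been based on Chromium and therefore uses the Blink implementation.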

One difference is how tabs are distributed across processes. Gecko long ran all tabs and the browser's own interface (the "chrome") in a single process, while the other browsers used several processes. This mainly affects responsiveness when Gecko is still busy in the background with a web application that uses the Web Audio API. Mozilla has been working on this problem. The other engines, as mentioned, use multiple processes, which spreads the load and makes delays less likely.

Another difference is the implementation of the AudioNodes, which is explained below. In general, Gecko often placed more emphasis on quality, while the other engines focused on performance.

Performance Relevant AudioNodes

The Web Audio API offers many AudioNodes for different purposes. The following sections describe the AudioNodes that can have a particularly strong influence on performance, in terms of both CPU load and memory. If you run into performance problems with the Web Audio API, check these AudioNodes first, and avoid them where they are not strictly necessary because of their cost.

AnalyserNode

With the AnalyserNode, you can read out analysis information in the frequency and time domain in real time. This AudioNode does not influence the signal but analyses the signals and can forward the generated data. This can be used to create visualisations, for example.

This AudioNode can provide information about the frequency content of the signal, computed with a Fast Fourier Transform (FFT). The Fourier transform is computationally intensive: the more samples are analysed at once (the larger the FFT size), the more expensive the process becomes.

The fast Fourier transform algorithms use internal memory for processing. Different platforms and browsers have different algorithms, so it is not possible to make an exact statement about the memory requirements of this node.
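One way to keep the analysis cost down is to choose a small FFT size and to reuse the output buffer instead of allocating a new one on every frame. The helper name below is hypothetical; createAnalyser(), fftSize, frequencyBinCount and getFloatFrequencyData() are part of the Web Audio API.

```javascript
// Hypothetical helper: set up an AnalyserNode for a spectrum display.
// fftSize must be a power of two between 32 and 32768; smaller means less work.
function createSpectrumReader(ctx, source, fftSize = 256) {
  const analyser = ctx.createAnalyser();
  analyser.fftSize = fftSize;
  source.connect(analyser);
  // Allocate the result buffer once (frequencyBinCount is fftSize / 2)
  // and reuse it for every reading instead of creating a new array.
  const bins = new Float32Array(analyser.frequencyBinCount);
  return function readSpectrum() {
    analyser.getFloatFrequencyData(bins); // fills `bins` with values in dB
    return bins;
  };
}

// Usage in a browser:
// const read = createSpectrumReader(ctx, osc);
// function draw() { const spectrum = read(); /* render */ requestAnimationFrame(draw); }
```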

PannerNode

The PannerNode makes it possible to position an audio source in space and to adjust its position in real time. The source is described by a position, an orientation and a directional cone, and the node computes the resulting output in real time. The output of the PannerNode is always stereo. There are two modes ("equalpower" and "HRTF") in which the PannerNode can be used; the HRTF mode in particular is performance-critical.

It is so performance-critical because it calculates a convolution: the input data is convolved with impulse responses derived from head-related transfer functions (HRTFs), which model how sound from a given direction reaches each ear. This technique is well established in other areas of audio processing, but the PannerNode makes it available in the browser. When the position of the audio source changes, the node additionally interpolates between the old and the new position to provide a smooth transition. For stereo sources, several convolvers must operate simultaneously during movement.

The HRTF panner must load these impulse responses for the calculation. In the Gecko engine, the HRTF database is only loaded when needed, while other engines always load it. The convolver and the delay lines also need memory, and depending on how the Fast Fourier Transform is implemented on the respective system, the memory requirement varies here as well.

ConvolverNode

The ConvolverNode also works with convolution; here it is used to create a reverb effect. The impulse response of a room is convolved with the input signal in the ConvolverNode, which transfers the acoustics of that room to the signal.

Here, too, convolution makes the calculation very performance-critical, and the cost grows with the duration of the impulse response. Again, some browsers are more likely to run into computational bottlenecks than others, depending on how the computation is offloaded to background threads.
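Why the cost grows with the impulse response can be seen in the textbook definition of convolution: every output sample is a weighted sum over the whole impulse response. The sketch below is direct (time-domain) convolution for illustration only, with a hypothetical function name; browser implementations use FFT-based, partitioned convolution, which is much cheaper but whose cost still grows with the impulse length.

```javascript
// Direct convolution of a signal with an impulse response (full length n + m - 1).
// Cost: signal.length * impulse.length multiply-adds, so each output sample
// costs as many operations as the impulse response has samples.
function convolve(signal, impulse) {
  const out = new Float32Array(signal.length + impulse.length - 1);
  for (let n = 0; n < signal.length; n++) {
    for (let k = 0; k < impulse.length; k++) {
      out[n + k] += signal[n] * impulse[k];
    }
  }
  return out;
}

// A 2-second impulse response at 48 kHz has 96,000 samples; convolved directly,
// every output sample would need 96,000 multiply-adds.
const y = convolve(Float32Array.from([1, 0, 0]), Float32Array.from([0.5, 0.25]));
console.log(Array.from(y).join(", ")); // 0.5, 0.25, 0, 0
```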

The ConvolverNode creates several copies of the signal to calculate convolutions independently. It therefore needs quite a lot of memory, which also depends on the duration of the impulse response. Depending on the platform, additional memory may be needed for the Fast Fourier Transform implementation.

DelayNode

The DelayNode delays the signal: it introduces a delay between the arrival of the signal and its forwarding. Its memory cost results from the number of input and output channels and the maximum length of the delay.
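The memory a delay line needs can be estimated from its maximum delay time, which is fixed when the node is created with ctx.createDelay(maxDelayTime) (1 second by default; it must be less than 180 seconds). The helper below is a hypothetical rough estimate that assumes 32-bit float samples; real implementations may allocate somewhat more.

```javascript
// Hypothetical estimate of a delay line's sample memory:
// one 32-bit float per frame and channel for the maximum delay time.
function delayBufferBytes(maxDelaySeconds, sampleRate, channels) {
  const BYTES_PER_SAMPLE = 4; // Float32
  return Math.ceil(maxDelaySeconds * sampleRate) * channels * BYTES_PER_SAMPLE;
}

console.log(delayBufferBytes(1, 48000, 2));  // 384000 (about 375 KiB)
console.log(delayBufferBytes(60, 48000, 2)); // 23040000 (about 22 MiB)
```

Requesting only the maximum delay you actually need, for example ctx.createDelay(0.5) for a short echo, keeps this cost small.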

WaveShaperNode

The WaveShaperNode applies a non-linear distortion: each input sample is mapped through a shaping curve to produce the distorted output. The WaveShaperNode creates a copy of the curve and can therefore be quite memory-intensive.
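The curve is a lookup table (a Float32Array) that maps input values between -1 and 1 to output values, so its length directly determines the memory cost. The generator below is a hypothetical example using a tanh soft-clipping curve; `drive` is an illustrative parameter, and shaper.curve is the standard WaveShaperNode property the result would be assigned to.

```javascript
// Build a soft-clipping curve: table index 0 corresponds to input -1,
// the last index to input +1, and the stored value is the distorted output.
function makeSoftClipCurve(length, drive) {
  const curve = new Float32Array(length);
  for (let i = 0; i < length; i++) {
    const x = (i * 2) / (length - 1) - 1; // map table index to [-1, 1]
    curve[i] = Math.tanh(drive * x);      // higher drive = harder saturation
  }
  return curve;
}

// Usage in a browser. The node keeps its own copy of the curve,
// so a 4097-point curve costs about 16 KB per node:
// const shaper = ctx.createWaveShaper();
// shaper.curve = makeSoftClipCurve(4097, 3);
```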

OscillatorNode

As already mentioned, audio can be generated with the OscillatorNode. It generates a periodic oscillation that is interpreted as an audio signal.

The oscillations are implemented with wave tables that are calculated via an inverse Fourier transform. As a result, there is a higher computational load only when the waveform changes and the tables have to be calculated.

The stored wave tables can take up a lot of memory. In Gecko-based browsers, they are cached and shared, except for the sine wave, which is calculated directly.
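The wave tables can be illustrated with an inverse Fourier series: given the cosine and sine amplitudes of each harmonic, one period of the waveform is the sum of those harmonics. The sketch below uses a direct sum for clarity (browser implementations typically use an inverse FFT) and a hypothetical function name; the real/imag layout matches the arrays that ctx.createPeriodicWave(real, imag) takes.

```javascript
// Build one period of a periodic waveform from its Fourier coefficients.
// real[k] and imag[k] are the cosine and sine amplitudes of harmonic k;
// index 0 (the DC offset) is skipped here.
function buildWaveTable(real, imag, tableSize) {
  const table = new Float32Array(tableSize);
  for (let i = 0; i < tableSize; i++) {
    const phase = (2 * Math.PI * i) / tableSize;
    let sum = 0;
    for (let k = 1; k < real.length; k++) {
      sum += real[k] * Math.cos(k * phase) + imag[k] * Math.sin(k * phase);
    }
    table[i] = sum;
  }
  return table; // cost: tableSize * harmonics, paid only when the waveform changes
}

// A pure sine has a single sine term at the first harmonic.
const sine = buildWaveTable([0, 0], [0, 1], 8);
console.log(sine[2]); // 1 (a quarter of the way through the period)

// In a browser, the same coefficients define a custom oscillator waveform:
// osc.setPeriodicWave(ctx.createPeriodicWave(new Float32Array([0, 0]), new Float32Array([0, 1])));
```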

Tips and Tricks

The following tips and tricks come from Paul Adenot, one of the developers of the Web Audio API. You can find more details here. They should help you to achieve optimal performance for your web application with the Web Audio API.

For Developers

Sometimes the built-in AudioNodes are not sufficient to solve a particular problem. In this case, you can use the AudioWorklet described above to implement the processing yourself. Ideally, this code is written in JavaScript, the language of the API.

Paul Adenot recommends the following rules to get the best results:

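- Use typed arrays (such as Float32Array), because they are faster than normal arrays.

- Reuse arrays instead of allocating new ones during processing.

- Do not manipulate the DOM or object prototypes during processing.

- Keep your code monomorphic: call functions with the same argument types so that they always take the same code path.

- Compile C or C++ to JavaScript; today, the usual compilation target for this is WebAssembly.

- Extensions such as SharedArrayBuffer can improve performance in browsers that support them. SIMD.js, which was also recommended at the time, has since been abandoned in favour of WebAssembly SIMD.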
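The sketch below applies these rules to a simple processing kernel, a one-pole low-pass filter: it works on typed arrays, allocates its state once in the constructor and runs the same loop on every call. The class names and the coefficient are hypothetical. The kernel is plain JavaScript so it can be tested anywhere; the commented wrapper shows how it might be called from an AudioWorkletProcessor's process() method, which by default receives blocks of 128 frames per channel as Float32Arrays.

```javascript
// Hypothetical one-pole low-pass kernel written along the rules above.
class OnePoleLowpass {
  constructor(channelCount, coefficient) {
    // Typed array for the filter state, allocated once and then reused.
    this.state = new Float32Array(channelCount);
    this.a = coefficient; // smoothing factor between 0 and 1
  }

  // Always called with Float32Arrays of equal length: one monomorphic code path,
  // no allocations and no DOM access inside the loop.
  processChannel(channel, input, output) {
    const a = this.a;
    let y = this.state[channel];
    for (let i = 0; i < input.length; i++) {
      y += a * (input[i] - y);
      output[i] = y;
    }
    this.state[channel] = y;
  }
}

// In an AudioWorklet module (AudioWorkletGlobalScope) it could be wrapped like this:
// class LowpassProcessor extends AudioWorkletProcessor {
//   constructor() { super(); this.kernel = new OnePoleLowpass(2, 0.1); }
//   process(inputs, outputs) {
//     const input = inputs[0];
//     const output = outputs[0];
//     const channels = Math.min(input.length, output.length, 2);
//     for (let c = 0; c < channels; c++) this.kernel.processChannel(c, input[c], output[c]);
//     return true; // keep the processor alive
//   }
// }
// registerProcessor("one-pole-lowpass", LowpassProcessor);
```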

Reverb

As already mentioned, the Web Audio API offers the ConvolverNode to simulate high-quality convolution reverb. Since this process is computationally intensive, it is worth looking for alternatives on mobile devices.

Reverb effects can also be created with delays, equalisers and low-pass filters. More information on creating such alternatives to convolution reverb with the Web Audio API can be found here.
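A common building block for such effects is a delay line whose output is low-pass filtered and fed back into itself. The sketch below wires this up with standard nodes (DelayNode, BiquadFilterNode, GainNode); the function name and default values are hypothetical, and a convincing reverb would combine several of these blocks with different delay times.

```javascript
// Hypothetical low-cost reverb building block: a filtered feedback delay.
function createFeedbackDelay(ctx, { delayTime = 0.05, cutoff = 3000, feedback = 0.6 } = {}) {
  const input = ctx.createGain();
  const output = ctx.createGain();
  const delay = ctx.createDelay(1); // maximum delay of 1 second
  delay.delayTime.value = delayTime;
  const lowpass = ctx.createBiquadFilter();
  lowpass.type = "lowpass";
  lowpass.frequency.value = cutoff; // each repetition loses more high frequencies
  const loopGain = ctx.createGain();
  loopGain.gain.value = feedback; // keep well below 1 so the echoes die away

  input.connect(delay);
  delay.connect(lowpass);
  lowpass.connect(loopGain);
  loopGain.connect(delay); // feedback loop back into the delay
  lowpass.connect(output);
  return { input, output };
}
```

The Web Audio API only allows cycles in the graph if they contain a DelayNode, which is why the feedback path leads back into the delay.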

Panning

For browser applications in which sounds should be localised acoustically, such as online games, binaural panning is very important. HRTF panning is based on convolution and sounds good, but, as already mentioned, it is very computationally intensive. Here, too, it is worth using an alternative on mobile devices.

Instead, you can use a short reverb together with a panner in "equalpower" mode, which allows localisation similar to that of the HRTF panner. This is especially useful if the position of the source changes constantly. More information about the panningModel property can be found here.
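Switching models is a single property on the PannerNode. The helper below is a hypothetical sketch; how your application decides that a device is too slow for HRTF (the preferLowCost flag here) is an assumption left to you. Note that "equalpower" is already the default panningModel, so HRTF processing only happens if you opt into it.

```javascript
// Hypothetical helper: use the cheap "equalpower" model where HRTF is too costly.
function createSpatialPanner(ctx, preferLowCost) {
  const panner = ctx.createPanner();
  panner.panningModel = preferLowCost ? "equalpower" : "HRTF";
  return panner;
}

// Usage in a browser; moving the source later only updates its position:
// const panner = createSpatialPanner(ctx, isMobileDevice);
// source.connect(panner).connect(ctx.destination);
// panner.positionX.value = 2;
```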

Conclusion

The Web Audio API was a revolutionary step for audio in browsers. If you keep an eye on a few AudioNodes and follow some advice when you want to do custom processing, it also performs well.

If you are now interested in the Web Audio API and want to try it out with a small example, I recommend this example.

There you will learn how to build a boom box with little code that pans the sound in real time.

I hope you enjoyed this article.

Related Links

More information about the Web Audio API (W3C)

Further information about the Web Audio API (MDN Web Docs; Mozilla)

Detailed information about the AudioNodes (MDN Web Docs; Mozilla)

Information about the AudioWorklet interface of the Web Audio API (MDN Web Docs; Mozilla)
