Adversarial machine learning and its dangers

The world is led by machines, humans are subjected to the robot’s rule. Omniscient computer systems hold the control of the world. The newest technology has outpaced human knowledge, while the mankind is powerless in the face of the stronger, faster, better and almighty cyborgs.

Such dystopian visions of the future often come to mind when reading or hearing the latest news about current advances in the field of artificial intelligence. A lot of Sci-Fi movies and literature take up this issue and show what might happen if the systems become more intelligent than humans and develop their own mind. Even the CEO of SpaceX, Tesla and Neuralink, Elon Musk, who is known for his innovative mindset, has a critical opinion towards future progress in artificial intelligence:

If I were to guess what our biggest existential threat is, it’s probably that. So we need to be very careful with the artificial intelligence. […] With artificial intelligence we are summoning the demon.

Elon Musk

For this reason he started the non-profit organization OpenAI in 2015 aiming at researching and developing friendly artificial intelligence. But also other famous scientists and researchers have doubts that the current hype regarding AI and Deep Learning yields only benefits for the people. Stephen Hawking claims, for example, that

the rise of powerful AI will either be the best or the worst thing ever to happen to humanity. We do not yet know which. The research done by this centre is crucial to the future of our civilisation and of our species.

Stephen Hawking

While these doomsday scenarios are targeted at a potential evolution of artificial intelligence in decades, if not centuries, there are already posing serious dangers and risks from such systems these days. In times of increasingly self-driving vehicles, personal assistants, recommender systems etc., where more and more task are taken over by computers, people rely on them and trust them. Consumers use artificial intelligence because it simplifies their daily lives, it offers convenience and helps them in certain situations. Companies use and develop intelligent systems because they provide multiple benefits compared to human labour: They are cheaper, have more capacity and are more efficient. But above all, we think that these algorithms are free of human errors: They don’t make errors due to fatigue or inattentiveness. They don’t make errors due to miscalculations or misjudgement. They behave like they are told to behave. And this is exactly the reason why the so-called adversarial machine learning is such a powerful opportunity that enables attackers to specifically manipulate these systems.

What is adversarial machine learning?

First of all, what is machine learning? Machine learning is a subfield of artificial intelligence, although the terms are often used interchangeably. It can be used to learn from input data in order to make predictions. The heart of each ML application is its underlying model, that defines the architecture of the algorithm and all of its parameters. It is important to mention that machine learning is not tied to a specific algorithm, for example not all ML applications make use of deep neural networks, although this type of algorithm is becoming more and more popular.

There are two ways of machine learning that you can use, based on the characteristics of the input data and on what you want to learn: With supervised learning classification (deciding between two or more classes, e.g. “Is the object in the image a bus, a car or a pedestrian?”) and regression (learning a function, e.g. “How long will my journey take?”) tasks can be solved, but they require labeled training data. This means that for each data instance, for example some image, the required target output has to be specified, e.g. “the image contains a car and pedestrian, but no bus”. Unsupervised learning on the other hand does not require labeled data, which can save a lot of time during data preparation, but the tasks are limited to cluster analysis like finding similarities, which is heavily used in recommender systems.

In order to get high-quality predictions from the algorithm, one has to train the model first. During the training phase, you feed lots of data into the algorithm, which computes an output based on the current model. In the case of supervised learning, the computed output is compared with the given target output. Based on the deviation, the model parameters are updated (this is basically the “learning”) in order to minimize the error. If the performance of the model is satisfying, it can be leveraged in production in order to predict, classify, cluster or whatever you want to do with your data.

Now that we have a principal understanding of how machine learning is working, we can get a better understanding of how adversarial machine learning is working. Generally speaking, inputs of machine learning systems are manipulated in a way that the output of the model becomes any desired result with high confidence. By applying smallest perturbation to the inputs, the model can be completely tricked. As a result, attackers can enforce the machine learning application to output any result they want under certain circumstances.

This opens the door for various types of attacks that are not limited to a specific type of application, technology, or algorithm. But how does it look like in practice?

Adversarial machine learning in practice

To give an example of what adversarial machine learning is capable of, we can take a closer look at fooling image recognition.

Some years ago, image recognition was done by applying traditional techniques, which includes choosing the suitable filters, applying edge and keypoint detection and clustering the visual words. Although this method worked well for years, it had some drawbacks: First of all, it depends on the specific application; you had to choose the correct setup, filters and other components tailored towards the individual application. Furthermore, the accuracy was acceptable, but not extraordinary. But from the 2010s on, a new technique was developed, strongly outpacing former traditional methods: Image recognition based on deep learning. They simplified and generalized the whole process while setting new benchmarks in the field of image recognition.

Although their accuracy is above any doubt, they are prone to adversarial machine learning attacks. In this context, the attacker manipulates an image in such a manner that the classification algorithm misclassifies the content of the image in favor of the attacker’s desired output.

Researchers presented two types of attacks, both fooling the algorithm:

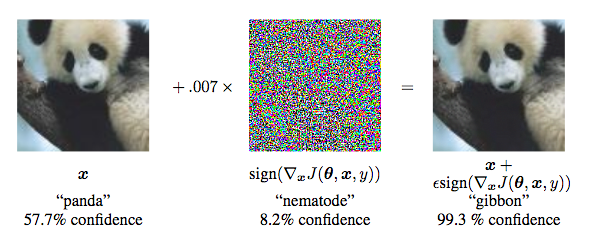

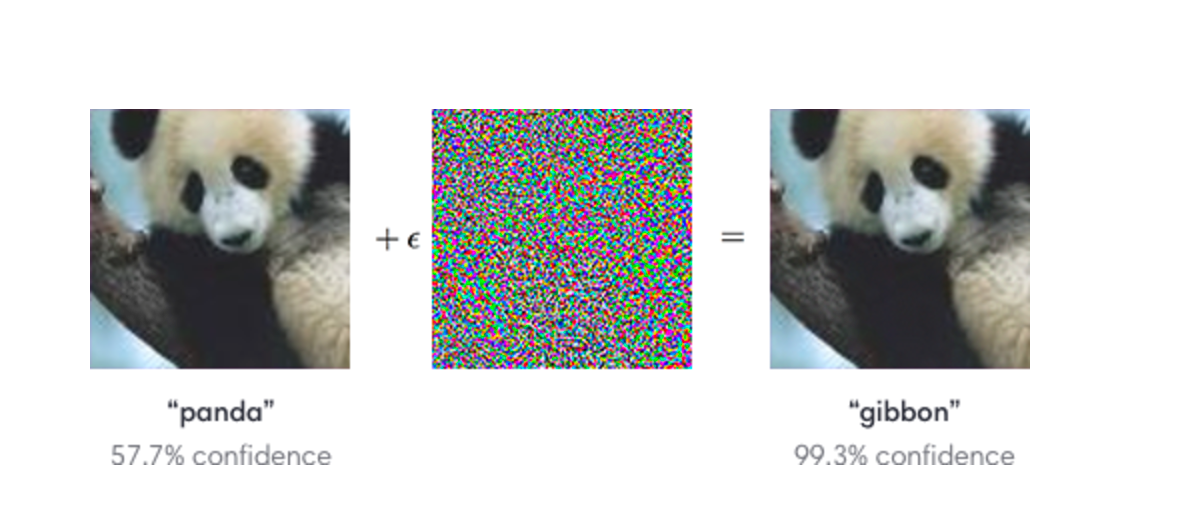

The first approach is based on a “normal” image, meaning that the image contains a human-recognizable object or scene. Now the attacker adds a tiny perturbation to the image, which is hardly perceptible by humans, if at all. However, the perturbation is not some random white-noise signal, but results from a targeted calculation. In the figure below, you can see an example:

On the left side, the original image containing a panda is depicted. If you would feed this image in the machine learning algorithm used for this example, it would classify the content with a confidence of 57.7% to be a panda. But instead of classifying the output of that picture, the attacker adds a perturbation to it, pictured in the center. Note that this noisy image is multiplied with a factor of 0.007, so it is actually not visible for human eyes. The combination of the original image and the perturbation is visible on the right: It looks exactly like the original panda image, even if you know that it contains the perturbation, you cannot recognize it. For humans, the object in the manipulated image is still clearly a panda, but the algorithm is almost completely convinced (99.3% confidence) that it contains a gibbon. But this is no coincidence: You can basically take any picture, add the correct perturbation to it and get the desired output classification.

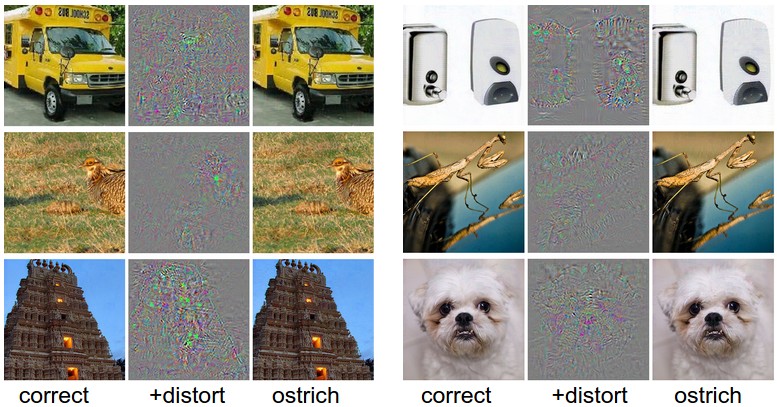

As you can see in the figure above, the algorithm classifies each of these six different images as ostrich, although they are containing something completely different.

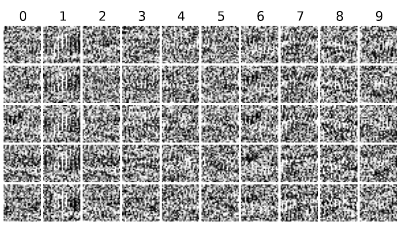

The second approach starts from scratch, without depending on an existing image. A completely random-looking image is generated, looking like a white-noise signal, but is actually recognized with high confidence by the algorithm. How does this look like?

In this example, you can see several newly generated images. For humans, it looks more like some kind of QR code or something, but the algorithm can recognize digits in these images. For example, the images in the first column are classified to contain the digit “0”. For us it’s impossible to see, but the algorithm yields a confidence score of 99.99%.

How does it work?

As described in the next section, adversarial machine learning is not limited to a specific application, e.g. image recognition, but is universally practicable in the field of machine learning. For that reason, it is not possible to explain how all of these attacks exactly work, because it depends on what you want to achieve. Therefore, a short summary is given of how the scientists managed it to produce and manipulate images like in the examples above in order to fool the image classification algorithm:

In general, the image generation and manipulation process is based on a local search algorithm, known as genetic algorithm. It is derived from the biological theory of evolution by Charles Darwin, transferring the concept of “survival of the fittest” to optimization problems.

Let’s call the the digit-generation example from above to mind: How can we produce such an image, that looks like white noise, but will be classified by a trained machine learning algorithm to contain any desired digit between 0 to 9 with a confidence of over 99%?

Imagine we want to craft an image which is classified as the digit “6”. At first, we have to produce an initial population. The population is the set of all relevant states, referred to as individuals. This means in practice that we have to generate a set of independent, random images. Each image is an individual, all images together form the population.

The whole process consists of four steps:

- Evaluation: Each individual is evaluated with a fitness function. The fitness function indicates how good the respective individual is with regard to subjective. In concrete terms, each previously randomly generated image is classified with the machine learning algorithm. The fitness value would be the confidence score of the algorithm for the digit “6”. Since the images are white-noise signals, the fitness evaluation value should be quite low initially for each individual.

- Selection: We select a subset from the complete population for the next step. However, these individuals are not randomly chosen. Since we want to build a better generation, we state that fitter individuals are more likely to be chosen. This means that we select those images, that are recognized to be a “6” with higher confidence, with higher likelihood.

- Crossover: We can only produce a new population, if we modify the selected individuals. Therefore we choose pairs of parent individuals which produce a child individual by crossover. Regarding our example crossover means that we choose a random crossover point (selecting a random pixel) and then taking all the pixel values before that crossover point from the first parent individual and taking all the pixel values after that crossover point from the second individual, combining them into the new child individual. By this means we hope to chose the best values from the parents and therefore produce an even stronger child.

- Mutation: With low probability, we modify individual pixel values in the child instance.

The child individuals replace the weakest individuals from the current population. Now we can evaluate the population again in order to determine the fitness values of all individuals. If the fitness value of one or more individuals is high enough, which means that the ML algorithm is confident enough to recognize a “6”, we can terminate the genetic algorithm. Otherwise, we start the next iteration, constituting the next generation.

For the example above, the researchers needed less than 50 generations to produce images with a confidence score of ≥ 99.99% and only 200 iterations to achieve a median confidence of 99%.

Using this approach, we don’t need access to the underlying model of the classification algorithm, we just need the output with the confidence score.

What else is possible?

Adversarial machine learning is not limited to image recognition tasks. There are many more applications that are vulnerable to these attacks:

Researchers showed how to easily fool Google’s new Cloud Video Intelligence API. This API offers the functionality to analyse videos and make them searchable like textual content. The main purpose of the Cloud Video Intelligence API is to recognize the the content of videos and provide the associated labels.

How could we adversarially use this API? Remember that the principle of adversarial machine learning consists of manipulating inputs of machine learning systems in a way that the output of the model becomes any desired result with high confidence.

Researchers took an image different from the actual video content and inserted it periodically and at a very low rate into the video. The original video contained for example animals like in the figure above. Now they inserted an image of a car, a building, a food plate or a laptop every two seconds for one frame. Although this is a very low rate (one frame every two seconds for a video of 25 fps corresponds to a insertion rate of 2%), the API is deceived into returning only the video labels which are related to the inserted image, but did not return any labels related to the original video.

Another team showed how to attack voice recognition systems. Personal assistants are widely and extensively used nowadays, but they are also prone to adversarial attacks. Similar to the image generation example above, they generated voice commands in order to fool systems like the Google Assistant or Apple’s Siri. For their whitebox approach they had complete knowledge of the model, its parameters and architecture. Hence the attack worked out very well: The voice commands were not recognizable by humans, but completely understandable by the system. This way an attacker could attack any voice recognition system without being noticed by the user. However, in reality it’s not common to have complete knowledge about the system’s internals. Therefore they performed another experiment, this time treating the machine learning model as blackbox. Even though the voice commands generated now were easier understandable for humans, they were also harder to process by the voice recognition systems.

A third team of researchers also showed how to attack malware classification systems. First, they created an artificial neural network that acts as a malware classifier and trained it with 120.000 android applications, 5.000 of them being malware. Now they tried to attack their own network, treating it like a blackbox (they pretended not to know about the details of the model, making it more realistic). For this they modified the malware examples in such a manner that they are classified by the neural network to be non-malicious by adding features like permissions or API calls to the Android application. The results showed that they were successfully fooling the classifier, which did not recognize the modified malware in 60% – 80% of all cases.

This shows that adversarial machine learning can be a huge threat in terms of machine learning driven systems, especially for security-critical domains.

Why does it work?

Why are these algorithms vulnerable to adversarial machine learning? These attacks can not only be targeted towards deep neural networks, but also other, completely different algorithms. That means the attack is universal and therefore practicable for many applications. But what is the reason for that?

If we approach the topic from a higher level, we find the cause for this in the similar, but not completely equal ways of learning that exist between humans and machines. Machine learning is, like many other techniques in artificial intelligence (for example neural networks and evolutionary algorithms), strongly inspired by nature: As explained previously, machine learning works by training the model with large amounts of data, while it’s constantly improving. Humans learn in the same way: There is a supervisor, most likely the parents or teachers, who tells the child what to do and what not to do. A child learns to write by repeating to write words over and over again. With every iteration, there are minor improvements and in the long term the child is “trained”. However, there are differences: When a human classifies an image, there are multiple areas in the brain involved, processing the information, gathering information from the long term memory, linking several impulses, forming connections, … This mechanism is incredibly complex, even scientists are not yet completely aware of what exactly is going on.

On the other hand, models for machine learning algorithms consist of mathematical formulas which basically map the high dimensional input data onto low dimensional output data. We know what the algorithm is doing, so we know how it’s working internally.

While humans make use of their abstract representation of knowledge, machines rely on parameterized functions. If you can control the functions and know exactly what they are doing, it’s a lot easier to modify their input in order to receive the desired output.

But referring back to the panda-image example from above, why exactly does such completely random-looking noise completely fool the neural network, while there is no difference recognizable by humans?

The answer is that we actually don’t add random noise to the picture. The perturbation is calculated subject to the desired output. Explaining the details of this method would go beyond the scope of this post, but a short summary is provided:

The aim of the training phase is to improve the model. For neural networks this means that we adapt the weights between the nodes in order to get better results. The weights are adapted in dependency of the loss function, measuring the deviation between actual output and target output. By calculating the gradient we know how to adjust the weights (gradient descent algorithm). In order to calculate the perfect perturbation to fool the classifier, we turn the tables: Instead of adapting the weights depending on the output, we adapt the input depending on the output. To put it simply, we calculate the perturbation that increases the neuron’s activation for the desired output. For example the perturbation that is added to the panda image above focuses on the activation of the nodes that are triggered when recognizing a gibbon. So all we have to do is to calculate the gradient ascent instead of the gradient descent based on the desired output.

Now you may ask why we would have to run algorithms over several iterations in order to generate adversarial instances like in the genetic algorithm example that we used to produce machine-recognizable digits, when all we have to do is to simply calculate the gradient ascent. The answer is that calculating the gradient ascent is the faster, easier and more precise solution, but it has one major drawback: You need access to and knowledge about the underlying model (its weights) which is not given in most cases. To sum it up, if you want to generate adversarial examples with an whitebox attack, you may want to choose the gradient ascent method, while on blackbox attacks you have to go with other techniques like local search or even bruteforce attacks.

What can happen?

The bandwidth of targets and impacts is very manyfold. For some applications there is no big impact, for example fooling recommender systems, weather forecasts or artificial intelligence in computer games may lead to confusing results for the end user, but there is no major damage. But then again, considering applications in security- or safety-critical domains, adversarial machine learning can result in serious damages. Imagine your self-driving car recognizes a right-of-way sign instead a stop-sign, because somebody manipulated it. The driver has no opportunity to react to this misinterpretation because the perturbation is not visible to him. Or think of AI systems that are trained to write news and reports for newspapers like Google is testing currently. If you can fool this system, it is open for propaganda and fake news. Furthermore, military drones can use image recognition to identify enemy targets. It is frightening to think of scenarios in which it’s possible for attackers to mislead them into attacking uninvolved targets. Or your phone reacts to a hidden voice command, incomprehensible for humans, that tricks your personal assistant into posting your private data to Twitter.

Countermeasures

It is hard to protect machine learning systems against adversarial attacks, because there is no known solution to reliably detect perturbations or adversarial manipulations. However, some approaches exist in order to defend against these attacks:

- Request user feedback: Before any actions is taken by the system, the user approval is required. This way, the user acts as an instance to control the results of the algorithm and can deny them if he detects any mistake. But this approach has two main drawbacks: First of all, asking the user for feedback contradicts the idea of AI, which should support the user as much as possible with as little effort as possible. This limits the usability. Secondly, the user is not always able to give the correct feedback, for example the invisible perturbation in the panda image. Therefore, requiring user feedback is not a suitable solution against AML.

- Adversarial learning: Machine learning models can only recognize something they’ve already seen before. An image recognition system only knows how a car looks like if a number of cars were present in the learning dataset. The same also applies for AML: The model can’t recognize adversarial instances as such if it has not learned what adversarial instances are. Therefore you can deliberately add adversarial data to the training set in order to recognize them in the production phase. This is a good start for protection against AML, but attacks can still be successful because of the diversity of possible attacks.

- Choose another model: The previously presented approaches are a way of minimizing the effects of adversarial machine learning, but do not fight their cause. Researchers showed that AML is possible due to the linearity of the models. Only with linear components in the model it is possible to fool it. Therefore the ultimate solution against AML is to use a model that is nonlinear, for example random forests or radial basis functions. The problem is that these models may not always be as good as models like neural networks. In that case the developer has to decide between high performance and high security. Unfortunately, performance sells better than security. Machine learning models “were designed and trained to have good average performance, but not necessarily worst-case performance”, as Nicolas Papernot, Google PhD fellow in security, put it into words.

Conclusion

Machine learning applications are already widely spread and are involved in an increasing part of our daily lives. They can be extremely helpful, but they can also be exploited by attackers, feeding them with adversarial instances. While the impacts are not always harmful, they can cause great damage in some cases. The problem is that currently most of the deployed models are vulnerable to those attacks due to their linearity. Almost every model based on the popular neural networks is affected. Furthermore, there is no existing countermeasure against AML, but it’s even getting worse: Attackers find more and more ways to fool the models. So does this mean that Elon Musk is right and we should fear artificial intelligence, because it’s our biggest threat? At least it seems that AI will be more important than ever and new progress will be made in the next years. Then we will know whether adversarial attacks have grown to become normal dangerous side effects or we discover a reliable way of defending against them.

There is only one fact that shows us that adversarial machine learning has its limitations, too: To attack an existing model in an optimal way, we need internal information about it. However, most companies use proprietary models, keeping the architecture and parameters secret. In this manner they are still assailable, e.g. with search algorithms, but not as targeted. What a consolation!

Maybe we should listen to the warning voices. Who wants to live in a world in that our lives are depending on the unreliable decisions of machines? Sounds a lot like dystopia and Science-Fiction, huh?

Sources:

- Deceiving Google’s Cloud Video Intelligence API Built for Summarizing Videos (https://arxiv.org/pdf/1703.09793.pdf)

- Adversarial Perturbations Against Deep Neural Networks

for Malware Classification (https://arxiv.org/pdf/1606.04435.pdf) - Explaining and harnessing adversarial examples, J. Goodfellow et al. (https://arxiv.org/pdf/1412.6572.pdf)

- Intriguing properties of neural networks, C. Szegedy et al. (https://arxiv.org/pdf/1312.6199.pdf)

- Deceiving Google’s Cloud Video Intelligence API Built for Summarizing Videos, H. Hosseini et al. (https://arxiv.org/pdf/1703.09793.pdf)

- Deep Neural Networks are Easily Fooled: High Confidence Predictions for Unrecognizable Images , A. Nguyen et al. (https://arxiv.org/pdf/1412.1897.pdf, http://www.evolvingai.org/fooling)

- Hidden Voice Commands, N. Carlini et al. (https://www.usenix.org/system/files/conference/usenixsecurity16/sec16_paper_carlini.pdf, http://www.hiddenvoicecommands.com/)

- Adversarial Perturbations Against Deep Neural Networks for Malware Classification, K. Grosse et al. (https://arxiv.org/pdf/1606.04435.pdf)

- The Limitations of Deep Learning in Adversarial Settings , N. Papernot et al. (https://arxiv.org/pdf/1511.07528.pdf)

- Fooling The Machine, D. Gershgorn (http://www.popsci.com/byzantine-science-deceiving-artificial-intelligence) (Image)

Leave a Reply

You must be logged in to post a comment.