Tag: deep learning

Sicherheitscheck – Wie sicher sind Deep Learning Systeme?

In einer immer stärker digitalisierten Welt haben Neuronale Netze und Deep Learning eine immer wichtigere Rolle eingenommen und viele Bereiche unseres Alltags in vielerlei Hinsicht bereichert. Von Sprachmodellen über autonome Fahrzeuge bis hin zur Bilderkennung/-generierung, haben Deep Learning Systeme eine erstaunliche Fähigkeit zur Lösung komplexer Aufgaben gezeigt. Die Anwendungsmöglichkeiten zeigen scheinbar keine Grenzen. Doch während…

Allgemein, Artificial Intelligence, Deep Learning, System Architecture, System Designs, System Engineering, Ultra Large Scale Systems

Allgemein, Artificial Intelligence, Deep Learning, System Architecture, System Designs, System Engineering, Ultra Large Scale SystemsAn overview of Large Scale Deep Learning

article by Annika Strauß (as426) and Maximilian Kaiser (mk374) Introduction Improving Deep Learning with ULS for superior model training Single Instance Single Device (SISD) Multi Instance Single Device (MISD) Multi Instance Multi Device (MIMD) Single Instance Multi Device (SIMD) Model parallelism Data parallelism Improving ULS and its components with the aid of Deep Learning Understanding…

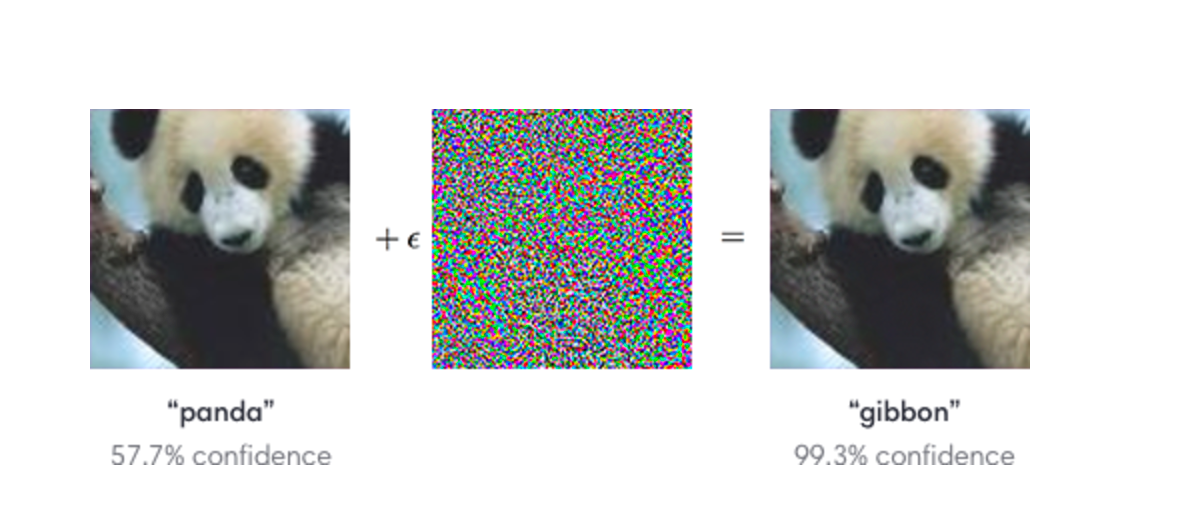

The Dark Side of AI – Part 2: Adversarial Attacks

Find out how AI may become an attack vector! Could an attacker use your models against your? Also, what’s the worst that could happen? Welcome to the domain of adversarial AI!

Large Scale Deployment for Deep Learning Models with TensorFlow Serving

Introduction “How do you turn a trained model into a product, that will bring value to your enterprise?” In recent years, serving has become a hot topic in machine learning. With the ongoing success of deep neural networks, there is a growing demand for solutions that address the increasing complexity of inference at scale. This…

Improved Vulnerability Detection using Deep Representation Learning

Today’s software is more vulnerable to cyber attacks than ever before. The number of recorded vulnerabilities has almost constantly increased since the early 90s. The advantage of Deep Learning algorithms is that they can learn vulnerability patterns on their own and achieve a much better vulnerability detection performance. In this Blog-Post, I will present a…

Federated Learning

The world is enriched daily with the latest and most sophisticated achievements of Artificial Intelligence (AI). But one challenge that all new technologies need to take seriously is training time. With deep neural networks and the computing power available today, it is finally possible to perform the most complex analyses without need of pre-processing and…

FOOLING THE INTELLIGENCE

Adversarial machine learning and its dangers The world is led by machines, humans are subjected to the robot’s rule. Omniscient computer systems hold the control of the world. The newest technology has outpaced human knowledge, while the mankind is powerless in the face of the stronger, faster, better and almighty cyborgs. Such dystopian visions of…