Welcome to the first of two blog posts on the latest developments in cloud security.

In this post, we will first look at the role the cloud plays in today’s market and why cloud security matters. To address the security aspects, we need to know how the cloud works, so we’ll then take a closer look at the concepts and technologies used in the cloud.

Once we know the technologies of the cloud, we will turn to their weaknesses and threats in the next post. There we will try to identify the weaknesses of the cloud as far as possible and go through a list of threats that companies can face when using it. After that, we will look at scientific papers that currently deal with cloud security. Finally, we will summarise, draw a conclusion and look ahead to potential future developments in the area of cloud security.

And now, enjoy reading! 🙂

A current market overview

Driven by digital transformation, cloud computing has become increasingly important over the last few years for companies from a wide range of sectors. Alongside big data, mobility, the Internet of Things and cognitive systems, cloud computing is today one of the most important technologies for implementing digital transformation. With the cloud, new services can be made available and scaled quickly and easily, mostly without acquiring your own hardware (this applies to public and hybrid clouds as well as to hosted private clouds; the various cloud types are explained in more detail further below), which is why the cloud can rightly be called an innovation accelerator. It has also become an essential component of the IT strategy of many companies and thus the de facto architectural model of digital transformation. It is therefore not surprising that IT budgets in many companies are shifting strongly towards cloud computing. By 2020, spending on cloud computing in Germany is expected to rise to about 9 billion euros, roughly three times the 2.8 billion euros spent in 2015.

Companies have three essential requirements when using the cloud: availability, performance and security. A cloud will only be successful if the users’ data is secure and customers can rely on it, because even today the greatest obstacle to using the public cloud is the fear that unauthorised people could gain access to sensitive company data. This fear is reflected in the opinions of cloud users. German users tend to prefer cloud providers that are bound by German data protection law. The majority of German IT decision makers rely more on German cloud providers, and more than half of them distrust American providers when it comes to data privacy (which in turn creates opportunities for regional providers that can complement the offerings of the cloud giants). This is one reason why German companies have opted for private cloud solutions in the last few years and have therefore run applications with sensitive data in their own IT environments. However, as early as 2015, more than half of the companies were already planning to migrate to a hybrid cloud within the next one or two years, thereby keeping the full control of the private cloud while being able to scale into the public cloud at request peaks.

The confidence that German legal provisions create in the German cloud has not gone unnoticed by the EU, which will apply the General Data Protection Regulation (GDPR) from May 2018 onwards. This will enable cloud providers located within the EU to enjoy the same trust as a German cloud. The regulation will also apply to providers from third countries that offer their services within the EU. Legal regulations are a significant factor when it comes to raising the security level on a large scale. Attackers who are intent on causing damage and disclosing information, however, are not impressed by legal provisions. They rigorously try to exploit any technical or human weakness to achieve their goals. Legal regulations may help to avoid procedures that could jeopardise the security of certain systems, but to achieve proper security, weak points, whether of human or technical origin, must be eliminated, resulting in more robust and less vulnerable systems. For this reason, we will focus on the technical background of cloud security issues in these two posts.

To deal with technical security in cloud computing, we need a solid understanding of the concepts and technologies used in the cloud, which is why we will look more closely at them in the next section.

The concepts and technologies of the cloud

Now we will look at the concepts and technologies of the cloud a little more closely. We’ll start from the beginning with an explanation of what the cloud is, then move on to the various concepts and solutions that exist, and finish with the technical structure of the different methods for server virtualisation. While discussing the virtualisation methods, we’ll already try to draw conclusions about their impact on security. So, let’s start!

What is the cloud?

Cloud computing means storing, managing and processing data online. An ordinary data center consists of computing and storage resources that are interconnected by a network. In the cloud, these resources are virtualised by certain techniques (which we will look at more closely later on). Virtualisation combined with specific management software enables intelligent, automated orchestration and thus an efficient utilisation of resources.

Resource management

When it comes to resource management, there are two terms you should have heard of when dealing with the cloud: software-defined infrastructure (SDI) and infrastructure as code (IaC). SDI stands for an agile IT infrastructure, a flexible environment in which resource management is automated. With IaC, the infrastructure is managed and provisioned automatically by code, which is why it is also referred to as programmable infrastructure. The resulting advantages are automated, dynamic adaptability and scalability. Examples include the ability to make virtual servers available quickly and remove them just as quickly, and to absorb overloads and failures automatically. The available resources can thus be used more (cost-)effectively than in ordinary data centers. This is the basis for the business model of the public cloud, where users usually only pay for actual usage, because unused resources can be quickly and flexibly distributed to other users.
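To make the idea of infrastructure as code a little more tangible, here is a minimal sketch in Python. It is not how any particular IaC tool works; the `ServerSpec` class, its fields and the `plan` function are made up for illustration. The point is only that the desired infrastructure is written down as data, and a program works out which servers to create or remove.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    """Declarative description of one virtual server (hypothetical fields)."""
    name: str
    cpus: int
    memory_gb: int


def plan(desired: set[ServerSpec], running: set[ServerSpec]) -> dict[str, list[ServerSpec]]:
    """Compare the desired state (the 'code') with what is currently running."""
    return {
        "create": sorted(desired - running, key=lambda s: s.name),
        "remove": sorted(running - desired, key=lambda s: s.name),
    }


# Desired state as it would be kept under version control (assumed values).
desired = {ServerSpec("web-1", 2, 4), ServerSpec("web-2", 2, 4)}
# State reported by the (imaginary) cloud provider.
running = {ServerSpec("web-1", 2, 4), ServerSpec("batch-1", 8, 32)}

changes = plan(desired, running)
for action, servers in changes.items():
    for server in servers:
        print(action, server.name)
```

In this toy run, `web-2` would be created and `batch-1` removed, while `web-1` is left alone because it already matches its description.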

Cloud types

There are different cloud types, which, in my opinion, play a decisive part in the security of the cloud, because they determine on which servers processes and data are located. For this reason, we will consider them briefly below.

The main cloud types are public cloud, private cloud and hybrid cloud. There is also the community cloud (which only a selected pool of users from several organisations can access), and there are variations of the types mentioned, such as the hosted private cloud (where hardware is rented from a web hosting company and a private cloud is then set up on it), but we will not go into these any further. The simultaneous use of different cloud services and cloud providers is called multi cloud.

The public cloud is characterised by a multi-tenant environment in which you usually only pay for actual use and the data traffic that really occurs. The customer uses a workspace or a service on the provider’s servers. Because the environment is opaque, only non-sensitive data should be processed in the public cloud. The public cloud is used to host everyday apps, to hand over resource management to the provider, and for applications where data traffic can rise unpredictably.

In the private cloud, unlike the public cloud, there is only one tenant or user of the resources, and the services run on dedicated servers. The advantage of efficient and flexible resource management also applies to the private cloud, of course. In addition, the private cloud can be controlled completely and independently. It is used for foreseeable workloads and for sensitive or company-critical processes and data.

The hybrid cloud is a combination of private and public cloud. It is used for services with uncertain demand. For example, applications can be deployed in the private cloud and extended or scaled into the public cloud at demand peaks (which is also referred to as cloud bursting). The hybrid cloud therefore uses both dedicated and shared servers. It offers the controllability of the private cloud together with the scalability of the public cloud.
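Cloud bursting can be pictured with a few lines of Python. This is only a sketch under simple assumptions: load and capacity are counted in abstract request units, and the function name `place_requests` is invented for this post. Real schedulers also consider things like data sensitivity and cost.

```python
def place_requests(load: int, private_capacity: int) -> dict[str, int]:
    """Fill the private cloud first and burst only the overflow to the public cloud."""
    if load < 0 or private_capacity < 0:
        raise ValueError("load and capacity must not be negative")
    private = min(load, private_capacity)
    return {"private": private, "public": load - private}


# Normal day: everything fits into the private cloud.
print(place_requests(load=80, private_capacity=100))
# Demand peak: the overflow is served from the public cloud.
print(place_requests(load=150, private_capacity=100))
```

As long as the load stays below the private capacity, nothing leaves the dedicated servers; only the part of a peak that exceeds it ends up on shared servers.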

Service models of the public cloud

In the public cloud, there are different service models, which we will look at briefly. They determine which parts you manage yourself and which are managed by the cloud provider. Therefore, in my opinion, they also contribute to the security of the cloud.

The service models are (as defined by the National Institute of Standards and Technology): infrastructure as a service (IaaS), platform as a service (PaaS) and software as a service (SaaS). In addition, there are countless other names for services, such as anything or everything as a service (XaaS or EaaS), which covers everything from public cloud services to the use of human intelligence as human as a service (HuaaS), but they are not relevant to us.

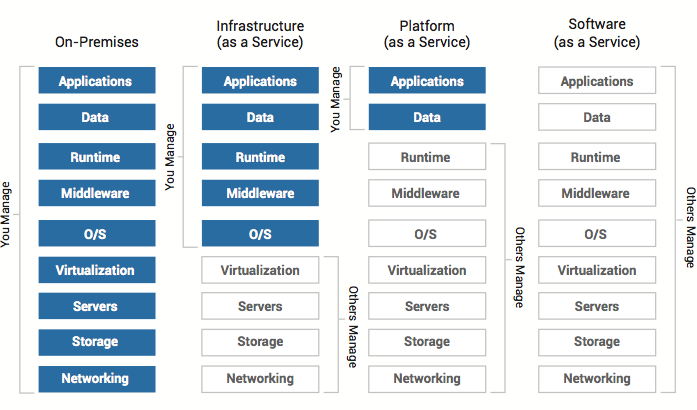

Figure 1: Service models of the cloud – Who manages what?

(Source: http://community.kii.com/t/iaas-paas-saas-whats-that/16)

Figure 1 shows who manages what when using the above-mentioned service models. With SaaS, the user utilises a ready-made application or service. Examples of this are Facebook or Google’s search engine. With PaaS, the user hosts an application and its data on a platform supplied by the cloud provider. An example of this is AWS Lambda, where the customer deploys code in one of the supported programming languages, which is then executed in response to specific (function) triggers (the deployed code is called a Lambda function). With IaaS, the customer is provided with a complete infrastructure below the operating system. The user can control and configure everything on the operating system (OS). The OS itself is set up by the provider, because the virtualisation, the core of cloud technology that allows the flexible management of resources (including the creation of virtual systems), is done by the provider. In contrast to the service models of the public cloud, the stack on the left represents the private cloud, where full control over all layers is available, from the network to the application itself.
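To give an impression of what such a Lambda function looks like, here is a minimal sketch in Python. The `(event, context)` signature is the usual shape of a Python handler on AWS Lambda; the contents of `event` (a `name` key) and the response format are assumptions for this example, since they depend on the trigger that invokes the function.

```python
import json


def lambda_handler(event, context):
    """Entry point the platform calls whenever the configured trigger fires."""
    # 'name' is an assumed field; real events are shaped by the trigger.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local call for illustration; in the cloud, the provider invokes the handler.
    print(lambda_handler({"name": "cloud"}, None))
```

Everything below this function (runtime, OS, virtualisation, hardware) is the provider’s responsibility, which is exactly the division of labour the figure describes.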

Up next, we’ll have a closer look at the structure of server virtualisation techniques used in the cloud.

Technologies for virtualisation

Virtualisation is what makes the cloud possible in the first place. By virtualising systems, resources can be utilised efficiently. Several technologies exist for this, and they can span from the virtualisation level (see Figure 1) up to the application: hypervisors, virtual machines (VMs), unikernels and containers (and today also a combination of unikernels and containers). In the following, we will look more closely at the characteristics and structure of these virtualisation technologies, because they are, in my opinion, the key to security in the cloud (virtual machines should be familiar to everyone, so we won’t cover them separately).

Hypervisors

Hypervisors, also known as virtual machine monitors (VMMs), are the core of many products for server virtualisation. They enable simultaneous execution and control of several VMs and distribute the available capacities. Hypervisors are lean, robust and performant, because they contain only the software components needed to achieve and manage virtualisation of operating systems (OSes), which reduces the attack surface. Figure 2 shows the two different types of hypervisors.

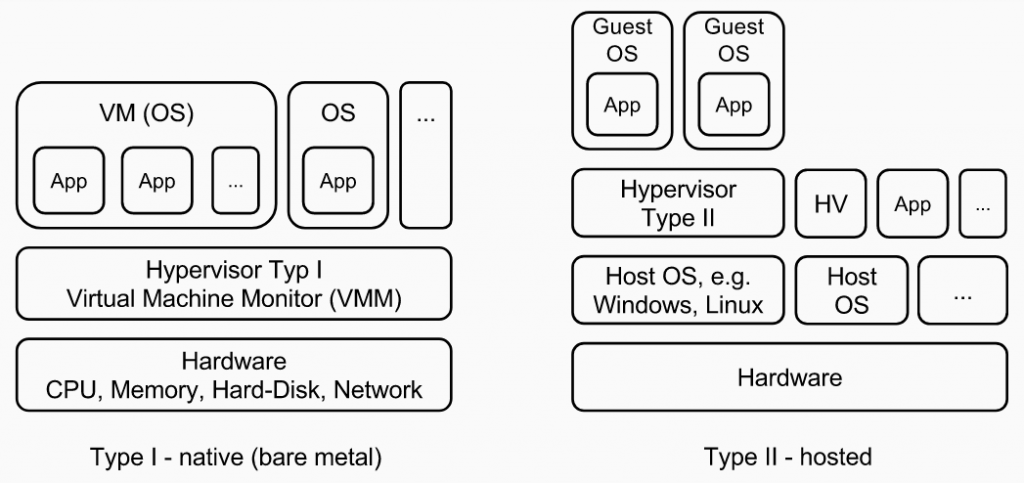

Figure 2: Types of hypervisors

Type I is referred to as a native or bare metal hypervisor, because it runs directly on the hardware and can access it directly. Multiple VMs can be created on the hypervisor itself, and multiple applications can in turn run on each VM. A well-known type I hypervisor is Xen. Type II is called a hosted hypervisor, because it runs on top of a host OS. VMs can be created on the hypervisor itself (they are called guest OSes here). The logical separation of the entities is shown by the indicated boundaries. Vertical boundaries represent a certain security barrier or isolation. Malicious code primarily spreads vertically. If, for example, a hypervisor is infected, the attacker can enter the components that run on top of it and control them, unless special security precautions have been taken in the overlying layers.
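The vertical spread described above can be pictured as a walk up the stack. The following Python sketch uses an invented example stack (`RUNS_ON_TOP`) and assumes the worst case in which no layer has any additional protection; it simply lists everything that sits above a compromised component.

```python
from collections import deque

# Each component maps to the components running directly on top of it
# (a made-up stack with one hypervisor, two VMs and three apps).
RUNS_ON_TOP = {
    "hardware": ["hypervisor"],
    "hypervisor": ["vm-a", "vm-b"],
    "vm-a": ["app-1", "app-2"],
    "vm-b": ["app-3"],
}


def exposed_components(compromised: str) -> list[str]:
    """Return every component above the compromised one, layer by layer."""
    exposed: list[str] = []
    queue = deque([compromised])
    while queue:
        current = queue.popleft()
        for upper in RUNS_ON_TOP.get(current, []):
            if upper not in exposed:
                exposed.append(upper)
                queue.append(upper)
    return exposed


print(exposed_components("hypervisor"))
print(exposed_components("vm-b"))
```

A compromised hypervisor exposes both VMs and all three apps, whereas a compromised `vm-b` only exposes `app-3`. The lower in the stack the infection happens, the more is at risk.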

Unikernels

Unikernels are specialised, single-address-space machine images created using library operating systems (Lib OSes; a Lib OS has only one virtual address space). In contrast to normal OSes, they are not designed for multi-user environments and general-purpose computing. Unikernels contain only the minimal set of (operating system) libraries needed to run an app. To create a unikernel, the libraries, the app and the configuration code are compiled together. The result is a system optimised as a whole, which is also referred to as specialisation and has the advantage of a reduced attack surface. Unikernels can run directly on the hardware or on hypervisors.

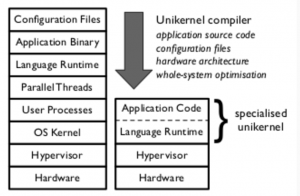

Figure 3: Specialised unikernel

(Source: https://mirage.io/wiki/technical-background)

Figure 3 shows the reduced codebase of a specialised unikernel in contrast to the software stack of a common OS or a VM. Unikernels only have about 4% of the amount of code of a comparable OS, making the footprint and the attack surface very small. As mentioned above, a Lib OS has only one virtual address space. This means that each specialised unikernel, and thus each application, has exactly one address space of its own. This isolates its processes from other entities. As a result, malicious code cannot spread horizontally. Unikernels are also particularly fast. If a request is sent to a unikernel, it can boot and answer the request before the request times out. In summary, unikernels follow a service-oriented or microservices software architecture. One example of a Lib OS used to create unikernels is MirageOS.

Containers

Loosely speaking, a container packages everything that is needed to run a piece of software. Containers only include the libraries and settings necessary to run that software (no full OSes, as opposed to VMs). Thus, containers are efficient, lightweight and self-contained (isolated) systems. The software they contain will run the same regardless of the operating system they are deployed on. The leading software for building containers is Docker.

Let’s now look more closely at the structure of containers.

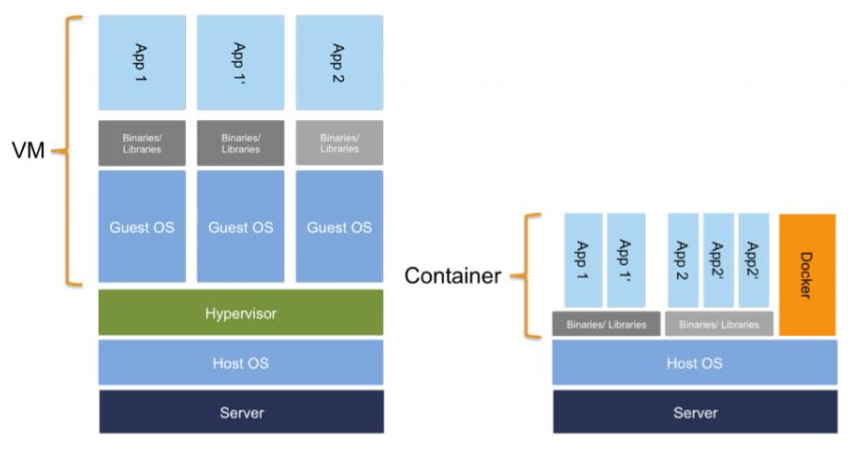

Figure 4: Virtual machines vs. Docker containers

(Source: https://www.computerwoche.de/a/docker-in-a-nutshell,3218380)

Figure 4 shows virtualisation with a type II hypervisor and VMs on the left-hand side, and virtualisation with containers on the right-hand side. As we can see, different containers (App 1 and App 2 on the right) are isolated from each other, but instances of the same app share the same binaries or libraries, and different containers even build on the same operating system (the orange field on the right represents the Docker engine, the software for creating containers, a process called containerisation). Virtualisation with a hypervisor (on the left), by contrast, creates a separate guest OS with its own binaries and libraries for each app. We can conclude that containers are a slimmer alternative to virtualisation via VMs, but that they offer considerably weaker isolation and are therefore weaker in terms of security.

The kernel on which a container builds includes control mechanisms for file systems, the network, application processes and so on. If a kernel is compromised, all of this functionality can be exploited to access the containers that build on the kernel and to influence or control them. A hypervisor, on the other hand, offers much less functionality that can be exploited than a kernel does. To counteract this problem, there is now a solution that combines containers and unikernels. It was introduced after Unikernel Systems (the organisation behind the open-source unikernel software) joined Docker, because containers and unikernels pursue the same goals: isolation and specialisation.

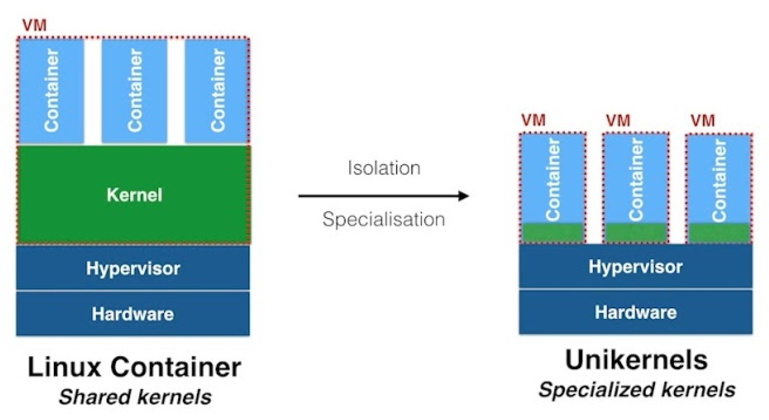

Figure 5: Shared kernel vs. specialised kernels

(Source: https://www.youtube.com/watch?v=X1Lfox-T0rs)

Figure 5 shows a typical shared kernel with several containers running on it on the left-hand side. On the right-hand side, we see specialised kernels (green), each of which runs a single container (each specialised kernel is a unikernel). Thanks to the combination of unikernel and container, the desired isolation can now be achieved, and the additional specialisation offers the advantage of a reduced attack surface.
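The difference between the two sides of the figure can also be expressed as a tiny Python sketch. The container and kernel names are invented, and the model assumes the worst case in which a compromised kernel gives access to every container running on it.

```python
def affected_containers(kernel_of: dict[str, str], compromised_kernel: str) -> list[str]:
    """List the containers that run on the compromised kernel."""
    return sorted(c for c, kernel in kernel_of.items() if kernel == compromised_kernel)


# Left-hand side: all containers share one host kernel.
shared = {"app-1": "host-kernel", "app-2": "host-kernel", "app-3": "host-kernel"}
# Right-hand side: every container has its own specialised kernel (unikernel).
specialised = {"app-1": "unikernel-1", "app-2": "unikernel-2", "app-3": "unikernel-3"}

print(affected_containers(shared, "host-kernel"))
print(affected_containers(specialised, "unikernel-2"))
```

With a shared kernel, one compromise affects all three containers; with specialised kernels, it stays confined to the single container on that kernel.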

We could go deeper into the different virtualisation technologies and see in detail how their components are built, connected and communicate with each other. But this would stretch the already very extensive scope of this post even further, so we will leave it at that for now.

That’s it for the first part of the two blog posts about cloud security. I would like to thank everyone who has made it to this point (that was surely not easy ;-P). Let’s quickly recap what we’ve learned in this post and what comes in the next one. We looked at the latest figures on the cloud market, and we saw that cloud users have a need for security, which is best met by eliminating technical and human weaknesses. In addition, we got to know the different concepts and technologies used in the cloud and have already drawn some conclusions about the security of the different technologies for server virtualisation.

In the next post, we will look at the vulnerabilities and threats of cloud computing. We will also look at current scientific papers dealing with the security of the cloud. To conclude, we will sum up and look ahead to the future of cloud security.

If you have any questions or comments about this post, please let me know by leaving a comment. I am really looking forward to your feedback! And I’m already looking forward to welcoming you to the next blog post, where it is time to go deeper into the security aspects of the cloud! So, stay tuned and see you soon!

Sources (Web)

All sources were last accessed on September 3, 2017.

- IDC Central Europe GmbH: IDC Infografik – Hybrid Cloud in Deutschland 2016 (2016), http://www.idc-central.de/files/infografik_hybrid_cloud2016/

- Intel Corporation: IDC-Studie: Deutsche Unternehmen setzen vermehrt auf Hybrid Clouds (n.d.), https://www-ssl.intel.com/content/www/de/de/cloud-computing/idc-studie-deutsche-unternehmen-setzen-vermehrt-auf-hybrid-clouds.html

- IDC Central Europe GmbH: IDC Infografik – Cloud Computing in Deutschland 2017 (2017), http://www.idc-central.de/files/infografik_cloud_computing2017/IDC_Infografik_Cloud_Computing_2017-DE.pdf

- Akamai Technologies: Cloud-Architektur (n.d.), https://www.akamai.com/de/de/resources/cloud-architecture.jsp

- A. Pols and M. Vogel: Cloud Monitor 2017: Eine Studie von Bitkom Research im Auftrag von KPMG Pressekonferenz (March 14, 2017), https://www.bitkom.org/Presse/Anhaenge-an-PIs/2017/03-Maerz/Bitkom-KPMG-Charts-PK-Cloud-Monitor-14032017.pdf

- C. Pütter: Die 10 wichtigsten IT-Trends bis 2018 (May 15, 2017), IDG Business Media, https://www.cio.de/a/die-10-wichtigsten-it-trends-bis-2018,3247944

- O. Schonschek: Datenschutz-Grundverordnung – was Cloud-Nutzer wissen müssen (May 8, 2017), IDG Business Media, https://www.cio.de/a/datenschutz-grundverordnung-was-cloud-nutzer-wissen-muessen,3330645

- IDC Central Europe GmbH: IDC Infografik – Software Defined Infrastructure in Deutschland 2016: Agile IT-Infrastrukturen als Basis für die digitale Transformation (2016), http://www.idc-central.de/files/infografik_software_defined_infrastructure2016/IDC_Infografik-Software_Defined_Infrastructure_2016-DE.pdf

- M. Rouse: Definition: Infrastructure as Code (IAC) (February 2016), TechTarget, http://www.searchenterprisesoftware.de/definition/Infrastructure-as-Code-IAC

- Rackspace US, Inc.: Was ist Cloud Computing? (n.d.), https://www.rackspace.com/de-de/cloud/cloud-computing

- cio.de: Cloud Computing (n.d.), IDG Business Media, https://www.cio.de/k/cloud-computing,3503

- ITwissen.info: XaaS (anything as a service) (February 28, 2013), DATACOM Buchverlag GmbH, http://www.itwissen.info/XaaS-anything-as-a-service-Anything-as-a-Service.html

- ITwissen.info: Hypervisor (February 6, 2014), DATACOM Buchverlag GmbH, http://www.itwissen.info/Hypervisor-hypervisor.html

- Wikipedia: Unikernel (August 12, 2017), https://en.wikipedia.org/wiki/Unikernel

- Wikipedia: Single address space operating system (February 29, 2016), https://en.wikipedia.org/wiki/Single_address_space_operating_system

- Wikipedia: Virtual address space (August 21, 2017), https://en.wikipedia.org/wiki/Virtual_address_space

- P. Rubens: What are containers and why do you need them? (June 27, 2017), IDG Communications, Inc., http://www.cio.com/article/2924995/software/what-are-containers-and-why-do-you-need-them.html
