Autonomous cars are vehicles that can drive to a predetermined destination in real traffic without the intervention of a human driver. To ensure that the car gets from A to B as safely and comfortably as possible, various precautions must be taken. The following sections explain these precautions through a series of questions and security concepts, and further questions address typical issues in the field of autonomous driving.

What happens if one of the systems or sensors fails?

A complete failure of the machine perception or the sensors must not occur. If it did, the car would be driving blind and the probability of an accident would be correspondingly high. For this reason, the sensors are designed to be redundant.

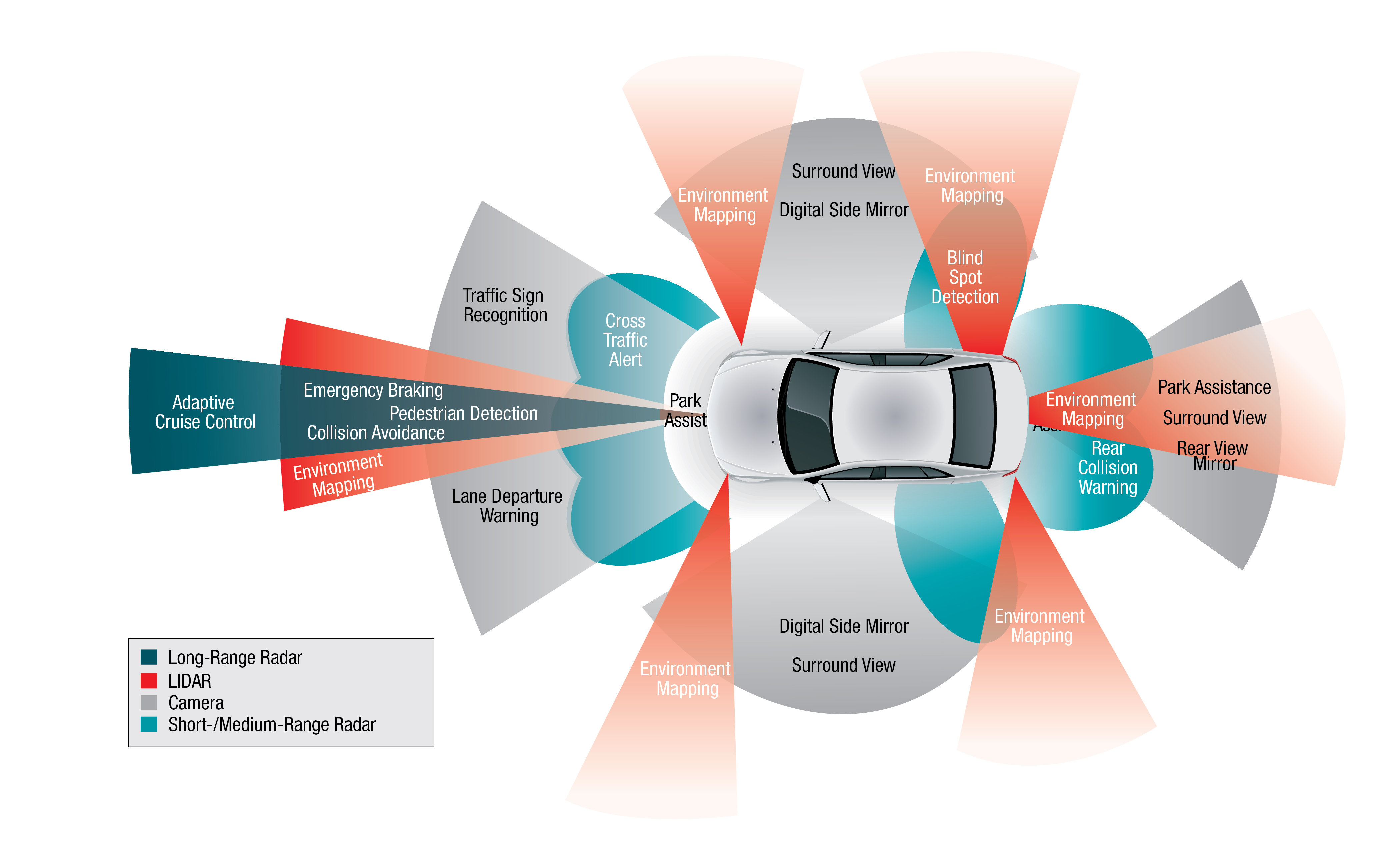

Such redundancy is provided by so-called multi-sensor systems (see Figure 1). These systems fuse the data from different sensors and sensing principles so that a partial system failure can be compensated. For example, if both radar and lidar sensors are installed, both provide distance measurements, albeit with different quality and different detection ranges. Because the measurements are of the same kind, the two sensors can back each other up.
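To illustrate how redundant distance measurements can back each other up, the following Python sketch fuses radar and lidar readings with an inverse-variance weighted mean and simply skips a sensor that delivers no reading. The `Measurement` structure, the variance values and the choice of inverse-variance weighting are assumptions for illustration; production multi-sensor systems typically use more elaborate methods such as Kalman filters.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Measurement:
    """One distance reading to the same object (hypothetical structure)."""
    sensor: str
    distance_m: Optional[float]  # None means the sensor delivered no valid reading
    variance: float              # assumed measurement variance in m^2


def fuse_distances(readings: Sequence[Measurement]) -> Optional[float]:
    """Inverse-variance weighted mean over all sensors that are still working.

    More precise sensors (lower variance) get a higher weight. A failed sensor
    is skipped, so the remaining sensors still yield an estimate; only if all
    sensors fail is None returned.
    """
    valid = [m for m in readings if m.distance_m is not None and m.variance > 0]
    if not valid:
        return None  # total perception failure: no estimate possible
    weights = [1.0 / m.variance for m in valid]
    weighted_sum = sum(w * m.distance_m for w, m in zip(weights, valid))
    return weighted_sum / sum(weights)
```

If radar and lidar both report a distance with equal variance, the result is their average; if the lidar fails, the radar reading alone is used.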

How can the risk of a failure be reduced or a problem identified?

Recognizing risky situations is a major challenge. External events must be captured by the environment perception and interpreted correctly so that the vehicle can react appropriately. In addition, technical faults in the vehicle and in the vehicle control system must be detected. While driving, a human driver watches the warning and indicator lamps and notices changes, for example those caused by technical defects. Since an autonomous vehicle has no driver, it needs integrated sensors and functions that detect technical defects and faults and, depending on their severity, determine the vehicle's current and future performance and the range of functions still available. Because a vehicle with an automated vehicle guidance system is highly complex, this produces a large number of measured values. From these, a self-representation of the vehicle is built, which is used to assess the current risk as a function of the situation and the vehicle's performance.
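The self-representation described above can be pictured, in a strongly simplified form, as a mapping from detected faults and their severity to a restriction of the vehicle's functions. The sketch below assumes hypothetical severity levels, fault names and speed limits; it only shows the principle that the most severe active fault determines the remaining performance.

```python
from enum import IntEnum
from typing import Dict, Tuple


class Severity(IntEnum):
    """Hypothetical severity levels for detected faults."""
    NONE = 0
    MINOR = 1     # e.g. a dirty sensor with reduced range
    MAJOR = 2     # e.g. loss of one of several redundant sensors
    CRITICAL = 3  # e.g. a fault in the braking system


# Hypothetical policy: worst active fault -> maximum permitted speed in km/h.
SPEED_LIMIT_KMH: Dict[Severity, float] = {
    Severity.NONE: 130.0,
    Severity.MINOR: 80.0,
    Severity.MAJOR: 30.0,
    Severity.CRITICAL: 0.0,  # bring the vehicle to a safe stop
}


def assess(active_faults: Dict[str, Severity]) -> Tuple[Severity, float]:
    """Return the worst active fault severity and the resulting speed limit."""
    worst = max(active_faults.values(), default=Severity.NONE)
    return worst, SPEED_LIMIT_KMH[worst]
```

For example, `assess({"front_lidar": Severity.MAJOR, "tire_pressure": Severity.MINOR})` returns `(Severity.MAJOR, 30.0)`: the lost lidar, not the minor tire-pressure warning, limits the vehicle.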

What is the biggest human-machine problem?

Google’s autonomous vehicles have so far been accident-free in the sense that they have not themselves caused any accidents. However, there have already been several rear-end collisions because human drivers could not correctly anticipate the cautious driving style of the autonomous cars. In one case, an autonomous vehicle braked abruptly for a pedestrian who appeared to be about to cross the street. The driver of the following car had not expected such caution, and a collision resulted.

While this speaks for the safety of autonomous vehicles, which can in principle reduce accidents, it also shows that they are at considerable risk when sharing the road with human drivers. The reason is that the rigidly rule-bound and overly cautious driving style of the software meets the hasty, inattentive and emotional driving style of people, who interpret the rules creatively or ignore them altogether.

This dilemma is aggravated, at least as long as autonomous and human-driven vehicles share the roads: according to Bloomberg, accidents involving autonomous vehicles and human drivers occur twice as often as accidents between human drivers, who seem to be better at anticipating each other.

Can autonomous vehicles reduce the number of accidents?

Improved safety is expected to be one of the advantages of autonomous driving. However, because comparatively few autonomous vehicles are currently on the road, a reduction in accidents is difficult to forecast. It is therefore worth looking at the driver assistance systems already in use and their effects since their introduction. With ESP (Electronic Stability Program), for example, the number of accidents was reduced by up to 25% in some cases. In general, the number of accidents, or at least of serious accidents, has decreased as a result of assistance systems. Applying this reasoning to autonomous driving, and assuming that more and more autonomous cars will be on the road, the number of accidents can be expected to decrease gradually. This view is supported by, among others, a study by Daimler, which assumes that 10% of accidents can be avoided by 2020, 50% by 2050 and 100% by 2070. Technical failures are not taken into account in these figures. Overall, because data and function descriptions are still missing and the introduction dates and functional limits are unknown, a reliable forecast of the safety effect of autonomous vehicles is not yet possible.

How does the car behave in dilemma situations?

In everyday traffic, a chain of several events can occasionally lead to a situation that cannot be resolved without personal injury. In such so-called dilemma situations, an autonomous car must select, within a very short time, an option for action that may cause personal injury but keeps the overall harm as low as possible. Material damage and violations of traffic law are also conceivable outcomes, but they have comparatively lower priority.

The following paragraphs present two situations, the first of which can be resolved without a collision while the second can lead to a dilemma, together with the available options (see Figure 2).

In the first situation, the vehicle is driving in its lane, with other vehicles parked at the side of the road. A person unexpectedly steps onto the road from between the parked vehicles. The vehicle now has several ways to avoid a collision with the pedestrian. In option one, it brakes and stops in front of the pedestrian. In option two, it swerves into the adjacent lane and avoids the collision, but crosses the continuous line and thereby violates the road traffic regulations.

The second situation is similar to the first, except that another vehicle is approaching the autonomous vehicle from the opposite direction. Assuming that braking can no longer prevent a collision with the pedestrian, the autonomous vehicle faces a dilemma:

Colliding with the pedestrian can result in serious injury (option two). Swerving into the adjacent lane leads to a collision with the oncoming vehicle and may also injure the pedestrian (option three). Deliberately colliding with the parked vehicles to reduce its own speed is also conceivable (option four), but it is highly uncertain whether the pedestrian would be spared. Such situations therefore require ethical principles to be implemented in the decision-making software of the vehicle control system.
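The priority order stated for dilemma situations (personal injury first, then material damage, then traffic-law violations) can be expressed as a lexicographic comparison. The following sketch is purely illustrative: the `Option` fields and all numbers are hypothetical, and it does not answer the ethical question of how injuries to different people should be weighed against each other.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Option:
    name: str
    injury_risk: float      # hypothetical expected personal injury (0 = none)
    material_damage: float  # hypothetical expected material damage (0 = none)
    violates_law: bool      # e.g. crossing a continuous line


def priority_key(option: Option) -> Tuple[float, float, bool]:
    # Python compares tuples element by element, so injury risk dominates,
    # material damage only breaks ties, and a law violation (True > False)
    # only matters when both of the above are equal.
    return (option.injury_risk, option.material_damage, option.violates_law)


def choose(options: List[Option]) -> Option:
    """Pick the option with the lowest priority key."""
    return min(options, key=priority_key)
```

In the first situation, braking and swerving both avoid injury and damage, so the law violation decides in favor of braking. With this ordering, any difference in injury risk outweighs any amount of material damage, which is one possible reading of "lower priority"; a weighted cost function would be an alternative design.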

One possible solution to this problem is vehicle-to-vehicle communication between the autonomous vehicle and the oncoming vehicle. The two vehicles could coordinate a joint maneuver that avoids a collision with the pedestrian (option five).

Nevertheless, the vehicle must also be able to operate without communicating with other road users and the infrastructure, since such communication is unlikely to be available nationwide and with all road users, especially in the early years.

Vehicle guidance must therefore also be possible using the on-board sensors alone. This self-contained autonomous operation places the highest demands on the vehicle control system, yet it is currently the only option for use in road traffic.

Further dilemma situations can be explored as a self-test on the Moral Machine website. It presents various scenarios, and the user decides how the car should react. At the end of the test, the answers are evaluated across several categories.

Having addressed these questions, the following sections present security concepts for protecting the vehicle against external influences.

Increased security requirements

Fully autonomous cars are steadily becoming a reality. Once the technical requirements for the necessary system hardware, sensors and traffic infrastructure have been met, such vehicles could go into series production within a few years.

At the same time, vehicles are increasingly networked and communicate with one another, so their IT components in particular must be protected against attacks. This calls for technologies from the IT and telecommunications sectors that are comparatively new to the automotive industry, such as multi-level firewalls, encryption and authentication methods, and secure software updates over the Internet.

Layered security components

Most attacks on IT systems, including those in vehicles, follow a similar pattern: attackers first look for a vulnerability that can be reached remotely. When developing a vehicle's electronic infrastructure, all conceivable threats must therefore be considered, including their potential damage and how vulnerable each IT device actually is to an attack. Because attackers are always looking for vulnerabilities, all areas of the system must be equally well secured so that no unprotected backdoor is left open.

Here, it has proven effective to combine several layers of security technologies and techniques, so that an attacker who tricks or bypasses one individual measure still faces the others.

In addition, the entire connectivity and IT infrastructure is protected at several levels by security solutions that operate in series and in parallel. This is done, for example, by isolating safety-critical ECUs (Electronic Control Units) from non-safety-relevant units and by protecting the car's individual networks with a firewall-equipped gateway.

External interfaces

Although physical interfaces are the easiest way into the heart of a car, they are relatively well protected because they are located inside the vehicle. Autonomous cars, however, will have to be linked via external wireless interfaces to the traffic infrastructure, to other road users and to the cloud, e.g. for the dynamic electronic horizon. Attacks therefore focus on the growing number of external wireless communication channels. If these are compromised, the car's internal systems can also be attacked remotely, which creates a new threat situation. As a result, car manufacturers have to plan further ahead than before to protect their vehicles.

For example, all open communication channels must be protected against misuse by encryption based on a dynamic PKI (Public Key Infrastructure). Data and commands must also be protected against manipulation, among other things by verifying the integrity of all transmitted data and commands. To rule out unauthorized access as far as possible, all connected end devices must also authenticate each other. This ensures that only intended communication partners are connected to each other.
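Mutual verification of end devices can be sketched as a challenge-response exchange in which each side proves that it holds a key by answering a fresh random challenge. Note that this sketch swaps the PKI mentioned above for a pre-shared symmetric key with HMAC-SHA256 from Python's standard library, purely to keep the example self-contained; a real PKI would use certificates and asymmetric signatures. The `Endpoint` class and the device names are hypothetical.

```python
import hashlib
import hmac
import secrets


class Endpoint:
    """A communication partner holding a shared key (simplified stand-in for PKI)."""

    def __init__(self, name: str, key: bytes):
        self.name = name
        self._key = key

    def respond(self, challenge: bytes) -> bytes:
        # Prove possession of the key without sending it; binding the own name
        # into the MAC ties the response to this identity.
        return hmac.new(self._key, self.name.encode() + challenge, hashlib.sha256).digest()

    def verify(self, peer_name: str, challenge: bytes, response: bytes) -> bool:
        expected = hmac.new(self._key, peer_name.encode() + challenge, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)  # constant-time comparison


def mutual_authenticate(a: Endpoint, b: Endpoint) -> bool:
    """Both sides issue a fresh random challenge that the other must answer."""
    challenge_a = secrets.token_bytes(16)
    challenge_b = secrets.token_bytes(16)
    a_trusts_b = a.verify(b.name, challenge_a, b.respond(challenge_a))
    b_trusts_a = b.verify(a.name, challenge_b, a.respond(challenge_b))
    return a_trusts_b and b_trusts_a
```

Because each challenge is random and used only once, a recorded response cannot simply be replayed in a later session.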

In-vehicle network

Protecting the wireless interfaces is critical for all networked vehicles, and especially for autonomous ones. However, the wired interfaces to and within the car must also be protected as well as possible, since external access can never be ruled out completely. The next level of security is therefore the internal data network. To some extent, it is the nervous system of the IT components, since it connects the external terminals to the vehicle electronics as well as the individual control units to each other. As with the external interfaces, all security-relevant data in the internal network must be encrypted and the commands exchanged between ECUs must be authenticated to prevent data misuse.

To prevent manipulation of the vehicle IT by attacks from within the network, the individual ECUs should be physically or logically separated from each other, for example by firewalls or separate subnetworks. Security can be further enhanced by re-authenticating the control units each time the engine is started and at irregular intervals during operation.
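Authenticating commands between ECUs typically means attaching a message authentication code (MAC) and a freshness value to each message, so that manipulated or replayed messages can be rejected; AUTOSAR's Secure Onboard Communication (SecOC) follows a similar idea. The sketch below is a simplified illustration, not an implementation of SecOC: the frame layout, the 64-bit counter and the 8-byte truncated MAC are assumptions, and a classic CAN frame would be too small to carry them.

```python
import hashlib
import hmac
import struct
from typing import Optional

COUNTER_LEN = 8  # assumed 64-bit freshness counter
MAC_LEN = 8      # assumed truncated MAC length in bytes


def _mac(key: bytes, msg_id: int, counter: int, payload: bytes) -> bytes:
    # The MAC covers message ID, counter and payload, so none of them can be
    # changed without invalidating the tag.
    header = struct.pack(">IQ", msg_id, counter)
    return hmac.new(key, header + payload, hashlib.sha256).digest()[:MAC_LEN]


def protect(key: bytes, msg_id: int, counter: int, payload: bytes) -> bytes:
    """Sender side: frame = counter | payload | truncated MAC."""
    return struct.pack(">Q", counter) + payload + _mac(key, msg_id, counter, payload)


class Receiver:
    """Receiver side: accepts only authentic frames with a fresh counter."""

    def __init__(self, key: bytes, msg_id: int):
        self._key = key
        self._msg_id = msg_id
        self._last_counter = -1

    def accept(self, frame: bytes) -> Optional[bytes]:
        if len(frame) < COUNTER_LEN + MAC_LEN:
            return None  # malformed frame
        (counter,) = struct.unpack(">Q", frame[:COUNTER_LEN])
        payload, tag = frame[COUNTER_LEN:-MAC_LEN], frame[-MAC_LEN:]
        if not hmac.compare_digest(_mac(self._key, self._msg_id, counter, payload), tag):
            return None  # manipulated or forged message
        if counter <= self._last_counter:
            return None  # replay of an old message
        self._last_counter = counter
        return payload
```

The counter check is what stops an attacker from recording a valid command, such as a braking request, and injecting it again later.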

Electronic Control Units

Once the external interfaces and the internal network are protected, the control units themselves, i.e. the individual data centers of the vehicle, must also be secured. They generate, combine and manage huge amounts of data, which makes them a tempting target for attackers. Besides data theft, there is also the risk that attackers could trigger malfunctions. This risk is countered by encrypting both the program code and the data in memory and checking them for manipulation at regular intervals.
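The periodic check for manipulation can be sketched as recomputing a keyed fingerprint of the program memory and comparing it with a reference value stored when the ECU was flashed. This sketch covers only the integrity check, not the encryption of code and data; the function names and the use of HMAC-SHA256 are assumptions.

```python
import hashlib
import hmac


def firmware_tag(key: bytes, image: bytes) -> bytes:
    """Keyed fingerprint of a firmware image, computed when the ECU is flashed."""
    return hmac.new(key, image, hashlib.sha256).digest()


def is_untampered(key: bytes, image: bytes, reference_tag: bytes) -> bool:
    """Recompute the fingerprint at runtime and compare it in constant time."""
    return hmac.compare_digest(firmware_tag(key, image), reference_tag)
```

A keyed fingerprint is used instead of a plain hash so that an attacker who modifies the code cannot simply recompute a matching reference value. An ECU would run such a check on a timer and switch to a safe state if it fails.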

The central gateway plays a key role in managing and securing the ECUs. It not only uses a firewall to shield the different network domains from each other but, acting as a router, also checks the legitimacy of each message before forwarding it.
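The gateway's role as firewall and router can be sketched as a default-deny routing table: a message is forwarded to another network domain only if that flow is explicitly whitelisted and the message passes a basic plausibility check. The domain names, message IDs and rules below are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional, Tuple


@dataclass(frozen=True)
class Message:
    source_domain: str  # e.g. "infotainment", "powertrain" (hypothetical names)
    msg_id: int
    payload: bytes


# Hypothetical whitelist: (source domain, message ID) -> domains it may reach.
ROUTING_RULES: Dict[Tuple[str, int], FrozenSet[str]] = {
    ("powertrain", 0x100): frozenset({"infotainment"}),  # vehicle speed for the display
    ("chassis", 0x200): frozenset({"powertrain"}),       # wheel speeds
}

MAX_PAYLOAD = 8  # classic CAN data field length in bytes


def route(msg: Message, target_domain: str) -> Optional[Message]:
    """Forward only whitelisted, well-formed messages; drop everything else."""
    allowed = ROUTING_RULES.get((msg.source_domain, msg.msg_id), frozenset())
    if target_domain not in allowed:
        return None  # firewall: this flow is not permitted
    if len(msg.payload) > MAX_PAYLOAD:
        return None  # plausibility check failed
    return msg
```

The default-deny design means that, for example, the infotainment domain cannot send commands into the powertrain network unless such a flow has been added to the rules on purpose.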

Conclusion

On the one hand, many questions concerning the safety of autonomous vehicles can already be answered. Others remain open, firstly because not enough data is available yet and secondly because current knowledge is not yet sufficient. For example, the claim that the number of accidents will actually decrease is still purely a prediction.

On the other hand, with regard to system security, several concepts already exist for protecting an autonomous vehicle from attacks. Nevertheless, more data will need to be gathered to make these systems even more resistant to attacks.

Research Issues

- How can the car react to incorrect detection of objects?

- How can the car and the interfaces be protected against attacks?

- What expectations do users have for autonomous driving?

- How do attitudes towards driving and car-handling practices change with the introduction of automated vehicles?

- What kind of “ethics” is expected from the machine “car”?

References

- https://www.all-electronics.de/schutz-vor-hackern-beim-autonomen-fahren_digitale_verkehrssicherheit/

- https://www.heise.de/tp/features/Autonome-Fahrzeuge-Mensch-Maschine-Probleme-3377411.html

- Autonomes Fahren – Technische, rechtliche und gesellschaftliche Aspekte

- http://moralmachine.mit.edu/hl/de
