Artificial intelligence has great potential to improve many areas of our lives in the future. But what happens when these AI technologies are used maliciously?

Sure, one big topic is autonomous weapons, the so-called “killer robots”. But besides our physical security, what about our digital security? This blog post discusses how the malicious use of artificial intelligence will threaten, and is already threatening, our digital security.

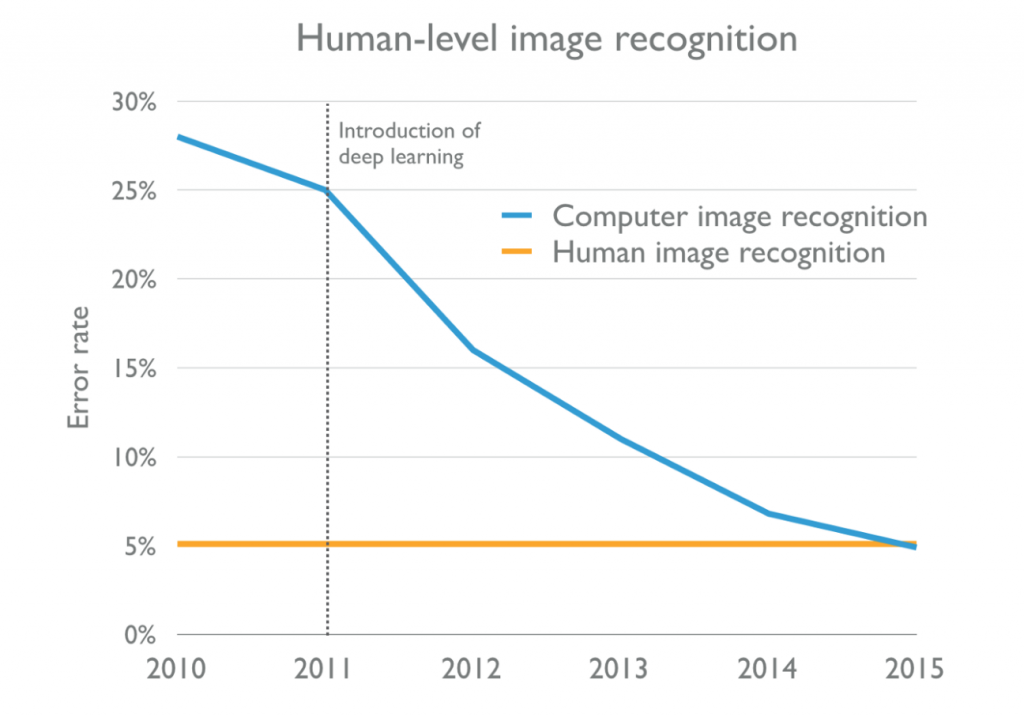

Artificial intelligence is currently one of the fastest-developing technologies, and some even call it the ‘next era of computing’. And that’s for a reason: at least since around 2011, when deep learning became established as an approach to machine learning, the performance of AI applications, e.g., for image recognition, has risen to a superhuman level on some tasks. Easier access to big data and more computing power have reinforced this trend as well.

Still, AI has not yet reached its full potential, and there are many research areas that still need to be covered. One of the topics that has not received enough attention yet is security or, to be more specific, digital security.

In this blog post I would like to give you a short overview of this overlooked but important topic, i.e., how the malicious use of AI already threatens our digital security and what we can expect within the next few years.

Artificial Intelligence and the Curse of its Dual-Use Nature

There are plenty of projects using AI that really are made and used for the common good: AI helps to prevent cancer, detects criminal activities, helps to rescue drowning people, etc. There is hope that we will see many more similar projects in the future, as artificial intelligence offers the potential to perform almost any task that requires human (or animal) intelligence.

But this potential is also the central issue. It’s both a curse and a blessing as, sadly, we all know that human intelligence is not used for the common good only.

Just like the human brain, artificial intelligence is a dual-use tool, and there are and always will be people who abuse AI technologies for malicious purposes.

An Already Existing Threat:

Malicious Use of AI in Social Media

Many AI technologies are already used maliciously and threaten our digital and political security. In the past few years we have experienced this trend especially in social media. Social media platforms give attackers both access to big data and a way to address people personally, which can become a “toxic” combination and creates an ideal base for an efficient attack.

1. Use of Data Mining / Profiling

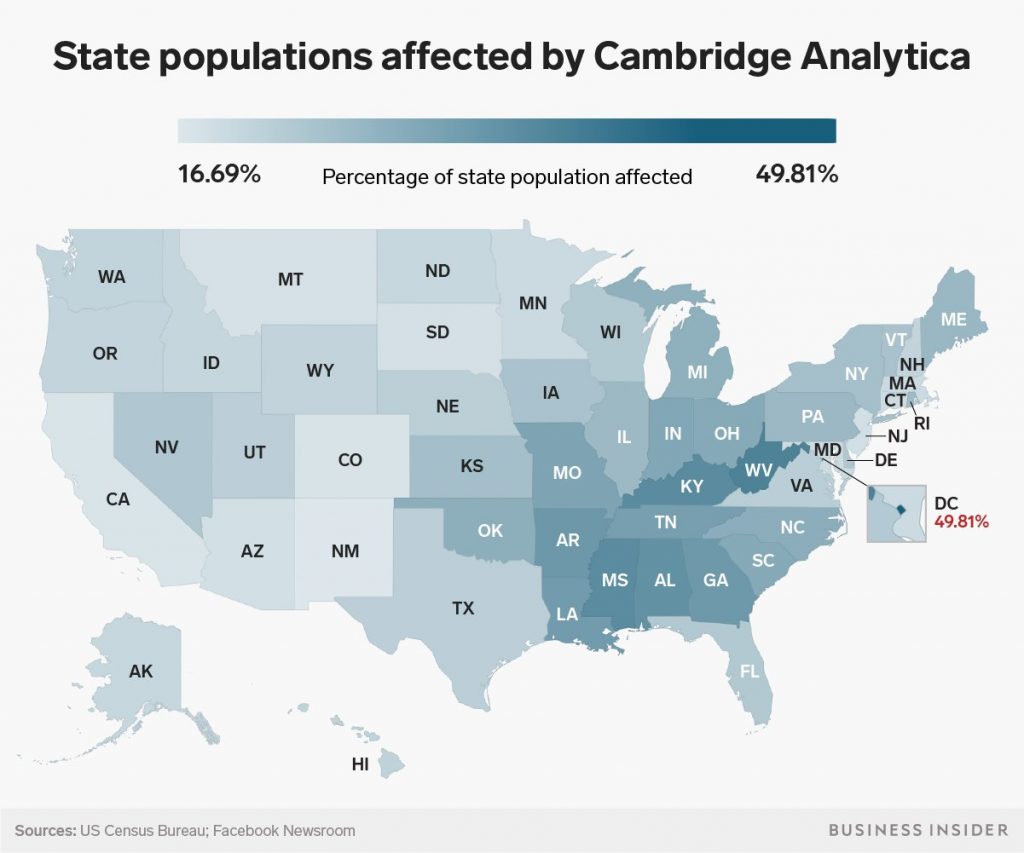

It’s no big news that social media platforms, particularly Facebook, earn money by using their users’ data to generate and display, e.g., customized advertising with the help of intelligent algorithms. Whether this already counts as malicious use will not be discussed here. But a famous example of how these AI technologies have already been abused to threaten the political security of U.S. citizens (and not only them) is the case of Cambridge Analytica and its influence on the 2016 presidential election. With the help of data mining for microtargeting, Cambridge Analytica was possibly able to influence millions of American voters.

The work of Cambridge Analytica is only one example of how the malicious use of data mining or profiling can have a negative impact on security. The same techniques could also fuel more fake news or customized content (e.g., customized prices depending on user data) in the future.

2. Use of Natural Language Processing / Generation

Natural language processing and generation have many different areas of application, e.g., translation and sentiment analysis on social media platforms. Synthesizing or analysing texts once required expensive and time-consuming human work; now a single intelligent algorithm can do it within seconds. What appears to be a beneficial technology for many people can unfortunately also be abused, for instance to automate spam or phishing attacks.

The American security company ZeroFox already showed in their case study #SNAP_R how easy and lucrative this could be for attackers who abuse these technologies. By using a neural network for data mining and natural language generation, they automatically generated customized “@mention” tweets containing phishing links.

Details of the study can be found in their presentation but in essence #SNAP_R works as follows:

- Set up fake profiles

- Take a list of random Twitter users

- Evaluate users’ vulnerability

- Collect data about most vulnerable users (topics, bag of words etc.)

- Feed the neural network with data

- Generate and post “@mention” Tweets with phishing link

The result is impressive: after two days they achieved a click-through rate of 30 to 66% (the range is this wide because they could not be sure whether all clicks came from real people or from other bots).
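To make the uncertainty in such a click-through range concrete, here is a small sketch of the arithmetic. It rests on an assumption of mine, not on the study's stated method: the lower bound counts only clicks that are not suspected to come from bots, and the upper bound counts every click. The function name and all numbers are hypothetical and are not the figures from the ZeroFox study.

```python
def click_through_range(tweets_sent, total_clicks, suspected_bot_clicks):
    """Return (lower, upper) click-through rate as fractions.

    lower: assumes every suspected bot click was indeed a bot
    upper: assumes every click came from a real person
    """
    if tweets_sent <= 0:
        raise ValueError("tweets_sent must be positive")
    if not 0 <= suspected_bot_clicks <= total_clicks:
        raise ValueError("suspected_bot_clicks must be between 0 and total_clicks")
    lower = (total_clicks - suspected_bot_clicks) / tweets_sent
    upper = total_clicks / tweets_sent
    return lower, upper


# Hypothetical numbers, chosen only to illustrate the calculation.
low, high = click_through_range(tweets_sent=200, total_clicks=120,
                                suspected_bot_clicks=50)
print(f"click-through rate between {low:.0%} and {high:.0%}")
```

The more clicks that cannot be attributed to a human with certainty, the wider the reported range becomes.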

Using a neural network to generate phishing attacks saves time and (probably) money while being highly effective: a dream for every attacker.

We will very likely see many intelligent and automated phishing, spam or social engineering attacks using artificial intelligence in the future.

New Threats:

Multimedia Generation and Manipulation

Audio, image and video data have become an essential part of our digital communication. Artificial intelligence was used early on to help us deal with media data in different ways, e.g., object recognition or media interpretation in general. Recently, media manipulation and generation technologies have also emerged, raising new possibilities but also new questions for our digital security.

1. Audio and Speech Generation

The ability to let Siri, Alexa and co. read any text aloud is nothing new to us, but how great would it be to have your favorite voice actor read all your files to you instead? Current AI technology is not that far from it! In 2016 Adobe presented its software Voco, which might be capable of exactly this (however, at the moment there is no news about when or whether the software will be released). The Canadian startup Lyrebird also makes it possible to create a vocal avatar: “a digital voice that sounds like you with only one minute of audio”. Although the results still sound somewhat artificial, it is just a matter of time until the technology reaches a deceptively realistic level.

Not only speech generation is already possible, but also the generation of sounds. A research group at MIT shows how “Visually Indicated Sounds” can be created with the help of machine learning. Their system “generates” appropriate sounds of a drumstick hitting objects just from a video given as input to a neural network (for more information I really recommend watching their video or reading the project's paper).

No doubt, these are impressive examples of how AI can be used for audio generation in the future. But what does this mean for our digital security?

Unfortunately, a technology that is capable of putting any possible words into the mouth of your own digital voice is also capable of doing this with any other person’s voice. So with just a small amount of original audio recordings of a targeted person, it will be easy to abuse this technology to spread audio-based fake news or to use it for social engineering (especially phone fraud).

At the Black Hat Conference 2018, John Seymour and Azeem Aqil showed in their presentation “Your Voice is My Passport” that it’s already possible to use AI-based technologies to outsmart voice authentication. Examples like this call the future of biometric authentication into question.

In general, it will be almost impossible for humans to distinguish real from fake recordings in the future, and it will be a big challenge for IT security to find ways for machines, at least, to detect the difference.

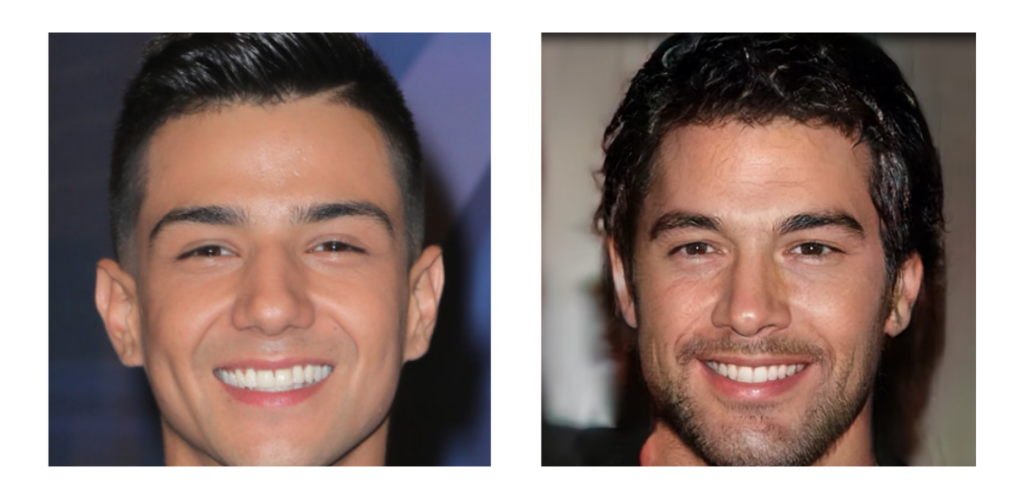

2. Image Generation

In times of Photoshop etc., image manipulation is nothing new to us, but machine-learning-based algorithms now enable us to generate completely new images. Here is an example: could you tell which of these images is a photo of a real person and which one was generated with the help of a Generative Adversarial Network by Karras et al.?

Image sources: https://arxiv.org/abs/1710.10196, https://cdn.playbuzz.com/cdn//9ced2191-d73f-4e3e-9181-824046fac4ed/2bda590b-182b-4c67-8793-8ffc1bd8b9ea.jpg

Here is the answer:

The left one is a photo of the Mexican singer Luis Coronel, and the other one shows a fictitious person.

Most probably, in the next few years it will be possible to generate high-resolution images not only of portraits but also of places, objects, animals and everything else one can imagine. What might be great for the stock photo industry or for image rights in general might also be used maliciously to create content for fake profiles and to make fake news look more plausible. In the end we will have to face the question: how can we distinguish real from fake images in the future? Again, a big challenge for IT security.

3. Video Generation and Manipulation

If we can already generate and manipulate audio and image data, it’s not surprising that new AI-based video manipulation technologies have also emerged in recent years. The research project Face2Face by the University of Erlangen-Nuremberg, the Max Planck Institute for Informatics and Stanford University shows how easy it is to manipulate a person’s facial expression in a video, even in real time (here is their demonstration video).

Face swapping in videos, so-called Deep Fakes, has also gone viral. Such videos can be created easily with a software called FakeApp (however, it seems their website has been taken down since June 18).

Especially in combination with speech generation, these technologies can become really dangerous for our security when used maliciously. They open the door to highly deceptive fake news videos: either by changing only some significant details, e.g., in an interview with a politician with the help of Face2Face and voice generation, or by creating a whole new video and mapping a target person’s face into it with Deep Fakes. And what will happen to the credibility of video evidence?

We will have to rethink our “seeing is believing” mindset.

Again, it will be a big challenge for IT to find a way to mitigate this problem.

In a Nutshell – What can we expect in the next years?

In summary, both existing and new threats will probably lead to the following problems in the next years:

- Attacks will become:

  - more automated

  - more finely targeted

  - more effective

- Personal data will be used and abused more than ever

- Distinction between real and fake content will be almost impossible for humans

- Fake news will be a bigger challenge than ever before: a small group of people will be able to manipulate public opinion (e.g., the Cambridge Analytica case)

- Biometric authentication might become insecure

How can we mitigate or even prevent the threats?

First of all, with dual-use technologies there is no real way to prevent them from being abused. The only option would be to prohibit malicious use, which may be somewhat promising for technologies that require scarce materials or expert knowledge, such as nuclear energy. But in the end a trained neural network is just a small file that can easily be distributed and adapted for use even by laypersons. Additionally, what counts as malicious use of AI is quite subjective, so a prohibition would be hard to draft in the first place.

Nevertheless, there is a lot we can do to mitigate the threats. Here are some suggestions depending on the different roles that are involved.

1. User

In simple terms: the fewer people fall for fake news, the less fake news will be “produced”. So what one can do as a user is:

- Refocus on the “real” threats of AI (remember: AI is not only about killer robots)

- Call attention to the problem

- Be more careful with your personal data

- Participate in the fight against fake news

- Use your rights (e.g., when your personal data is used without justification)

2. Governments / Institutions / Corporations

The government and institutions can have a big positive influence on the fight against the threats. What they can do is:

- Support more collaboration with technical researchers

- Create ethical standards

- Support Beneficial Intelligence

- (Re)inform / educate about the threats

- Provide platforms to report fake news

- Extend privacy protection

- Consider industry centralization / centralized blacklisting

3. AI Developer / AI Researcher

Of course, the majority of AI developers do not have any malicious intent when working on a new project. However, we have to keep in mind: “With Great Power Comes Great Responsibility”. In detail, we should:

- Take the dual-use nature of one’s work seriously

- Call attention to the problem

- Comply with ethical standards

- Limit the network to the task it is intended for (undirected intelligence vs. directed intelligence)

- Show failure transparency

- Consider whether the code should be made open source or not (open source is great but there might be some edge cases where it might be better to limit the source)

4. Security Developer / Security Researcher

In the end, the risk of attacks remains, and security developers and researchers will be needed to mitigate the problems. To be prepared for this they should:

- Initiate collaborations (e.g., FakeNewsChallenge)

- Use Game Days / White Hat Hacking with focus on AI-attacks

- Use AI against AI
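As a very rough illustration of the last point, “AI against AI” can start with something as simple as a learned text classifier that flags suspicious messages. The following is a minimal sketch of a naive Bayes classifier in plain Python. The training messages, labels and function names are made up for this example; real detection systems use far larger data sets and more capable models.

```python
import math
from collections import Counter

# Toy, made-up training messages (hypothetical, for illustration only).
TRAIN = [
    ("verify your account now click this link", "phish"),
    ("your password expires click here to login", "phish"),
    ("great talk at the conference yesterday", "ham"),
    ("see you at lunch tomorrow", "ham"),
]


def train(samples):
    """Count words per label and messages per label."""
    word_counts = {}
    doc_counts = Counter()
    for text, label in samples:
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    vocab = set()
    for counts in word_counts.values():
        vocab.update(counts)
    return word_counts, doc_counts, vocab


def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        score = math.log(doc_counts[label] / total_docs)  # class prior
        for word in text.lower().split():
            # Laplace (add-one) smoothing so unseen words do not zero out a class
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


MODEL = train(TRAIN)
print(classify("click this link to verify your login", MODEL))
```

With only four training messages this is a toy, but the same principle, learning what malicious content looks like from labeled examples, underlies many spam and phishing filters.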

And at this point I would like to propose some research questions:

- To what extent can the malicious use of AI already be mitigated in the architecture of the network?

- How can AI be used to detect multimedia fake news?

- To what extent can technical measures certify the authenticity of video, images and audio data?

- What impact will the expansion of image- and sound-generation have on the future of authentication?
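To give the question about certifying authenticity a concrete starting point: one well-known technical measure is to attach a cryptographic tag to a media file when it is published, so that any later manipulation can be detected. The sketch below uses Python's standard `hmac` and `hashlib` modules. The function names and the sample bytes are hypothetical, and the shared secret key is a simplifying assumption; a real scheme would more likely use public-key signatures so that anyone can verify a file without being able to forge a tag.

```python
import hashlib
import hmac


def sign_media(data: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the raw media bytes."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify_media(data: bytes, key: bytes, tag: str) -> bool:
    """Check that the media bytes still match the published tag."""
    expected = sign_media(data, key)
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, tag)


# Hypothetical example: stand-in bytes instead of a real video file.
key = b"publisher-secret-key"
original = b"\x00\x01 raw bytes of the original recording"
tag = sign_media(original, key)

manipulated = original + b" with an altered frame"
print(verify_media(original, key, tag))
print(verify_media(manipulated, key, tag))
```

Note that such a tag only proves that a file is unchanged since it was signed. It says nothing about whether the content was genuine in the first place, which is why the detection questions above remain open.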

More interesting, but also quite detailed, research questions have been formulated by the Future of Life Institute and can be found here.

Conclusion:

The malicious use of artificial intelligence will become a difficult problem for our digital and political security in the coming years. Users of social networks in particular will have to deal with influence campaigns, spear phishing and above all fake news. We will have to question the authenticity of audio, image and video data and can no longer simply believe what we see or hear.

However, it lies in our hands to influence how strong the threats will actually become. Most of all, it’s important to call attention to the threats and to initiate prevention measures. AI developers should be aware of their responsibility, and governments should support security research.

Artificial intelligence is a great opportunity, but we should remember Stephen Hawking’s words:

“Artificial Intelligence will be either the best or worst thing for humanity.”

… and it is up to us in which direction things will develop.

Sources & References

A big part of this blog post draws on the great report “The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation” (Miles Brundage et al., 2018) by researchers from the University of Oxford and other institutions.

Other resources and references are:

- #SNAP_R by ZeroFox – Weaponizing Data Science for Social Engineering. URL: https://www.blackhat.com/docs/us-16/materials/us-16-Seymour-Tully-Weaponizing-Data-Science-For-Social-Engineering-Automated-E2E-Spear-Phishing-On-Twitter.pdf [Accessed 2018-05-06]

- State Population affected by Cambridge Analytica: https://www.businessinsider.de/facebook-cambridge-analytica-affected-us-states-graphic-2018-6?r=US&IR=T [Accessed 2018-05-06]

- Lyrebird: https://lyrebird.ai/vocal-avatar [Accessed 2018-05-06]

- Visually Indicated Sounds, Andrew Owens et al., 2015: https://arxiv.org/abs/1512.08512 [Accessed 2018-05-06]

- Image Luis Coronel https://cdn.playbuzz.com/cdn//9ced2191-d73f-4e3e-9181-824046fac4ed/2bda590b-182b-4c67-8793-8ffc1bd8b9ea.jpg [Accessed 2018-05-12]

- Progressive Growing of GANs for Improved Quality, Stability, and Variation, Tero Karras et al, 2017 URL: https://arxiv.org/abs/1710.10196 [Accessed 2018-05-06]

- Face2Face: Real-time Face Capture and Reenactment of RGB Videos (CVPR 2016 Oral) URL: https://www.youtube.com/watch?v=ohmajJTcpNk&feature=youtu.be&t=53s [Accessed 2018-05-06]

- Fake News Challenge, URL: http://www.fakenewschallenge.org/ [Accessed 2018-05-30]

- Future of Life Institute URL: https://futureoflife.org/ [Accessed 2018-05-12]

- Tech Trends Report 2018: URL: https://futuretodayinstitute.com/2018-tech-trends-annual-report/ [Accessed 2018-05-12]
