Test-driven Development of an Alexa Skill with Node.js

This is the third part in a series of blog posts in which we describe the process of developing an Amazon Alexa Skill, with a focus on newer technologies like serverless computing and on enforcing clean code conventions. For our project we decided to use continuous integration and delivery. For that to work as it should, and to keep avoidable bugs from reaching the user, we relied on test-driven development for our code.

If you missed the first or second part you can catch up by reading them here(1) and here(2).

As already presented in the introduction, our skill helps students from the HdM Stuttgart get information about classes and professors quickly by letting them ask Amazon Alexa, which is a more modern and dynamic approach than searching for information on a website.

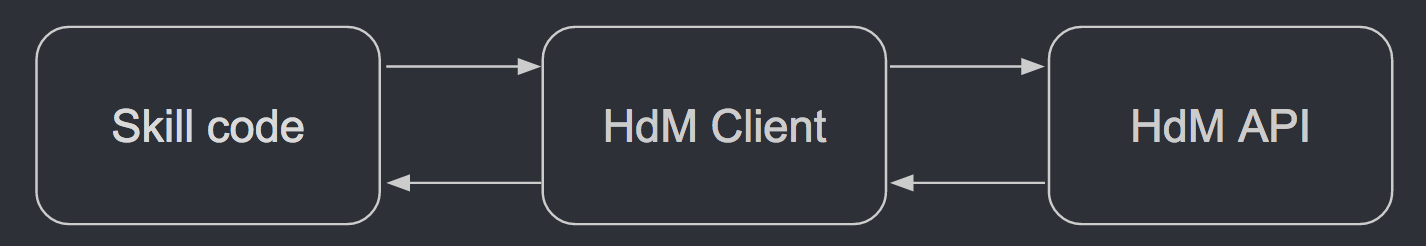

We decided to use the Node.js platform for implementing our skill. As stated in part 1, we wrote our own npm package, the hdm-client, to encapsulate the unofficial API of our university, which helped us separate the gathering of data from the actual skill implementation. For writing both the skill and the client we used a test-driven approach. The components of our Skill looked like this:

Test-Driven Development (TDD) states that you write your test cases before writing the first line of production code. The process consists of three steps:

1. Write a unit test that initially fails

2. Write as much code as we need to make the test pass

3. Refactor the code until it’s simple and understandable

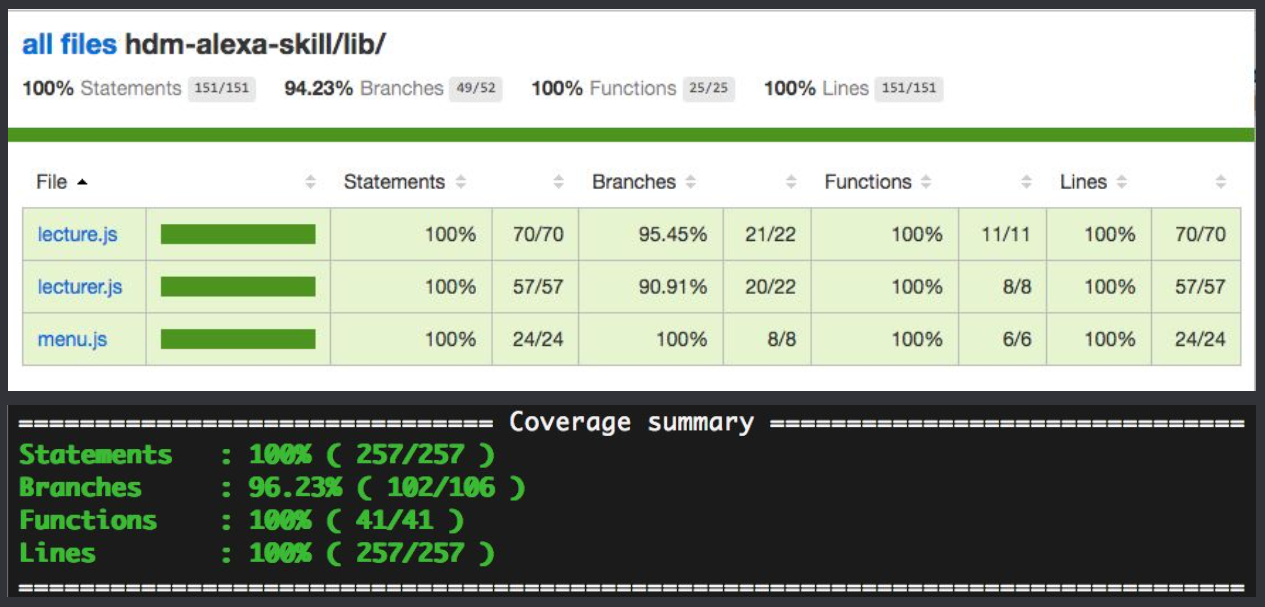
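The three steps above can be sketched in a few lines of plain Node.js. This is only an illustration: `formatOfficeHours` is a hypothetical helper that is not taken from our skill, and Node's built-in `assert` module stands in for Mocha and Chai so the snippet runs without any dependencies.

```javascript
'use strict';
var strictEqual = require('assert').strictEqual;

// Step 1: the test exists before any production code.
// formatOfficeHours is a hypothetical helper used only for this sketch.
function testFormatOfficeHours(formatOfficeHours) {
  var text = formatOfficeHours({ name: 'Professor X', officehours: 'Do 14:00-16:00' });
  strictEqual(text, 'Professor X hat am Do 14:00-16:00 Sprechstunde.');
}

// Tiny helper that reports whether a test passes for a given implementation.
function passes(test, implementation) {
  try {
    test(implementation);
    return true;
  } catch (e) {
    return false;
  }
}

// Red: nothing useful is implemented yet, so the test has to fail.
var red = passes(testFormatOfficeHours, function () { return undefined; });

// Step 2 (green): just enough code to make the test pass.
function formatOfficeHours(lecturer) {
  return lecturer.name + ' hat am ' + lecturer.officehours + ' Sprechstunde.';
}
var green = passes(testFormatOfficeHours, formatOfficeHours);

// Step 3 (refactor): change the structure, then rerun the very same test.
function formatOfficeHoursRefactored(lecturer) {
  return [lecturer.name, 'hat am', lecturer.officehours, 'Sprechstunde.'].join(' ');
}
var stillGreen = passes(testFormatOfficeHours, formatOfficeHoursRefactored);

console.log('red:', red, '| green:', green, '| after refactoring:', stillGreen);
```

The point of the sketch is the order of events: the test is written first and fails, the minimal implementation turns it green, and the refactored version is accepted only because the unchanged test still passes.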

We really didn’t want to write a single line of code if there was no test demanding it. That led to a high test coverage percentage and also forced us to write only the code that was necessary at that moment, which complies with the YAGNI principle (You ain’t gonna need it). We measured code coverage using a free JavaScript tool called Istanbul.js. Here is a screenshot of the report, as HTML and from the terminal:

Testing with Mocha and Chai

Luckily for us, testing Node.js applications isn’t anything new and there are plenty of good and free testing frameworks out there. We chose Mocha for our tests because it is one of the more robust and widely used libraries. Mocha doesn’t include an assertion library; it works with anything that throws an exception. We used it for describing our tests and we used Chai to write the tests’ assertions. A simple test case looked like this:

    var expect = require('chai').expect; // Chai's expect-style assertions
    // 'lecturer' is the skill module under test (its require call is omitted here)

    describe('officeHours', function() { // Test description in Mocha
        'use strict';

        it('should be a function', function() {
            // Self-explanatory assertions with Chai
            expect(lecturer.officeHours).to.be.a('function');
        });
    });

In our case, the skill relies heavily on making HTTP requests, which led to a lot of asynchronous functions in our code. Mocha makes it easy to test asynchronous code: you can pass a done function as a parameter, and Mocha expects that function to be called within the callback in a customisable period of time. The following example tests the function of the lecturer module that is responsible for getting the office hours of a particular professor:

    describe('officeHours', function() { // Test description in Mocha
        'use strict';

        it('should throw an error if the client throws one', function(done) { // Passing in the done function
            lecturer.officeHours(client /* the hdm-client */, 'Professor X', function(err, response) {
                expect(err.message).to.equal('Not Found'); // Assertions using chai.js
                expect(response).to.equal(undefined);
                done(); // 'done' gets called here if the callback function is called
            });
        });
    });

Here the hdm-client gets passed into the lecturer module, following the pattern of inversion of control. This lets us decide which state of the client gets used in the officeHours function. That’s especially helpful in this scenario because we want our unit tests to run isolated from the rest of the code. We also want our unit tests to run fast, so we need to prevent them from making real HTTP requests to our university’s unofficial API, which in fact only parses the website looking for information. We achieved this by using Sinon.js, a JavaScript library for mocking, stubbing and spying on JavaScript objects. With Sinon we can stub the client’s function that is used inside officeHours to prevent it from actually being called. Instead, the stub hands back a predefined value which we can check in our unit test code:

    it('should return the office time if there\'s just one result', function(done) {
        var client, stub, data, responseText;

        // Our fake response
        data = [{
            name: 'Professor X',
            officehours: 'Do 14:-16:'
        }];

        // Here we create a stub for the client's function searchDetails.
        // It calls the argument at index 3 (the callback) with (null, data).
        stub = sinon.stub().callsArgWith(3, null, data);
        client = { searchDetails: stub };
        responseText = 'Professor X hat am Do 14:00-16:00 Sprechstunde.';

        // Then we pass in the client object as always
        lecturer.officeHours(client, 'Professor X', function(err, response) {
            expect(err).to.equal(null);
            expect(response).to.equal(responseText);
            done();
        });
    });

The challenges we faced

The biggest problem for us when developing our skill test-driven was a breaking change in our dependency, the hdm-client. Our code was running for too long on AWS Lambda, which led to timeout exceptions. We decided to change the hdm-client so that its functions could be called with a maximum-results parameter. Of course, we knew this change would break every line of skill logic that used the client, since the order of the arguments in the functions changed. Still, none of our unit tests failed. But how?

The tests did not fail because we weren’t actually calling functions of the client. The Sinon stub prevented the real function from being called and returned our fake response immediately. We knew exactly which lines in our code were causing the problem, but simply changing them didn’t feel right. The first two steps of TDD state that you first have to write a test that fails and only then write the production code you need. Long story short: we decided to introduce integration tests.
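To illustrate why the stubbed tests stayed green, here is a minimal sketch in plain Node.js. Everything in it is an assumption made for the example: the argument lists of `searchDetails` (old and new), the position of the new maximum-results parameter, and the simplified `officeHours` function. The helper `stubCallingArg` is a hand-rolled stand-in for `sinon.stub().callsArgWith(...)` so the snippet runs without dependencies.

```javascript
'use strict';

// Hypothetical skill function, still written against the OLD client signature:
// searchDetails(type, query, options, callback)
function officeHours(client, name, callback) {
  client.searchDetails('lecturer', name, {}, function (err, results) {
    if (err) { return callback(err); }
    callback(null, results[0].name + ': ' + results[0].officehours);
  });
}

// Stand-in for sinon.stub().callsArgWith(index, err, data): it ignores the
// argument values and simply invokes whatever sits at the given index.
function stubCallingArg(index, err, data) {
  return function () {
    arguments[index](err, data);
  };
}

// Hypothetical NEW client signature with maxResults added before the callback.
var newClient = {
  searchDetails: function (type, query, options, maxResults, callback) {
    var results = [{ name: query, officehours: 'Do 14:00-16:00' }];
    callback(null, results.slice(0, maxResults));
  }
};

var data = [{ name: 'Professor X', officehours: 'Do 14:00-16:00' }];
var stubbedResult = null;
var realError = null;

// Unit-test path: the stub finds a function at index 3, so everything looks fine.
officeHours({ searchDetails: stubCallingArg(3, null, data) }, 'Professor X', function (err, text) {
  stubbedResult = text;
});

// Integration path: the new client receives the callback where it expects
// maxResults and finds no callback at all, so the call blows up.
try {
  officeHours(newClient, 'Professor X', function () {});
} catch (e) {
  realError = e;
}

console.log('with stub:', stubbedResult);
console.log('with new client:', realError && realError.name);
```

The stub only knows about argument positions, not about the contract of the real client, which is why a change in that contract cannot make a stubbed unit test fail.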

Integration tests

We wrote one test for each main function in our skill code, but this time we didn’t mock the client. We called these tests integration tests because we were actually testing how the skill code behaves when using the real hdm-client. We watched all of them fail and then changed the code in the skill’s logic to make them pass. After that, all of our unit tests failed, so we had to fix those as well.

The unit tests failed because the client’s stubs were still calling the callback function according to the old order of arguments.
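The following sketch shows one way this can happen. It rests on assumptions that are not confirmed by our real code: that the fixed skill code passes the new maximum-results value as the fourth argument, which moves the callback from index 3 to index 4, and that a stub throws when the argument at its configured index is not a function. `stubCallingArg` is again a hand-rolled stand-in for a Sinon stub, and `officeHours` is simplified.

```javascript
'use strict';

// Stand-in for sinon.stub().callsArgWith(index, err, data).
// Assumption: it throws if the argument at that index is not a function.
function stubCallingArg(index, err, data) {
  return function () {
    var callback = arguments[index];
    if (typeof callback !== 'function') {
      throw new TypeError('argument at index ' + index + ' is not a function');
    }
    callback(err, data);
  };
}

// Hypothetical skill function AFTER the fix: maxResults (here 1) is passed
// before the callback, so the callback now sits at index 4.
function officeHours(client, name, callback) {
  client.searchDetails('lecturer', name, {}, 1, function (err, results) {
    if (err) { return callback(err); }
    callback(null, results[0].officehours);
  });
}

var data = [{ name: 'Professor X', officehours: 'Do 14:00-16:00' }];

// Runs officeHours against a stubbed client and records what happened.
function run(stub) {
  var outcome = { error: null, response: undefined };
  try {
    officeHours({ searchDetails: stub }, 'Professor X', function (err, response) {
      outcome.response = response;
    });
  } catch (e) {
    outcome.error = e;
  }
  return outcome;
}

var withOldStub = run(stubCallingArg(3, null, data)); // index 3 is now maxResults
var withNewStub = run(stubCallingArg(4, null, data)); // index 4 is the callback

console.log('old stub error:', withOldStub.error && withOldStub.error.message);
console.log('new stub response:', withNewStub.response);
```

Under these assumptions, fixing the unit tests comes down to updating the index each stub uses to find the callback.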

Our integration tests took longer to complete than regular unit tests (around 200 to 400ms each) because their requests actually make the whole round trip to the unofficial HdM API. With this procedure we couldn’t test whether the data coming back was valid, because that depended on many networking factors, but the purpose of the tests was to find out whether the skill logic uses the hdm-client correctly. We also didn’t need to run them as often as unit tests, so we usually just ran them on our CI server.

Lessons learned

Breaking changes to a dependency can be a pain in the neck, whether you are programming test-driven or not. If you are using a TDD approach, fixes to the code base should always be led by an appropriate test that fails at first. Unit tests do not always warn you about existing problems. This time we knew breaking changes were coming with an update to our dependency because we developed that library ourselves, but that is rarely the case. You should always do end-to-end testing before publishing or pushing your changes to the production environment. In our case, it’s difficult to automate end-to-end tests because we need to talk to Alexa to test our skill and we also need to hear the answer. Instead, we wrote down our test cases and went through them one by one, talking to our Echo Dot. Without knowledge of the dependency changes and without any integration tests, that would have been the only way of finding out there was a problem.

Even though making quick fixes might seem to take longer with TDD, we were glad to use this approach, since our failing tests were perfect for finding every line in our codebase which was still making a wrong call to the client. Without a test for each line of production code, we might still have parts of our codebase we forgot to change, only waiting for a user to do an unusual request to our service, making the skill logic crash.

Please don’t hesitate to leave us a comment and tell us what your experience with Test-Driven Development was.
