Introduction

Although often overlooked, usability has turned out to be one of the most important aspects of security. Usable systems let users accomplish their goals with higher productivity, fewer errors and fewer security incidents. Yet usable security still seems to be the exception rather than the rule.

When it comes to software, many people believe there is a fundamental tradeoff between security and usability, so that one has to be chosen over the other. The belief is that as soon as you make something more secure, it becomes harder to use.

It’s a never-ending debate: security and usability experts argue about which of the two is more important, and people from engineering and marketing join in with their own views and try to convince the others. Finding the right balance between security and usability is without a doubt a challenging task.

The serious problem: user experience can suffer as digital products become more secure. And, paradoxically, the more secure you make something, the less secure it becomes. Why?

Humans as the weakest link

Many aspects of information security combine both technical and human factors. If a highly secure system is unusable or doesn’t behave in a way the users expect, they will try to circumvent the system, bypass security mechanisms or move entirely to other systems that are less secure but more usable. Problems with usability are a major contributor to many security failures today.

“Secure services must be as easy to use as insecure services or users will gravitate to the insecure alternative.” (Ian Hamilton, Signiant CTO)

As Hamilton describes, when security gets in the way, people tend to develop (sometimes really clever) hacks and workarounds that defeat it. It’s not because they are evil; it’s due to how humans are made.

The best example is how users authenticate to systems, particularly using passwords. Passwords involve a tension between usability (short, easily memorable passwords) and security (longer, more diverse passwords that are difficult to crack). Guidelines for password selection focus largely on security rather than usability. Obtaining passwords and other sensitive information through social engineering is often easy: it can be enough to look at people’s desks, or to pretend to be someone else and simply ask.

As we are not made for remembering long and complex passwords, we write them down in good faith, hide them under the keyboard, stick them on the monitor or use the same password for a bunch of services.

Passwords are the least expensive mechanism known for securing systems. But complex password requirements reduce security and increase costs.
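The tension between short, memorable passwords and long, diverse ones can be made concrete with a rough back-of-the-envelope estimate. The sketch below assumes every character is drawn uniformly at random from the alphabet, which real, human-chosen passwords are not, so the numbers are upper bounds rather than measurements. The function name `brute_force_bits` is hypothetical.

```python
import math


def brute_force_bits(length: int, alphabet_size: int) -> float:
    """Size of the brute-force search space in bits.

    Assumes each character is chosen uniformly at random, which
    overestimates the strength of human-chosen passwords.
    """
    if length < 0 or alphabet_size < 1:
        raise ValueError("length must be >= 0 and alphabet_size >= 1")
    return length * math.log2(alphabet_size)


# 8 characters from the 94 printable ASCII symbols (space excluded)
short_complex = brute_force_bits(8, 94)
# 16 characters from the 26 lowercase letters
long_simple = brute_force_bits(16, 26)

print(f"short and complex: {short_complex:.1f} bits")
print(f"long and simple:   {long_simple:.1f} bits")
```

Under these assumptions the long, lowercase-only password has the larger search space (roughly 75 bits against roughly 52), which suggests that length can buy more than a complicated character mix that is hard to remember.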

If a system’s security features are difficult to find or apply, users will make mistakes or forgo protection altogether.

Some examples

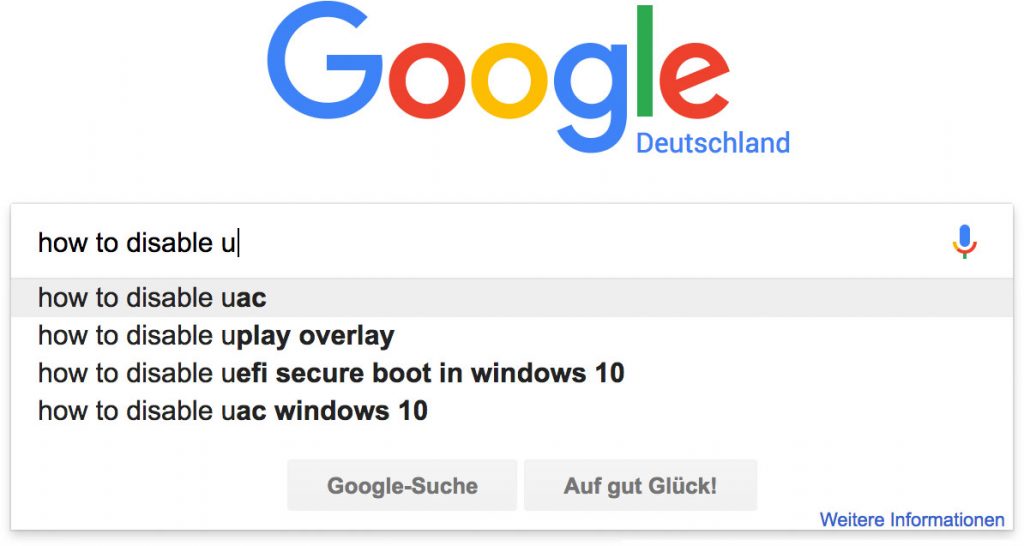

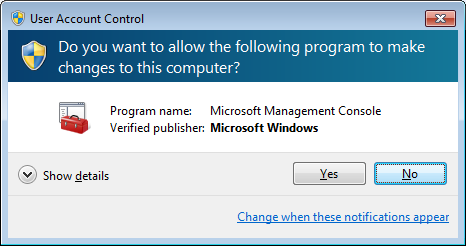

As mentioned, when a feature annoys users, they will bypass security mechanisms. We saw this behaviour prominently with User Account Control (UAC) in Microsoft’s Windows Vista.

Windows Vista UAC

As a result of the huge security issues in Windows XP, Microsoft introduced User Account Control (UAC) in its successor, Windows Vista. It is a security feature that helps prevent unauthorized changes to the operating system.

Interrupting the user’s workflow every few minutes led many users to disable this security mechanism entirely, which is the worst thing that could happen.

UAC is a good thing when done right. By making UAC less intrusive in Windows 7, Microsoft made this major security mechanism both more usable and more protective. Microsoft also explained UAC better, so even unskilled users became more aware of how important this feature is.

From that point on, users were able to control the number of prompts with a slider. Finding a compromise between security and usability by themselves requires more experienced users, though. Most importantly, this change has very likely led fewer users to disable the feature completely (no statistics given).

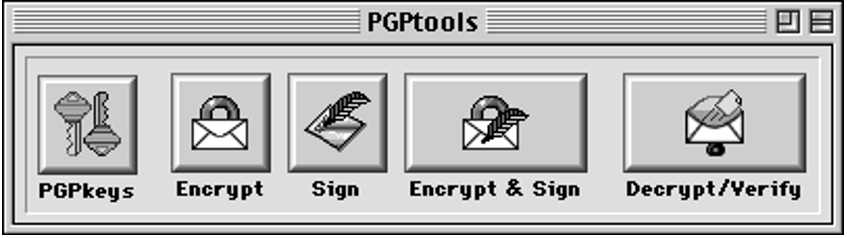

PGP – Pretty Good Privacy

PGP, short for Pretty Good Privacy, is an email encryption system invented by Phil Zimmermann in 1991. Using PGP was (and, judging by the number of people using it, still is) so frustrating and unpleasant that people simply won’t use it.

A paper called “Why Johnny Can’t Encrypt: A Usability Evaluation of PGP 5.0” pointed out the challenges users faced when using PGP. Its study showed that the majority of the participants (all experienced email users) were not able to successfully encrypt a message.

Several test participants emailed secrets without encryption. The participants chose pass phrases that were similar to standard passwords. Only a third could correctly sign and encrypt a message within 90 minutes. So PGP, at least in terms of usability, has failed because users simply don’t understand it.

How can we get better?

Learning from each other and closing skill gaps

Security experts rarely have design knowledge (and vice versa). Teams need to understand the basics of their counterparts’ field. The best way to ensure that designers consider security is for them to understand the basics of security and authentication. Security engineers, in turn, generally lack experience in usability engineering. One of the main reasons why application security violations continue to rise is that many deployed security mechanisms are not user-friendly, which limits their effectiveness.

Unless engineers start thinking more about how to make security more usable, progress in securing systems will be limited. They should have an idea of how the implementation of security mechanisms affects the user interface and the user experience. This takes teams that want to learn from each other and that do not treat usability and security as a tradeoff.

Security by Design – Integrating UX and Security in the Development Process

Software designers often think about usability and security as something to add after they’ve already designed the software architecture or in the worst case after they’ve finished the final product. So they’re not features that are integrated into the product from the beginning. Neither security nor usability should be afterthoughts.

Usability and security evaluations should be performed in all phases of development and built into the software from the very beginning of the design process. Iterative design is one way to do this: analysing users, designing, testing, evaluating, re-designing, re-testing and so on. This cycle is common in software design, but it usually focuses on functionality. Applying it to security as well helps address potential conflicts early and ensures that the security features are usable from the very beginning. At every step, the developers involved need to keep in mind that people will be interacting with the security mechanisms.

This requires good planning, and even the business model should account for a balance between security and usability.

And as already mentioned, user experience teams do not work closely enough with their security counterparts. To get teams to cooperate better, it can help to create cross-functional teams, as Spotify does, for example, with its squad framework.

Strengthen security behind the scenes

It may seem like an obvious point, but improving security technically without touching the user interface at all improves the product at no cost to usability. Security mechanisms can be strengthened invisibly, behind the scenes, for example by improving the underlying algorithms used to scan attachments and downloads or by strengthening spam filters.
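Another illustration of the same idea, not taken from the examples above, is upgrading stored password hashes during a normal login. The sketch below is only one possible approach under stated assumptions: the record layout, the function names and the `CURRENT_ITERATIONS` value are hypothetical, and only the Python standard library is used.

```python
import hashlib
import hmac
import os

# Hypothetical target work factor; a real value would be tuned per system.
CURRENT_ITERATIONS = 200_000


def hash_password(password: str, iterations: int = CURRENT_ITERATIONS) -> dict:
    """Create a salted PBKDF2-HMAC-SHA256 record for a password."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations
    )
    return {"salt": salt, "iterations": iterations, "digest": digest}


def verify_and_upgrade(password: str, record: dict) -> tuple:
    """Check a login attempt and silently strengthen an outdated record.

    Returns (login_ok, record_to_store). The user sees an ordinary login;
    the stronger hash is a side effect they never have to think about.
    """
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), record["salt"], record["iterations"]
    )
    # Constant-time comparison to avoid leaking timing information.
    if not hmac.compare_digest(candidate, record["digest"]):
        return False, record
    if record["iterations"] < CURRENT_ITERATIONS:
        # The plain-text password is only available now, at login time.
        record = hash_password(password)
    return True, record
```

The design choice here is that the upgrade needs no new dialog, prompt or setting, so security improves without any change to what the user has to do.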

Following guidelines for secure interaction design

Quite a bit of research has been done in the field of usable security. In 2002, Ka-Ping Yee defined a set of guidelines for secure interaction design, which deals with how to design a system that is both secure and usable. The guidelines are often cited, and even Apple refers to them in its user interface guidelines.

The design principles identified by Yee:

- Path of least resistance: The most natural way to do a task should also be the safest.

- Appropriate boundaries: The interface should draw distinctions among objects and actions along boundaries that matter to the user.

- Explicit authorization: A user’s authority should only be granted to another actor through an explicit user action understood to imply granting.

- Visibility: The interface should let the user easily review any active authority relationships that could affect security decisions.

- Revocability: The interface should let the user easily revoke authority that the user has granted, whenever revocation is possible.

- Expected ability: The interface should not give the user the impression of having authority that the user does not actually have.

- Trusted path: The user’s communication channel to any entity that manipulates authority on the user’s behalf must be unspoofable and free of corruption. An example of a trusted path is the key combination Ctrl-Alt-Del that Microsoft Windows can require users to press at its login window. Windows treats this key sequence as a secure attention sequence that only the operating system can intercept, which guarantees that no application can spoof the login window.

- Identifiability: The interface should ensure that identical objects or actions appear identical and that distinct objects or actions appear different.

- Expressiveness: The interface should provide enough expressive power to let users easily express security policies that fit their goals.

- Clarity: The effect of any authority-manipulating user action should be clearly apparent to the user before the action takes effect.
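Three of these principles (explicit authorization, visibility and revocability) can be sketched in a few lines of Python. This is a minimal illustration, not code from Yee’s paper: the `AuthorityRegistry` class, its method names and the `confirmed_by_user` flag are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Grant:
    """One piece of authority the user has handed to another actor."""
    actor: str
    permission: str


@dataclass
class AuthorityRegistry:
    _grants: set = field(default_factory=set)

    def grant(self, actor: str, permission: str, confirmed_by_user: bool) -> None:
        # Explicit authorization: nothing is granted without a deliberate
        # user action (for example, a confirmation the user clicked).
        if not confirmed_by_user:
            raise PermissionError("authority requires an explicit user action")
        self._grants.add(Grant(actor, permission))

    def active_grants(self) -> list:
        # Visibility: the user can review every active authority relationship.
        return sorted(self._grants, key=lambda g: (g.actor, g.permission))

    def revoke(self, actor: str, permission: str) -> None:
        # Revocability: a granted authority can be taken back at any time.
        self._grants.discard(Grant(actor, permission))

    def is_allowed(self, actor: str, permission: str) -> bool:
        return Grant(actor, permission) in self._grants
```

A settings screen that lists `active_grants()` with a revoke button next to each entry would be one way to surface this model in a user interface.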

Following these guidelines doesn’t guarantee a usable and secure system. They should be seen as general suggestions. They don’t address every issue, but they can help prevent common pitfalls and serve as a reminder of good practices. They can also be used for the systematic evaluation of existing user interfaces. Sometimes simple changes to an interface’s design can result in much better security for users.

Testing

A/B testing is a method of comparing two versions of a feature by measuring them against each other to determine which one is more successful. The results can be very enlightening, because the tests can be run automatically on a large user base and give feedback about how users interact with a system, how they choose security-relevant settings and how they behave in terms of security. Do they bypass security mechanisms? Do they get stuck somewhere while applying security?

A number of tools are available for A/B testing, and they can provide valuable insights into how your customers behave. Accurate A/B tests can make a huge difference. By running these tests and gathering empirical data, you can find out which interface better helps users interact with the system in a secure way.
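To judge whether a difference between two variants is more than noise, one common approach is a two-proportion z-test. The sketch below is a minimal version using only the standard library. The function name and the example metric (how many users completed a security setup step in each variant) are assumptions for illustration, and dedicated A/B testing tools usually perform this kind of analysis for you.

```python
import math


def two_proportion_z_test(success_a: int, total_a: int,
                          success_b: int, total_b: int) -> tuple:
    """Return (z, two-sided p-value) for the difference in success rates.

    "Success" could mean, for example, that a user completed a security
    setup step instead of skipping it.
    """
    if total_a <= 0 or total_b <= 0:
        raise ValueError("each variant needs at least one user")
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical numbers: 120 of 1000 users finished setup in variant A,
# 165 of 1000 in variant B.
z, p = two_proportion_z_test(120, 1000, 165, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the variants really differ, but it says nothing about why, so pairing the numbers with observation of where users get stuck remains useful.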

Final Thoughts

Admittedly, and as has been shown many times, improving security and user experience simultaneously is not straightforward and can be time-consuming.

Today’s software providers need to invest in both security and usability. There is no one-size-fits-all approach. Each piece of software has different security requirements, target groups and tasks to fulfil, as well as different user expectations and mental models to match.

Coming back to the introduction, I think that security and usability are not fundamentally contrary to each other. In fact, it should be clear that the opposite makes more sense: a more secure system is more controllable, more reliable, and more usable; a more usable system reduces confusion and is more likely to be secure. Security problems come up when computers fail to behave in a way that the user expects or understands. Designing secure systems with good usability can prevent that from happening.

This means we must not expect users to be security experts or to understand all the details of how security mechanisms work. They should be able to complete their tasks safely and securely by relying mainly on knowledge and understanding that they already have.

So it is time to make systems more secure while keeping them usable and effective for the tasks users perform.

Sources

- Simson Garfinkel, Lorrie Cranor: Security and Usability – Designing Secure Systems that People Can Use, O’Reilly Media

- Ka-Ping Yee, Aligning Security and Usability, http://zesty.ca/pubs/yee-sid-ieeesp2004.pdf

- Cristian Florian, Security and Usability: Finding the Right Balance, https://techtalk.gfi.com/security-usability-finding-balance/

- Don Norman, When Security Gets in the Way, http://www.jnd.org/dn.mss/when_security_gets_in_the_way.html

- Ian Hamilton, Usability as a protection feature, SC Magazine, February 2015
