Introduction

Who hasn’t seen a movie in which an AI-based robot threatens individual people or the entire human race? Whether or when such a technology can really be developed is written in the stars. With this series of blog entries we want to point out that AI does not need robots to cause damage. AI is already being used in harmful ways today, and we should be aware that every advance in AI technology has a downside, especially if we make results openly available without considering the possible consequences and thus give everyone the chance to use them for their own purposes.

This first part of the blog series addresses the dual-use character of today’s AI by showing possible applications of AI across the lifecycle of a cyberattack. The defensive possibilities are briefly presented, but the focus is on the offensive side, i.e. the possibilities that AI offers attackers to execute cyberattacks more intelligently and with more automation. Based on this, a technology that could become a major threat is investigated more closely: deepfakes. I’ll give you an insight into how this technology works, the threats it poses and why people in general can be quickly influenced by such misinformation.

New AI technologies can not only be used for attacks; the AI itself can also be manipulated, which can cause great damage, especially in systems where AI makes decisions. This topic is covered in part 2 of the blog series.

Artificially intelligent cyberattacks

The spread of new technologies has always had a flip side. The use of technology can have positive effects in many areas, but there will always be people who use it in a harmful way. The same applies to the technological advances of AI and the field of cybersecurity. I will only briefly discuss the defensive options first and focus mainly on how AI can be used in harmful ways.

Defensive AI

One of the best-known applications of defensive AI is malware detection. Outdated signature-based methods are not able to identify newly generated malware. From an AI point of view, the problem can be described as a classification task: Is an application or a system behavior malicious, or does it deviate from normal behavior? In order to build a classification model, we need good features that let us discriminate between the classes. One option is to build a so-called Bad-Behavior model that tries to map and generalize the characteristics of existing malware and thereby detect new instances. The other option is to map the normal system behavior and detect deviations from it. Such models are called Good-Behavior models. Which features might be useful for deriving decisions? The following table gives you an overview of existing research.

| Algorithm | Features | Link to paper |
| --- | --- | --- |
| Decision Trees, Random Forest | PE file characteristics | link |
| Support Vector Machine | Network activity | link |
| Neural Networks | PE file characteristics, strings | link |
| Naive Bayes, Random Forest | Byte sequences, API/system calls, file system, Windows registry | link |
| Deep Neural Networks | API call sequences | link |
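The Good-Behavior idea described above can be sketched in a few lines. This is only a minimal illustration and not one of the models from the papers in the table: it assumes that each observation is a dictionary of per-minute activity counts (the feature names and all numbers are made up), learns the mean and standard deviation of each feature from benign observations, and flags a sample as soon as one feature deviates too far from that baseline.

```python
from statistics import mean, stdev

def fit_baseline(benign_samples):
    """Learn per-feature mean and standard deviation from benign observations."""
    features = benign_samples[0].keys()
    return {
        name: (mean(s[name] for s in benign_samples),
               stdev(s[name] for s in benign_samples))
        for name in features
    }

def is_anomalous(sample, baseline, threshold=3.0):
    """Flag a sample if any feature lies more than `threshold` std devs from normal."""
    for name, (mu, sigma) in baseline.items():
        # Guard against zero variance in the training data.
        z = abs(sample[name] - mu) / (sigma or 1.0)
        if z > threshold:
            return True
    return False

# Hypothetical feature values: activity per minute observed on a healthy host.
benign = [
    {"file_writes": 12, "registry_edits": 1, "net_connections": 5},
    {"file_writes": 15, "registry_edits": 0, "net_connections": 6},
    {"file_writes": 11, "registry_edits": 2, "net_connections": 4},
    {"file_writes": 14, "registry_edits": 1, "net_connections": 5},
]
baseline = fit_baseline(benign)

normal_sample = {"file_writes": 13, "registry_edits": 1, "net_connections": 5}
suspicious_sample = {"file_writes": 400, "registry_edits": 30, "net_connections": 5}

print(is_anomalous(normal_sample, baseline))      # False
print(is_anomalous(suspicious_sample, baseline))  # True
```

A Bad-Behavior model would instead be trained on labeled malware samples with one of the classifiers listed in the table; the simple threshold used here merely stands in for such a learned decision rule.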

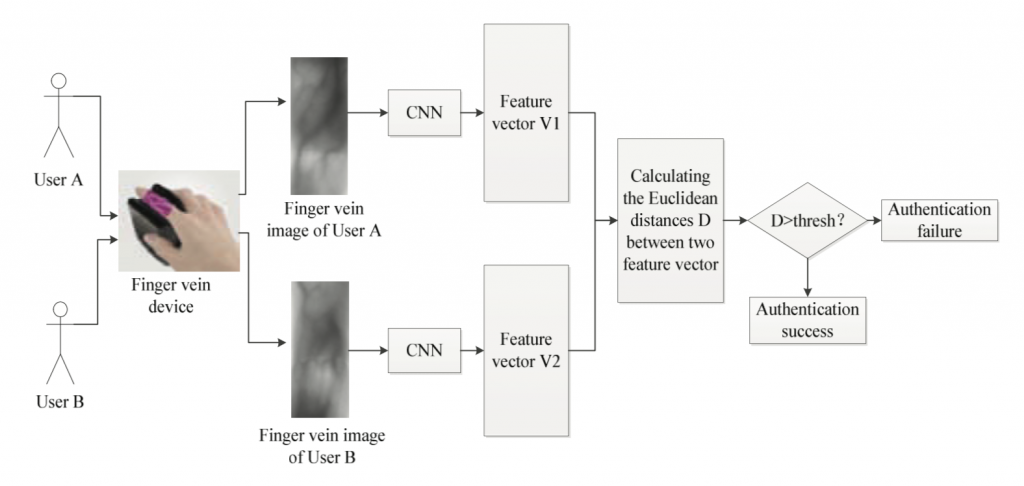

In addition to malware detection, AI offers many authentication options through biometric security. The most obvious is to use physical characteristics to identify a person. Images of the face, fingerprints or iris, for example, are features that are used to train an appropriate machine learning algorithm. Furthermore, the veins of a person can be used for authentication, e.g. to make the use of an ATM more secure. When it comes to feature extraction from images, Convolutional Neural Networks and their variations are currently the most promising networks. The figure below shows a scheme of how such a network can be used to identify a person by their veins:

Source: Using Deep Learning for finger-vein based biometric authentication

There are many more applications of AI to increase security. Fortunately! Some other fields are spam detection, intrusion detection systems (host/network based), phishing detection and vulnerability detection.

It is at least a little reassuring to know that there are researchers investigating the defensive usage of AI in cybersecurity. This is absolutely necessary. The following section presents offensive AI techniques, some of which have already been observed in the wild. Although no devastating AI-based cyberattack is known at this stage, we shouldn’t wait to build countermeasures until such an attack occurs, right? Otherwise, as is so often the case, the technology used by attackers will be more advanced than that used for defense.

Offensive AI

So far, we have only looked at the bright side of the coin and seen ways in which AI can help identify and prevent cyberattacks. The following examples show the flip side: they explain the extent to which AI already has a damaging influence today and why it is very likely that attackers will increasingly use certain techniques in the future.

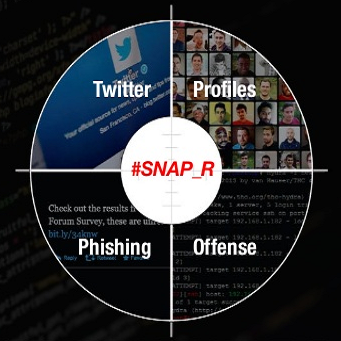

Target profiling is an area where AI is used during reconnaissance. The probability that this technique is actively used is very high because the technological readiness in the field of Natural Language Processing is already very advanced. The extent to which AI can help an attacker in this area is best demonstrated with the proof-of-concept tool SNAP_R (Social Network Automated Phishing with Reconnaissance), which was introduced in 2016 and can be found on GitHub. How does this tool work? First, there is the so-called target discovery, where users are categorized into clusters based on publicly available data like profile information, followers and activities. Second, the tool analyzes tweets by Twitter users belonging to the clusters it believes are most vulnerable to a social engineering attack and determines what they post about. Then, replies with attached fraudulent links are automatically created using a Long Short-Term Memory (LSTM) neural network. It has been shown that such automated AI-based phishing attacks have a click-through rate of up to 30%, which is twice as high as that of manually generated attacks.

Password attacks are becoming more and more advanced through the use of AI. Machine learning can be used to learn patterns in known password leaks and generate new passwords, which leads to faster results than a brute-force attack. Many demonstrations of such algorithms are publicly available. One example is PassGAN, which uses a Generative Adversarial Network (GAN) to emulate passwords from real password leaks. During the training of such a GAN, the output of the network (the fake samples) gets closer to the distribution of passwords in the original leak and is therefore more likely to match real users’ passwords. Another model type that can be used for password attacks is the Recurrent Neural Network. Researchers showed that, with an open-source machine learning algorithm called Torch-RNN, they were able to brute-force passwords with a success rate of 57% after 1000 experiments. In addition to password guessing, there is also the approach of stealing passwords. A current AI-based approach uses the sound waves generated by keystrokes on smartphones to classify and recover the numbers that were entered. The sound waves are recorded by the smartphone’s own microphone and sent to the corresponding app, which runs in the background. Experiments showed a success rate of 61% in guessing 200 four-digit PINs within 20 attempts. Interesting but scary at the same time, right? It was hard enough, even without the inclusion of AI, to protect against such side-channel attacks.
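What "learning patterns in known password leaks" means can be illustrated from the defender’s point of view. The sketch below is neither PassGAN nor Torch-RNN; it swaps in a much simpler character-level bigram (Markov) model and uses it to score how closely a candidate password follows the patterns of a training list. The five "leaked" passwords, the function names and the smoothing parameter are made up for illustration.

```python
import math
from collections import Counter, defaultdict

START, END = "^", "$"

def train_bigram_model(passwords):
    """Count how often each character follows another across the training list."""
    counts = defaultdict(Counter)
    for pw in passwords:
        chars = [START] + list(pw) + [END]
        for prev, nxt in zip(chars, chars[1:]):
            counts[prev][nxt] += 1
    return counts

def log_likelihood(password, counts, alphabet_size=96):
    """Average log-probability per character transition, with add-one smoothing.

    Values closer to zero mean the password follows the learned patterns
    more closely and is therefore easier to guess.
    """
    chars = [START] + list(password) + [END]
    total = 0.0
    for prev, nxt in zip(chars, chars[1:]):
        seen = counts[prev]
        prob = (seen[nxt] + 1) / (sum(seen.values()) + alphabet_size)
        total += math.log(prob)
    return total / (len(chars) - 1)

# Made-up stand-in for a leaked password list.
leak = ["password1", "password123", "passw0rd", "letmein1", "letmein123"]
model = train_bigram_model(leak)

common_like = log_likelihood("password12", model)
random_like = log_likelihood("xQ7#vB2!kZ", model)
print(common_like > random_like)  # True: the first candidate matches the learned patterns
```

A password checker could reject candidates whose score is too close to zero. Generative models such as GANs or RNNs capture far richer structure than bigrams, but the principle of learning from real leaks is the same.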

Network behavior analysis can be used to learn the normal behavior of systems and thus detect deviations. But why should this not be used by an attacker? The malware in the target system could act as inconspicuously as possible by first learning the normal behavior of the system. This is just one example where the dual-use character of AI is clearly evident. The literature indicates that AI-supported network behavior analysis is at a high readiness level and that this technology has been adopted by malicious actors. At the end of 2017, the cybersecurity firm Darktrace Inc. spotted an attack at a client company in India that used rudimentary machine learning to observe and learn patterns of normal user behavior inside a network. The software then began to mimic that normal behavior, effectively blending into the background and becoming harder for security tools to spot. It is to be expected that such attacks will occur more frequently in the future.

Self-learning malware would eliminate the need for malware to contact its mother ship (the attacker’s server). This concept includes the generation of attack strategies designed by an AI without any human intervention. Sounds far-fetched? In 2019, a paper was published in which the feasibility of self-learning malware was simulated. The work demonstrated how self-learning malware can indirectly attack computers in a supercomputer facility by interfering with the cyber-physical systems of the building automation system. By targeting the cooling control system that manages the facility, the AI-controlled malware collected the data needed to run scenarios to disrupt the cooling capacity. It then autonomously implemented three different attack strategies to successfully disrupt the cooling system and thereby, indirectly, the computer system.

Obfuscation, that is, remaining undetected for as long as possible, is an important component in the life of a cyberattack. So far, no attacks are known that use AI to increase obfuscation, but in 2018 IBM researchers developed a piece of malware called DeepLocker, which uses artificial intelligence to find its targets and protect itself against detection by security software. To demonstrate the capabilities of such intelligent malware, the researchers developed a proof of concept to spread the ransomware WannaCry via a video conferencing system. During their tests, the malware was not detected by antivirus solutions or sandboxing. This is because the malware remains inactive until it reaches its very specific target. To detect the target person, AI techniques like face recognition, location detection and speech recognition, combined with an analysis of data from various sources such as online trackers and social media, can be used. DeepLocker does not launch its attack until the actual target has been identified. It’s like a sniper attack, isn’t it?

These examples should make the negative sides of AI a bit more tangible. I myself was surprised how little information is currently available about known AI-based cyberattacks. However, the number of stages at which AI is or could be used is huge: during reconnaissance for target profiling or vulnerability detection; to simplify access to systems through password guessing or CAPTCHA bypassing; during internal reconnaissance with network and system behavior analysis; to simplify command and control through self-learning malware or swarm-based botnets; and finally to remain inconspicuous for as long as possible and to learn how to remove itself from the system without attracting attention. To what extent AI is currently used in the anatomy of a cyberattack is difficult to say. Even if there are indeed relatively few artificially intelligent cyberattacks so far, we should take the opportunity now to develop suitable countermeasures and stay one step ahead of the attackers.

A more tangible danger, which could have an impact in many different areas, including politics, and could affect each of us, is the so-called deepfake.

Deepfakes

Take a look at what the former president of the United States is saying in this video. Are you a fan of Kim Kardashian? Maybe not after this video.

Introduction

The videos shown do not correspond to reality. These words were never spoken by these people. It still seems pretty real, doesn’t it? There are many examples of these so-called deepfakes. The first such manipulated videos appeared on Reddit in 2017, and only three years later the technology has developed so well that some videos or voices can no longer be identified by humans as fake or real. Another very recent example can be found in this article. The general idea of transferring facial expressions and orientations to other people and letting them say whatever you want is nothing new and has been used many times in movies to bring dead actors back to life, such as Grand Moff Tarkin in Star Wars Rogue One.

Source: How ‘Rogue One’ Brought Back Familiar Faces

However, compared to these CGI techniques, the use of AI makes the production much cheaper, less time-consuming and less demanding. You wonder why? Anyone can download implementations from GitHub and, with some ambition, create their own deepfake.

Technology

As already mentioned, there are many repositories and related tutorials that explain how to create your own deepfake video. If you are interested, you can find plenty of implementations here on GitHub.

Source: Understanding the Technology Behind DeepFakes

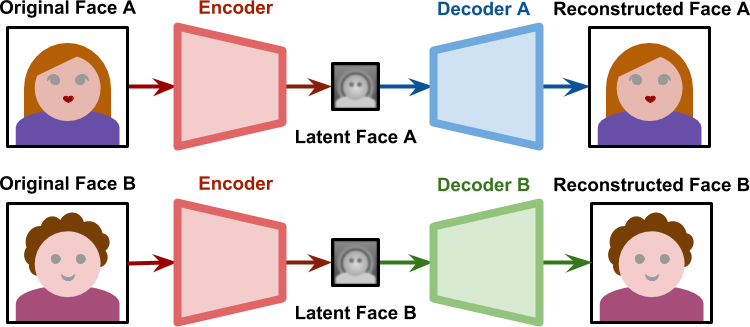

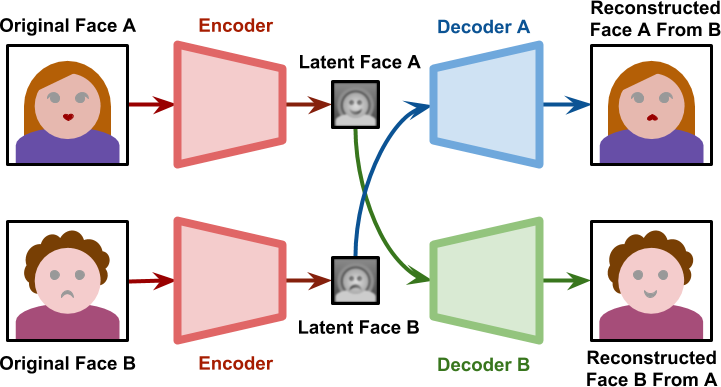

Basically, what makes the deepfake video technology possible is finding a way to force two faces, A and B, to be encoded in the same latent feature space. Deepfake implementations solve this by having one shared encoder module but two different decoders.

Source: Understanding the Technology Behind DeepFakes

During training, decoder A reconstructs face A and decoder B reconstructs face B. After training, we can pass face A as input to the encoder module and feed the output to decoder B. Decoder B will try to reconstruct face B based on the information contained in the given input face. If the network generalizes well, we can generate face B with the same orientation and facial expression as face A.
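The data flow of this architecture can be sketched with a toy example. Real deepfake implementations use deep convolutional networks trained on thousands of face images; in the following simplified sketch every module is a single linear layer, a "face" is just a vector of four made-up numbers, and all names and hyperparameters are chosen for illustration only. What it does show is the key idea: one encoder that is updated by both identities, two separate decoders, and the swap at the end.

```python
import random

random.seed(0)
INPUT_DIM, LATENT_DIM = 4, 2

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

encoder = rand_matrix(LATENT_DIM, INPUT_DIM)    # shared by both identities
decoder_a = rand_matrix(INPUT_DIM, LATENT_DIM)  # only ever reconstructs face A
decoder_b = rand_matrix(INPUT_DIM, LATENT_DIM)  # only ever reconstructs face B

def train_step(x, decoder, lr=0.05):
    """One gradient step on the squared reconstruction error of x."""
    z = matvec(encoder, x)   # latent code
    y = matvec(decoder, z)   # reconstruction
    err = [yi - xi for yi, xi in zip(y, x)]
    # Gradient w.r.t. the latent code, computed before the decoder changes.
    grad_z = [sum(decoder[i][j] * err[i] for i in range(INPUT_DIM))
              for j in range(LATENT_DIM)]
    for i in range(INPUT_DIM):
        for j in range(LATENT_DIM):
            decoder[i][j] -= lr * err[i] * z[j]
    # The shared encoder is updated no matter which decoder was used.
    for j in range(LATENT_DIM):
        for k in range(INPUT_DIM):
            encoder[j][k] -= lr * grad_z[j] * x[k]
    return sum(e * e for e in err)

# Made-up stand-ins for images of person A and person B.
faces_a = [[0.9, 0.1, 0.8, 0.2], [0.8, 0.2, 0.9, 0.1]]
faces_b = [[0.1, 0.9, 0.2, 0.8], [0.2, 0.8, 0.1, 0.9]]

for epoch in range(500):
    for face in faces_a:
        train_step(face, decoder_a)
    for face in faces_b:
        train_step(face, decoder_b)

# Face swap: encode a face of A, but decode it with B's decoder.
swapped = matvec(decoder_b, matvec(encoder, faces_a[0]))
print([round(v, 2) for v in swapped])
```

Because both decoders read from the same latent space, decoder B can interpret a code that was produced from face A. With linear layers and four-number "faces" the result is of course not a meaningful image; the sketch only mirrors the structure of the approach.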

Deepfake videos are not the only thing that can cause damage. In 2019, there was an attack in which AI was used to simulate the voice of a CEO, thereby enticing an employee to transfer the requested 220,000 euros to an account. It is difficult to say which specific AI technology the attackers used. One possible approach is the following implementation on GitHub: Real-Time Voice Cloning.

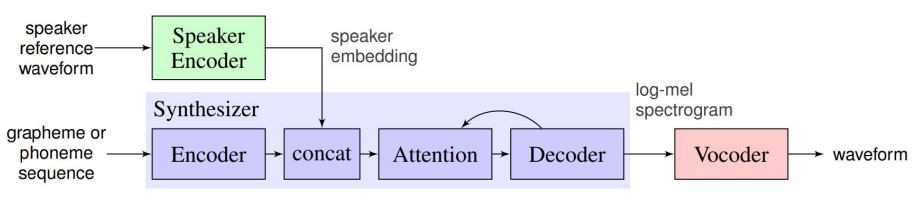

Source: Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis

A five-second voice snippet of a person is enough to create a deepfake voice. The algorithm consists of a speaker encoder that derives an embedding from the short utterance of a single speaker. The embedding is a meaningful representation of the voice of the speaker, such that similar voices are close together in a latent space. Next, there is the synthesizer that, conditioned on the embedding of a speaker, generates a spectrogram from text. A spectrogram is a visual representation of the spectrum of frequencies of a signal as it varies with time. Finally, there is a so-called vocoder that infers an audio waveform from the spectrograms generated by the synthesizer. That’s it. You can now have any text spoken with a specific voice.
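To make the term spectrogram a little more concrete, here is a small sketch that computes one with a naive short-time Fourier transform in plain Python. It is not taken from the Real-Time Voice Cloning code, which works with mel spectrograms of real speech; the test signal (two synthetic tones), the sample rate and the frame sizes are assumptions chosen so that the result is easy to check.

```python
import cmath
import math

def spectrogram(signal, frame_size=64, hop=32):
    """Magnitude spectrogram via a naive short-time discrete Fourier transform.

    Returns a list of frames; each frame holds the magnitudes of the
    frequency bins 0 .. frame_size // 2.
    """
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        chunk = signal[start:start + frame_size]
        # A Hann window reduces spectral leakage at the frame edges.
        windowed = [s * (0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1)))
                    for n, s in enumerate(chunk)]
        bins = []
        for k in range(frame_size // 2 + 1):
            coeff = sum(x * cmath.exp(-2j * math.pi * k * n / frame_size)
                        for n, x in enumerate(windowed))
            bins.append(abs(coeff))
        frames.append(bins)
    return frames

SAMPLE_RATE = 8000  # samples per second (assumed for this example)

def tone(freq, n_samples):
    """Generate a pure sine tone of the given frequency."""
    return [math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(n_samples)]

# 0.1 s of a 1 kHz tone followed by 0.1 s of a 2 kHz tone.
signal = tone(1000, 800) + tone(2000, 800)

spec = spectrogram(signal)
bin_width = SAMPLE_RATE / 64  # 125 Hz per frequency bin
first_peak = max(range(len(spec[0])), key=spec[0].__getitem__) * bin_width
last_peak = max(range(len(spec[-1])), key=spec[-1].__getitem__) * bin_width
print(first_peak, last_peak)  # 1000.0 2000.0
```

Each frame is one column of the picture: the strongest frequency moves from 1 kHz in the first frame to 2 kHz in the last one. The synthesizer predicts exactly this kind of time-frequency grid from text, and the vocoder turns it back into a waveform.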

Before we take a closer look at the possible consequences of deepfake attacks, we should consider why people are easily influenced by such misinformation.

Why people are easily influenced by misinformation

Of course, it is difficult to find well-founded reasons for this that apply to everyone. Individuals are just too different. Nevertheless, the following quotes address two problems that I think apply to some people.

The real problem is not merely the content of any particular (outrageous) belief, but the overarching idea that depending on what one wants to be true some facts matter more than others

McIntyre, L. (2018) in Post-truth

People often perceive as truth what corresponds to their own beliefs or to those of people they trust. If this supposed truth is not questioned, it often leads to the statement of the second quote.

A lie can go halfway around the world before the truth can get its shoes on

Doermann, D. (2019) in House holds hearing on ‘deepfakes’ and artificial intelligence amid national security concerns

If you do not question the truth of videos, audio recordings or news, but simply believe them, rumors spread very quickly, especially nowadays with all the social media platforms. The more people believe that something is true, the easier it seems for us to accept it. Even if the misinformation is eventually uncovered, it has already caused damage in people’s minds.

Dangers emerging from deepfakes

There are a number of different areas where the harmful use of deepfakes could have a major impact:

- Anyone could be extorted with an embarrassing deepfake video. In this case, victims could be forced to provide money, trade secrets or nude pictures or videos.

- A deepfake could cost someone a romantic opportunity, the support of friends, a promotion or a business opportunity.

- Anyone could be edited into pornographic videos that spread virally.

- A deepfake could show people stealing from a store, taking drugs or saying something racist. Depending on the circumstances, timing and circulation of the fake, the effects could be devastating.

- Soldiers could be shown murdering innocent civilians in a war zone, causing military tensions.

- Fake videos could place you in meetings with spies or criminals and thereby trigger public outrage or criminal investigations.

These are some examples of the direct consequences that deepfakes could have. But there is the opinion that the greatest danger comes from the mere existence of deepfakes. The case of the president of Gabon in 2019 serves as an example:

It was known that President Bongo had health problems at the time. To calm the population, the president gave the New Year’s speech. Because of his stroke, he appeared very different to many people in the video, as he showed hardly any facial expressions or gestures. The rumor that the video was a fake and that the government was hiding something spread very quickly, although security experts could not find any evidence of a manipulated video. One week later, the military launched an unsuccessful coup, citing the video as part of the motivation. The incident is summarized in this video.

The mere knowledge that such deceptively genuine fakes exist contributed to this event. Even though there were many other political reasons for the coup attempt, this example raises the question of what happens to our society when we can no longer trust our eyes and ears, when we no longer know what and who is trustworthy and see everything as fake. In addition, there is not only the danger of making a fake look like the truth, but also the opposite: You can declare the truth to be fake. In a court of law, video evidence would no longer be conclusive. Corrupt individuals could deny all videos and claim that everything was a fake. Videos could no longer serve as evidence of police brutality. As a result, people would become increasingly apathetic and develop an indifference that could harm the entire political system. This phenomenon is known as reality apathy. Maybe it all sounds a bit far-fetched and maybe we will never live in a world with the perfect deepfake. But can we rule that out for sure? Considering how fast the technology has developed over the last three years, I doubt we will stagnate at this level of quality.

Conclusion

This first part of the two-part blog series showed that AI extends and alters the existing threat landscape by expanding the set of actors who are capable of carrying out an attack, the rate at which these actors can attack a system or person, and the set of plausible targets. Progress in AI may also expand existing threats by increasing the willingness of actors to carry out certain attacks. This claim follows from the properties of increasing anonymity and increasing psychological distance. If the attackers know that an attack will probably not be traced back to them, and if they feel less empathy toward their target and expect to experience less trauma, then they may be more willing to carry out the attack. In addition, the use of AI introduces new threats. The property of being unbounded by human capabilities implies that AI systems could enable actors to carry out attacks that would otherwise be infeasible. For example, most people are not capable of mimicking others’ voices realistically or manually creating audio files that resemble recordings of human speech. Physical damage can also result if, for example, AI-controlled vehicles or drones are taken over or used in a harmful manner. This video shows how dangerous drones in particular can become. It may seem a bit futuristic, but the technology for such an attack is already available.

In addition, the second section presented the risks of deepfakes and how easy it is to create one yourself. Especially in the area of elections, where many attempts are made to manipulate and influence people, such videos could have a great impact. I’m curious whether such videos will appear in the final stretch of the 2020 US elections. As a foretaste, you are welcome to read through these imaginary scenarios regarding the dangers of deepfakes for elections.

So far, we have dealt with the question of how AI technology can be used for good or evil. But what if the AI itself becomes an attack vector by allowing an attacker to deliberately control the output of the algorithm? This also answers the question of why we cannot simply use AI to detect deepfakes. Such detectors sometimes work quite well, but they can also be outsmarted. We have reached the domain of adversarial attacks, which are covered in part 2.

Hooked?

Resources and additional information:

On artificially intelligent cyberattacks

- The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation

- Artificial Intelligence and Evolutionary Computations in Engineering Systems

- Building Trust in Machine Learning Malware Detectors

- ICCWS 2020 15th International Conference on Cyber Warfare and Security

- Artificial Intelligence and Crime

- Cyber Defence in the Age of AI, Smart Societies and Augmented Humanity

- Cyber-Sicherheit

- Artificially intelligent cyberattacks

- How AI and Cybersecurity Will Intersect in 2020

On deepfakes

- Adversarial Deepfakes: Evaluating Vulnerability of Deepfake Detectors to Adversarial Examples

- Deepfake videos could destroy trust in society – here’s how to restore it

- How could deepfakes impact the 2020 U.S. elections?

- Is seeing still believing? The deepfake challenge to truth in politics

- The biggest threat of deepfakes isn’t the deepfakes themselves

- Artificial Intelligence and New Threats to International Psychological Security

- Preparing for the World of a “Perfect” Deepfake

- Fraudsters Used AI to Mimic CEO’s Voice in Unusual Cybercrime Case

Image sources

- Unsplash

- Using Deep Learning for finger-vein based biometric authentication

- Weaponizing data science for social engineering

- Artificial intelligence just made guessing your password a whole lot easier

- Is Your Network Behavior Analysis Up to the Task

- Is AI a threat to humans?

- DeepLocker: When malware turns artificial intelligence into a weapon

- How ‘Rogue One’ Brought Back Familiar Faces

- Understanding the Technology Behind DeepFakes

- Real-Time-Voice-Cloning
