Games are usually built to optimize performance, not security. This still holds true today, although it is slowly changing with the rise of cheating in online games and with technical advancements in general. Of course, when it comes to online gaming and cheating in highly optimized, massively populated multiplayer games, there is more to think about than in classic single-player games. However, security risks stem not only from the increased technical requirements but also from anti-cheat software and from cheaters themselves. This article looks at what anti-cheat software is, how cheating gamers exploit technological shortcomings and, finally, how Street Fighter V's code base could have had serious consequences for players' security.

According to developer and blogger Madeline Miller, good anti-cheat software (ACS) can vastly improve a game's reputation compared to a bad ACS implementation. In her blog she writes: “A well-established anti-cheat system can make the game more enjoyable by reinforcing the player’s confidence in the game’s fairness” [1]. Players clearly agree, especially those who have been falsely flagged as cheaters before.

Building an anti-cheat system means tackling many different challenges. The system should be accurate and detect most of the cheats in a session. This is done with techniques such as heuristic evaluation, server-side validation or even reviews of player performance conducted by a committee of real people [1]. At the same time, it must be forgiving enough to avoid false positives, and it should allow users to appeal incorrect decisions. Without such a mechanism, players may react badly, depending on the severity of the punishment. If too many players who are simply incredibly skilled are flagged as cheaters, the game's reputation can suffer drastically. In any of these cases, the game or even the company might lose a crucial part of its fan base and user base [1].

It is therefore no surprise that many developers of multiplayer games spend huge amounts of money and resources on implementing the best and most reliable ACS. Such systems keep getting more sophisticated in response to the increasing complexity of cheats. Let’s have a look at some cases of cheating and the corresponding ACS strategies. Notice how many of these cheating techniques are similar, if not identical, to conventional exploits and vulnerabilities that hackers use against standard software. As mentioned above, detection can rely on statistical or heuristic validation techniques. These mechanisms try to determine whether the player’s current input is plausible. For example, if a cheater uses an aimbot in a first-person shooter, the system could detect humanly impossible accuracy when the player’s view rotates onto a target. In such a case, it is fairly safe to conclude that a cheat is in use. Other implementations validate input on the server side to check the player’s current game state, using checksums and hashes to detect unexpected values or invalid player behavior [1].
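The two detection ideas from this paragraph, a plausibility heuristic and a server-side hash check, can be sketched in a few lines of Python. This is a minimal, hypothetical sketch rather than how any particular ACS works: the threshold `MAX_TURN_RATE_DEG_PER_MS`, the `(timestamp, yaw)` sample format and the JSON-based state hashing are all assumptions made for illustration.

```python
import hashlib
import json
from typing import Dict, List, Tuple

# Hypothetical limit: the fastest view rotation (degrees per millisecond)
# still considered humanly plausible. Real systems would tune this on data.
MAX_TURN_RATE_DEG_PER_MS = 3.0


def angular_delta(a: float, b: float) -> float:
    """Smallest absolute difference between two yaw angles, in degrees."""
    d = (b - a) % 360.0
    return min(d, 360.0 - d)


def suspicious_turns(samples: List[Tuple[float, float]]) -> List[int]:
    """Return the indices of samples reached through an implausibly fast turn.

    samples: (timestamp_ms, yaw_degrees) pairs, sorted by timestamp.
    """
    flagged = []
    for i in range(1, len(samples)):
        (t0, yaw0), (t1, yaw1) = samples[i - 1], samples[i]
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        if angular_delta(yaw0, yaw1) / dt > MAX_TURN_RATE_DEG_PER_MS:
            flagged.append(i)
    return flagged


def state_digest(state: Dict[str, int]) -> str:
    """SHA-256 over a canonical (key-sorted) JSON encoding of the game state."""
    encoded = json.dumps(state, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def state_matches(server_state: Dict[str, int], client_digest: str) -> bool:
    """Server-side check: does the client's digest match the authoritative state?"""
    return state_digest(server_state) == client_digest


if __name__ == "__main__":
    # A 178 degree snap within one 16 ms frame is flagged; small adjustments are not.
    samples = [(0, 10.0), (16, 12.0), (32, 190.0), (48, 191.0)]
    print(suspicious_turns(samples))  # [2]
```

A single fast flick proves little on its own, so a real system would likely combine many such signals over time before acting, which ties back to the need to avoid false positives.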

But those methods are far from enough. Today, cheats exploit all sorts of vulnerabilities in hardware and software. State-of-the-art ACS therefore usually come with always-online functions that continuously monitor game state values and player behavior. Most common ACS also use low-level memory scanning to end a game session as soon as suspicious code or data is detected in memory, following the principle that any software could carry unwanted code [2]. To do this, an ACS usually needs a high degree of control over the operating system (OS) API, which results in relatively restrictive game setups. If users run the OS in safe mode, disable driver signature enforcement or have outdated third-party software installed, the ACS might end their game session or not even let them log in to the server [3].
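Signature-based memory scanning can be sketched as a search for known byte patterns that may contain wildcards. In this simplified Python sketch, the memory snapshot is just a `bytes` object and the entries in `KNOWN_CHEATS` are made-up patterns; a real ACS would read the game process's memory through OS APIs and keep its signature database secret and up to date.

```python
from typing import List, Optional

# A signature is a byte pattern in which None is a wildcard ("??" in text form).
Signature = List[Optional[int]]


def parse_signature(text: str) -> Signature:
    """Turn a string such as '48 8B ?? 10' into [0x48, 0x8B, None, 0x10]."""
    return [None if tok == "??" else int(tok, 16) for tok in text.split()]


def find_signature(memory: bytes, sig: Signature) -> int:
    """Return the first offset at which sig matches memory, or -1 if absent."""
    for start in range(len(memory) - len(sig) + 1):
        if all(b is None or memory[start + i] == b for i, b in enumerate(sig)):
            return start
    return -1


# Made-up signatures for illustration only; they do not describe real cheats.
KNOWN_CHEATS = {
    "demo_aimbot": parse_signature("48 8B ?? 10 FF D0"),
    "demo_wallhack": parse_signature("C7 05 ?? ?? ?? ?? 00 00 80 3F"),
}


def scan(memory: bytes) -> List[str]:
    """Names of all known cheat signatures present in a memory snapshot."""
    return [name for name, sig in KNOWN_CHEATS.items() if find_signature(memory, sig) != -1]


if __name__ == "__main__":
    snapshot = bytes([0x90, 0x48, 0x8B, 0x05, 0x10, 0xFF, 0xD0, 0xC3])
    print(scan(snapshot))  # ['demo_aimbot']
```

If `scan()` returns any names, the ACS would end the session as described above. The naive loop is easy to follow; a real scanner would likely use a faster search strategy, but the matching rule stays the same.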

One recent example shows how basic security methods apply to ACS as well. In February 2023, Valve, the developer and publisher of the highly competitive esports game Dota 2, used a honeypot to ban 40,000 cheaters at once. Valve created data that wasn’t used in the actual game but would be read by the popular cheating software g+. Whenever this data was read, Valve’s ACS knew immediately that only a cheat could have done so and banned the player in question [4]. Call of Duty developer Activision also uses honeypots in its ACS Ricochet. It adds invisible enemies, so-called Hallucinations, which can only be detected by processing the game’s current state data. If a player tries to kill one of these invisible enemies, they are obviously using a cheat [5]. The downside of some of these ACS methods is that the cheats or the cheating software must often be known in advance.
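Both honeypots boil down to the same rule: plant bait that a legitimate client never touches and flag anyone who does. The following is a minimal, hypothetical sketch; the `HoneypotMonitor` class and the numeric decoy IDs are illustrative assumptions, not Valve's or Activision's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class HoneypotMonitor:
    """Flags players who interact with bait that legitimate clients never use."""

    decoy_ids: Set[int]  # hypothetical IDs of bait entities or data fields
    flagged: Set[str] = field(default_factory=set)

    def record_interaction(self, player: str, target_id: int) -> bool:
        """Call whenever a player reads, targets or shoots at target_id.

        Returns True if the interaction hit a decoy and the player was flagged.
        """
        if target_id in self.decoy_ids:
            self.flagged.add(player)
            return True
        return False


if __name__ == "__main__":
    monitor = HoneypotMonitor(decoy_ids={9001, 9002})
    monitor.record_interaction("alice", 17)    # a real opponent: fine
    monitor.record_interaction("bob", 9001)    # an invisible decoy: only a cheat "sees" it
    print(monitor.flagged)  # {'bob'}
```

Because legitimate clients never expose the decoys, a single hit is strong evidence. The limitation is the one noted above: the bait only catches cheats that actually read it.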

To be fair, most of these ACS rules are fairly obvious and welcomed by developers and players alike, since both simply want fair, cheat-free online lobbies. Nevertheless, it is surprising to see developers resort to such extreme measures just to stop players from seeing through walls, given that no lives or critical infrastructure are at stake.

Players are voicing criticism, too. Many complain that ACS collect too much data and personal information, be it hardware details, information about other processes running on the machine or statistics on a player’s physical behavior. Some games even enable anti-cheat measures in their offline single-player mode [6] [7]. Some ACS also appear to keep running in the background even when the game itself is not running [8].

One very common cheating technique is modifying game data through DLL injection. Here, custom code packed into a DLL is loaded into a running, foreign process. The injected code then runs inside that process, where it can read and modify its memory; it might also start another process which then interferes with the hijacked one. You might have noticed that this technique is not at all exclusive to games! DLL injection, like other software “hacks” used by cheating gamers, can potentially be used to cause serious harm to any kind of digital infrastructure.
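On the defensive side, one simple countermeasure against injected DLLs is to compare the modules loaded into the game process against an expected list. This Python sketch assumes the ACS has already obtained the names of the loaded modules (how it does so is out of scope here); the allowlist contents and the function name are hypothetical.

```python
from typing import Iterable, List

# Hypothetical allowlist of modules the game process is expected to load.
EXPECTED_MODULES = {"game.exe", "ntdll.dll", "kernel32.dll", "user32.dll", "d3d11.dll"}


def unexpected_modules(loaded: Iterable[str]) -> List[str]:
    """Return loaded module names missing from the allowlist, sorted.

    Windows file names are case-insensitive, so names are compared in lower case.
    """
    return sorted({name.lower() for name in loaded} - EXPECTED_MODULES)


if __name__ == "__main__":
    loaded = ["Game.exe", "NTDLL.DLL", "kernel32.dll", "cheat_overlay.dll"]
    print(unexpected_modules(loaded))  # ['cheat_overlay.dll']
```

A pure name check is easy to bypass, for example by renaming the DLL, which is one reason why ACS add memory scanning and signature checks on top of it.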

Developers of ACS find themselves in an arms race with cheat developers: both sides keep upgrading their arsenal and resorting to ever more complex and extreme measures to beat the other. To see a related kind of code injection in action and understand how even single-player games can open a backdoor for cheaters and hackers, we can go back to 2016, when Capcom introduced a software vulnerability in one of its games. So let’s take a closer look at how Street Fighter V created a gap in Intel’s security architecture.

The vast majority of games are developed either by large companies with highly specialized software engineers and game developers, often working with proprietary software, or by independent studios and smaller teams, which usually rely on well-established engines such as Unreal Engine or Unity. Either way, big players stand behind the nitty-gritty details of communicating with the OS. As a result, these products usually rely on libraries, APIs and hardware drivers that are well tested and certified by the respective OS vendor. Sometimes, however, developers decide to write their own drivers and have them certified before the game ships. This is how Capcom.sys ended up on the PCs of many players all over the world.

Capcom.sys is a driver shipped with Street Fighter V. Shortly after release, some cheaters found a way to exploit the driver to execute code in Kernel Mode by disabling SMEP [9].

Before looking into the driver’s code, let’s briefly summarize what this actually means.

An OS has a so-called “User Mode” and a “Kernel Mode”. User Mode is where processes, i.e. normal executables, run. Whenever a process needs to access low-level functionality such as hardware drivers, it calls the OS API, which then switches to Kernel Mode. The drivers and other low-level code can then perform the requested operations before control eventually returns to User Mode. The OS also strictly isolates User Mode and Kernel Mode virtual memory. This provides a layer of security by preventing user processes from corrupting kernel memory or accessing memory they are not allowed to touch [11]. Some CPUs implement a feature called Supervisor Mode Execution Protection, or SMEP for short. This protection prevents the CPU from executing code located in User Mode memory while it runs at the highest privilege level, i.e. in Kernel Mode. In other words, it stops the kernel from being tricked into running untrusted, potentially harmful code supplied by a user process [12].
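The SMEP rule itself is small enough to model in a few lines. This Python sketch is a deliberate simplification: it only considers the current privilege level, the user/supervisor flag of the page being executed and the SMEP bit, bit 20 of the cr4 control register [13], and it ignores every other paging check a real CPU performs. The `Page` class and the function name are hypothetical.

```python
from dataclasses import dataclass

SMEP_BIT = 20  # CR4 bit 20 enables SMEP


@dataclass
class Page:
    user_accessible: bool  # U/S flag of the page-table entry: True = User Mode page


def instruction_fetch_allowed(cpl: int, page: Page, cr4: int) -> bool:
    """Simplified SMEP rule for fetching an instruction from `page`.

    cpl is the current privilege level: 0 = Kernel Mode, 3 = User Mode.
    All other paging checks (present bit, NX bit, ...) are ignored here.
    """
    smep_enabled = bool((cr4 >> SMEP_BIT) & 1)
    if cpl < 3 and page.user_accessible and smep_enabled:
        return False  # real hardware raises a page fault instead
    return True


if __name__ == "__main__":
    cr4 = 1 << SMEP_BIT  # only the SMEP bit set, for illustration
    user_page = Page(user_accessible=True)
    print(instruction_fetch_allowed(3, user_page, cr4))  # True: user code in User Mode
    print(instruction_fetch_allowed(0, user_page, cr4))  # False: kernel jumping into user code
    print(instruction_fetch_allowed(0, user_page, 0))    # True: SMEP switched off
```

The last line shows why clearing a single bit matters so much: with SMEP off, nothing in this check stops the kernel from running code that lives in User Mode memory.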

Exactly such calls can be triggered through the Capcom.sys driver. Normally SMEP would stop them, but Capcom apparently didn’t double-check a specific function in the driver’s code before shipping. And since this was a signed driver, i.e. certified as trustworthy, the OS neither blocked nor removed it from the user’s machine.

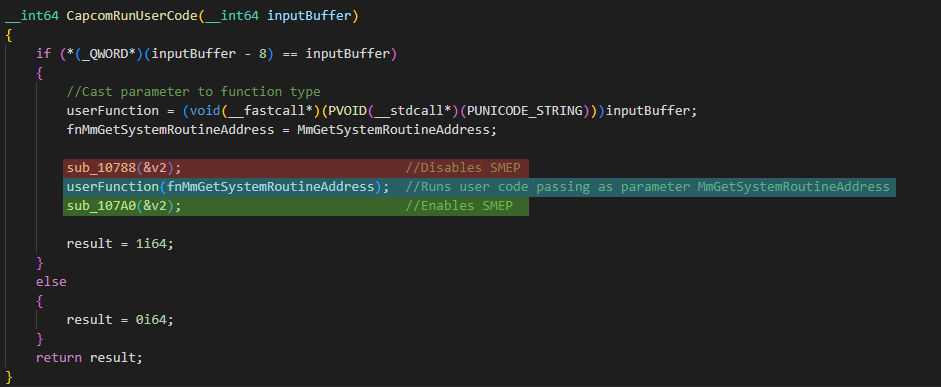

To see exactly what happened, let’s look at the decompiled code of said function (note that the variable and function names don’t match the original; this decompilation was created by a user on the forum cited in [9]).

As you can see above, this function consists of an if-else branch and returns a result. What’s most interesting, however, is the call to userFunction right in the middle of the body. This call is surrounded by calls to sub_10788() and sub_107A0(). As the comments in the decompiled code suggest, these calls disable SMEP before userFunction is called and enable it again afterwards.

This means that whatever code the caller supplies through the userFunction pointer is executed by the driver in Kernel Mode without any protection whatsoever. On top of that, the driver hands fnMmGetSystemRoutineAddress to userFunction as an argument, which gives the supplied code an easy way to look up further kernel routines. It is not too hard to write some additional code that wraps this driver function to execute arbitrary code (see [9] for a full code template).
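The flawed pattern can be reproduced as a harmless Python model. `ModelCpu` and the helper names below are hypothetical stand-ins for the driver's state, sub_10788(), sub_107A0() and the dispatch function; the model only tracks the SMEP bit, and it passes the CPU object to the callback purely so we can observe what the callback "sees".

```python
from typing import Callable, TypeVar

SMEP_MASK = 1 << 20  # CR4 bit 20
T = TypeVar("T")


class ModelCpu:
    """Toy CPU: only the CR4 register is modelled."""

    def __init__(self) -> None:
        self.cr4 = SMEP_MASK  # start with SMEP enabled


def disable_smep(cpu: ModelCpu) -> None:
    """Stand-in for sub_10788(): clear the SMEP bit in CR4."""
    cpu.cr4 &= ~SMEP_MASK


def enable_smep(cpu: ModelCpu) -> None:
    """Stand-in for sub_107A0(): set the SMEP bit again."""
    cpu.cr4 |= SMEP_MASK


def dispatch_user_function(cpu: ModelCpu, user_function: Callable[[ModelCpu], T]) -> T:
    """The flawed pattern: caller-supplied code runs while SMEP is off.

    Nothing checks what user_function is or where its code lives.
    """
    disable_smep(cpu)
    result = user_function(cpu)
    enable_smep(cpu)
    return result


if __name__ == "__main__":
    cpu = ModelCpu()
    smep_seen_by_callback = dispatch_user_function(cpu, lambda c: bool(c.cr4 & SMEP_MASK))
    print(smep_seen_by_callback)      # False: the callback ran without SMEP
    print(bool(cpu.cr4 & SMEP_MASK))  # True: SMEP is back on afterwards
```

SMEP is switched off only briefly, but that window is exactly when the untrusted code runs.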

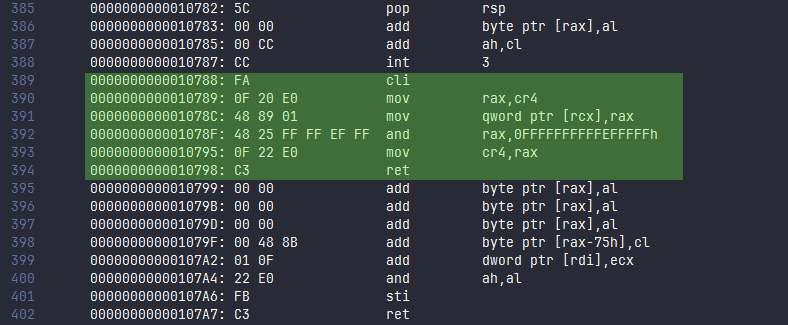

To verify what sub_10788() and sub_107A0() actually do, let’s look at the assembly code I disassembled from the original Capcom.sys driver myself.

The assembly clearly mirrors the C++ code from the figure above it. The most important functions are color-coded in both listings. If we now follow the call target 0000000000010788 to sub_10788(), we find the following function.

Notice the register cr4 in line 393. It is one of the control registers of x86-64 CPUs, and its 21st bit (bit 20, counting from zero) controls whether SMEP is enabled [13]. In line 392, the value 0FFFFFFFFFFEFFFFF is used as a bit mask for the value written to cr4. Bits are numbered starting from the least significant end, so we count from the right-hand side of this hexadecimal constant; the little-endian byte order Windows uses on x86-64 only affects how values are stored in memory, not how the disassembler prints them. And indeed, the 21st bit is zero while all others are ones, so the mask clears exactly the SMEP bit. The function shown above therefore disables SMEP and consequently allows hackers as well as cheaters to run any code they wish without this safeguard. By the way: SMEP is not exclusive to Intel, as current AMD processors support it too, and on CPUs without SMEP the driver would run the supplied code in Kernel Mode anyway, simply without a protection to switch off first.

(The function that re-enables SMEP is not shown here, since it simply reverses this step.)
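The bit arithmetic can be double-checked in Python. The constant is taken from line 392 of the disassembly; the sketch assumes that the mask is combined with the previous cr4 value through a bitwise AND, and the sample cr4 value is hypothetical, chosen only to show the effect of the mask.

```python
MASK = 0xFFFFFFFFFFEFFFFF  # constant from line 392 of the disassembly
SMEP_BIT = 20              # bit 20 is the 21st bit when counting from 1
ALL_ONES_64 = (1 << 64) - 1

# The mask is "all 64 bits set" with exactly the SMEP bit cleared.
assert MASK == ALL_ONES_64 & ~(1 << SMEP_BIT)

bits = f"{MASK:064b}"              # printed most significant bit first
assert bits.count("0") == 1        # a single zero ...
assert bits[63 - SMEP_BIT] == "0"  # ... located at bit 20, counted from the right


def clear_smep(cr4: int) -> int:
    """AND-ing with the mask keeps every cr4 bit except SMEP."""
    return cr4 & MASK


if __name__ == "__main__":
    example_cr4 = 0x1506F8  # hypothetical cr4 value with the SMEP bit set
    print(hex(clear_smep(example_cr4)))  # 0x506f8
```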

So what does this mean in the context of games, ACS and general software security?

After the exploit was discovered, the Capcom.sys certificate was revoked immediately. Obviously, such security vulnerabilities cannot be allowed to remain on users’ systems for long. This case shows that even games, which usually don’t seem to need high security standards, can still introduce serious issues.

A game system’s proximity to the OS, the increasing complexity of cheats and ACS plus possible financial and reputational pressure on game developers might all culminate in a scenario where something like the Capcom case can actually happen.

Although severe, this is by no means something that happens regularly. As Madeline Miller describes in her blog, game companies usually don’t deal with such delicate software, either in regular game development or in ACS [1]. Still, this case shows how important high-quality production code is, and it should make software engineers a bit more aware of their responsibility, even when they seem to be working on code unrelated to security.

Sources:

- [1] Madeline Miller, Blog: https://madelinemiller.dev/blog/anticheat-an-analysis/
- [2] 4Players, Functionality of different ACSs: https://www.4players.de/cs.php/anticheat/-/185/16625/index.html
- [3] EasyAntiCheat, Overview of EasyAntiCheat Troubleshooting: https://www.easy.ac/de-de/support/game/issues/errors/
- [4] Golem, Honeypot for Cheat Software (Valve): https://www.golem.de/news/dota-2-valve-lockt-cheater-in-einen-honeypot-2302-172157.html
- [5] Activision, Honeypot for Cheat Software (Activision): https://www.callofduty.com/blog/2023/06/call-of-duty-ricochet-anti-cheat-season-04-update
- [6] Steam Community, Watch Dogs Offline ACS: https://steamcommunity.com/app/447040/discussions/0/152390014801453165/
- [7] Wired, Kernel Based Anti-Cheat Drivers: https://www.wired.com/story/kernel-anti-cheat-online-gaming-vulnerabilities/
- [8] Reddit, Kernel based Anti-Cheat in single player games: https://www.reddit.com/r/pcgaming/comments/q8ao19/every_game_with_kernellevel_anticheat_software/
- [9] Unknown Cheats, Capcom Exploit example: https://www.unknowncheats.me/forum/general-programming-and-reversing/189625-capcom-sys-usage-example.html
- [10] Stronghold Cyber Security, Kernel Ring Model: https://www.strongholdcybersecurity.com/2018/01/04/meltdown-bug-meltdown-attack-intel-processors/cpuprotectionring/
- [11] Microsoft Learn, User-Mode / Kernel-Mode: https://learn.microsoft.com/en-us/windows-hardware/drivers/gettingstarted/user-mode-and-kernel-mode
- [12] Intel, Supervisor Mode Execution Protection: https://edc.intel.com/content/www/us/en/design/ipla/software-development-platforms/servers/platforms/intel-pentium-silver-and-intel-celeron-processors-datasheet-volume-1-of-2/005/intel-supervisor-mode-execution-protection-smep/
- [13] OSDev Wiki, CPU Registers: https://wiki.osdev.org/CPU_Registers_x86-64#CR4
- [14] Game Deals, Street Fighter V cover image: https://img.gg.deals/01/b4/323edf0507129ecd71d9acd5dff96b32200f_1920xt1080_S1000.jpg
