Now that we’ve covered the basics, this second part will cover the most relevant container threats, their possible impact, and existing countermeasures. Beyond that, I'll give a short overview of the most important sources of container threats. I’m pretty sure most of them will surprise you. Want to know more?

Container threats

Due to their features and fundamental implementation, containers are exposed to several attack vectors. However, before we go further, let me make two things clear. First, this is not only the fault of container vendors like Docker. As we saw in the first part, containers rely on many features offered by the underlying Linux kernel, which has bugs, too. Second, no software in the world can be called bug-free and robust against every sort of attack. Therefore, this post is not about criticizing Docker or containers in general (honestly, I really like them both!). It is about presenting existing attack surfaces, and what can be done about them, in a neutral way. So keep the following in mind for the rest of this blog post: Nobody is perfect! And few things prove this as well as software does.

Inner-container attacks

Probably the most obvious threat to a container is an attacker who wants to gain access to the container itself. There might be different reasons for this. The attacker might simply intend to shut down the container, or to steal user data that might turn out to be valuable. A more advanced motivation might be to gain a foothold in a single container and use it as a starting point for attacks against other containers or the container host.

Interestingly, flaws introduced by container systems like Docker are usually not what allows an attacker to capture a container. Instead, it’s often the software, or rather the services hosted by a container, that makes it vulnerable to attackers. Here’s a more detailed list of potential causes:

- Outdated software: The software running inside a container is not kept up to date.

- Exposure to insecure/untrusted networks: A container is accidentally accessible from unknown networks.

- Use of large base images: Lots of software means lots of things to patch. Every additional package or library increases a container’s attack surface.

- Weak application security: Attackers might exploit vulnerabilities in a custom application or in an application’s runtime environment (e.g. Java’s JVM).

- Working with the root user (UID 0): By default, the user inside a container is root (UID 0). We’ll cover the consequences in an extra section.
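It is easy to check whether a process inside a container runs as root. Here is a minimal POSIX shell sketch. The helper name `warn_if_root` is hypothetical. It takes a numeric UID, defaulting to the output of `id -u`, and reports whether that UID is 0:

```shell
#!/bin/sh
# Hypothetical helper: report whether a UID (default: the current one) is root.
# Returns 1 for UID 0 so that it can be used in scripts as a check.
warn_if_root() {
  uid="${1:-$(id -u)}"
  if [ "$uid" -eq 0 ]; then
    echo "WARNING: running as root (UID 0)"
    return 1
  fi
  echo "OK: running as UID $uid"
}
```

Called without arguments in a freshly started `ubuntu:latest` container, it should print the warning, because root is the default user there. To switch to an unprivileged user, use the `--user` option of `docker run` (e.g. `--user 1000:1000`) or a `USER` instruction in the Dockerfile.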

Cross-container attacks

Losing control over a single container might be annoying, but in basic scenarios there’s no impact on the rest of the container infrastructure. As a last resort, the affected container has to be deleted and re-instantiated.

The next level consists of capturing a container and using it to attack other containers on the same host or within the local network. For example, an attacker might have the following goals:

- Stealing database credentials by means of ARP spoofing.

- Executing DoS (Denial of Service) attacks, either by flooding other containers’ services with requests until they go down, or by making a single container consume all available physical resources (e.g. by means of fork bombs).

Again, the vulnerabilities that make this possible are generally not introduced by faulty container implementations or container-specific weaknesses. Instead, the following points open up this attack vector:

- Weak network defaults: Docker uses a bridge network by default. The host’s docker0 interface acts as a switch and passes traffic between containers without any restriction.

- Weak cgroup restrictions: Poor or completely missing resource limits.

- Working with the root user (UID 0): High risk, because parts of the kernel are not namespace-aware.
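Weak cgroup restrictions can be tightened per container with `docker run` flags such as `--memory`, `--cpu-shares` and `--pids-limit`. The last one caps the number of processes in a container and so defuses fork bombs; it needs a reasonably recent Docker release and kernel. The sketch below uses a hypothetical wrapper called `limited_run`, and the limit values are arbitrary examples. It only prints the resulting command so that you can review it before running it:

```shell
#!/bin/sh
# Hypothetical dry-run wrapper: print a `docker run` command with cgroup limits
# instead of executing it. The limit values are illustrative, not recommendations;
# override them via MEM_LIMIT, CPU_SHARES and PIDS_LIMIT.
limited_run() {
  image="$1"
  shift
  printf '%s\n' "docker run --memory=${MEM_LIMIT:-256m} --cpu-shares=${CPU_SHARES:-512} --pids-limit=${PIDS_LIMIT:-100} $image $*"
}

limited_run ubuntu:latest /bin/bash
```

To actually apply the limits, run the printed command itself. Afterwards, `docker stats` shows per-container resource usage.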

The bridge network configuration in particular constitutes a high risk, since it gives unrestricted access to all other containers attached to the same interface. Docker’s policy is to provide a set of reasonable default configurations so that users can get up and running without having to deal with various settings beforehand. The bridge configuration is part of these defaults. This of course makes sense, considering that e.g. an application container and a database container have to communicate. However, this approach has a disadvantage: some users are tempted to leave the entire configuration responsibility to the container system. The same holds for cgroup settings.
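One way to avoid leaving everything on the default docker0 bridge is to give each application its own user-defined bridge network, a feature available since Docker 1.9. Containers on different user-defined networks cannot reach each other. In addition, starting the daemon with `--icc=false` disables unrestricted inter-container communication on the default bridge. The sketch below only prints the commands so that you can review them first. The network name, the container names and the `my-app` image are hypothetical, and older releases such as 1.11 spell the `docker run` option `--net` instead of `--network`:

```shell
#!/bin/sh
# Print (not execute) the commands for a dedicated user-defined bridge network,
# so that only containers attached to it can reach each other.
# "backend", "db", "app" and "my-app" are hypothetical names.
isolated_network_plan() {
  net="${1:-backend}"
  cat <<EOF
docker network create --driver bridge $net
docker run -d --name db --network $net postgres
docker run -d --name app --network $net my-app
EOF
}

isolated_network_plan
```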

Attacks against container management tools

Deploying containerized applications in production is usually not done manually. Instead, tools for container orchestration (e.g. Kubernetes or Apache Mesos) as well as service discovery (e.g. Eureka by Netflix) are employed in order to realize automated workflows as well as robust and scalable environments.

However, using such tools requires permitting bidirectional network traffic between containers and the management tools. Tools like Kubernetes manage containers based on health checks, which draw on many different metrics gathered from the containers. As a consequence, the connections have to be permanent rather than temporary. This opens up several conceivable scenarios:

- An attacker captures a single container. Since there’s a network connection to the orchestration tool, he also manages to bring the tool under his control by exploiting documented weaknesses. From that moment on, the attacker might, for example, shut down the entire production system, execute DoS attacks or expand into other parts of the internal network.

- Again, an attacker captures a single container. Due to vulnerabilities in the service discovery tool, he captures it as well and either shuts it down or manipulates its data. As in the scenario above, he might aim to crash the whole system or to make his way through the internal network.

Once more, these threats are not caused by the container system itself. But what could lead to the scenarios described above?

- Weak network defaults: Yes indeed, it’s the network configuration again. The problem is the same as explained in the section covering cross-container attacks.

- Weak firewall settings: Poorly configured firewalls may allow containers or additional tools to access parts of the internal network which are not relevant for their purpose.

Escaping

Another very important threat that must not be underestimated is escaping from the container onto the host. In fact, this can be considered the worst-case scenario, because once the attacker succeeds, his influence is no longer limited to a container. Assuming he manages to obtain root privileges, he controls every service and every application running on that host. Moreover, the attacker may attempt to compromise other machines within the local network.

Escaping is a particularly critical aspect for containers, since they deliberately give up some degree of isolation in exchange for advantages in storage and speed. Here are the aspects that facilitate container breakouts:

- Insecure defaults/weak configuration: This concerns the host firewall and cgroup settings.

- Information disclosure: e.g. exposing the host’s kernel ring buffer, procfs or sysfs to containers.

- Weak network defaults: It’s very risky to bind a host’s services and daemons to all interfaces (0.0.0.0), because this makes them accessible from within containers.

- Working with root user (UID 0): Operating as root within a container might lead to container breakout (a proof of concept for Docker will be presented in the next section).

- Mounting host directories inside containers: This is very critical especially for Docker containers. I will explain that in the proof of concept section.
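To find host services bound to all interfaces, and therefore reachable from containers, you can inspect the listening TCP sockets on the host. Inside a container, /proc/net/tcp only shows the container’s own network namespace. The sketch below uses a hypothetical helper name. It parses `/proc/net/tcp` directly, where addresses are hex-encoded, `00000000` stands for 0.0.0.0 and state `0A` means LISTEN. It covers IPv4 only:

```shell
#!/bin/sh
# Hypothetical helper: print the ports of IPv4 TCP sockets listening on 0.0.0.0.
# Reads /proc/net/tcp by default; its columns are "sl local_address rem_address st ...",
# addresses are hex-encoded ("00000000" = 0.0.0.0) and state "0A" means LISTEN.
wildcard_listen_ports() {
  awk 'NR > 1 && $4 == "0A" { split($2, addr, ":"); if (addr[1] == "00000000") print addr[2] }' \
    "${1:-/proc/net/tcp}" |
    while read -r hexport; do
      printf '%d\n' "0x$hexport"   # ports are hex-encoded too, e.g. 0016 -> 22
    done
}
```

For instance, an sshd bound to 0.0.0.0:22 appears as port 22. IPv6 listeners live in /proc/net/tcp6 and are not covered here. `ss -tln` shows both in a readable form.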

The points that greatly increase the risk of container escapes look very familiar. Indeed, a relatively small set of container settings and properties accounts for most of the associated risks. One of the most critical aspects is that a Docker container’s default user is root. If an attacker succeeds in breaking out of a Docker container as root, the host system is at his mercy. Under particular conditions, escaping a Docker container is actually very easy. I will cover this in the following section.

The Linux kernel

Yes, it’s true: even the Linux kernel itself constitutes an important threat as far as containers are concerned. There are multiple reasons for this:

- Privilege escalation vulnerabilities: New kernel weaknesses that let users extend their privileges are discovered all the time. For example, many smartphone users exploit such security gaps to become root on their Android OS. Of course, this also affects containers.

- Outdated kernel code: Many of the kernel vulnerabilities mentioned above are a direct result of users being able to load old packages and kernel modules that are no longer maintained or updated. The most popular Linux distributions, like Ubuntu, continuously integrate protection mechanisms against this, but they have only just started doing so. Another source of kernel-related security issues is the system call interface.

- Ignorance of the development team: Although it may sound odd, the development team itself is a great threat to kernel security, because for many core developers security is not the highest priority. Linus Torvalds is particularly infamous for treating security bugs as no more important than other bugs.

Proof of Concept: Escaping a Docker container

To make this more concrete, I will now demonstrate how to escape a Docker container. You will soon see that although this may sound like rocket science at first, it is much easier than it seems. And that is exactly what makes it so dangerous.

How it works in theory

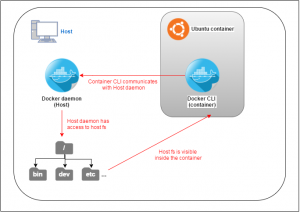

In the following “hack”, we’ll talk to the host’s Docker daemon using a Docker CLI installed inside a container. You heard right, we’ll run Docker inside Docker ;).

As soon as we have our dockerized Docker container up and running, we’ll use the host’s Docker socket to gain unrestricted root access to the host system (figure 1).

Docker architecture

Docker daemon? Docker socket? OK, let’s stop here for a moment and look at the fundamentals of the Docker architecture. It’s important to understand that Docker is not just a single script or program. It consists of three major components, which form a complete container platform only when they come together.

The first element of Docker is what you use as soon as you run commands like docker run, docker pull and so forth. It’s called the Docker client or CLI (command line interface).

However, it takes another component to process these commands and do the heavy lifting of creating, starting, stopping, restarting and destroying containers. This part of Docker is called the Docker daemon, because it runs as a background process.

The third essential part of Docker is the Docker Registry. I already mentioned it in the previous blog post and will skip it here since it’s not relevant for our current concern.

Instead, the next interesting question is: How can CLI and daemon communicate?

To process commands sent by the Docker client, the Docker daemon provides a RESTful API. When reading about “REST”, one might immediately think of HTTP over a network port. The API does speak HTTP, but by default the daemon serves it on a UNIX domain socket rather than a TCP port. This bidirectional communication endpoint can be found at the following path: /var/run/docker.sock.
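Since the API is ordinary HTTP carried over a UNIX socket, any HTTP client that can use such a socket can talk to the daemon, not just the Docker CLI. Here is a minimal sketch with curl. It assumes curl 7.40 or newer (the release that added `--unix-socket`) and read/write access to the socket. The function name and the `DOCKER_SOCK` variable are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: send a GET request to the Docker Engine API over its UNIX socket.
# curl needs a host name in the URL; "localhost" is just a placeholder here.
docker_api_get() {
  curl --silent --show-error --fail \
    --unix-socket "${DOCKER_SOCK:-/var/run/docker.sock}" "http://localhost$1"
}

# Usage (lists the host's images as JSON, similar to `docker images`):
#   docker_api_get /images/json
```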

The last thing we need to know before we start is how to access this socket from within a container. Conveniently, Docker comes with a feature that allows mounting directories of the host directly into a container. Such volumes, as they’re called in the Docker ecosystem, can be very handy in certain situations, like sharing configuration files among multiple containers. However, I will now show you that this feature can backfire if it’s used carelessly.

How it’s done

The first thing we have to do is run an ordinary Docker container, as we did several times before. This time, however, we will also configure a host directory to be mounted within the new container. In other words, we’ll add a volume to the container. For our purpose, we can’t pick an arbitrary directory. Instead, we have to choose the /var/run folder:

```shell
# Launch a container with a volume mounted at /var/run
$ docker run -i -t -v /var/run:/var/run ubuntu:latest /bin/bash
```

Why /var/run in particular? As mentioned in the previous section, the Docker socket docker.sock resides in this folder, and we need it to talk to the host’s Docker daemon. That’s the only reason we need this particular directory. Note that inside the container, the socket must end up at /var/run/docker.sock, since this is the default path where the Docker CLI expects to find it.
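From inside a container, it’s easy to check whether a Docker socket has been exposed. Note that mounting just the socket file (`-v /var/run/docker.sock:/var/run/docker.sock`) is a common pattern and is exactly as dangerous as mounting all of /var/run. Here is a minimal sketch; the helper name is hypothetical:

```shell
#!/bin/sh
# Hypothetical check, meant to run inside a container:
# is a Docker daemon socket present at the given path?
socket_exposed() {
  sock="${1:-/var/run/docker.sock}"
  if [ -S "$sock" ]; then
    echo "exposed: $sock"
  else
    echo "not found: $sock"
    return 1
  fi
}
```

Inside the container started with `-v /var/run:/var/run`, `socket_exposed` should report the socket as exposed.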

Now that we have the host’s Docker socket available, we also need a command line interface for sending commands. So our next step is to install Docker inside the container. I won’t cover this here, because the procedure is no different from installing Docker on a normal host, and there’s plenty of documentation covering it for various Linux environments. Once you’re done, make sure everything works properly:

```shell
# We do this WITHIN a running container!
root@abc123def:/# docker -v
Docker version 1.11.0
```

Assuming our Docker in Docker is ready, we can now hit the host daemon from within the container. Can’t believe it? Type docker images at your container prompt and look closely at the result. Indeed, the command returned a list of all images located on the host, didn’t it?

We’re almost done. Before we take the last steps towards breaking out of our container, let’s be clear about what we’ve achieved so far. Since we can control the host’s Docker daemon from inside a container, we logically have everything we need to force our way into any host directory we like. How about mounting the host’s entire filesystem into a new container?

```shell
# Attention: We are already WITHIN a container!
# We're mounting the host's fs root at /test
root@abc123def:/# docker run -i -t -v /:/test ubuntu:latest /bin/bash
```

Once your containerized container is ready (which should take a matter of seconds), cd into /test and run ls. If everything works as expected, you’ll discover that every folder under the host’s root directory is now available within /test. We can now manipulate the host filesystem as we like, which is exactly what we wanted to achieve.

To make things a little more comfortable, we’ll also start a new root shell with /test as our root directory. So go back to the container root / and type the following:

```shell
# Configure /test as our new root dir (chroot jail) and start bash inside the jail
root@ghi456jkl:/# chroot /test /bin/bash
# Et voilà
root@ghi456jkl:/# cd /; ls
bin   dev  home   lib    mnt  proc  run   srv  tmp  var
boot  etc  lib64  media  opt  root  sbin  sys  usr
```

As a side effect, this also helps us circumvent the access restrictions introduced by a bind mount. To prove to yourself that you’re now working directly on the host, navigate to a directory of your choice and create a sample file.

Now exit the inner container and then the outer container, until you’re back on the Docker host. Go to the folder where you created the sample file from within the inner container. You’ll see that the file really exists.

The root-dilemma

It’s legitimate to ask why and how it is possible to gain control over the entire host system from inside a container. On your Docker host, navigate to the sample file from above again and check the file’s permissions and ownership:

```shell
$ ls -al sample_file
-rw-r--r-- 1 root root 0 Aug 10 00:00 sample_file
```

Look at the user who owns the file from the host’s point of view: root??

At first sight, that’s no surprise, because the user inside the container was also called root. But shouldn’t this root user exist only within the container itself? And before you ask: the container’s root user really has UID 0. So you may guess where this dilemma comes from. The container root can leave its enclosed environment, which is exactly what happens when it accesses a mounted host directory. Once it does, the host has no way to tell the “real” host root apart from the container root user. From the kernel’s perspective, a user is just a number, and in both cases that number is UID 0.
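The statement that a user is just a number to the kernel is easy to verify. File ownership is stored as numeric IDs, and names like “root” are only looked up in /etc/passwd for display. Here is a small sketch using GNU coreutils’ `stat -c`; BSD `stat` uses different options, and the helper name is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: print the numeric owner UID and GID of a file,
# i.e. what the kernel actually stores (no name lookup involved).
numeric_owner() {
  stat -c '%u:%g' "$1"
}
```

On the Docker host, `numeric_owner sample_file` should print `0:0` for the file created from inside the container, just as for a file created by the host’s root. `ls -ln` shows the same numbers.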

Of course, this behavior is a consequence of having the Docker socket mounted inside a container. Accessing this socket was the key to operating on the host without any restrictions. Some argue that nobody would ever expose the host’s Docker socket inside a container. In my opinion, that isn’t a very good argument, since relying solely on nobody ever mounting /var/run is too risky.

Countermeasures

So far, we have covered lots of potential risks and threats for containers, and we even got very concrete by stepping through a hands-on Docker escape. Now let’s see what kind of solutions have been developed in recent years to address these problems.

User namespaces

A new feature called user namespaces has been developed to tackle the issue we met when we entered the host filesystem from within a container. Remember the core of the problem: the Linux kernel could not distinguish between the container root user and the actual host root, since both carry UID 0. So once we had access to host directories that were supposedly restricted to the host root, nothing could have stopped us from damaging the entire system in the worst case.

The solution to this problem is “root remapping”, as Docker’s Director of Security Nathan McCauley calls it in his talk at DockerCon 2015. The root user inside a container is mapped to a different user outside the container. Within the container environment, root still has UID 0. On the host, however, it is represented by an unprivileged UID taken from a subordinate ID range, and that is the UID you see when it accesses a mounted host directory. There is no longer any confusion between different users with UID 0 on the host system. The container root now has only “nobody” privileges on the host and can no longer harm it.
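The arithmetic behind root remapping is simple. With `--userns-remap=default`, Docker uses a user named `dockremap` and takes a subordinate ID range for it from /etc/subuid (and /etc/subgid). Entries there have the form `name:start:count`, and container UID n appears on the host as start + n. Here is a minimal sketch. The helper name is hypothetical, and the range `100000:65536` is only a typical example, so check your own /etc/subuid:

```shell
#!/bin/sh
# Hypothetical helper: map a container UID to the host UID under user-namespace
# remapping, given an /etc/subuid-style entry "name:start:count".
host_uid_for() {
  container_uid="$1"
  IFS=: read -r _name start count <<EOF
$2
EOF
  if [ "$container_uid" -ge "$count" ]; then
    echo "UID $container_uid is outside the mapped range" >&2
    return 1
  fi
  echo "$((start + container_uid))"
}

host_uid_for 0 "dockremap:100000:65536"   # prints 100000
```

So the container’s root (UID 0) owns files on the host as UID 100000 in this example, an ordinary unprivileged user.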

Let’s first activate user namespaces in Docker to prove that this actually works. The scenario is basically the same: start a container with the Docker socket mounted as a volume and install Docker inside it. To keep things simple, reuse the container from above. Before we start, though, we have to make a small change to Docker’s systemd configuration (assuming your init system is systemd). Navigate to /etc/systemd/system/docker.service.d/ (create it if it doesn’t exist yet) and create a new file in this folder whose name ends with “.conf”. Then write the following into the file:

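```ini
# Override settings in the [Service] section of docker.service
[Service]
# Reset the inherited ExecStart first (systemd requires this before redefining it)
ExecStart=
# Start the daemon with user namespaces activated (see last parameter)
# (Docker 1.11 syntax; from Docker 1.12 on, the daemon binary is /usr/bin/dockerd)
ExecStart=/usr/bin/docker daemon -H fd:// --userns-remap=default
```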

Afterwards, we have to reload the systemd configuration and restart the Docker daemon:

```shell
# Reload configuration
$ sudo systemctl daemon-reload
# Restart Docker daemon
$ sudo systemctl restart docker.service
```

Again, prepare a Docker-in-Docker container and... hey, what’s going on?!

```shell
root@111abc222:/# docker images
Cannot connect to the Docker daemon. Is the docker daemon running on this host?
```

It seems we can’t access the mounted Docker socket, and the error message doesn’t tell us why. Further examination shows that the container user no longer has the permissions needed to talk to the host’s Docker daemon. Talking to the daemon requires root privileges, or at least membership in the docker group. With remapping, the container root is just an unprivileged UID on the host, while the socket is typically accessible only to root and the docker group. Indeed, user namespaces do their job pretty well.

However, we have already run into an important disadvantage of the current user namespaces implementation: they are optional and can be switched off. The Docker team even decided to leave them deactivated by default, which is why we had to enable them explicitly via a daemon parameter and restart the Docker service. At first sight, this doesn’t seem like a big deal. But think of the average user. I’m sure most users run the Docker daemon with user namespaces disabled. Worse, many have no idea how to turn this feature on and off, or that it even exists. Maybe Docker will adjust its default settings in future releases.

Another essential aspect is that user namespaces are a relatively new feature. New features commonly ship with bugs and unintended backdoors that can be exploited to bypass their security mechanisms. So user namespaces are not (yet?) the silver bullet of container security, since this feature is still very much a work in progress!

Nevertheless, user namespaces are already valuable when working with Docker. Remember when I said that accessing the Docker socket requires root privileges OR membership in the docker group? Take a moment to think about that, keeping in mind that the daemon runs with root privileges. Doesn’t that mean that belonging to the docker group is equivalent to having root privileges on the host? Yes, that’s exactly what it means. You see, the user namespaces feature didn’t arrive a moment too early.
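Since membership in the docker group is effectively root-equivalent, it’s worth auditing who belongs to it. Here is a minimal sketch that checks the current user’s groups; the helper name is hypothetical:

```shell
#!/bin/sh
# Hypothetical audit helper: does a space-separated group list
# (e.g. the output of `id -nG`) contain the "docker" group?
has_docker_group() {
  case " $1 " in
    *" docker "*) return 0 ;;
    *) return 1 ;;
  esac
}

if has_docker_group "$(id -nG)"; then
  echo "WARNING: $(id -un) is in the docker group (root-equivalent on this host)"
fi
```

To see all members of the group at once, use `getent group docker`.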

Control Groups

We already met cgroups in the previous post when discussing the basic container technologies, so I’ll keep this short. When we first looked at cgroups, the focus was on putting resource limits (CPU, RAM etc.) on a group of processes. In terms of security, they are also an effective mechanism for establishing access restrictions. For instance, the devices cgroup controls which device nodes a container may use. However, I don’t want to hide the fact that cgroups can also be a serious threat to container security. The reason is their implementation as a virtual filesystem, which lets external tools view existing cgroups and control them if necessary. Anyone interested in this information can mount the cgroup virtual filesystem, containers included! It must therefore be considered a possible path to container escape if used carelessly.
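To see how the cgroup virtual filesystem is exposed inside a container, check the mount table. The sketch below uses a hypothetical helper name. It prints every cgroup or cgroup2 mount together with its access mode: read-only or read-write. A read-write cgroup mount inside a container deserves a closer look. To my knowledge, Docker mounts it read-only in unprivileged containers, but that’s worth verifying on your own setup:

```shell
#!/bin/sh
# Hypothetical helper: list cgroup (v1) and cgroup2 mounts with their access mode.
# /proc/mounts fields: device mountpoint fstype options dump pass;
# the options field starts with "rw" or "ro".
cgroup_mounts() {
  awk '$3 == "cgroup" || $3 == "cgroup2" { split($4, opts, ","); print $2, opts[1] }' \
    "${1:-/proc/mounts}"
}

cgroup_mounts
```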

Capabilities

I think we agree that user namespaces are a reasonable kernel extension as far as container security is concerned. However, due to some Linux characteristics, even this new feature can’t fully protect the host system. And we’re not even talking about bugs here.

Maybe you have already heard of so-called setuid binaries (suid binaries for short). Unlike other binaries, they run with the privileges of the file’s owner instead of those of the user who starts them. A special type are suid root binaries. They belong to the root user and therefore always run with root’s unrestricted privileges, no matter which user starts them. You may be surprised to hear that probably the most famous of these binaries is /bin/ping, at least on many distributions at the time of writing. Just to be clear: on such systems, every time you run ping, it runs with root privileges!
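You can list the setuid binaries on a system, or in a container image, with `find`. Here is a minimal sketch; the helper name is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: list setuid regular files below a directory (default /usr/bin).
# -perm -4000 matches files with the setuid bit set; -xdev stays on one filesystem.
list_setuid() {
  find "${1:-/usr/bin}" -xdev -type f -perm -4000 -print 2>/dev/null
}
```

Adding `-user root` to the same `find` expression narrows the list to suid root binaries. On newer distributions, ping often no longer has the setuid bit. Instead it carries the `cap_net_raw` file capability, which `getcap` from libcap displays.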

The consequence of suid root binaries is that they open a wide gateway for privilege escalation attacks, and unfortunately, user namespaces can’t help us here. The idea behind capabilities is therefore to split root’s all-or-nothing privileges into fine-grained units that can be granted to a single process individually. Capabilities can be given to or taken away from a process dynamically, even at runtime. In the case of /bin/ping, privilege escalation can be avoided by granting only the permission it really needs: opening raw network sockets, i.e. CAP_NET_RAW. That is far better than being too generous and giving it unrestricted access to parts of the system that are none of its business.
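The capabilities of a running process are visible in /proc/<pid>/status as hex bitmasks (CapInh, CapPrm, CapEff, CapBnd). Bit n stands for capability number n as defined in <linux/capability.h>, e.g. 13 for CAP_NET_RAW and 21 for CAP_SYS_ADMIN. The following sketch tests a single bit; the helper name is hypothetical. To print the full list of capability names instead, use `capsh --decode=<mask>` from libcap:

```shell
#!/bin/sh
# Hypothetical helper: succeed if capability number $2 is set in hex mask $1.
has_cap() {
  [ "$(( (0x$1 >> $2) & 1 ))" -eq 1 ]
}

# CapEff of the awk process itself, which normally matches the calling shell's.
cap_eff=$(awk '/^CapEff:/ { print $2 }' /proc/self/status)
if [ -n "$cap_eff" ] && has_cap "$cap_eff" 21; then
  echo "CAP_SYS_ADMIN is in the effective set"
fi
```

For example, the mask `00000000a80425fb`, which as far as I know is what a default unprivileged Docker container reports, has bit 13 set but not bit 21. `docker run --cap-drop=ALL --cap-add=NET_RAW ...` restricts a container to exactly the capabilities it needs.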

Although capabilities are a reasonable and powerful feature, they also come with a great weakness, namely CAP_SYS_ADMIN. This is the most dangerous capability: it covers so many privileged operations that giving it to a process is nearly the same as running it as root. There is a high risk that many users are too lazy to think carefully about which permissions are really required and to assign them as individual capabilities. Instead, they might be tempted to just use CAP_SYS_ADMIN. As you can see, it’s not only about the technology; users play an important role in security, too.

Mandatory Access Control

So what else can be done? Another security concept, which is actually very old and now widely adopted by several container vendors, is Mandatory Access Control (MAC). MAC is heavily based on the paper “Integrity Considerations for Secure Computing Systems”, which was commissioned by the US Air Force in 1977. Among other things, this paper discusses the idea of watermarks and policies for protecting the integrity of a computing system’s data. However, it took about ten more years before the National Security Agency (NSA) pushed MAC towards integration into operating systems.

You may know Discretionary Access Control (DAC) from working with Linux. DAC bases its access decisions purely on subjects (e.g. users or processes), objects (e.g. files or sockets) and the permissions that object owners grant at their own discretion. MAC goes beyond that. It relies on a centrally defined policy, made up of a custom set of rules, to make its decisions. An essential assumption is that the security system treats everything not explicitly allowed by a policy rule as forbidden.

There are several MAC implementations available on Linux, of which SELinux (Security-Enhanced Linux) and AppArmor are probably the most popular. What they have in common is that they allow very fine-grained rules. However, both are generally considered quite complex to configure, since a custom policy requires creating a security profile whose rules must be written in a specific language. For this reason, Docker ships with an AppArmor template containing rules that should be a good default. For SELinux, Red Hat provides an appropriate Docker policy.
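To check which MAC label a process is actually running under, read the label the kernel exposes in /proc/<pid>/attr/current. A minimal sketch, with a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: print the MAC label (AppArmor profile or SELinux context)
# found in /proc/<pid>/attr/current. With the default path, this is the label of
# the reading `tr` process itself, which normally shares the caller's label.
mac_label() {
  f="${1:-/proc/self/attr/current}"
  label=$({ tr -d '\000' < "$f"; } 2>/dev/null) || label=""
  echo "${label:-unavailable}"
}

mac_label
```

Inside a container started by Docker on an AppArmor-enabled host, this typically prints `docker-default (enforce)`. On an SELinux host with Red Hat’s policy, it prints a context containing a container type such as `svirt_lxc_net_t` or `container_t`, depending on the policy version.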

Conclusion

Although container technology offers great opportunities for highly available and scalable IT infrastructures, its impact on security must not be underestimated. However, we also saw that the threats we discussed do not primarily originate from containers themselves. In fact, the most dangerous risks are introduced by the users as well as by the underlying Linux kernel.

Even the most advanced security features cannot protect us if the system administrator explicitly disables them. Of course, one may answer that Docker ships with user namespaces and MAC disabled by default. However, we should not use that as an excuse to absolve ourselves of the responsibility for taking care of our systems. Moreover, Docker has already stated that, for example, user namespaces will be activated by default in future releases.

Then there’s the Linux kernel, whose development team didn’t take security seriously enough for a long time. That is why many security features now have to be added retroactively. The security enhancements discussed above are a step in the right direction, although new functionality usually brings new bugs with it. Besides, there are still many parts of the Linux kernel that are not namespace-aware.

Of course, Docker also comes with features that enlarge the attack surface. We saw that Docker volumes, which give a container access to a host directory, can lead to an escape. Thus, I’m not a big fan of Docker’s mount feature, even though it can be practical, e.g. for aggregating logs or sharing configuration files among multiple containers. In my opinion, it is not only dangerous but also violates the principle of a container being a self-contained process execution unit. What do you think about this?

That’s enough for this blog post. In the third and last part of this series, we will take a short look at a very nice Docker feature called Docker Content Trust. Stay tuned!

Further research questions

- Awareness of container security seems to have increased lately. Will container security ever reach a level where a container can truly be called secure? Is absolute security even possible?

- What kind of security features will container vendors like Docker plan for future releases?

- In January 2016, Docker announced that Unikernel Systems had joined the team. Will this technology go beyond bringing Docker to Windows and Mac OS and take Docker security to a higher level in the future? Can unikernels even help with that?

- Since containers are a key factor driving Linux kernel development, it would make perfect sense for Docker to take part in improving the Linux kernel. When will they start to take part? Have they already?

- Of course, security is becoming more and more important in the software industry, and this also holds true for an ordinary software developer’s daily work. What might a developer’s field of responsibility look like in a few years? What role does the DevOps movement play here?

Sources

Web

- Docker Inc.: Docker security (2016), https://docs.docker.com/engine/security/security/ (last access: August 16, 2016)

- lvh: Don’t expose the Docker socket (not even to a container) (August 23, 2015), https://www.lvh.io/posts/dont-expose-the-docker-socket-not-even-to-a-container.html (last access: August 16, 2016)

Papers

- Grattafiori, Aaron: Understanding and Hardening Linux Containers (NCC Group Whitepaper, Version 1.0, April 20, 2016)
