Tag: Docker

Docker security: Hands-on guide

Securing Docker containers by applying best practices in Dockerfiles and Docker Compose. Introduction: In everyday life, you are very likely to come into contact with containerized applications without even realizing it. At a time when companies continue to move strongly toward the cloud, containers are gaining more and more…

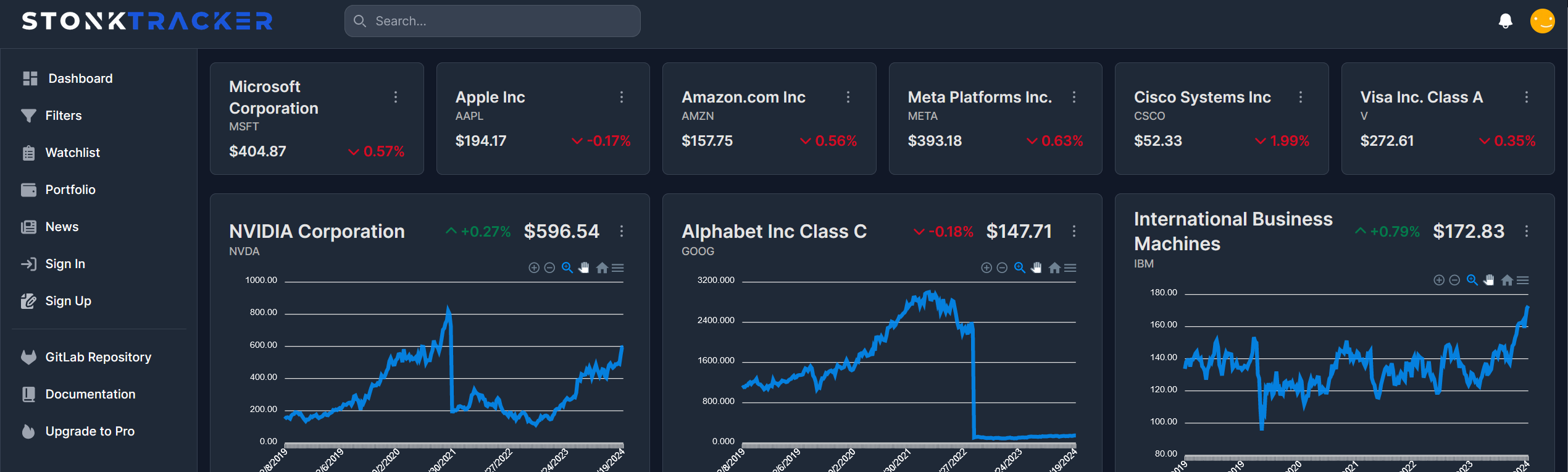

Navigating the Seas of Systems Engineering: A Journey Through Deploying a Stock Web Application in the Cloud

Off to New Shores: Introduction. Cloud computing technology has revolutionized the way companies develop, deploy, and scale applications. In this post, written as part of the lecture “143101a System Engineering und Management”, we will focus on how an existing web application for visualizing and filtering stock metrics on the IBM Cloud infrastructure…

- General, Student Projects, System Architecture, System Designs, System Engineering, Teaching and Learning

Using Keycloak as IAM for our hosting provider service

Discover how Keycloak can revolutionize your IAM strategy and propel your projects to new heights of security and efficiency.

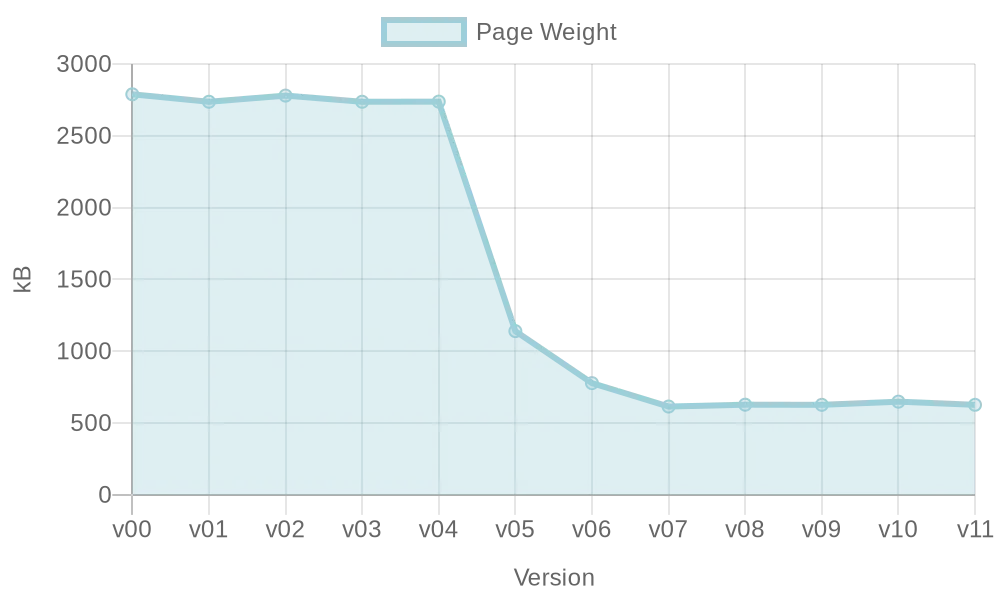

Optimizing a VueJS Website: Reducing Load Times

A website's performance matters to both users and search engines, but it is not always apparent during development. This term paper examines ways to optimize web performance automatically during development with VueJS.

Automate PDF – A Cloud-Driven Workflow Tool with Cloud Functions and Kubernetes

GitLab: You can find the project at https://gitlab.mi.hdm-stuttgart.de/fb089/automatecloud. Wiki: All the information is in our GitLab wiki (https://gitlab.mi.hdm-stuttgart.de/fb089/automatecloud/-/wikis/AutomateCloud). Feel free to try it yourself. Short Description: Automate PDF is a workflow automation tool created in the course “Software Development for Cloud Computing”. The application provides a simple graph editor…

WebAssembly: The New Docker and More?

"If WASM+WASI existed in 2008, we wouldn’t have needed to created Docker. That’s how important it is. Webassembly on the server is the future of computing. A standardized system interface was the missing link. Let’s hope WASI is up to the task!" (Tweet, Solomon Hykes, creator of Docker, 2019) This tweet about WebAssembly (WASM) by the…

Scaling a Basic Chat

Authors: Max Merz (merzmax.de, @MrMaxMerz) and Martin Bock (martin-bock.com, @martbock)

Deploying Random Chat Application on AWS EC2 with Kubernetes

1. Introduction: For the exam in the lecture “Software Development for Cloud Computing”, I want to build a simple random chat application. The idea is based on the well-known chat application Omegle, where people can meet random strangers from around the world and have a one-on-one chat. With Omegle…

Application Updater with Add-on Management

by Mario Beck (mb343) and Felix Ruh (fr067). Introduction: Our goal was to create an application updater that developers can easily integrate into their CI/CD pipeline. To implement it, we used IBM Cloud and a serverless architecture to achieve virtually unlimited scalability. The serverless services we used include the Cloud…

How do you get a web application into the cloud?

by Dominik Ratzel (dr079) and Alischa Fritzsche (af094). For the lecture “Software Development for Cloud Computing”, we set ourselves the goal of exploring new things and gaining experience. We focused on one topic: “How do you get a web application into the cloud?” In doing so, we took a closer look at Continuous Integration /…