In today’s world, video conferencing is getting more and more important – be it for learning, business events or social interaction in general. Most people use one of the big players like Zoom or Microsoft Teams, which both have their share of privacy issues. However, there is an alternative approach: self-hosting open-source software like Jitsi Meet. In this article, we are going to explore the different scaling options for deploying anything from a single Jitsi server to a sharded Kubernetes cluster.

Jitsi is an open-source video conferencing service that you can host on your own. Aside from its source code, Jitsi is available as a Debian/Ubuntu package and as a Docker image. If you want to test Jitsi Meet, you may use the public instance at meet.jit.si.

To understand how a self-hosted Jitsi Meet service can be scaled horizontally, we need to look at the different components that are involved in providing the service first.

Components

A Jitsi Meet service consists of several architectural components that can be scaled independently.

Jitsi Meet Frontend

The actual web interface is rendered by a WebRTC-compatible JavaScript application. It is hosted by a number of simple web servers and connects to videobridges to send and receive audio and video signals.

Jitsi Videobridge (jvb)

The videobridge is a WebRTC-compatible server that routes audio and video streams between the participants of a conference. Jitsi’s videobridge is an XMPP (Extensible Messaging and Presence Protocol) server component.

Jitsi Conference Focus (jicofo)

Jicofo manages media sessions between each of the participants of a conference and the videobridge. It also acts as a load balancer if multiple videobridges are used.

Prosody

Prosody is an XMPP communication server that is used by Jitsi to create multi-user conferences.

Jitsi Gateway to SIP (jigasi)

Jigasi is a server component that allows telephony SIP clients to join a conference. We won’t need this component for the proposed setup, though.

Jitsi Broadcasting Infrastructure (jibri)

Jibri allows for recording and/or streaming conferences by using headless Chrome instances. We won’t need this component for the proposed setup, though.

Now that we know what the different components of a Jitsi Meet service are, we can take a look at the different possible deployment scenarios.

Single Server Setup

The simplest deployment is to run all of Jitsi’s components on a single server. Jitsi’s documentation features an excellent self-hosting guide for Debian/Ubuntu. It is best to use a bare-metal server with dedicated CPU cores and enough RAM. A steady, fast network connection is also essential (1 Gbit/s). However, you will quickly hit the limits of a single Jitsi server if you want to host multiple conferences that each have multiple participants.

Single Jitsi Meet, Multiple Videobridges

The videobridges will typically have the most workload since they distribute the actual video streams. Thus, it makes sense to mainly scale this component. By default, all participants of a conference will use the same videobridge (without Octo). If you want to host many conferences on your Jitsi cluster, you will need a lot of videobridges to process all of the resulting video streams.

Luckily, Jitsi’s architecture allows for scaling videobridges up and down pretty easily. If you have multiple videobridges, two things are very important for facilitating trouble-free conferences. Firstly, once a conference has begun, it is important that all other connecting clients will use the same videobridge (without Octo). Secondly, Jitsi needs to be able to balance the load of multiple conferences between all videobridges.

When a client connects to a conference, Jicofo points it to the videobridge that it should use. To consistently point all participants of a conference to the same videobridge, Jicofo holds state about which conferences run on which videobridge. When a new conference is initiated, Jicofo load-balances between the videobridges and selects one of those that are available.
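The selection logic described above can be sketched in a few lines of Python. This is a toy model and not Jicofo's actual implementation: the class name `BridgeSelector`, the bridge names and the "participants per bridge" load metric are assumptions made purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class BridgeSelector:
    """Toy model of conference-to-videobridge assignment (not Jicofo's code)."""

    # Hypothetical load metric per bridge: number of participants it serves.
    load: dict[str, int]
    # State: which conference currently runs on which bridge.
    assignments: dict[str, str] = field(default_factory=dict)

    def select(self, conference: str) -> str:
        """Return the bridge a joining participant should connect to."""
        bridge = self.assignments.get(conference)
        if bridge is None:
            # New conference: pick the least-loaded available bridge.
            bridge = min(self.load, key=self.load.get)
            self.assignments[conference] = bridge
        # Existing conference: everyone stays on the same bridge.
        self.load[bridge] += 1
        return bridge


selector = BridgeSelector(load={"jvb-0": 0, "jvb-1": 0})
first = selector.select("standup")
second = selector.select("standup")  # same conference, same bridge
other = selector.select("lecture")   # new conference, least-loaded bridge
```

The important part is the `assignments` dictionary: without that state, two participants of the same conference could end up on different bridges.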

Autoscaling of Videobridges

Let’s say you have to serve 1,000 concurrent users mid-day, but only 100 in the evening. Your Jitsi cluster does not need to constantly run 30 videobridges if 28 of them are idle between 5pm and 8am. Especially if your cluster is not running on dedicated hardware but in the cloud, it absolutely makes sense to autoscale the number of running videobridges based on usage to save a significant amount of money on your cloud provider’s next bill.

Unfortunately, Prosody needs an existing XMPP component configuration for every new videobridge that is connected. And if you create a new component configuration, you need to reload the Prosody service – that’s not a good idea in production. This means that you need to predetermine the maximum number of videobridges that can be running at any given time. However, you should probably do that anyway, since Prosody (and Jicofo) cannot handle an infinite number of videobridges.

Most cloud providers offer an equivalent of AWS’s autoscaling groups. You create such an autoscaling group with a minimum and a maximum number of videobridges that may be running simultaneously. In Prosody, you define as many XMPP components as the maximum number you set in the autoscaling group.

Next, you need a monitoring value that can be used to determine whether additional videobridges should be started or running bridges should be stopped. Appropriate parameters can be CPU usage or network traffic of the videobridges. Of course, the exact limits will differ for each setup and use case.
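As a rough illustration of such a scaling rule, here is a minimal Python sketch that steps the number of videobridges up or down by one, based on average CPU usage. The function name and all thresholds and limits (70 %, 30 %, 2 and 30 bridges) are made-up assumptions; real values depend on your setup, and cloud autoscaling groups implement this kind of policy for you.

```python
def desired_bridge_count(
    current: int,
    avg_cpu: float,
    *,
    min_bridges: int = 2,        # assumed lower bound of the autoscaling group
    max_bridges: int = 30,       # assumed upper bound, matches Prosody components
    scale_up_at: float = 0.70,   # assumed threshold, CPU usage as 0.0-1.0
    scale_down_at: float = 0.30, # assumed threshold, CPU usage as 0.0-1.0
) -> int:
    """Return how many videobridges should run after this evaluation."""
    if avg_cpu > scale_up_at:
        target = current + 1
    elif avg_cpu < scale_down_at:
        target = current - 1
    else:
        target = current
    # Never exceed the number of XMPP components preconfigured in Prosody,
    # and never drop below the configured minimum.
    return max(min_bridges, min(max_bridges, target))
```

Keeping a gap between the two thresholds avoids starting and stopping bridges in quick succession when the load hovers around a single limit.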

Sharded Jitsi Meet Instances with Multiple Videobridges

As previously suggested, Prosody and Jicofo cannot handle an unlimited number of connected videobridges or user requests. Additionally, it makes sense to have additional servers for failover and rolling updates. When Prosody and Jicofo need to be scaled, it makes sense to create multiple Jitsi shards that run independently from one another.

The German HPI Schul-Cloud’s open-source Jitsi deployment in Kubernetes, which is available on GitHub, is a great starting point, since its architecture is pretty well documented. They use two shards in their production deployment.

As far as I can tell, Freifunk München’s public Jitsi cluster consists of four shards – though they deploy directly to the machines without the use of Kubernetes.

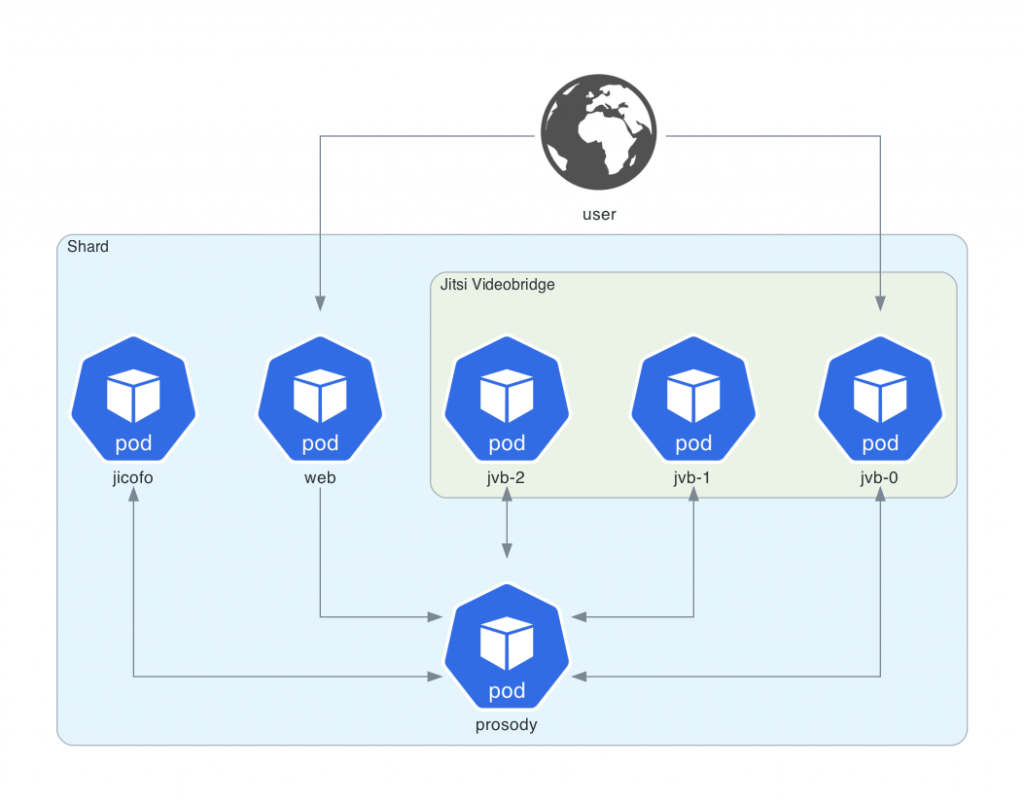

Back to the HPI Schul-Cloud example: Inside a single shard, they deploy one pod each for the Jicofo and Prosody services, as well as a static web server hosting the Jitsi Meet JavaScript client application. The videobridges are managed by a StatefulSet in order to get predictable (incrementing) pod names. Based on the average network traffic to and from the videobridge pods, a horizontal pod autoscaler continuously adjusts the number of running videobridges to save on resources.
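To get a feeling for how a horizontal pod autoscaler arrives at a replica count, the following Python sketch applies the proportional rule documented for Kubernetes: desired replicas = ceil(current replicas × current metric / target metric), clamped to the configured bounds. The traffic figures in the usage lines are invented for illustration and are not taken from the HPI Schul-Cloud deployment; the real autoscaler additionally applies a tolerance and stabilization windows, which are left out here.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_metric: float,  # e.g. average traffic per videobridge pod
    target_metric: float,   # the per-pod value the autoscaler aims for
    min_replicas: int,
    max_replicas: int,
) -> int:
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical numbers: 4 pods, target of 100 Mbit/s average traffic per pod.
busy = hpa_desired_replicas(4, 150.0, 100.0, min_replicas=1, max_replicas=6)
quiet = hpa_desired_replicas(4, 40.0, 100.0, min_replicas=1, max_replicas=6)
```

With these made-up figures, the busy case scales out to six pods and the quiet case scales in to two.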

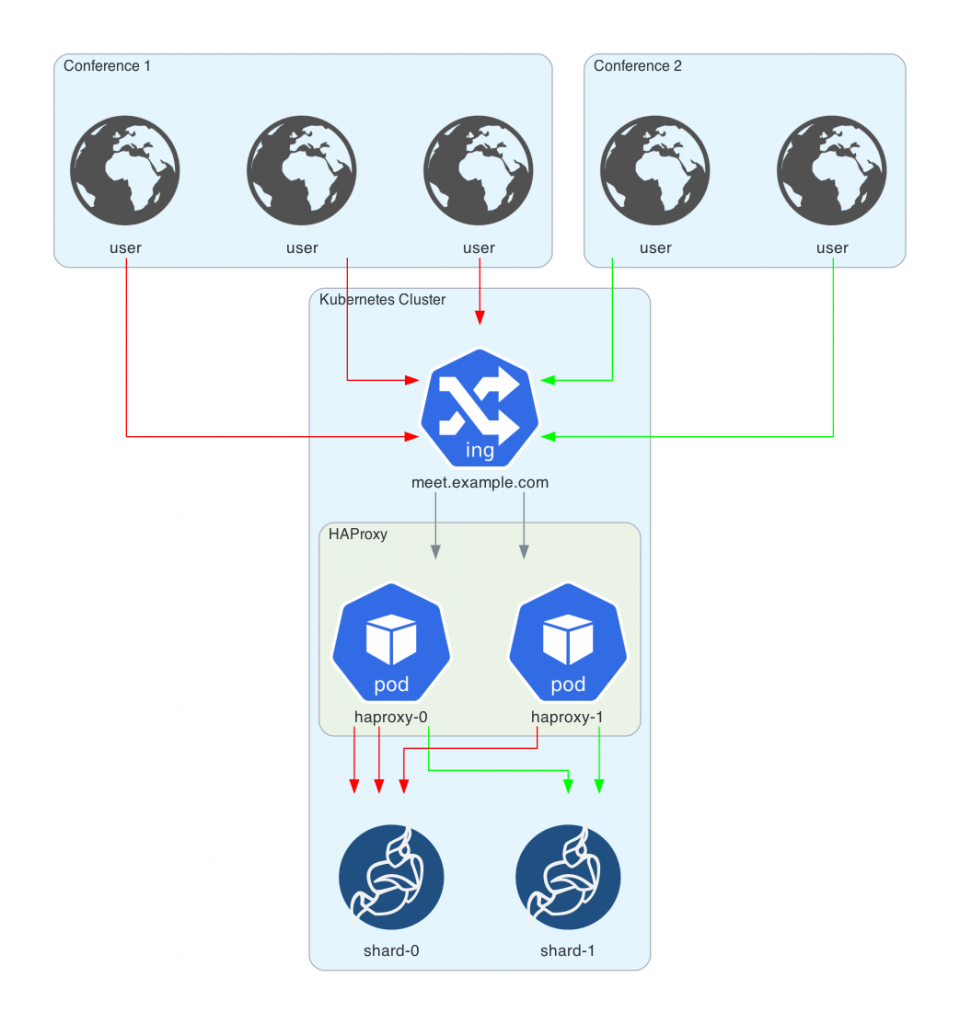

Inside the Kubernetes cluster, an Ingress controller will accept HTTPS requests and terminate their TLS connections. The incoming connections now need to be load-balanced between the shards. Additionally, new participants that want to join a running conference need to be routed to the correct shard.

To satisfy both requirements, a service running multiple instances of HAProxy is used. HAProxy is a load-balancer for TCP and HTTP traffic. New requests are load-balanced between the shards using the round-robin algorithm for a fair load distribution. HAProxy uses DNS service discovery to find all existing shards. The following snippet is an extract of HAProxy’s configuration:

    backend jitsi-meet
        balance roundrobin
        mode http
        option forwardfor
        http-reuse safe
        http-request set-header Room %[urlp(room)]
        acl room_found urlp(room) -m found
        stick-table type string len 128 size 2k expire 1d peers mypeers
        stick on hdr(Room) if room_found
        # _http._tcp.web.jitsi.svc.cluster.local:80 is a SRV DNS record
        # A records don't work here because their order might change between calls and would result in different
        # shard IDs for each peered HAproxy
        server-template shard 0-5 _http._tcp.web.jitsi.svc.cluster.local:80 check resolvers kube-dns init-addr none

The configuration for HAProxy uses stick tables to route all traffic for an existing conference to the correct shard. Stick tables work similarly to sticky sessions. In our example, HAProxy will store the mapping of a conference room URI to a specific shard in a dedicated key-value store that is shared with the other HAProxy instances. Thereby, all clients will be routed to the correct shard when joining a conference.
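The interplay of round-robin balancing and the stick table can be simulated in plain Python. This is only a conceptual sketch of the behaviour described above, not how HAProxy is implemented: the class name `ShardRouter` and the shard names are hypothetical, and entry expiry and peer synchronization are deliberately left out.

```python
from itertools import cycle


class ShardRouter:
    """Conceptual model: round-robin for new rooms, sticky for known rooms."""

    def __init__(self, shards):
        self._round_robin = cycle(shards)
        self._stick_table = {}  # room name -> shard

    def route(self, room):
        if room is None:
            # No room parameter in the request: plain round-robin, no stickiness.
            return next(self._round_robin)
        shard = self._stick_table.get(room)
        if shard is None:
            # First request for this room: pick the next shard and remember it.
            shard = next(self._round_robin)
            self._stick_table[room] = shard
        return shard


router = ShardRouter(["shard0", "shard1"])
a = router.route("physics")    # new room, first shard
b = router.route("chemistry")  # new room, second shard
c = router.route("physics")    # known room, same shard as before
```

In the real setup, the table is shared between the HAProxy instances via the `peers` section, so every instance gives the same answer for a known room.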

Another advantage of sharding is that you can place shards in different geographic regions and employ geo-based routing. This way, users in North America, Europe or Asia can use different shards to optimize network latency.

By splitting your Jitsi cluster into shards and scaling them horizontally, you can successfully serve an enormous number of concurrent video conferences.

The Octo Protocol

There is still a scaling problem when a lot of participants try to join the same conference, though. Up to this point, a single videobridge is responsible for routing all video stream traffic of a conference. This clearly limits the maximum number of participants of one conference.

Additionally, imagine a globe-spanning conference between four people: two in North America and two in Australia. So far, geo-based routing still requires two of the participants to connect to a videobridge on another continent, which has some serious latency disadvantages.

Fortunately, we can improve both situations by using the Octo protocol. Octo routes video streams between videobridge servers, essentially forming a cascade of forwarding servers. On the one hand, this lifts the single-bridge limit on the number of participants in one conference, because client connections are distributed across multiple videobridges. On the other hand, Octo results in lower end-to-end media delay for geographically distributed participants.
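A back-of-the-envelope calculation shows why cascading helps. The Python sketch below counts outgoing video streams per videobridge under strongly simplified assumptions that are mine, not Jitsi's: every participant sends exactly one stream and receives the streams of all other participants (no simulcast layers, no limit on forwarded videos), and every bridge forwards each of its local streams once to every other bridge in the conference.

```python
def outgoing_streams_per_bridge(participants_per_bridge):
    """Count outgoing streams per bridge in a simplified cascading model."""
    total = sum(participants_per_bridge)
    bridges = len(participants_per_bridge)
    result = []
    for local in participants_per_bridge:
        # Each local participant receives everyone else's stream.
        to_clients = local * (total - 1)
        # Each local stream is forwarded once to every other bridge.
        to_bridges = local * (bridges - 1)
        result.append(to_clients + to_bridges)
    return result


# The four-person example: one shared bridge vs. one bridge per continent.
single = outgoing_streams_per_bridge([4])      # [12]
cascaded = outgoing_streams_per_bridge([2, 2]) # [8, 8]

# A larger conference: 100 participants on one bridge vs. spread over four.
large_single = outgoing_streams_per_bridge([100])
large_cascaded = outgoing_streams_per_bridge([25, 25, 25, 25])
```

In this model, the single bridge of the 100-person conference has to send 9,900 streams, while each of the four cascaded bridges sends 2,550. The total amount of traffic goes up slightly because of the inter-bridge forwarding, but the load on each individual bridge drops substantially.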

The downside of Octo is that its traffic is unencrypted. That is why lower-level protocols need to take care of encrypting the inter-bridge traffic. Freifunk München’s Jitsi cluster uses an overlay network with Nebula, VXLAN and a WireGuard VPN to connect the videobridge servers.

Load Testing

When setting up a Jitsi cluster, it makes sense to perform load tests to determine your cluster’s limits before real people start using the service. Jitsi’s developers have thankfully created a load-testing tool that you can use: Jitsi Meet Torture. It simulates conference participants by sending prerecorded audio and video streams.

The results of load tests performed by HPI Schul-Cloud’s team may be an initial reference point – they too are published on GitHub.

Conclusion

Jitsi Meet is free and open-source software that can be scaled pretty easily. It is possible to serve a large number of simultaneous conferences using sharding. However, even though Octo increases the maximum number of participants in a single conference, there are still some limitations in conference size – if nothing else because clients will have a hard time rendering lots of parallel video streams.

Still, Jitsi Meet is a privacy-friendly alternative to commercial offerings like Zoom or Microsoft Teams that does not require participants to install yet another video conferencing app on their machines. Additionally, it can be self-hosted on quite a large scale, in a public or private cloud – or on bare metal.

References

- Jitsi Meet Handbook: Architecture – https://jitsi.github.io/handbook/docs/architecture

- Jitsi Meet Handbook: DevOps Guide (scalable setup) – https://jitsi.github.io/handbook/docs/devops-guide/devops-guide-scalable

- HPI Schul-Cloud Architecture Documentation – https://github.com/hpi-schul-cloud/jitsi-deployment/blob/master/docs/architecture/architecture.md

- Jitsi Blog: New tutorial video: Scaling Jitsi Meet in the Cloud – https://jitsi.org/blog/new-tutorial-video-scaling-jitsi-meet-in-the-cloud/

- Meetrix.IO: Auto Scaling Jitsi Meet on AWS – https://meetrix.io/blog/webrtc/jitsi/jitsi-meet-auto-scaling.html

- Meetrix.IO: How many Users and Conferences can Jitsi support on AWS – https://meetrix.io/blog/webrtc/jitsi/how-many-users-does-jitsi-support.html

- Annika Wickert et al. FFMUC goes wild: Infrastructure recap 2020 #rc3 – https://www.slideshare.net/AnnikaWickert/ffmuc-goes-wild-infrastructure-recap-2020-rc3

- Annika Wickert and Matthias Kesler. FFMUC presents #ffmeet – #virtualUKNOF – https://www.slideshare.net/AnnikaWickert/ffmuc-presents-ffmeet-virtualuknof

- Freifunk München Jitsi Server Setup – https://ffmuc.net/wiki/doku.php?id=knb:meet-server

- Boris Grozev and Emil Ivov. Jitsi Videobridge Performance Evaluation – https://jitsi.org/jitsi-videobridge-performance-evaluation/

- FFMUC Meet Stats: Grafana Dashboard – https://stats.ffmuc.net/d/U6sKqPuZz/meet-stats

- Arjun Nemani. How to integrate and scale Jitsi Video Conferencing – https://github.com/nemani/scalable-jitsi

- Chad Lavoie. Introduction to HAProxy Stick Tables – https://www.haproxy.com/blog/introduction-to-haproxy-stick-tables/

- HPI Schul-Cloud Jitsi Deployment: Loadtest results – https://github.com/hpi-schul-cloud/jitsi-deployment/blob/master/docs/loadtests/loadtestresults.md

- Jitsi Videobridge Docs: Setting up Octo (cascaded bridges) – https://github.com/jitsi/jitsi-videobridge/blob/master/doc/octo.md

- Boris Grozev. Improving Scale and Media Quality with Cascading SFUs – https://webrtchacks.com/sfu-cascading/

- Jitsi Meet Torture – https://github.com/jitsi/jitsi-meet-torture

Author: Martin Bock — martin-bock.com, @martbock
