Tag: Kubernetes

Die Meere der Systemtechnik navigieren: Eine Reise durch die Bereitstellung einer Aktien-Webanwendung in der Cloud

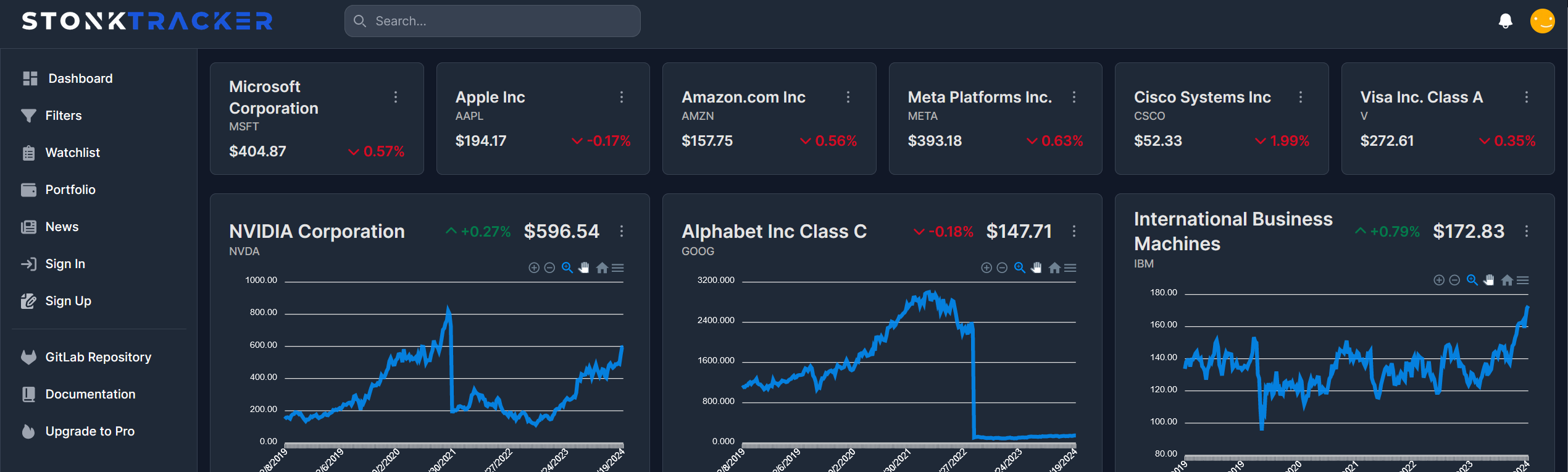

Auf zu neuen Ufern: Einleitung Die Cloud-Computing-Technologie hat die Art und Weise, wie Unternehmen Anwendungen entwickeln, bereitstellen und skalieren, revolutioniert. In diesem Beitrag, der im Rahmen der Vorlesung “143101a System Engineering und Management” entstanden ist, werden wir uns darauf konzentrieren, wie eine bereits bestehende Webanwendung zur Visualisierung und Filterung von Aktienkennzahlen auf der IBM Cloud-Infrastruktur…

Automate PDF – A Cloud-Driven Workflow Tool with Cloud Functions and Kubernetes

Gitlab You can find the Project under this link https://gitlab.mi.hdm-stuttgart.de/fb089/automatecloud Wiki You can find all the Infos in our Gitlab Wiki (https://gitlab.mi.hdm-stuttgart.de/fb089/automatecloud/-/wikis/AutomateCloud). You can even try it urself. Feel free Short Description Automate PDF is a workflow automation tool created in the course “Software Development for Cloud Computing”. The application provides a simple graph editor…

- Cloud Technologies, Design Patterns, Secure Systems, System Architecture, System Designs, System Engineering

High Availability and Reliability in Cloud Computing: Ensuring Seamless Operation Despite the Threat of Black Swan Events

Introduction Nowadays cloud computing has become the backbone of many businesses, offering unparalleled flexibility, scalability and cost-effectiveness. According to O’Reilly’s Cloud Adoption report from 2021, more than 90% of organizations rely on the cloud to run their critical applications and services [1]. High availability and reliability of cloud computing systems has never been more important, as…

Microservices – any good?

As software solutions continue to evolve and grow in size and complexity, the effort required to manage, maintain and update them increases. To address this issue, a modular and manageable approach to software development is required. Microservices architecture provides a solution by breaking down applications into smaller, independent services that can be managed and deployed individually.…

Migration einer REST API in die Cloud

Im Rahmen der Vorlesung “Software Development für Cloud Computing” haben wir uns zum Ziel gesetzt, eine bereits bestehende REST API in die Cloud zu migrieren.

“Himbeer Tarte und harte Fakten”: Im Interview mit Ansible, k3s, Infrastructure as Code und Raspberry Pi

Why so serious? – Ein Artikel von Sarah Schwab und Aliena Leonhard im Rahmen der Vorlesung Systems Engineering and Management. Die Idee, ein fiktives Interview zu erstellen, entstammt daraus komplexe Sachverhalte unterhaltsam und verständlich zu machen. Wir sind heute zu Gast in der Tech-Sendung “Himbeer Tarte und harte Fakten”. Heute geht es unter Anderem um…

Applikationsinfrastruktur einer modernen Web-Anwendung

ein Artikel von Nicolas Wyderka, Niklas Schildhauer, Lucas Crämer und Jannik Smidt Projektbeschreibung In diesem Blogeintrag wird die Entwicklung der Applikation- und Infrastruktur des Studienprojekts sharetopia beschrieben. Als Teil der Vorlesung System Engineering and Management wurde besonders darauf geachtet, die Anwendung nach heutigen Best Practices zu entwickeln und dabei kosteneffizient zu agieren

Deploying Random Chat Application on AWS EC2 with Kubernetes

1. Introduction For the examination of the lecture “Software Development for Cloud Computing”, I want to build a simple Random Chat Application. The idea of this application is based on the famous chat application called Omegle. Omegle is where people can meet random people in the world and can have a one-on-one chat. With Omegle…

KISS, DRY ‘n SOLID — Yet another Kubernetes System built with Ansible and observed with Metrics Server on arm64

This blog post shows how a plain Kubernetes cluster is automatically created and configured on three arm64 devices using an orchestration tool called Ansible. The main focus relies on Ansible; other components that set up and configure the cluster are Docker, Kubernetes, Helm, NGINX, Metrics Server and Kubernetes Dashboard. Individual steps are covered more or…

How to Scale Jitsi Meet

In today’s world, video conferencing is getting more and more important – be it for learning, business events or social interaction in general. Most people use one of the big players like Zoom or Microsoft Teams, which both have their share of privacy issues. However, there is an alternative approach: self-hosting open-source software like Jitsi…