Written by: Immanuel Haag, Christian Müller, Marc Rüttler

The goal of this blog entry is to automate the steps we previously performed manually. In the end, every code change added to the repository should trigger all of these steps automatically, and the new version of the backend should then be available in the cloud.

Step 1: GitLab Repository Setup

The first step was to automate the manual execution of testing, image creation and deployment, mainly to prevent mistakes and to define a standardized procedure. We used the externally hosted service gitlab.com instead of the Medienhochschule Stuttgart GitLab, because pipelines on mi.gitlab may behave strangely when connecting to external IP ranges. We therefore created a new repository at https://gitlab.com/system_eng_cup_app/radcup_backend (the project is private, so please contact us if you are interested). In addition, we decided to use the Git Feature Branch Workflow for development. This results in many commits and several branches (background: changes should be made in several small commits rather than one large one).

Pushing images to the container registry and deploying them to the cluster require various passwords and tokens. These must not appear in plain text in the pipeline definition, so they are stored in the CI/CD settings of the repository and referenced by variable name.
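GitLab exposes the variables defined in the CI/CD settings to each job as environment variables. A minimal sketch of a fail-fast check, assuming a hypothetical helper named require_vars that a job could call before docker login or ibmcloud login; it reports missing variables by name and never prints their values:

```shell
# Hypothetical helper: fail early if any of the named CI/CD variables is unset or empty.
# Only variable names are printed, never their (secret) values.
require_vars() {
  missing=0
  for name in "$@"; do
    case "$name" in
      ''|[0-9]*|*[!A-Za-z0-9_]*)
        echo "invalid variable name: $name" >&2
        missing=1
        continue ;;
    esac
    eval "value=\${$name:-}"   # indirect lookup of the variable called $name
    if [ -z "$value" ]; then
      echo "CI/CD variable $name is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

In a job script this could be used as `require_vars DOCKERH_USR DOCKERH_PWD || exit 1`, so a missing variable produces a clear error instead of a confusing login failure.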

Step 2: Define the architecture

After the repository and pipeline were created, we had to think about a rough architecture for our system. The following picture shows a simplified version of this:

Step 3: GitLab CI/CD Setup

We divided the pipeline into several stages.

They correspond to the steps we previously performed manually. Note: in GitLab, the CI/CD feature has to be enabled in the repository settings.

The pipeline stages

Our pipeline has the following stages:

- imagegen

- test

- build

- deploy

- curlcluster

.gitlab-ci.yml and runners

In short, this file defines how the project should be built by the GitLab Runner. It must be located in the root directory of the GitLab repository.

We found the GitLab documentation helpful.

The quick start guide, which is referenced in the documentation, was also useful.

To get the pipeline running, only this file needs to be present and a GitLab Runner must be configured.

Note that the file does not configure the project as a whole across all branches and commits. It is version-controlled like any other file in the repository, so it can differ from commit to commit and from branch to branch. We decided to keep it identical on all branches.

Interesting notes:

We use the free “Shared Runners”, which are open to everyone. Runners can be configured on the website under “Settings” -> “CI / CD” -> “Runners”, where you can also register your own runners. With this in place, every push to the repository triggers the pipeline.

During development we often experienced delays, so we set up a dedicated runner for longer debugging sessions. See the GitLab documentation for more information on setting up your own runner.

If any command in a job returns a non-zero exit code, the job is marked as failed; a job is only considered passed if every command returns zero.

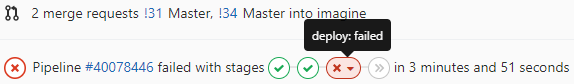

In the picture, one can see that for one commit two jobs passed and one failed. As expected, the failed job caused the whole pipeline to fail. Also note that the subsequent jobs were skipped and not even attempted.
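In GitLab, jobs of the same stage can run in parallel, and by default a stage only starts after all jobs of the previous stage have succeeded. The following is a deliberately simplified, sequential model of the behavior shown in the picture, with one job per stage; run_pipeline is a hypothetical name for illustration, not part of GitLab:

```shell
# Simplified, sequential model (not GitLab Runner code): each argument stands for
# the script of one job, with one job per stage. A job fails if its command exits
# non-zero; once a job has failed, all later jobs are skipped.
run_pipeline() {
  status=passed
  for job in "$@"; do
    if [ "$status" = failed ]; then
      echo "skipped: $job"
    elif sh -c "$job" >/dev/null 2>&1; then
      echo "passed:  $job"
    else
      echo "failed:  $job"
      status=failed
    fi
  done
  [ "$status" = passed ]   # exit status of the whole pipeline
}
```

For example, `run_pipeline true false true` reports the first job as passed, the second as failed and the third as skipped, and returns a non-zero status, just like the pipeline in the picture.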

The following sections describe how each stage is set up in this file.

stages, variables and before_script

```yaml
stages:
  - imagegen
  - test
  - build
  - deploy
  - curlcluster

variables:
  MAJOR: '1'
  MINOR: '1'
  PATCH: '1'
  CLUSTERURL: '159.122.181.248:30304'

before_script:
  - export BUILD_REF=`export TERM=xterm; git log --pretty=format:'%h' -n 1`
  - export IMAGE_TAG=`export TERM=xterm; date +%Y%m%d_%H%M`
  - export BUILD_TAG=${MAJOR}.${MINOR}.${PATCH}-build.${BUILD_REF}
```

Description:

Variables:

The variables section introduces semantic versioning. It lets developers track the current version of the backend across individual releases and is widely considered best practice. Details are available at https://semver.org/.

For example, a developer who fixes a small bug has to increment the PATCH variable by one.
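The increment rules can be sketched as a hypothetical helper, bump_version. Following the Semantic Versioning specification, bumping MINOR resets PATCH to 0, and bumping MAJOR resets both MINOR and PATCH:

```shell
# Hypothetical helper: bump one part of a MAJOR.MINOR.PATCH version following the
# Semantic Versioning rules (lower-order parts are reset to 0).
bump_version() {
  part="$1"; version="$2"
  if ! printf '%s\n' "$version" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'; then
    echo "not a MAJOR.MINOR.PATCH version: $version" >&2
    return 1
  fi
  major="${version%%.*}"   # text before the first dot
  rest="${version#*.}"     # text after the first dot
  minor="${rest%%.*}"
  patch="${rest#*.}"
  case "$part" in
    major) major=$((major + 1)); minor=0; patch=0 ;;
    minor) minor=$((minor + 1)); patch=0 ;;
    patch) patch=$((patch + 1)) ;;
    *) echo "usage: bump_version major|minor|patch VERSION" >&2; return 1 ;;
  esac
  echo "${major}.${minor}.${patch}"
}
```

With the values from the variables section, `bump_version patch 1.1.1` prints 1.1.2, and `bump_version minor 1.1.1` prints 1.2.0.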

Before the script of each job runs, several variables are generated and exported in the before_script section. We added this section later to remove code that had previously been duplicated across jobs.

For tagging the image later in the build stage, BUILD_REF holds the abbreviated hash of the latest commit, and BUILD_TAG combines it with the semantic-versioning variables into a string like this:

Successfully tagged registry.eu-de.bluemix.net/system_engineering_radcup/radcup_backend:1.1.1-build.b5ecee4
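The tag format can be expressed as a small hypothetical helper, build_tag, together with a check against Docker's tag rules (letters, digits, underscores, periods and dashes, not starting with a period or dash, at most 128 characters). Docker tags cannot contain +, so Semantic Versioning's build-metadata form (1.1.1+b5ecee4) could not be used directly as a tag:

```shell
# Hypothetical helper: MAJOR MINOR PATCH BUILD_REF -> e.g. 1.1.1-build.b5ecee4
build_tag() {
  printf '%s.%s.%s-build.%s\n' "$1" "$2" "$3" "$4"
}

# Docker tag rules: first character is a letter, digit or underscore; the rest
# may also contain periods and dashes; at most 128 characters in total.
is_valid_docker_tag() {
  printf '%s\n' "$1" | grep -Eq '^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$'
}
```

`build_tag 1 1 1 b5ecee4` yields the tag from the output above, and `is_valid_docker_tag` accepts it while rejecting 1.1.1+b5ecee4.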

The IMAGE_TAG variable is used in the imagegen stage. It produces a tag such as 20190106_1929, which represents the creation date and time of the immae1/ibmcloudkubectl image. Details are described below.

1. imagegen stage

```yaml
imagegen:
  image: immae1/ibmcloudkubectl # we use this image because git is installed in it
  services:
    - docker:dind
  stage: imagegen
  only:
    - image_gen
  script:
    - docker login --username ${DOCKERH_USR} --password ${DOCKERH_PWD}
    - cd ibmcloudkubectlimage/
    - docker build -t immae1/ibmcloudkubectl:latest .
    - docker push immae1/ibmcloudkubectl:latest
    - docker tag immae1/ibmcloudkubectl:latest immae1/ibmcloudkubectl:${IMAGE_TAG}
    - docker push immae1/ibmcloudkubectl:${IMAGE_TAG}
  environment:
    name: imagegen
```

Description:

The build and deploy stages require some tools to do their work, so we created the imagegen stage. It is responsible for creating a "default tooling image".

The only keyword restricts this stage to the image_gen branch of our repository, which is solely responsible for image generation. Because we have no control over updates to the CLI tools installed in the image, we chose a decoupled approach: the pipeline for the image_gen branch is triggered every Sunday by GitLab's pipeline schedules feature.

Below you can see the Dockerfile, which installs the IBM Cloud CLI tools and kubectl.

```dockerfile
FROM docker:latest

RUN apk add --update --no-cache curl git ca-certificates \
    && apk add --update -t deps curl \
    && apk add --update gettext \
    && curl -fsSL https://clis.ng.bluemix.net/install/linux | sh \
    && ibmcloud plugin install container-registry \
    && ibmcloud plugin install container-service \
    && curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl \
    && chmod +x ./kubectl \
    && mv ./kubectl /usr/local/bin/kubectl \
    && apk del --purge deps \
    && rm /var/cache/apk/*
```

The result of this stage is available online at https://hub.docker.com/r/immae1/ibmcloudkubectl

Finding:

Updating an image on Docker Hub was not easy at first. The trick is to tag the image twice when building it: once with latest and once with the current date. After each subsequent build and push, latest points to the newest image, while the older, date-tagged versions remain available on Docker Hub.
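The double tagging can be sketched as a hypothetical helper, image_refs, which derives both image references from the repository name and a timestamp in the IMAGE_TAG format (YYYYMMDD_HHMM). It only prints the references; the actual docker tag and docker push calls stay as in the imagegen stage:

```shell
# Hypothetical helper: print the two references under which the tooling image is
# pushed, the moving "latest" tag and the immutable date tag.
image_refs() {
  repo="$1"; stamp="$2"
  case "$stamp" in
    [0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]_[0-9][0-9][0-9][0-9]) ;;
    *) echo "timestamp must look like YYYYMMDD_HHMM" >&2; return 1 ;;
  esac
  printf '%s:latest\n%s:%s\n' "$repo" "$repo" "$stamp"
}
```

`image_refs immae1/ibmcloudkubectl 20190106_1929` prints immae1/ibmcloudkubectl:latest and immae1/ibmcloudkubectl:20190106_1929. Each run moves latest, while every date tag keeps pointing at the image it was created with.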

2. test stage

```yaml
test:
  image: node:jessie
  services:
    - name: mongo:4.0
      alias: mongo
  stage: test
  except:
    - image_gen
  variables:
    MONGO_URI: 'mongodb://mongo/radtest'
    NODE_ENV: test
  script:
    - cd src/ && npm install --no-optional && npm test
  environment:
    name: test
```

Description:

The test stage is relatively straightforward. The except keyword excludes this stage from the image_gen branch, so all other branches run the tests. Another finding worth mentioning: with shared GitLab runners, you never know which IP address a runner uses to reach the internet. This causes problems if you want to use an external service that relies on IP whitelisting, such as our externally hosted managed MongoDB. For this reason, the stage starts an additional local MongoDB service container, and the backend test suite connects to it through the MONGO_URI variable.

3. build stage

```yaml
build:
  image: immae1/ibmcloudkubectl:latest # or another arbitrary docker image
  stage: build
  only:
    - master
  services:
    - docker:dind
  script:
    - ibmcloud login -a https://api.eu-de.bluemix.net -u ${BLUEMIX_USR} -p ${BLUEMIX_PWD} -c ${BLUEMIX_ORG} && ibmcloud cr login
    - ibmcloud cr image-list --format "{{ .Created}} {{ if gt .Size 1 }}{{ .Repository }}:{{ .Tag }} {{end}}" | grep "system_engineering_radcup" | sort -n | cut -c12-98 | head -n -1 >> deprecated.txt && chmod +x clean.sh && sh clean.sh deprecated.txt
    - docker build -t registry.eu-de.bluemix.net/system_engineering_radcup/radcup_backend:${BUILD_TAG} .
    - docker push registry.eu-de.bluemix.net/system_engineering_radcup/radcup_backend:${BUILD_TAG}
  environment:
    name: build
```

Description:

This stage is only executed on the master branch (see the only keyword). It builds the image from the Dockerfile of our backend (see the first post). After a successful login, all images stored in the container registry are listed (the command output is formatted with the given Go template), filtered and sorted by creation time, and the names of all images except the newest one are written to a text file. Since the registry holds at most two images, this is normally just the oldest image. These images are then deleted in the next step.
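The selection can be reproduced on plain text, which makes it easier to test without access to the registry. A minimal sketch, assuming each input line looks like "<unix timestamp> <repository>:<tag>" (our assumption about what the Go template prints); select_deprecated is a hypothetical name. Instead of cut -c12-98 it splits on the space, and instead of GNU head -n -1 it uses awk, which also allows keeping more than one image:

```shell
# Hypothetical re-implementation of the build-stage filter, for illustration only.
# Assumed input: one image per line, "<unix-timestamp> <repository>:<tag>".
# Prints all images of the given namespace except the newest $2 (default 1).
select_deprecated() {
  namespace="$1"
  keep="${2:-1}"
  grep "$namespace" |
    sort -n |          # oldest first (numeric timestamp)
    cut -d' ' -f2 |    # drop the timestamp column
    awk -v keep="$keep" '{ lines[NR] = $0 }
      END { for (i = 1; i <= NR - keep; i++) print lines[i] }'   # drop the newest $keep lines
}
```

Its output has the same shape as deprecated.txt: one image per line, ready to be passed to clean.sh.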

Background:

We defined a clean-up process to prevent the container registry from overflowing (keyword: resource management). It ensures that there are never more than two images in the container registry at the same time.

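```bash
#!/bin/bash
# clean.sh: remove every image listed (one per line) in the file passed as $1
while IFS= read -r line; do
  # delete this image from the IBM Cloud container registry
  ibmcloud cr image-rm $line
done < "$1"
```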

After the old image has been deleted, the new image is built, tagged and pushed to the container registry.

4. deploy stage

```yaml
deploy:
  image: immae1/ibmcloudkubectl:latest
  stage: deploy
  only:
    - master
  script:
    - ibmcloud login -a https://api.eu-de.bluemix.net -u ${BLUEMIX_USR} -p ${BLUEMIX_PWD} -c ${BLUEMIX_ORG_FREE} && ibmcloud cs region-set eu-central && ibmcloud cs cluster-config radcup
    - export KUBECONFIG=/root/.bluemix/plugins/container-service/clusters/radcup/kube-config-mil01-radcup.yml
    - cd kubernetes/ && sed -i s/version-radcup/${BUILD_TAG}/g web-controller.yaml && kubectl get rc -o=custom-columns=NAME:.metadata.name > tmp.txt && sed -i '1d' tmp.txt && RC=`cat tmp.txt`
    - kubectl rolling-update ${RC} -f web-controller.yaml
  environment:
    name: deploy
```

Description:

This stage is also only executed on the master branch (see the only keyword). It deploys the previously built and pushed image to the cluster. After a successful login, the placeholder string version-radcup in the file web-controller.yaml is replaced with ${BUILD_TAG}. Then the names of all replication controllers in the cluster are read, the header line is removed, and the remaining name is stored in the variable RC (background: we added this part because the name of the web controller changed during the project). Finally, a rolling update of this replication controller is performed with the modified web-controller.yaml, which updates the image accordingly.
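The text processing in this stage can be tried out locally without a cluster. A minimal sketch with two hypothetical helpers: render_manifest performs the same placeholder substitution as the sed call, and rc_name drops the header line of kubectl's custom-columns output like sed '1d', but additionally refuses to continue unless exactly one replication controller name remains (an assumption that matches our single web controller):

```shell
# Replace the placeholder "version-radcup" with the tag given as $1 (reads stdin).
# Assumes the tag contains no "/" or "&", which are special in a sed replacement.
render_manifest() {
  sed "s/version-radcup/$1/g"
}

# kubectl's custom-columns output starts with a header line ("NAME").
# Drop it and insist on exactly one remaining replication controller name.
rc_name() {
  names="$(sed '1d')"
  count="$(printf '%s\n' "$names" | grep -c . || true)"   # number of non-empty lines
  if [ "$count" -ne 1 ]; then
    echo "expected exactly one replication controller, found $count" >&2
    return 1
  fi
  printf '%s\n' "$names"
}
```

In the job this could replace the temporary file: `RC="$(kubectl get rc -o=custom-columns=NAME:.metadata.name | rc_name)" || exit 1`. Failing early here avoids calling kubectl rolling-update with an empty or multi-line controller name.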

Rolling update:

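Rolling updates allow updates to take place with zero downtime by incrementally replacing Pod instances with new ones. The Kubernetes documentation describes this for Deployments; kubectl rolling-update applies the same idea to the ReplicationController used here.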

Example output:

```
Created web-controller-1.1.1-build
Scaling up web-controller-1.1.1-build from 0 to 2, scaling down web-controller from 2 to 0 (keep 2 pods available, don't exceed 3 pods)
Scaling web-controller-1.1.1-build up to 1
Scaling web-controller down to 1
Scaling web-controller-1.1.1-build up to 2
Scaling web-controller down to 0
Update succeeded. Deleting old controller: web-controller
Renaming web-controller-1.1.1-build to web-controller
```
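The line "keep 2 pods available, don't exceed 3 pods" describes the two limits that drive the sequence above. The following sketch is a simplified model, not kubectl's actual implementation: it assumes new pods become available immediately and alternates between scaling the new controller up and the old one down while respecting both limits. For two replicas it reproduces the four "Scaling ..." lines of the example output:

```shell
# Hypothetical model of the scaling sequence (not kubectl's implementation).
# Limits: at least $1 pods available, at most $1 + 1 pods in total.
# New pods are assumed to become available immediately.
simulate_rolling_update() {
  replicas="$1"; new_rc="$2"; old_rc="$3"
  new=0; old="$replicas"
  while [ "$new" -lt "$replicas" ] || [ "$old" -gt 0 ]; do
    # scale up the new controller if the total stays within replicas + 1
    if [ "$new" -lt "$replicas" ] && [ $((new + old + 1)) -le $((replicas + 1)) ]; then
      new=$((new + 1))
      echo "Scaling $new_rc up to $new"
    fi
    # scale down the old controller if at least $replicas pods remain available
    if [ "$old" -gt 0 ] && [ $((new + old - 1)) -ge "$replicas" ]; then
      old=$((old - 1))
      echo "Scaling $old_rc down to $old"
    fi
  done
}
```

Running `simulate_rolling_update 2 web-controller-1.1.1-build web-controller` shows why the total never exceeds three pods: each new pod is added only once an old one can be removed right after it without dropping below two available pods.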

5. curlcluster stage

```yaml
curlcluster:
  stage: curlcluster
  only:
    - master
  script:
    - result="$(curl -m2 http://${CLUSTERURL}/api)"
    - should='{"message":"this will be a beerpong app"}'
    - if [[ "$result" != "$should" ]] ; then exit 1; fi
    - resultgame="$(curl -m2 http://${CLUSTERURL}/api/games/5c2904bf172dbc0023ffdaea)"
    - shouldgame=`cat gameresult.txt `
    - if [[ "$resultgame" != "$shouldgame" ]] ; then exit 1; fi
  environment:
    name: curlcluster
```

Description:

This stage is also only executed on the master branch (see the only keyword) and is the final endpoint test. The CLI tool curl sends an HTTP request to the freshly updated cluster. If the API is not available or returns an unexpected response, the script exits with code 1 and the stage fails. We decided to also test whether the API is connected to the MongoDB cluster: the second curl request fetches a game by its unique ID. This game is always present in the MongoDB database, so this test does not fail as long as the API can reach the database.
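Both comparisons follow the same pattern, which could be factored into a helper. A hedged sketch with a hypothetical assert_response function; it uses the POSIX [ test instead of [[ so it also runs under sh, and prints the unexpected response to make failed jobs easier to debug:

```shell
# Hypothetical helper: compare an HTTP response body with the expected value and
# return 1 on mismatch, mirroring the [[ ... ]] checks of the curlcluster stage.
assert_response() {
  name="$1"; actual="$2"; expected="$3"
  if [ "$actual" != "$expected" ]; then
    printf '%s: unexpected response: %s\n' "$name" "$actual" >&2
    return 1
  fi
  printf '%s: OK\n' "$name"
}

# Possible use in the job script (CLUSTERURL as defined in the variables section):
# assert_response api "$(curl -s -m2 "http://${CLUSTERURL}/api")" \
#   '{"message":"this will be a beerpong app"}' || exit 1
```

An unreachable API yields an empty body, which never equals the expected JSON, so a timeout fails the stage just like a wrong response.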

Step 4: The whole architecture

With the CI/CD pipeline described above in place, we can now present the complete architecture of our system, shown in the following picture:

Step 5: Research questions and further project steps

- Scan images for vulnerabilities and challenge application logic (API vulnerability)

- Eliminate pipeline warnings (rollout for rolling update, credential manager for docker login in imagegen stage)

- Change the ReplicationController to a ReplicaSet (the newer successor of the ReplicationController)

- Pursue endpoint testing and load testing further

- Set up hooks, e.g. for Slack

- Apply the Twelve-Factor App principles to the project

- Further improve and simplify the pipeline

- Automate npm-check
