In recent months, nearly everybody has been talking about Kubernetes. It’s incredible! This semester, Stuttgart Media University even held a training course on this topic. For DevOps engineers or “cloud computing specialists”, mastering K

This blog post is not a tutorial and won’t explain what Kubernetes is; it is written for readers who already have some experience with Kubernetes. It gives an overview of the current options for installing a K8s cluster and of the most important installation types.

In addition, this article takes a short excursion into configuration management and infrastructure as code, since some K8s cluster installation tools rely on these concepts. If you are a K8s beginner, I recommend this short video, which gives a good explanation:

The official documentation is also very helpful. If you want to try Kubernetes for the first time, I recommend Play with Kubernetes and Play with Kubernetes Classroom: these handy platforms teach you your first CLI steps with Kubernetes.

Now I’ll introduce some Kubernetes installation variants. As a guide, I used the official documentation and my own experience as a DevOps engineer. Be aware that I can’t cover every solution out there!

1. Local Installations on Your Device

This installation type runs only on your local device, let’s say your laptop. If there is more than one cluster node (this depends on the tool you use), the nodes are not physically separated; they are only logically separated units within Docker containers. I recommend this type of installation if you want to try Kubernetes more extensively than the Play with Kubernetes platform allows. If you are going to work on K8s projects in the future, such an installation can be very helpful.

The table below gives an overview by operating system, number of nodes and complexity.

| Name | OS | Nodes | K8s version | Features | Complexity & Notes |
|---|---|---|---|---|---|
| Docker Desktop | Windows, macOS | 1 | v1.10.11 | Configurable through the Docker GUI | Very easy to install, but it’s a minimalistic installation, so you have to install additional components like the dashboard on your own |
| Minikube | Linux, macOS, Windows | 1 | v1.13.4 | Dashboard, multi-hypervisor support (KVM, HyperKit, Hyper-V, VMware, VirtualBox & bare-metal Docker), add-ons, GPU | Very easy to set up, but a bit more involved than the Docker Desktop installation |
| Kubeadm-dind | Linux, macOS (no IPv6 support) | N | v1.13.x | No additional features installed by default (if you want them, you have to add and maintain them yourself) | Requires the most experience, but multi-node setups are supported |
| microk8s | Linux | 1 | v1.11.3 | Dashboard, no hypervisor needed, metrics via Grafana, Istio, add-ons, GPU | Uses a snap environment, which is not available on all distros, but in return gives good isolation from your other installations. Relatively new, so not as mature as the other solutions. The additional features are a charm |

Table 1: Local installation types

So it depends on what you are looking for. Most of us already have Docker Desktop installed; in my opinion, its built-in Kubernetes feature is the fastest way to get started with a local K8s installation. If you need additional features like the K8s Dashboard, use Minikube.

You have a Linux OS installed on your local machine? Then I recommend Minikube. If you use an

If you are experienced and looking for a multi-node cluster on macOS or Linux, try installing the cluster manually: take, for example, five Docker containers, install K8s with kubeadm and join them together. A faster and more comfortable option is the Kubeadm-dind project, a collection of Bash scripts that simplify the deployment steps.

There are some other local installation solutions like Minishift and IBM Cloud Private-CE. These projects install more than Kubernetes and feel like bloatware if you only want to test some Kubernetes-specific tasks! In that case, use one of the tools mentioned above.

2. Cloud Installation Solutions

Ready-made hosted services, such as an EKS cluster provided and preconfigured by Amazon, are not considered here. As already mentioned, this article is intended to show how installation and configuration can be made easier. So this section won’t go into how to build a backbone network by hand by booting several virtual machines, setting up a network and installing a cluster from scratch. If you want to do this instead, have a look at this link: https://kubernetes.io/docs/setup/scratch/. The focus lies on running and demonstrating reproducible and automatable solutions. It might even be worth integrating such a setup into CI/CD pipelines so that developers get their own environments, including clusters, as soon as something is checked into the VCS. That way, developers can work even closer to the production environment.

Tools like Ansible or Terraform deliver such automated and reproducible results. Hence a short excursion:

Software Configuration Management vs. Infrastructure as Code (IaC)

“Configuration management (CM) is a governance and systems engineering process for ensuring consistency among physical and logical assets in an operational environment. The configuration management process seeks to identify and track individual configuration items (CIs), documenting functional capabilities and interdependencies. Administrators, technicians and software developers can use configuration management tools to verify the effect a change to one configuration item has on other systems.”

https://searchitoperations.techtarget.com/definition/configuration-management-CM

VS.

“Infrastructure as code, also referred to as IaC, is a type of IT setup wherein developers or operations teams automatically manage and provision the technology stack for an application through software, rather than using a manual process to configure discrete hardware devices and operating systems. Infrastructure as code is sometimes referred to as programmable or software-defined infrastructure.”

https://searchitoperations.techtarget.com/definition/Infrastructure-as-Code-IAC

Distinguishing between the two definitions is therefore not easy, and there is a lot of confusion about it on the Internet. The term “software-defined infrastructure” also appears out there; let’s ignore it to keep the distinction simple.

After my research, this is how I understand the two concepts and how I will use them in this article:

Infrastructure as code is the creation (build) of infrastructure components from code definitions, such as descriptions of virtual machines: how many resources a VM gets at startup, which networks it has access to, which OS it runs, etc. IaC therefore always refers to a hardware-close approach, while configuration management refers more to the software perspective. IaC tools are also called “orchestrators”.

Software configuration management tools help install and configure software in a desired state. For example, if you want to install a specific version of Nginx with some virtual hosts, you can do this with Ansible.

Conclusion:

First you define, for example, a virtual machine through IaC; then you provision the desired state of that virtual machine with a software configuration management tool.

However, configuration management tools can also define infrastructure, so the distinction is very subtle. In my opinion, there are terminology issues, and it depends on how you use the tools listed in Table 2.

| Tool | Chef | Puppet | Ansible | SaltStack | CloudFormation | Terraform |
|---|---|---|---|---|---|---|
| Type | Config Mgmt | Config Mgmt | Config Mgmt | Config Mgmt | Orchestration (IaC) | Orchestration (IaC) |
| Cloud | All | All | All | All | AWS | All |
| Infrastructure | Mutable | Mutable | Mutable | Mutable | Immutable | Immutable |
| Language | Procedural | Declarative | Procedural | Declarative | Declarative | Declarative |
| VM provisioning, storage & networking | Partial | Partial | Partial | Partial | Yes | Yes |

Table 2: Config Mgmt vs. Orchestration Tools Overview

Combined and adapted from these tables: https://www.ibm.com/blogs/bluemix/2018/11/chef-ansible-puppet-terraform/ and https://wilsonmar.github.io/terraform/

Type: the distinction described above

Cloud: Which cloud platforms are supported

Infrastructure: Mutable means that the configuration of a running server can be changed in place. Every deployed server therefore changes over time, which can lead to unwanted configuration drift between servers. Immutable works like Docker containers: each deployment creates a new server, and the old one is destroyed!

Language: Procedural means, in this context, that you define a step-by-step flow describing how the desired end state is to be achieved. Declarative means that you describe only the desired end state; the tool (IaC) keeps track of the current state and works towards the desired final state on its own. Here you can read a detailed example regarding this.

VM provisioning, storage & networking: whether the tool can manage these features.
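To make the procedural/declarative distinction more concrete, here is a toy model in Python. It is only a sketch under simplifying assumptions, not how Terraform, Ansible or any other tool in Table 2 works internally: the infrastructure state is a plain dict mapping hypothetical resource names to instance types, and the helper names (add_web_vm, run_procedural, plan, apply) are made up for illustration.

```python
from typing import Callable, Dict, List

State = Dict[str, str]  # hypothetical: resource name -> instance type


# Procedural style: a fixed list of steps that is executed as-is.
def add_web_vm(state: Dict[str, List[str]]) -> None:
    """One step: 'create a t2.micro VM', without checking what already exists."""
    state.setdefault("vms", []).append("t2.micro")


def run_procedural(state: Dict[str, List[str]], steps: List[Callable]) -> None:
    for step in steps:
        step(state)


# Declarative style: describe the desired end state; the tool derives the steps.
def plan(current: State, desired: State) -> Dict[str, List[str]]:
    """Compare current and desired state (similar in spirit to 'terraform plan')."""
    return {
        "add": sorted(desired.keys() - current.keys()),
        "change": sorted(k for k in desired.keys() & current.keys() if desired[k] != current[k]),
        "destroy": sorted(current.keys() - desired.keys()),
    }


def apply(current: State, desired: State) -> State:
    """Carry out only the actions from the plan and return the new state."""
    actions = plan(current, desired)
    new_state = {k: v for k, v in current.items() if k not in actions["destroy"]}
    for name in actions["add"] + actions["change"]:
        new_state[name] = desired[name]
    return new_state


# Running the procedure twice creates two VMs ...
proc_state: Dict[str, List[str]] = {}
run_procedural(proc_state, [add_web_vm])
run_procedural(proc_state, [add_web_vm])

# ... while applying the same desired state twice converges to one VM.
desired: State = {"web": "t2.micro"}
first_plan = plan({}, desired)
state = apply({}, desired)
second_plan = plan(state, desired)

print(proc_state)   # {'vms': ['t2.micro', 't2.micro']}
print(first_plan)   # {'add': ['web'], 'change': [], 'destroy': []}
print(second_plan)  # {'add': [], 'change': [], 'destroy': []}
```

The second plan is empty because the declarative side compares against the known state before doing anything. Terraform reports the same kind of diff in its “Plan: 1 to add, 0 to change, 0 to destroy.” line in the example below.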

Here is a short example of how you can define an AWS EC2 instance with Terraform. After this step, you can run an Ansible playbook to provision this machine. Please note that this is not a full tutorial on Terraform or Ansible and their functionality; anyone who is interested can have a look at their documentation.

Terraform Example on AWS EC2 Instance

All files are located in my example repository on the HDM GitLab (https://gitlab.mi.hdm-stuttgart.de/ih038/uls). If you need access as an external user, please contact me: ih038(AT-@))

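1. Install Terraform.

2. Create a Terraform configuration for your first build. First create the AWS configuration directory and add your AWS credentials there (https://docs.aws.amazon.com/cli/latest/userguide/cli-configure-files.html):

```
$ mkdir ~/.aws
$ touch ~/.aws/config
```

Add something like this to ~/.aws/config:

```ini
[default]
region=us-east-1
output=json
```

Then create example.tf in your project directory:

```
$ touch example.tf
```

and add this content:

```hcl
provider "aws" {
  access_key = "ACCESS_KEY_HERE-PLS-CHANGE-OR-DELETE-ME"
  secret_key = "SECRET_KEY_HERE"
  region     = "us-east-1"
}

# If you delete the access keys, Terraform looks for them in ~/.aws/credentials!

resource "aws_instance" "example" {
  ami           = "ami-2757f631" # Ubuntu Trusty; adjust for other regions/images
  instance_type = "t2.micro"     # adjust for other instance types
}
```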

resource "aws_instance" "example" {

ami = "ami-2757f631" #adjust for other regions/images this is a ubuntu trusty

instance_type = "t2.micro" #adjust for other instance types

}

3. Create an AWS IAM user for Terraform and save its credentials in the ~/.aws/credentials file. Please keep this file top secret!

4. Run terraform init:

```
$ terraform init

Initializing provider plugins...
- Checking for available provider plugins on https://releases.hashicorp.com...
- Downloading plugin for provider "aws" (2.1.0)...
…
Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure
…
```

You can see the newly created .terraform folder:

```
$ pwd
/Users/immi/repo/.terraform/plugins/darwin_amd64
# as you can see, I got the needed AWS plugins for darwin (darwin is macOS)
$ tree
.
├── lock.json
└── terraform-provider-aws_v2.1.0_x4
```

5. Run terraform apply to see what Terraform will create for you:

```
$ terraform apply

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  + aws_instance.example
      id:  <computed>
      ami: "ami-2757f631"
      arn: <computed>
      …

# "computed" means that these values are generated by AWS after the resource has been created

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

…
aws_instance.example: Still creating... (10s elapsed)
aws_instance.example: Still creating... (20s elapsed)
aws_instance.example: Creation complete after 28s (ID: i-0a91a240db8555f5f)

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.
```

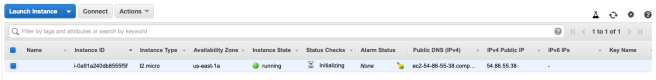

After logging into the AWS console you can see the newly created VM running:

Or you can run terraform show to confirm that everything worked well.

The VM can be terminated by running terraform destroy.
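Because a missing or incomplete ~/.aws/credentials file (step 3) is an easy mistake to make, a quick pre-flight check before terraform apply can save a failed run. The following Python sketch is my own assumption, not part of Terraform: it only checks that a profile (by default “default”) contains the two standard keys aws_access_key_id and aws_secret_access_key, and the helper names are hypothetical.

```python
import configparser
from pathlib import Path
from typing import List

# The two keys a profile in the shared AWS credentials file normally contains.
REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")


def missing_credential_keys(text: str, profile: str = "default") -> List[str]:
    """Return the required keys that are missing or empty for `profile`."""
    # interpolation=None: secret keys are taken literally, '%' is not special
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    return [key for key in REQUIRED_KEYS if not parser.get(profile, key, fallback="").strip()]


def check_credentials_file(path: Path = Path.home() / ".aws" / "credentials") -> List[str]:
    """Hypothetical helper: run the check against the real file, if it exists."""
    if not path.exists():
        return list(REQUIRED_KEYS)
    return missing_credential_keys(path.read_text())
```

An empty list from check_credentials_file() means both keys are present; it does not prove that the keys are valid, only that Terraform has something to find.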

Because I wanted to show you a complete setup, I built the solution described below.

I used the official example from this source:

https://github.com/terraform-providers/terraform-provider-aws/tree/master/examples/eip

Note: you have to create a private/public key pair in AWS and add its name to the key_name variable in variables.tf.

This setup creates an EC2 t2.micro instance. After this, a local provisioner script installs nginx. But I wanted more!

My final goal was to use Ansible as the provisioner to show you an extended setup. So instead of installing NGINX locally on the VM, I installed Docker and then pulled the NGINX image. After adjusting the setup, the final files look like this:

```
$ tree
.
├── README.md
├── main.tf
├── outputs.tf
├── provision.yml
├── terraform.tfstate
├── terraform.tfstate.backup
└── variables.tf
```

Terraform Files:

variables.tf

```hcl
variable "aws_region" {
  description = "The AWS region to create things in."
  default     = "us-east-1"
}

variable "aws_amis" {
  default = {
    "us-east-1" = "ami-07e101c2aebc37691"
    "us-west-2" = "ami-7f675e4f"
  }
}

variable "key_name" {
  description = "Name of the SSH keypair to use in AWS."
  default     = "please-ask-politely"
}
```

main.tf

```hcl
# Specify the provider and access details
provider "aws" {
  region = "${var.aws_region}"
}

resource "aws_eip" "default" {
  instance = "${aws_instance.web.id}"
  vpc      = true
}

# Our default security group to access
# the instances over SSH and HTTP
resource "aws_security_group" "default" {
  name        = "eip_example"
  description = "Used in the terraform"

  # SSH access from anywhere
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # HTTP access from anywhere
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # outbound internet access
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_instance" "web" {
  instance_type = "t2.micro"

  # Look up the correct AMI based on the region we specified
  ami = "${lookup(var.aws_amis, var.aws_region)}"

  # The name of the SSH keypair you've created and downloaded
  # from the AWS console.
  #
  # https://console.aws.amazon.com/ec2/v2/home?region=us-west-2#KeyPairs:
  #
  key_name = "${var.key_name}"

  # Our security group to allow HTTP and SSH access
  security_groups = ["${aws_security_group.default.name}"]

  # Here you can see how Terraform is able to provision an instance
  provisioner "remote-exec" {
    # Wait for cloud-init, then install Python for Ansible
    inline = ["while [ ! -f /var/lib/cloud/instance/boot-finished ]; do echo 'Waiting for cloud-init...'; sleep 1; done; sudo rm /var/lib/apt/lists/* ; sudo apt-get update ; sudo apt-get install -y python python-pip "]

    connection {
      type = "ssh"
      user = "ubuntu"
    }
  }

  # Here we run the provisioning from the local disk against the EC2 instance with the help of Ansible
  provisioner "local-exec" {
    # We skip host key checking because we don't know the assigned IP in advance
    command = "ANSIBLE_HOST_KEY_CHECKING=False ansible-playbook -u ubuntu -i '${self.public_ip},' -T 300 provision.yml"
  }

  # Instance tags: name the VM
  tags = {
    Name = "eip-docker-example"
  }
}
```

The other files are generated by the terraform init step and during execution.

Ansible Files

I created a small playbook (provision.yml) for simplicity. In a real-world project, you would split the definitions into separate files.

```yaml
- hosts: all
  become: true # execute all steps with sudo
  tasks:
    - name: install python stuff and docker py package
      pip:
        name: ['urllib3', 'pyOpenSSL', 'ndg-httpsclient', 'pyasn1', 'docker']

    - name: Add Docker GPG key
      apt_key:
        url: https://download.docker.com/linux/ubuntu/gpg

    - name: Add Docker APT repository
      apt_repository:
        repo: "deb [arch=amd64] https://download.docker.com/linux/ubuntu {{ ansible_distribution_release }} stable"

    - name: Install list of packages
      apt:
        name: ['apt-transport-https', 'ca-certificates', 'curl', 'software-properties-common', 'docker-ce', 'python3', 'python3-pip']
        state: present
        update_cache: yes

    - name: Build Docker Container
      docker_container:
        name: web
        image: nginx:latest
        state: started
        ports:
          - "0.0.0.0:80:80"
```

Encountered problems

This section lists the errors I encountered and how I fixed them.

Terraform specific problems:

The terraform apply step failed, and with it the creation of the VM.

Error:

“aws_instance (remote-exec): E: Package ‘python’ has no installation candidate”

Solution:

To fix this, add this one-liner before running apt-get update:

```shell
while [ ! -f /var/lib/cloud/instance/boot-finished ]; do echo 'Waiting for cloud-init...'; sleep 1; done;
```

Explanation:

This problem occurred because the cloud-init process had not finished yet, which blocked the subsequent apt-get steps.
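The one-liner polls for the marker file /var/lib/cloud/instance/boot-finished, which cloud-init writes when it has finished, and it waits forever if that never happens. If you prefer a timeout, the same polling idea could look like this in Python. This is a sketch assuming the script runs on the instance itself; wait_for_file is a hypothetical helper, not part of cloud-init.

```python
import os
import time

# Marker file the shell one-liner above waits for.
BOOT_FINISHED = "/var/lib/cloud/instance/boot-finished"


def wait_for_file(path: str = BOOT_FINISHED, timeout: float = 300.0, interval: float = 1.0) -> bool:
    """Poll until `path` exists. Return True if it appears, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while not os.path.exists(path):
        if time.monotonic() >= deadline:
            return False
        print("Waiting for cloud-init...")
        time.sleep(interval)
    return True
```

Returning False instead of looping forever lets the provisioning step fail with a clear result rather than hang until someone notices.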

Ansible specific problems:

1. Running an Ansible Playbook from macOS to the remote Host for Docker-CE installation does not work with

Error:

FAILED! => {“changed”: false, “msg”: “Failed to validate the SSL certificate for download.docker.com:443. Make sure your managed systems have a valid CA certificate installed.

Solution:

Remove the existing Ansible installation, then install Python 3 and Ansible through Homebrew.

Explanation:

It seems that Ansible was executed with Python 2, which causes this strange error.

2. Using Ansible’s Docker module to manage containers within a playbook fails with the following error, which asks for the “docker” or “docker-py” Python package to be installed through pip although it was already installed.

Error:

FAILED! => {“changed”: false, “msg”: “Failed to import docker or docker-py – cannot import name DependencyWarning. Try `pip install docker` or `pip install docker-py` (Python 2.6)”}

This comes in combination with:

FAILED! => {“changed”: false, “msg”: “Ansible requires a minimum of Python2 version 2.6 or Python3 version 3.5. Current version: 3.4.3 [GCC 4.8.4]”}

Solution:

Use a distribution/image with Python 3.5 or newer.

Explanation:

Again, it seems that Ansible was executed with Python 2, which causes a strange error. The Ubuntu 14.04 LTS AMI I deployed comes with Python 3.4.3 (installed through apt-get) by default, but Ansible requires Python 3.5 or newer! After deploying an Ubuntu 18.04 LTS AMI instead, the Ansible provisioning succeeded, because Ubuntu 18.04 ships with Python 3.6.7.

So all of these errors resulted from Python version drift! To avoid them, always remember: if you run Ansible with Python 2, you shouldn’t try to provision a remote host on which only Python 3 is installed, and vice versa!
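The version requirement from the error message above (Python 2 version 2.6 or Python 3 version 3.5 at minimum) and the rule of thumb about not mixing Python 2 and 3 can be expressed as a small check. This is a sketch: the minimums are copied from the quoted error message and may differ for other Ansible releases, and the helper names are hypothetical.

```python
from typing import Tuple

Version = Tuple[int, ...]

# Minimum versions quoted in the Ansible error message above (may change between releases).
ANSIBLE_MINIMUMS = {2: (2, 6), 3: (3, 5)}


def parse_version(text: str) -> Version:
    """Turn '3.4.3' into (3, 4, 3)."""
    return tuple(int(part) for part in text.strip().split("."))


def meets_ansible_minimum(version: Version) -> bool:
    """True if this Python version satisfies the minimum for its major version."""
    minimum = ANSIBLE_MINIMUMS.get(version[0])
    return minimum is not None and version[:2] >= minimum


def same_major(local: Version, remote: Version) -> bool:
    """The article's rule of thumb: both sides use Python 2, or both use Python 3."""
    return local[0] == remote[0]


# Versions mentioned in this article
trusty = parse_version("3.4.3")  # Ubuntu 14.04 LTS
bionic = parse_version("3.6.7")  # Ubuntu 18.04 LTS
print(meets_ansible_minimum(trusty))  # False
print(meets_ansible_minimum(bionic))  # True
```

On the control machine, tuple(sys.version_info[:3]) gives the local version to compare against the remote one.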

Note: If the Ansible provisioning goes wrong, Terraform does not clearly report it. It would be nice to find a workaround; otherwise you have to be aware of this and check the CLI output carefully.
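One possible workaround is to scan the ansible-playbook output yourself. At the end of a run, Ansible prints a PLAY RECAP section with one line per host and counters such as ok, changed, unreachable and failed. The Python sketch below picks out hosts with failed or unreachable tasks; the sample output is made up for illustration (with documentation IP addresses), and failed_hosts is a hypothetical helper.

```python
import re
from typing import Dict

# One recap line per host, e.g. "host : ok=3 changed=2 unreachable=0 failed=1"
RECAP_LINE = re.compile(r"^(?P<host>\S+)\s*:\s*(?P<counters>(?:\w+=\d+\s*)+)$")


def failed_hosts(output: str) -> Dict[str, Dict[str, int]]:
    """Return {host: counters} for every host whose PLAY RECAP shows failed or unreachable tasks."""
    problems: Dict[str, Dict[str, int]] = {}
    in_recap = False
    for line in output.splitlines():
        if line.startswith("PLAY RECAP"):
            in_recap = True  # only lines after this header are host summaries
            continue
        match = RECAP_LINE.match(line.strip()) if in_recap else None
        if not match:
            continue
        counters = {key: int(value) for key, value in re.findall(r"(\w+)=(\d+)", match.group("counters"))}
        if counters.get("failed", 0) or counters.get("unreachable", 0):
            problems[match.group("host")] = counters
    return problems


# Made-up sample output for illustration (documentation IP addresses).
SAMPLE_OUTPUT = """\
PLAY RECAP *********************************************************************
203.0.113.10               : ok=3    changed=2    unreachable=0    failed=1
203.0.113.11               : ok=6    changed=4    unreachable=0    failed=0
"""

print(failed_hosts(SAMPLE_OUTPUT))
```

You could, for example, save the output of the local-exec command to a file and run such a check on it after terraform apply.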

And now? What about the K8S-Cluster installation?

Given the nature of cloud installations, the clusters set up here are always multi-node clusters. The tools listed here can be seen as wrappers over kubeadm; they make the installation easy and offer the flexibility of supporting multiple cloud platforms.

| Name | Supported Cloud | Provisioning Tool | K8s version | Complexity & Notes |
|---|---|---|---|---|
| Typhoon | AWS, Azure, bare metal, Digital Ocean, Google Cloud | Terraform | v1.13.4 | Terraform knowledge needed |
| Kubespray | AWS, Azure, bare metal (Packet), OpenStack, Oracle Cloud Infrastructure (experimental), vSphere | Ansible, Vagrant | v1.13.4 | Ansible or Vagrant knowledge needed |
| Kops | AWS, Google Cloud (beta), vSphere (alpha), other platforms planned | No standard provisioning tool: own binary (like kubectl); export to Terraform possible | v1.11.x | Very mature tool; easy setup (few steps); no bare-metal support; no Ansible/Terraform knowledge needed |
| Kubeify | AWS, Azure, OpenStack | Terraform | v1.10.12 | Terraform knowledge needed; small project (only 12 contributors on GitHub) |

What do we use now?

As so often, there are hundreds of ways to install a cluster, so this is only a short overview. In the references, you can find useful links if you want to dive deeper.

Conclusion

My personal advice is to use the tools you know best. If you have never used any of these tools, I would recommend trying Ansible in combination with Terraform first. This rock-solid combination is used by many people. Ansible’s learning curve is gentle, and there are many good ready-made playbooks that can serve as examples. The documentation of both Terraform and Ansible is very helpful. Although I had no previous experience with Terraform and very little AWS knowledge, I was able to complete the excursion within one day.

Some topics remain open as research questions for this blog entry, e.g. which IaC/configuration management tool is the best. Are there noticeable differences in performance, security, features, etc.? Regarding Kubernetes installations in the cloud, you can ask yourself similar questions. There is no such thing as a “golden” installation; it always depends on what you want and which requirements the project sets. In the beginning, a managed Kubernetes solution like EKS from AWS is probably easier than a self-installed one. Once you have gained experience or want to change the Kubernetes configuration flexibly, installing and maintaining your own cluster will soon be worth it. Once again, the ecosystem around Kubernetes and the cloud is very chaotic and keeps growing. If you want a truly “super ultra large scale” approach with multiple clusters, Gardener (https://gardener.cloud) is a very promising project; its authors call it a “Cluster as a Service” solution. This presentation might also be worth a look: https://de.slideshare.net/QAware/kubernetes-clusters-as-a-service-with-gardener

References:

- https://www.pexels.com/de-de/foto/bart-boot-chillen-draussen-1121797/

- https://wilsonmar.github.io/terraform/

- https://slideshare.net/DevOpsMeetupBern/infrastructure-as-code-with-terraform-and-ansible

- https://searchitoperations.techtarget.com/definition/Infrastructure-as-Code-IAC

- https://searchitoperations.techtarget.com/definition/configuration-management-CM

- https://reddit.com/r/devops/comments/9a0717/infrastructureascode_vs_configurationascode/

- https://reddit.com/r/devops/comments/8d0bo8/recommended_way_for_deploying_kubernetes_on_aws/

- https://medium.com/@mglover/deploying-to-aws-with-terraform-and-ansible-81cccd4c563e

- https://kubernetes.io/docs/setup/pick-right-solution/

- https://ibm.com/blogs/bluemix/2018/11/chef-ansible-puppet-terraform/

- https://github.com/hashicorp/terraform/issues/16656

- https://github.com/dzeban/c10k/tree/master/infrastructure/roles

- https://github.com/ansible/ansible/issues/37640

- https://gist.github.com/rbq/886587980894e98b23d0eee2a1d84933

- https://codementor.io/mamytianarakotomalala/how-to-deploy-docker-container-with-ansible-on-debian-8-mavm48kw0

- https://askubuntu.com/questions/1028423/cant-upgrade-to-ubuntu-18-04-failed-to-fetch-http-np-archive-ubuntu-com-ubun/1028436

- https://andrewaadland.me/2018/10/14/installing-newer-versions-of-docker-in-ubuntu-18-04/

- http://inanzzz.com/index.php/post/6138/setting-up-a-nginx-docker-container-on-remote-server-with-ansible
