Tag: Microservices

- Cloud Technologies, Design Patterns, Secure Systems, System Architecture, System Designs, System Engineering

High Availability and Reliability in Cloud Computing: Ensuring Seamless Operation Despite the Threat of Black Swan Events

Introduction: Nowadays, cloud computing has become the backbone of many businesses, offering unparalleled flexibility, scalability and cost-effectiveness. According to O’Reilly’s Cloud Adoption report from 2021, more than 90% of organizations rely on the cloud to run their critical applications and services [1]. High availability and reliability of cloud computing systems have never been more important, as…

Is the Future Serverless?

Overview: The “cloud” is a term that has gained immensely in importance in recent years. It is frequently used to provide services. Over time, various architectures have emerged that are used in the cloud and that take different approaches to handling the developers' code and the…

Microservices – any good?

As software solutions continue to evolve and grow in size and complexity, the effort required to manage, maintain and update them increases. To address this issue, a modular and manageable approach to software development is required. Microservices architecture provides a solution by breaking down applications into smaller, independent services that can be managed and deployed individually.…

Games from the Cloud: Where Are We and Where Is the Journey Heading?

What exactly is cloud gaming? Cloud gaming can be compared to remote desktops, cloud computing and video-on-demand services. In essence, cloud gaming involves streaming video games from the cloud to the end customer. The client captures its user inputs (e.g. mouse, keyboard, controller) and transmits them to the server, while the server in turn handles the computation of the entire game world as well as…

Security Strategies and Best Practices for Microservices Architecture

Microservices architectures seem to be the new trend in application development. However, one should keep in mind that they are closely tied to a specific environment: companies want to develop faster and faster while resources are becoming more limited, so they now want to use multiple languages and approaches.…

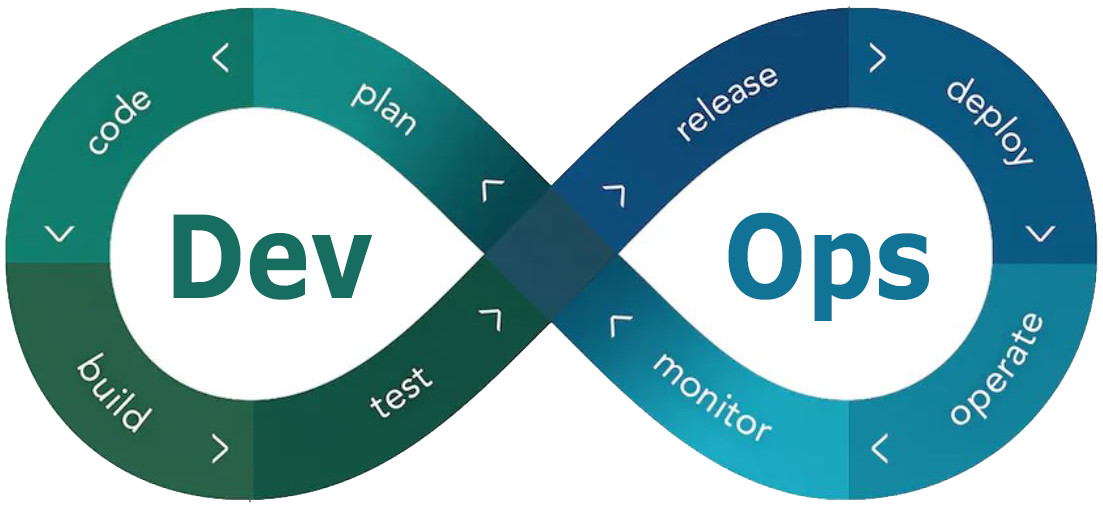

Getting Started with your first Software Project using DevOps

This post is a getting-started guide for creating a DevOps-enabled, web-based software project.

Kubernetes: from Zero to Hero with Kompose, Minikube, k3sup and Helm — Part 2: Hands-On

This is part two of our series on how we designed and implemented a scalable, highly available and fault-tolerant microservice-based Image Editor. This part describes how we went from a basic Docker Compose setup to running our application on our own »bare-metal« Kubernetes cluster.

Kubernetes: from Zero to Hero with Kompose, Minikube, k3sup and Helm — Part 1: Design

This is part one of our series on how we designed and implemented a scalable, highly-available and fault-tolerant microservice-based Image Editor. The series covers the various design choices we made and the difficulties we faced during design and development of our system. It shows how we set up the scaling infrastructure with Kubernetes and what…

Observability?! – Where do we go from here?

The last two years in software development and operations have been characterized by the emerging idea of “observability”. The need for a novel concept guiding the efforts to control our systems arose from the accelerating paradigm changes driven by the need to scale and cloud native technologies. In contrast, the monitoring landscape stagnated and failed…

Microservices – Legolizing Software Development V

We finish with a concluding review of the use of microservices in small projects and give an overview of our top stumbling blocks.