Tag: machine learning

Large Scale Deployment for Deep Learning Models with TensorFlow Serving

Introduction “How do you turn a trained model into a product that will bring value to your enterprise?” In recent years, serving has become a hot topic in machine learning. With the ongoing success of deep neural networks, there is a growing demand for solutions that address the increasing complexity of inference at scale. This…

Improved Vulnerability Detection using Deep Representation Learning

Today’s software is more vulnerable to cyber attacks than ever before. The number of recorded vulnerabilities has increased almost continuously since the early 90s. The advantage of Deep Learning algorithms is that they can learn vulnerability patterns on their own and achieve much better vulnerability detection performance. In this blog post, I will present a…

Federated Learning

The world is enriched daily with the latest and most sophisticated achievements of Artificial Intelligence (AI). But one challenge that all new technologies need to take seriously is training time. With deep neural networks and the computing power available today, it is finally possible to perform the most complex analyses without the need for pre-processing and…

About using Machine Learning to improve performance of Go programs

This blog post contains some thoughts on using Machine Learning (ML) to predict the sizes that arrays, slices, or maps are going to reach. The goal is to improve program performance by allocating the necessary memory in advance instead of reallocating repeatedly as new elements are appended.

Reproducibility in Machine Learning

The rise of Machine Learning has led to changes across all areas of computer science. From a very abstract point of view, heuristics are replaced by black-box machine-learning algorithms providing “better results”. But how do we actually quantify better results? ML-based solutions tend to focus more on absolute performance improvements (measured by metrics) instead of…

Observability?! – Where do we go from here?

The last two years in software development and operations have been characterized by the emerging idea of “observability”. The need for a novel concept guiding the efforts to control our systems arose from the accelerating paradigm changes driven by the need to scale and by cloud-native technologies. In contrast, the monitoring landscape stagnated and failed…

VVS-Delay – AI in the Cloud

Introduction Howdy, Geeks! Ever frustrated by public transportation around Stuttgart? Managed to get up early just to find out your train to university or work is delayed… again? Yeah, we all know that! We wondered if we could get around this issue by connecting our alarm clock to some algorithms. So we would never ever…

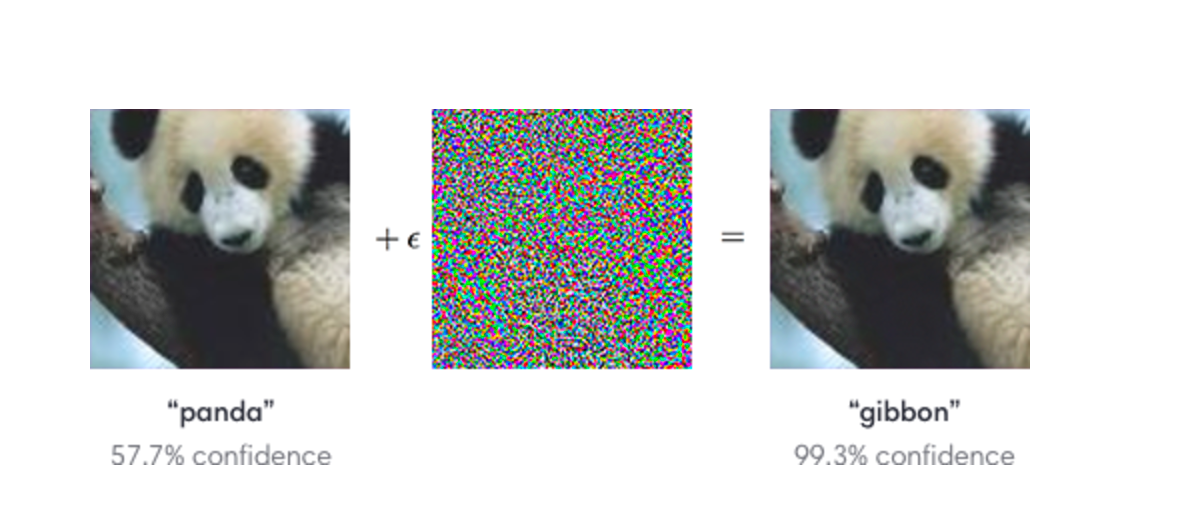

FOOLING THE INTELLIGENCE

Adversarial machine learning and its dangers The world is led by machines, and humans are subjected to the robots’ rule. Omniscient computer systems hold control of the world. The newest technology has outpaced human knowledge, while mankind is powerless in the face of the stronger, faster, better and almighty cyborgs. Such dystopian visions of…

Machine Learning in secure systems

Sadly, today’s security systems are often hacked and sensitive information gets stolen. To protect a company against cyber-attacks, security experts define a “rule set” to detect and prevent any attack. These “analyst-driven solutions” are built by human experts using their domain knowledge. This knowledge is based on experience and built for attacks of the…