A blog about current topics in computer science and media, maintained by students of the Hochschule der Medien Stuttgart (Stuttgart Media University).

Recent Posts

Why system monitoring is important and how we approached it

Introduction: Imagine building a service that aims to generate as much user traffic as possible in order to be as profitable as possible. The infrastructure of your service usually includes some kind of backend, a server and other frameworks. One day, something stops working as it should and you can’t figure out why. You…

Combining zerolog & Loki

Publish zerolog events to Loki in just a few lines of code.

- General, Student Projects, System Architecture, System Designs, System Engineering, Teaching and Learning

Using Keycloak as IAM for our hosting provider service

Discover how Keycloak can revolutionize your IAM strategy and propel your projects to new heights of security and efficiency.

CTF Infrastructure as a Proof of Concept in the Microsoft Azure Cloud

Introduction: Running your own Capture-The-Flag (CTF) platform brings its own particular challenges. These include extensive user management, deploying and securely hosting intentionally vulnerable systems, and offering the simplest possible way to integrate playable systems from external sources. For example, you might want to give your community the opportunity to develop its own scenarios, which are subsequently…

Cybersecurity Breaches

Security risks in the digital age: an analysis of current cyberattacks and their implications. Data breaches are a growing threat, with hackers and cybercriminals worldwide searching for ways to steal sensitive information. The motives behind such attacks include financial gain, prestige and espionage. According to the 2023 Verizon Report, technical vulnerabilities make up only about 8% of all attack methods…

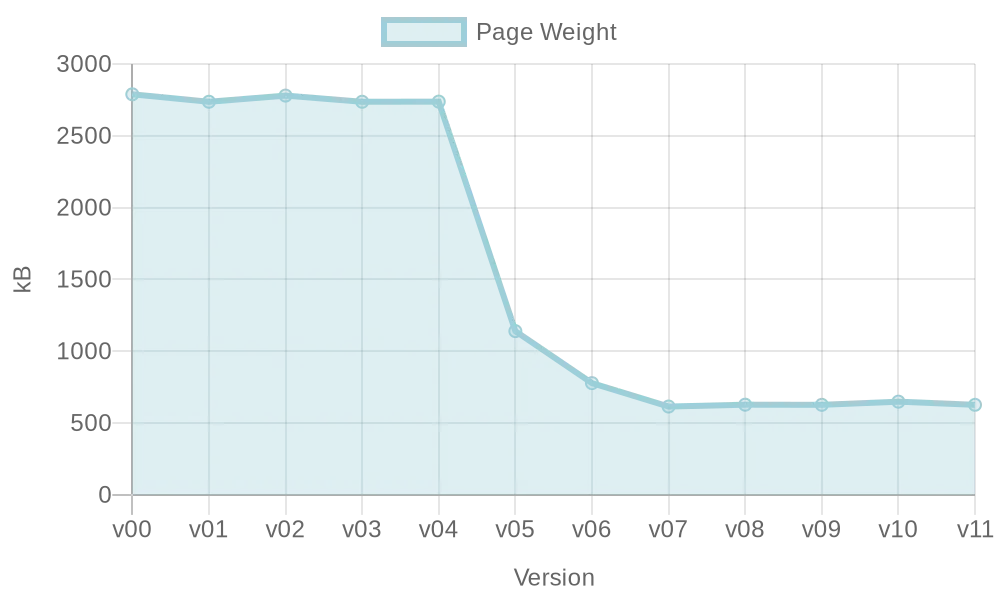

Optimizing a VueJS Website: Reducing Load Times

A website's performance matters to both users and search engines, but it is not always apparent during development. This term paper examines ways to optimize web performance automatically during development with VueJS.

How to easily automate mikrotik-cert with a SCEP server

Certificates are everywhere. But they also expire. Who would want to issue new certificates for every device in the company infrastructure, every year? Not only is it time-consuming, it is also error-prone: you could forget a device, or forget how the process works. It is therefore worth investing time in automating this process.…

Developing an Edge Application with Cloudflare

Introduction: English plays a major role in my job and everyday life, yet I still make grammatical mistakes. To improve my English, I developed a small website for practicing writing English sentences. The user is presented with a sentence, which must then be translated into the specified language. Parts of the sentence that…

Automate PDF – A Cloud-Driven Workflow Tool with Cloud Functions and Kubernetes

GitLab: You can find the project at https://gitlab.mi.hdm-stuttgart.de/fb089/automatecloud. Wiki: All the information is in our GitLab wiki (https://gitlab.mi.hdm-stuttgart.de/fb089/automatecloud/-/wikis/AutomateCloud). You can even try it yourself, so feel free! Short description: Automate PDF is a workflow automation tool created in the course “Software Development for Cloud Computing”. The application provides a simple graph editor…

Buzzwords

AI Amazon Web Services architecture artificial intelligence Automation AWS AWS Lambda Ci-Pipeline CI/CD Cloud Cloud-Computing Containers Continuous Integration data protection deep learning DevOps distributed systems Docker docker compose Games Git gitlab Gitlab CI Google Cloud ibm IBM Bluemix Jenkins Kubernetes Linux loadbalancing machine learning Microservices Monitoring Node.js privacy Python scaling secure systems security serverless social media Test-Driven Development ULS ultra large scale systems Web Performance